Virtualization via Containers

PUBLISHED IN

Continuous Deployment of Capability

The first blog entry in this series introduced the basic concepts of multicore processing and virtualization, highlighted their benefits, and outlined the challenges these technologies present. The second post addressed multicore processing, whereas the third post concentrated on virtualization via virtual machines. In this fourth post in the series, I define virtualization via containers, list its current trends, and examine its pros and cons, including its safety and security ramifications.

Definitions

Virtualization is a collection of software technologies that enable software applications to run on virtual hardware (virtualization via virtual machines and hypervisors) or virtual operating systems (virtualization via containers). Note that virtualization via containers is also known as containerization.

A container is a virtual runtime environment that runs on top of a single operating system (OS) kernel and emulates an operating system rather than the underlying hardware.

A container engine is a managed environment for deploying containerized applications. The container engine allocates cores and memory to containers, enforces spatial isolation and security, and provides scalability by enabling the addition of containers.
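To make the engine's resource bookkeeping concrete, here is a minimal sketch in Python. It is a toy model, not the API of any real container engine: the class `ToyContainerEngine`, its methods, and the resource figures are all hypothetical, and real engines enforce limits through the operating system kernel rather than through simple counters.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    cores: int       # number of cores reserved for this container
    memory_mb: int   # memory reserved for this container, in MiB


@dataclass
class ToyContainerEngine:
    """Hypothetical model of an engine's resource bookkeeping (not a real API)."""
    total_cores: int
    total_memory_mb: int
    containers: dict = field(default_factory=dict)

    def free_cores(self) -> int:
        return self.total_cores - sum(c.cores for c in self.containers.values())

    def free_memory_mb(self) -> int:
        return self.total_memory_mb - sum(c.memory_mb for c in self.containers.values())

    def start(self, name: str, cores: int, memory_mb: int) -> Container:
        """Admit a container only if its reservation fits in what is left."""
        if name in self.containers:
            raise ValueError(f"container {name!r} already exists")
        if cores > self.free_cores() or memory_mb > self.free_memory_mb():
            raise RuntimeError(f"not enough free resources for {name!r}")
        container = Container(name, cores, memory_mb)
        self.containers[name] = container
        return container

    def stop(self, name: str) -> None:
        """Remove a container and release its reservation."""
        del self.containers[name]


# Hypothetical host with 4 cores and 8 GiB of memory.
engine = ToyContainerEngine(total_cores=4, total_memory_mb=8192)
engine.start("web", cores=2, memory_mb=2048)
engine.start("db", cores=1, memory_mb=4096)
print(engine.free_cores(), engine.free_memory_mb())  # prints: 1 2048
```

Adding a container is a single `start` call, which is the sense in which the engine supports scaling; a request that no longer fits raises an error instead of silently overcommitting the host.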

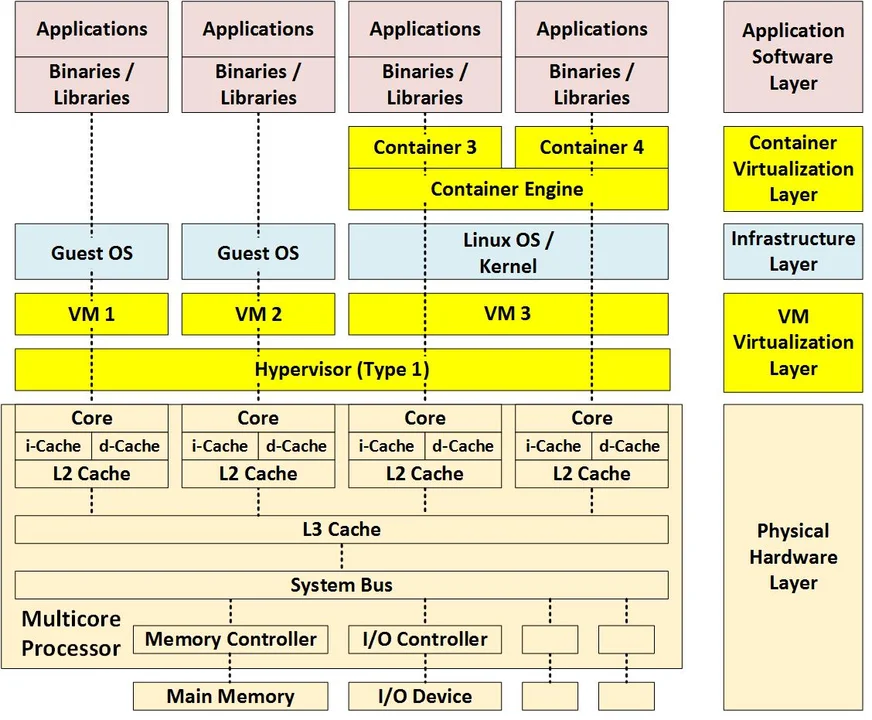

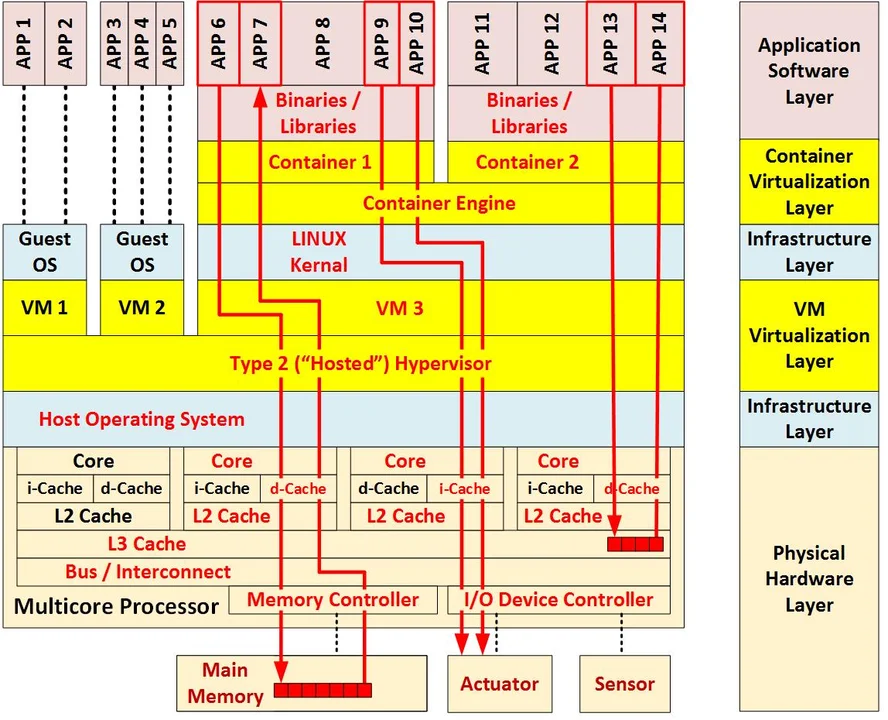

A hybrid container architecture is an architecture combining virtualization by both virtual machines and containers, i.e., the container engine and associated containers execute on top of a virtual machine. Use of a hybrid container architecture is also known as hybrid containerization.

The next four figures illustrate the definitions given above.

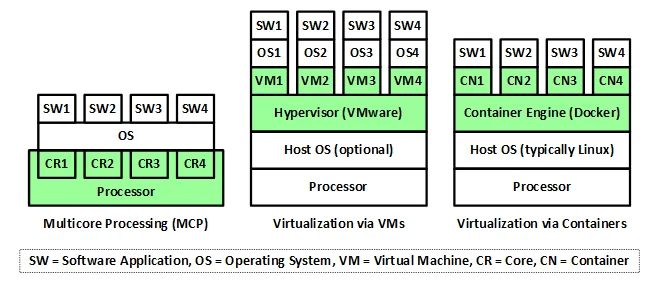

The following figure provides a high-level abstraction of the three technologies addressed in this series of blog posts, highlighting their differences. Note that VMware and Docker are merely examples of virtualization vendors; other vendors exist.

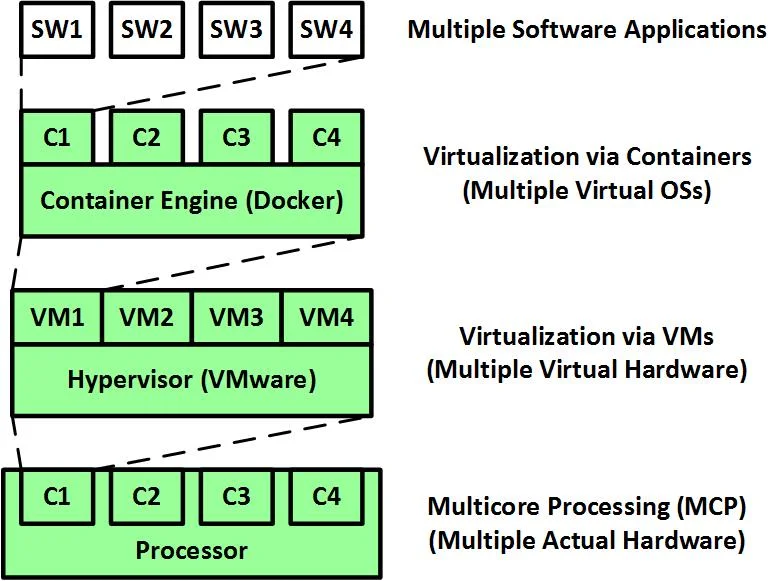

As shown in the following figure, these three technologies exist at three different layers in an architecture. Note that virtual machines emulate hardware, while containers emulate operating systems.

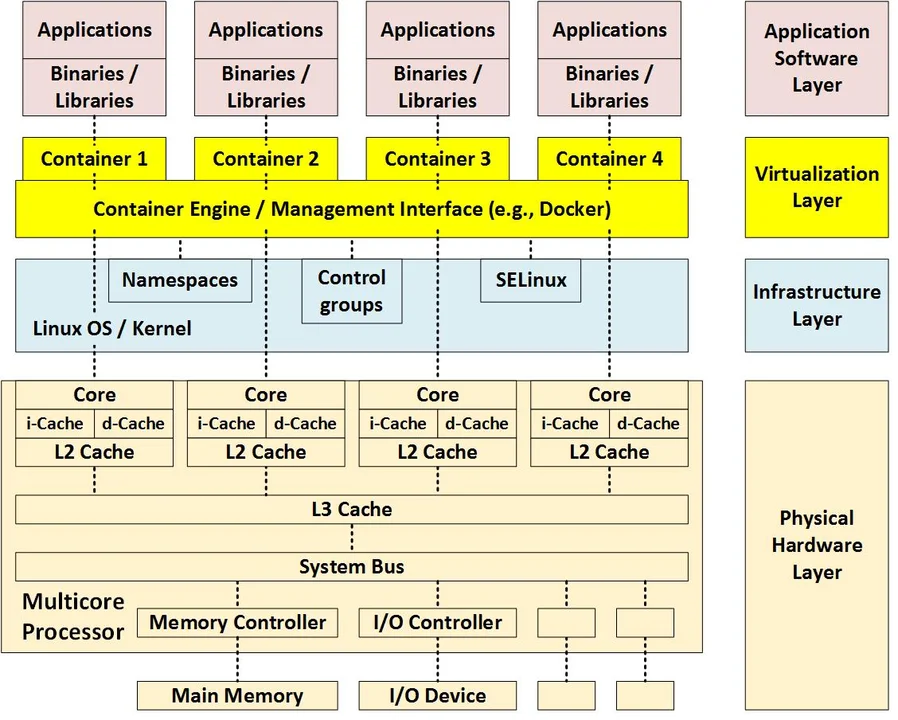

The following figure provides a more detailed illustration of virtualization via containers. Note that the virtualization layer (containers and container engine) sits on top of the infrastructure layer and depends on certain parts of the operating system/kernel.

The following figure shows a hybrid container architecture that uses virtualization by both virtual machines and containers.
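As a rough textual stand-in for the layering described above, the sketch below lists the layers of a container-only stack and a hybrid stack from the bottom up. It is not a reproduction of the figures: the layer names are illustrative labels, and the hybrid stack assumes a type-1 hypervisor running directly on the hardware.

```python
# Layer stacks, listed from the bottom (hardware) to the top (application).
# The layer names are illustrative labels, not taken from any product.
CONTAINER_STACK = [
    "hardware",
    "host OS kernel",
    "container engine",
    "container",
    "application",
]

# Assumes a type-1 hypervisor; a type-2 hypervisor would add a host OS below it.
HYBRID_STACK = [
    "hardware",
    "hypervisor",
    "virtual machine",
    "guest OS kernel",
    "container engine",
    "container",
    "application",
]


def layers_below(stack, layer):
    """Return the layers that `layer` depends on, bottom first."""
    return stack[: stack.index(layer)]


def added_by_hybrid(plain, hybrid):
    """Return the layers that appear only in the hybrid stack."""
    return [layer for layer in hybrid if layer not in plain]


print(layers_below(HYBRID_STACK, "container engine"))
print(added_by_hybrid(CONTAINER_STACK, HYBRID_STACK))
```

The second call makes the cost of hybrid containerization visible: every container now depends on a hypervisor, a virtual machine, and a guest kernel in addition to the container engine.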

Current Trends in Virtualization via Containers

Containers are becoming more widely used because they provide many of the isolation benefits of VMs without as much time and space overhead.

Although containers are typically hosted on some version of Linux, they are also now hosted on other operating systems, such as Windows and Solaris.

Pros of Virtualization via Containers

The following advantages have led to the widespread use of virtualization via containers:

- Hardware costs. Virtualization via containers decreases hardware costs by enabling consolidation (i.e., the allocation of multiple applications to the same hardware improves hardware utilization). It enables concurrent software to take advantage of the true concurrency provided by a multicore hardware architecture. In addition, it enables system architects to replace several lightly-loaded machines with fewer, more heavily-loaded machines to minimize SWAP-C (size, weight, power, and cooling), free up hardware for new functionality, support load balancing, and support cloud computing, server farms, and mobile computing.

- Reliability and robustness. The modularity and isolation provided by containers improve reliability, robustness, and operational availability by localizing the impact of defects to a single container and enabling software failover and recovery.

- Scalability. A single container engine can efficiently manage large numbers of containers, enabling additional containers to be created as needed.

- Spatial isolation. Containers support lightweight spatial isolation by providing each container with its own resources (e.g., central processing unit, memory, and network access) and container-specific namespaces.

- Storage. Compared with virtual machines, containers are lightweight with regard to storage size. The applications within containers share both binaries and libraries.

- Performance. Compared with virtual machines, containers increase performance (throughput) because they do not emulate the underlying hardware. Note that this advantage is lost if containers are hosted on virtual machines (i.e., when using a hybrid container architecture).

- Real-time applications. Containers provide more consistent timing than virtual machines, although this advantage is lost when using a hybrid container architecture.

- Continuous integration. Containers support Agile and continuous development processes by enabling the integration of increments of container-hosted functionality.

- Portability. Containers support portability from development to production environments, especially for cloud-based applications.

- Safety. Safety is improved by localizing the impact of faults and failures to individual containers.

- Security. The modular architecture provided by containers increases the complexity and difficulty of attacks. Spatial isolation largely limits the impact of malware to a single container. A container that is compromised can be terminated and replaced with a new container that is booted from a known clean image, which enables a rapid system restore or software reload following a cybersecurity compromise. Finally, security software and rules implemented at the container engine level can apply to all of its containers.
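The consolidation argument under Hardware costs can be illustrated with a small packing exercise. The sketch below uses first-fit decreasing, a common bin-packing heuristic that I chose for illustration; the load figures and the 80% capacity ceiling are hypothetical, and a real consolidation study would also need to consider memory, I/O, and the interference issues discussed later in this post.

```python
def consolidate(loads, capacity=0.8):
    """Pack per-application loads onto hosts with first-fit decreasing.

    loads: fraction of one host's capacity each application needs (0..1).
    capacity: highest total load allowed on any one host (assumed headroom).
    Returns a list of hosts, each a list of the loads placed on it.
    """
    hosts = []
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError(f"load {load} exceeds host capacity {capacity}")
        for host in hosts:
            # Small tolerance so floating-point rounding does not reject an exact fit.
            if sum(host) + load <= capacity + 1e-9:
                host.append(load)
                break
        else:
            # No existing host has room, so bring another host into service.
            hosts.append([load])
    return hosts


# Hypothetical loads: six lightly loaded machines, one application each.
loads = [0.30, 0.25, 0.20, 0.15, 0.10, 0.10]
hosts = consolidate(loads)
print(len(loads), "machines ->", len(hosts), "hosts")  # prints: 6 machines -> 2 hosts
```

Under these assumed numbers, six lightly loaded machines collapse onto two more heavily loaded hosts, which is the kind of reduction that drives the SWAP-C savings.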

Cons of Virtualization via Containers

Although there are many advantages to moving to virtualization via containers, architects must address challenges and associated risks in the following five areas:

1. Shared Resources. Applications within containers share many resources: (1) container-specific resources (the container, the container engine, and the OS kernel), (2) VM virtualization resources (the virtual machine, the hypervisor, and the host operating system if using a type-2 hypervisor), and (3) multicore processing resources, both (a) processor-internal resources (L3 cache, system bus, memory controller, I/O controllers, and interconnects) and (b) processor-external resources (main memory, I/O devices, and networks). Such shared resources imply that (1) single points of failure exist, (2) two applications running in the same container can interfere with each other, and (3) software running in one container can impact software running in another container (i.e., interference can violate spatial and temporal isolation).

2. Interference Analysis. Shared resources imply interference. Virtualization via containers increases the difficulty of analyzing temporal interference (e.g., meeting timing deadlines) and tends to make the resulting timing estimates overly conservative. Interference analysis becomes more complex as the number of containers increases and as virtualization via containers is combined with virtualization via VMs and multicore processing. The number of interference paths increases rapidly as the number of containers increases. The resulting large number of interference paths typically makes the exhaustive analysis of all such paths impossible. This, in turn, forces the architect to analyze only a relatively small, representative selection of paths.
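To get a feel for how quickly interference paths multiply, consider a deliberately simple counting model. It is my own assumption for illustration, not a definition from this series: a path is a triple of a source container, a shared resource, and a victim container, and every container is assumed to use every shared resource.

```python
from itertools import permutations


def interference_paths(containers, shared_resources):
    """Enumerate (source, resource, victim) triples under the simple model above."""
    return [
        (source, resource, victim)
        for source, victim in permutations(containers, 2)
        for resource in shared_resources
    ]


# Resource names borrowed from the Shared Resources item; the list is not exhaustive.
shared = ["OS kernel", "L3 cache", "memory controller", "main memory"]

for n in (2, 4, 8, 16):
    containers = [f"container-{i}" for i in range(n)]
    paths = interference_paths(containers, shared)
    # Closed form for this model: n * (n - 1) * len(shared)
    print(n, len(paths))
# prints:
# 2 8
# 4 48
# 8 224
# 16 960
```

Even this single-hop model grows quadratically with the number of containers. Paths that pass through several shared resources in sequence, or that cross VM and multicore layers, would grow faster still, which is why exhaustive analysis quickly becomes impractical.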

The following figure shows three example interference paths in which the components with red labels are shared resources:

3. Safety. Although containers provide significant support for isolation, the use of containers does not guarantee isolation. Temporal interference may cause delays that cause hard real-time deadlines to be missed. Spatial interference can cause memory clashes. Traditional safety accreditation and certification processes and policies do not take virtualization into account, whether by VMs or containers, making safety (re)accreditation and (re)certification difficult.

4. Security. Containers are not secure by default and require significant work to make them secure. One must ensure that (1) no data is stored inside containers, (2) container processes are forced to write to container-specific file systems, (3) the container's network namespace is connected to a specific, private intranet, and (4) the privileges of container services are minimized (e.g., non-root if possible). As with safety, traditional security accreditation and certification processes and policies do not take virtualization into account, making security (re)accreditation and (re)certification difficult.

5. Container Sprawl. Excessive containerization is relatively common and increases the amount of time and effort spent on container management.
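The four hardening measures listed under Security map fairly naturally onto options of the `docker run` command. The sketch below only builds the command line and does not execute it. The mapping is one possible reading of those measures rather than a complete hardening guide, and the image, network, and volume names are hypothetical. It also assumes that the named network already exists (for example, one created with `docker network create --internal`).

```python
import shlex


def hardened_run_command(image, network, volume, mount_point, uid=1000, gid=1000):
    """Build (but do not execute) a `docker run` command with hardening flags."""
    return [
        "docker", "run", "--detach",
        # (1) and (2): the root filesystem is read-only, so persistent data
        # can only be written to the named volume mounted for that purpose.
        "--read-only",
        "--mount", f"type=volume,source={volume},target={mount_point}",
        # Scratch space that disappears with the container.
        "--tmpfs", "/tmp",
        # (3): attach the container only to the given user-defined network.
        "--network", network,
        # (4): run as a non-root user and drop privileges.
        "--user", f"{uid}:{gid}",
        "--cap-drop", "ALL",
        "--security-opt", "no-new-privileges",
        image,
    ]


# Hypothetical names, for illustration only.
cmd = hardened_run_command("example/app:1.0", "private-net", "app-data", "/data")
print(shlex.join(cmd))
```

Building the argument list in one place makes it easier to review the security-relevant options and to apply the same baseline to every container the team launches.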

Future Blog Entries

The fifth and final blog entry in this series will provide general recommendations regarding the use of multicore processing, virtualization via virtual machines, and virtualization via containers.

The SEI has published other work on virtualization via containers, including the posts Container Security in DevOps and DevOps and Docker.

Additional Resources

Read earlier posts in the multicore processing and virtualization series.
