Virtualization via Virtual Machines

This posting is the third in a series that focuses on multicore processing and virtualization, which are becoming ubiquitous in software development. The first blog entry in this series introduced the basic concepts of multicore processing and virtualization, highlighted their benefits, and outlined the challenges these technologies present. The second post addressed multicore processing. This third posting concentrates on virtualization via virtual machines (VMs). Below I define the relevant concepts underlying virtualization via VMs, list its current trends, and examine its pros and cons.

Definitions

Virtualization is a collection of software technologies that enable software applications to run on virtual hardware (virtualization via virtual machines and hypervisor) or virtual operating systems (virtualization via containers). A virtual machine (VM), also called a guest machine, is a software simulation of a hardware platform that provides a virtual operating environment for guest operating systems. A hypervisor, also called a virtual machine monitor (VMM), is a software program that runs on an actual host hardware platform and supervises the execution of the guest operating systems on the virtual machines.

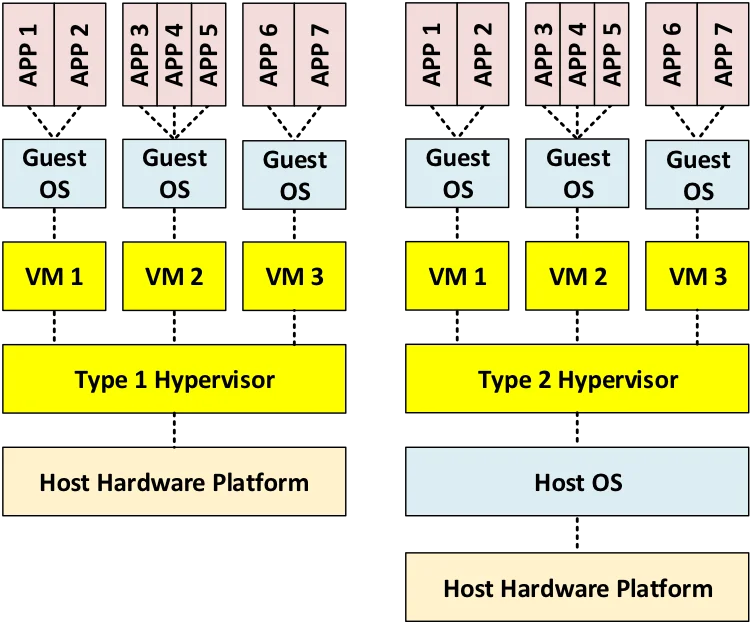

As the accompanying figure shows, there are two types of virtualization via VMs, based on the type of hypervisor used:

- A type 1 hypervisor, also called a native or bare metal hypervisor, is hosted directly on the underlying hardware.

- A type 2 hypervisor, also called a hosted hypervisor, is hosted on top of a host operating system.

VM Virtualization via a Type 1 Hypervisor

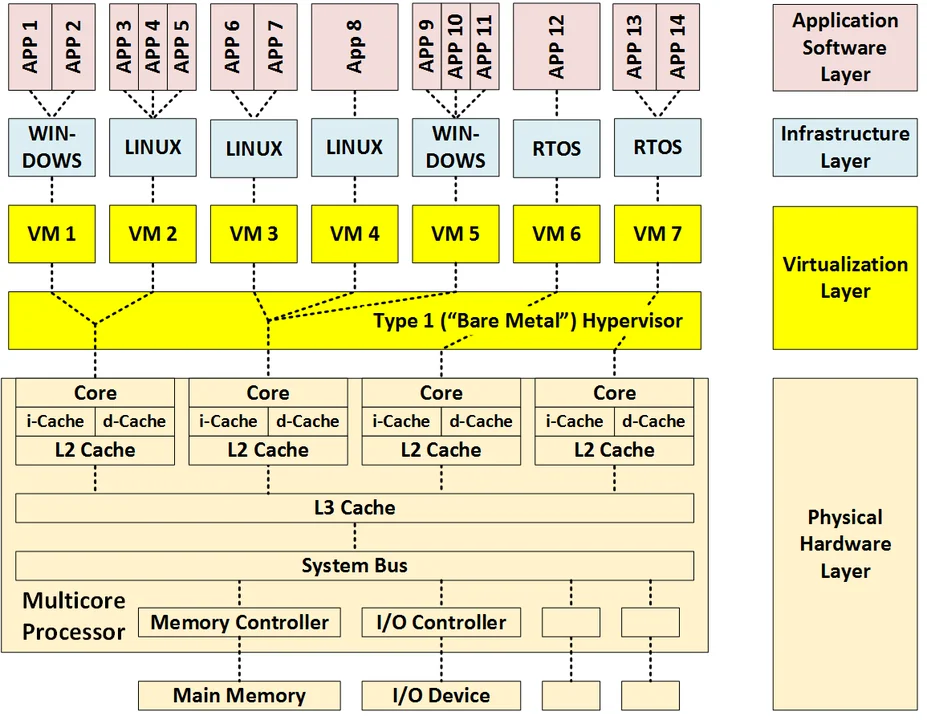

The accompanying notional diagram adds detail, showing how 14 software applications running on various guest operating systems have been deployed onto 7 virtual machines running on a type-1 hypervisor. This diagram also shows how these applications, operating systems, and virtual machines have been allocated to the four cores of a multicore processor. Some interesting aspects of this architecture include the following:

- This architecture adds a virtualization layer, consisting of two sublayers (VMs and type-1 hypervisor), to an architecture that was already made complex by having multiple cores.

- By providing simulated hardware environments, the VMs enable the use of different operating systems.

- Applications share VMs, the hypervisor, and cores, thereby creating single points of failure and possible interference paths. Interference can occur when the execution of one application running in one VM affects the execution of another application running in a second VM by violating either the spatial or temporal isolation of the VMs:

  - Spatial isolation ensures that software executing in different virtual machines cannot access the same physical hardware (e.g., memory locations such as caches and RAM).

  - Temporal isolation ensures that the execution of software on one VM does not impact the temporal behavior of software running on another VM.
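To make the notion of interference paths concrete, the following minimal Python sketch counts the pairs of applications that depend on at least one common resource. The deployment (application, VM, and core names) is a made-up example rather than the one in the diagram, and the model assumes that a single hypervisor sits beneath every VM.

```python
from itertools import combinations

# Hypothetical deployment: application -> (VM, core). Names are illustrative only.
DEPLOYMENT = {
    "app1": ("vm1", "core0"),
    "app2": ("vm1", "core0"),
    "app3": ("vm2", "core0"),
    "app4": ("vm3", "core1"),
}


def shared_resources(a, b, deployment=DEPLOYMENT):
    """Return the set of resources that applications a and b both depend on."""
    vm_a, core_a = deployment[a]
    vm_b, core_b = deployment[b]
    shared = {"hypervisor"}  # assumption: one hypervisor under all VMs
    if vm_a == vm_b:
        shared.add(vm_a)
    if core_a == core_b:
        shared.add(core_a)
    return shared


def interference_pairs(deployment=DEPLOYMENT):
    """Map each unordered pair of applications to the resources they share."""
    return {
        (a, b): shared_resources(a, b, deployment)
        for a, b in combinations(sorted(deployment), 2)
    }


if __name__ == "__main__":
    for pair, resources in interference_pairs().items():
        print(pair, sorted(resources))
```

Because every pair shares at least the hypervisor in this model, n applications yield n(n-1)/2 candidate pairs. For the 14 applications in the diagram that is already 91 pairs, before individual shared resources are even distinguished.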

VM Virtualization via a Type 2 Hypervisor

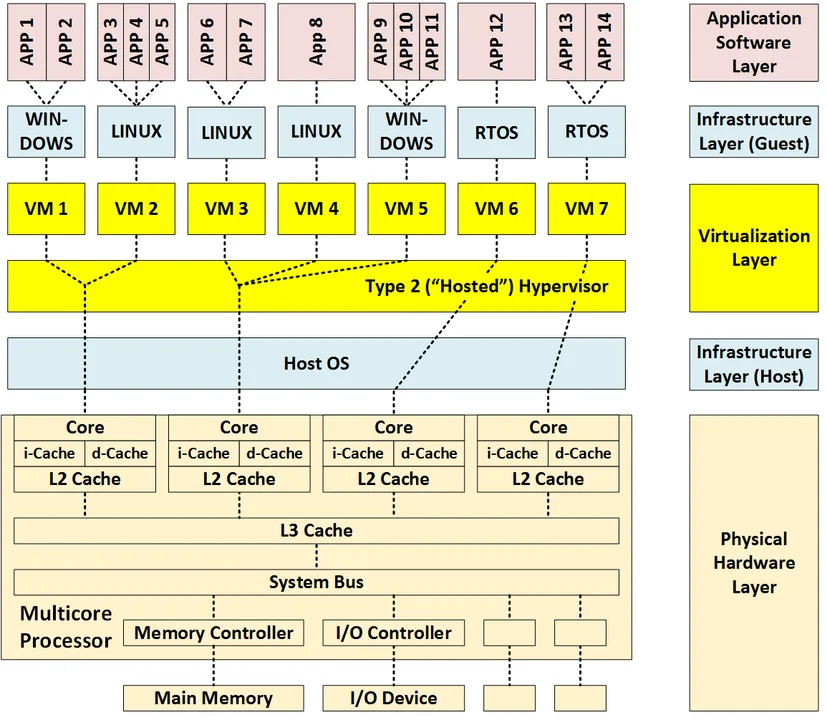

Similar to the previous diagram, the accompanying figure shows how a type-2 hypervisor architecture differs from a type-1 hypervisor architecture. In addition to the virtualization layer, it has two infrastructure layers: a host operating system layer and a guest operating system layer. While this approach is similar to a type-1 hypervisor architecture, a type-2 hypervisor architecture is even more complex and introduces an additional shared resource (the host operating system) that can act as a single point of failure and a source of interference between applications.
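The difference between the two architectures can be summarized as a pair of layer stacks. The Python sketch below is a deliberately simplified model: the layer names are labels chosen for illustration, and it assumes that everything beneath the VM layer is shared by all VMs on the host.

```python
# Layer stacks, listed bottom to top (simplified, illustrative labels).
TYPE1_STACK = ["hardware", "hypervisor", "virtual machine", "guest OS", "application"]
TYPE2_STACK = ["hardware", "host OS", "hypervisor", "virtual machine", "guest OS", "application"]


def shared_layers(stack):
    """Layers below the VM layer, which every VM on the host depends on."""
    return stack[: stack.index("virtual machine")]


def extra_shared_layers(stack_a, stack_b):
    """Shared layers that stack_b has and stack_a lacks."""
    base = shared_layers(stack_a)
    return [layer for layer in shared_layers(stack_b) if layer not in base]


if __name__ == "__main__":
    print("type 1 shared layers:", shared_layers(TYPE1_STACK))
    print("type 2 shared layers:", shared_layers(TYPE2_STACK))
    print("added by type 2:", extra_shared_layers(TYPE1_STACK, TYPE2_STACK))
```

In this model the only shared layer that the type-2 stack adds is the host OS, which is the additional single point of failure described above.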

Current Trends in Virtualization via Virtual Machines

Virtualization via VMs is becoming commonplace at the server level for IT applications, data centers, and cloud computing. Likewise, virtualization via VMs is increasingly used for storage virtualization (mass storage), network virtualization, and mobile devices (especially for testing on virtual mobile devices).

On the other hand, virtualization via VMs has significant limitations with regard to its time/space overhead and the unpredictable nature of the hypervisor's impact on scheduling. As a result, virtualization via VMs may be unsuitable for many types of real-time, safety-critical, and security-critical systems, such as automotive software, Internet of Things (IoT), and software for military combat systems.

Virtualization via VMs is being combined with virtualization via containers (the topic of the next post in this series). Where appropriate, VMs are also being replaced by containers, which are lighter-weight virtual runtime environments that run on top of a single OS kernel without emulating the underlying hardware. Thus, VMs emulate hardware, while containers emulate OSs.

Security is increasingly important as vulnerabilities in virtual machines and hypervisors, such as VM escapes, are discovered. For example, exploits have been discovered that enable attackers and malware to violate spatial isolation by escaping one VM and infecting another.

Pros of Virtualization via Virtual Machines

The following advantages have led to the widespread use of virtualization via VMs:

- Hardware isolation. Virtualization via VMs supports the reuse of software written for different, potentially older operating systems and hardware. It enables the upgrade of obsolete hardware, infrastructure, and software. It improves the portability of software to multiple hardware and OS platforms. In addition, it enables the creation of virtualized test beds, which is important when the relevant hardware is either unavailable or excessively expensive.

- Hardware costs. Virtualization via VMs decreases hardware costs by enabling consolidation (i.e., the allocation of multiple applications to the same hardware improves hardware utilization). It enables concurrent software to take advantage of the true concurrency provided by a multicore hardware architecture. In addition, it enables system architects to replace several lightly-loaded machines with fewer, more heavily-loaded machines to minimize SWAP-C (size, weight, power, and cooling), free up hardware for new functionality, support load balancing, and support cloud computing, server farms, and mobile computing.

- System management. The reduced hardware footprint also decreases the effort and associated costs of managing the hardware. Virtualization vendors provide commercial toolkits that optimize resource utilization and provide performance monitoring and centralized resource management.

- Performance. Virtualization via VMs has been optimized for general-purpose computing and maximizing throughput and average case response time (valuable for IT and cloud computing).

- Operational availability. Virtualization via VMs may improve operational availability by supporting failover and recovery, and by enabling dynamic resource management.

- Isolation. The hypervisor should greatly improve (but does not guarantee) spatial and temporal isolation of VMs. Note that spatial isolation means that different VMs are prevented from accessing the same physical memory locations (e.g., caches and RAM), whereas temporal isolation means that the execution of software on one VM does not impact the temporal behavior of software running on another VM.

- Reliability and robustness. The modularity and isolation provided by VMs improve reliability and robustness by localizing the impact of defects to a single VM and enabling software failover and recovery.

- Flexibility. By emulating hardware, virtualization via VMs enables multiple instances of multiple operating systems to run on the same processors.

- Scalability. A single hypervisor can efficiently manage large numbers of VMs and processors. Note that this scalability is purchased at the price of having to store multiple copies of operating systems and having an extra layer of executing software in the hypervisor.

- Safety. Safety is improved by localizing the impact of faults and failures to individual VMs.

- Security. The modular architecture provided by the VMs and the separation of host and guest operating systems increase the complexity and difficulty of attacks. A bare-metal type 1 hypervisor has a relatively small attack surface and is less subject to common OS exploits and malware. Spatial isolation largely limits the impact of malware to a single VM. A VM that is compromised can be terminated and replaced with a new VM that is booted from a known clean image, which enables a rapid system restore or software reload following a cybersecurity compromise. Finally, security software and rules implemented at the hypervisor level can apply to all of its VMs.

Security in virtualized IS enterprises has been demonstrated. For example, National Security Agency (NSA) Central Security Service (CSS) "Flask", a strong, flexible mandatory access control architecture, has been applied to the Xen hypervisor as the Xen Security Modules (XSM) framework. A variety of hypervisors can be efficiently implemented with Security-Enhanced Linux (SELinux).
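The consolidation benefit listed under hardware costs above can be illustrated with a small bin-packing sketch in Python. It uses first-fit decreasing, a common heuristic chosen here purely for illustration and not a method described in this post. The utilization figures and the 80 percent per-host ceiling are invented assumptions.

```python
def consolidate(loads, capacity=0.8):
    """Pack per-machine CPU loads (fractions of one host) onto hosts.

    Uses first-fit decreasing: place the largest loads first, each on the
    first host with room. Returns a list of hosts, each a list of loads.
    """
    hosts = []
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError(f"load {load} exceeds host capacity {capacity}")
        for host in hosts:
            # Small tolerance guards against floating-point rounding.
            if sum(host) + load <= capacity + 1e-9:
                host.append(load)
                break
        else:
            hosts.append([load])  # no existing host had room
    return hosts


if __name__ == "__main__":
    # Hypothetical average CPU utilization of eight lightly loaded machines.
    lightly_loaded = [0.10, 0.15, 0.20, 0.05, 0.30, 0.25, 0.10, 0.15]
    hosts = consolidate(lightly_loaded)
    print(f"{len(lightly_loaded)} machines -> {len(hosts)} hosts")
    for i, host in enumerate(hosts, start=1):
        print(f"host {i}: load {sum(host):.2f}")
```

The sketch considers CPU load only. A real consolidation plan would also have to account for RAM, mass storage, I/O, and the overhead of the virtualization layer itself.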

Cons of Virtualization via Virtual Machines

Although there are many advantages to moving to virtualization via VMs, architects must address challenges and associated risks in the following nine areas:

1. Hardware Resources. Virtualization via VMs often increases hardware resource needs because the VMs, the hypervisor, and guest operating systems require more processing power (for the processing performed by the virtualization layer), more RAM, and more mass storage to hold the associated images, such as software state and data. On the other hand, virtualization via VMs can decrease the need for hardware by enabling the replacement of multiple lightly loaded processors with a single, more heavily loaded processor. It can likewise replace many individual computing systems (e.g., desktop PCs with their own processors, disks, and network adapters) with a smaller (or more "concentrated") number of centralized machines that can be "auto-scaled" elastically. Put another way, whether virtualization increases hardware resources depends largely on the starting point of an organization's existing computing architecture.

2. Shared Resources. VMs share the hypervisor, the host OS, and the same shared resources as with multicore processors: processor-internal resources (L3 cache, system bus, memory controller, I/O controllers, and interconnects) as well as processor-external resources (main memory, I/O devices, and networks). These shared resources imply the existence of single points of failure, that two applications running on the same VM can interfere with each other, and that software running on one VM can impact software running on another VM (i.e., interference can violate spatial and temporal isolation).

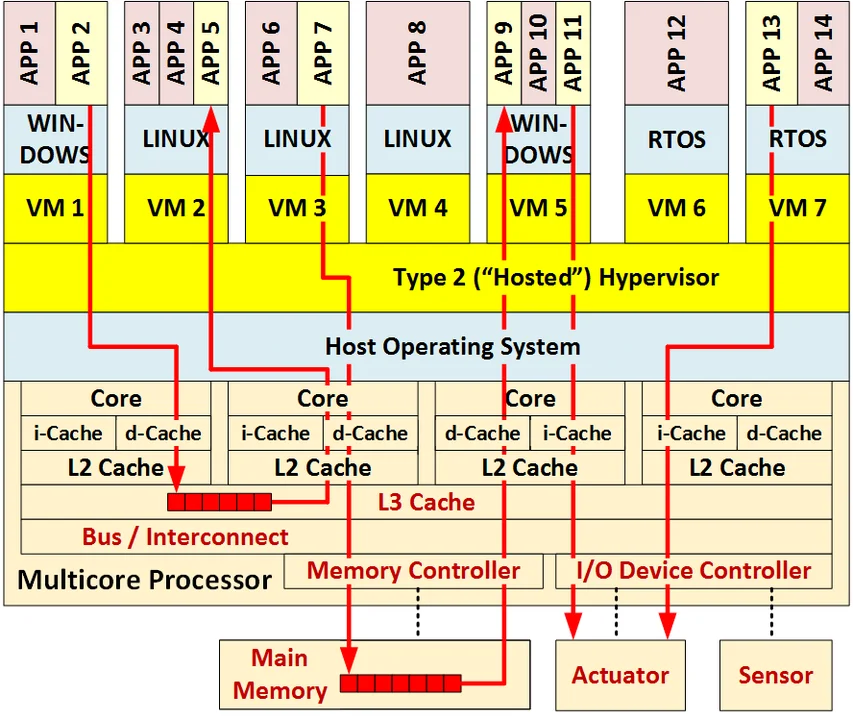

3. Interference. Interference occurs when software executing on one VM impacts the behavior of software executing on other VMs. This interference includes failures of both spatial isolation (due to shared memory access) and temporal isolation (due to interference delays and/or penalties). As is the case with multicore processors, the number of interference paths increases very rapidly with the number of VMs. Consequently, an exhaustive analysis of all interference paths is often impossible. The impracticality of exhaustive analysis necessitates the selection of representative interference paths when analyzing isolation. The accompanying diagram uses the color red to show three possible interference paths involving VMs and their hypervisor between pairs of applications involving six shared resources.

4. Analysis. The use of VMs and hypervisors increases the complexity of the analysis of temporal interference (e.g., meeting timing deadlines), making the analysis harder and/or less accurate. Many analysis techniques result in overly conservative timing estimates. Interference analysis becomes more complex as the number of VMs increases and as virtualization is combined with multicore processing, because the number of interference paths increases very rapidly with the number of VMs. Exhaustive analysis of all interference paths is typically impossible, which makes the selection of representative paths necessary.

5. Safety. Moving to a virtualized architecture based on VMs and hypervisors probably requires safety recertification. Interference between VMs can cause missed deadlines and excessive jitter, which is a measure of the variation in the amount of time taken to accept and complete a task. In turn, this can lead to hazards due to faults and to accidents due to failures. A comprehensive hazard analysis will typically require the use of appropriate real-time scheduling and timing analysis. Safety policies that rest on assumptions made obsolete by virtualization via VMs need to be updated. In particular, standard architectural approaches for ensuring reliability (such as redundant execution with voting) do not avoid single points of failure when the software is run on multiple VMs rather than multiple physical processors.

6. Security. Moving to a virtualized architecture based on VMs and hypervisors likely requires security recertification. Although the use of virtualization via VMs typically improves security, improved security is not guaranteed. The hypervisor itself is an additional attack vector that increases the overall attack surface. Security vulnerabilities can violate isolation, enabling sophisticated exploits to escape from one VM to another via the hypervisor.

7. Performance. In a virtualized environment, hardware resources are decoupled from software and are no longer dedicated, so response times may lag due to data latency and the processing performed by the virtualization layer. Virtualization via VMs is a source of unpredictability due to jitter, which, in turn, can cause failures to meet hard real-time deadlines. The use of VMs also increases cold start and restart times.

On the other hand, optimization schemes (such as PCI[1]-passthrough available under Xen, allowing a hardware resource to run as a native resource for a guest application) are available to reduce potential data latency for high bandwidth, low latency applications.

8. Quality. Because virtualization via VMs is a relatively new technology, hypervisors and VMs often have a higher defect density than mature operating systems. The complexity and added concurrency increase the number of test cases needed for integration and system testing.

9. Cost. Virtualization via VMs increases cost in two ways: (1) by increasing the architecture, testing, and deployment effort and (2) by increasing licensing costs if free-and-open-source software (FOSS) hypervisors and VMs are not used.
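The jitter mentioned under safety and performance above is defined as the variation in the time taken to accept and complete a task. As a minimal sketch, the Python below summarizes a list of measured response times for one task. The sample values and the 10 ms deadline are hypothetical, and peak-to-peak spread and standard deviation are only two of several common ways to quantify variation.

```python
from statistics import mean, pstdev


def jitter_report(response_times_ms, deadline_ms):
    """Summarize response-time variation and deadline misses for one task."""
    return {
        "mean_ms": mean(response_times_ms),
        # Peak-to-peak jitter: spread between slowest and fastest response.
        "peak_to_peak_ms": max(response_times_ms) - min(response_times_ms),
        # Population standard deviation of the measured response times.
        "stdev_ms": pstdev(response_times_ms),
        # Number of responses that exceeded the deadline.
        "missed_deadlines": sum(t > deadline_ms for t in response_times_ms),
    }


if __name__ == "__main__":
    # Hypothetical measurements (milliseconds) from a task running in a VM.
    samples = [4.0, 5.0, 4.0, 12.0, 5.0, 6.0]
    print(jitter_report(samples, deadline_ms=10.0))
```

With these made-up samples, the mean response time is comfortably under the deadline even though one response misses it. This is why a platform tuned for throughput and average-case response time can still be a poor fit for hard real-time deadlines.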

Future Blog Entries

The next blog entry in this series will define virtualization via containers, list current trends, and document the pros and cons of this approach. This posting will be followed by a final blog entry providing general recommendations regarding the use of the three technologies detailed in this series: multicore processing, virtualization via VMs, and virtualization via containers.

Additional Resources

Read all posts in the multicore processing and virtualization series.
