Release of SCAIFE System Version 1.0.0 Provides Full GUI-Based Static-Analysis Adjudication System with Meta-Alert Classification

The SEI Source Code Analysis Integrated Framework Environment (SCAIFE) is a modular architecture designed to enable a wide variety of tools, systems, and users to use artificial intelligence (AI) classifiers for static-analysis meta-alerts at relatively low cost and effort. SCAIFE uses automation to reduce the significant manual effort required to adjudicate meta-alerts that are produced by static-analysis tools. The architecture also enables

- low-effort integration for tools to incorporate mathematical formulas for meta-alert prioritization,

- data aggregation in one location to improve classification using a rich labeled dataset, and

- a modular capability to use a variety of classifiers and active learning (also known as adaptive heuristics).
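As a rough illustration of the first bullet, a prioritization formula can be as simple as a weighted product of meta-alert fields. The sketch below is hypothetical: the `MetaAlert` fields, the 1-to-3 severity scale, and the formula itself are assumptions chosen for illustration, not the SCAIFE API or any formula shipped with the system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MetaAlert:
    """Hypothetical meta-alert record; field names are illustrative only."""
    meta_alert_id: int
    confidence: float  # classifier-estimated probability of a true positive (0..1)
    severity: int      # assumed scale: 1 (low) to 3 (high)


def priority(meta_alert: MetaAlert, severity_weight: float = 1.0) -> float:
    """Illustrative formula: classifier confidence scaled by weighted severity."""
    return meta_alert.confidence * (severity_weight * meta_alert.severity)


def prioritize(meta_alerts: List[MetaAlert]) -> List[MetaAlert]:
    """Return meta-alerts ordered from highest to lowest priority score."""
    return sorted(meta_alerts, key=priority, reverse=True)
```

A user-defined formula like this lets an auditor work through the list from the top, spending manual effort first on the meta-alerts that score highest.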

We developed the SEI SCAIFE system to instantiate that architecture, as a research prototype. With the March 2020 release of SCAIFE System v 1.0.0, which I describe in this blog post, users for the first time could run the functionality required for static-analysis classification fully from the SCAIFE user-interface module's graphical user interface (GUI). Users can now create a project and classifier, run the classifier on the user's project, and then see the classifier-determined confidence values displayed for the meta-alerts list.

SCAIFE is designed so that a wide variety of static-analysis tools can integrate with the SCAIFE system using the SCAIFE application programming interface (API) definitions, one per SCAIFE module. Each SCAIFE system release includes the current SCAIFE API definitions, code that instantiates SCAIFE API function calls, and a multi-server SCAIFE system with all the code that we at the SEI developed for it. We distribute releases as a lightweight code tarball or on a virtual machine (VM), in either case using Docker containers for the servers. The release also includes extensive documentation of the system in an HTML manual.

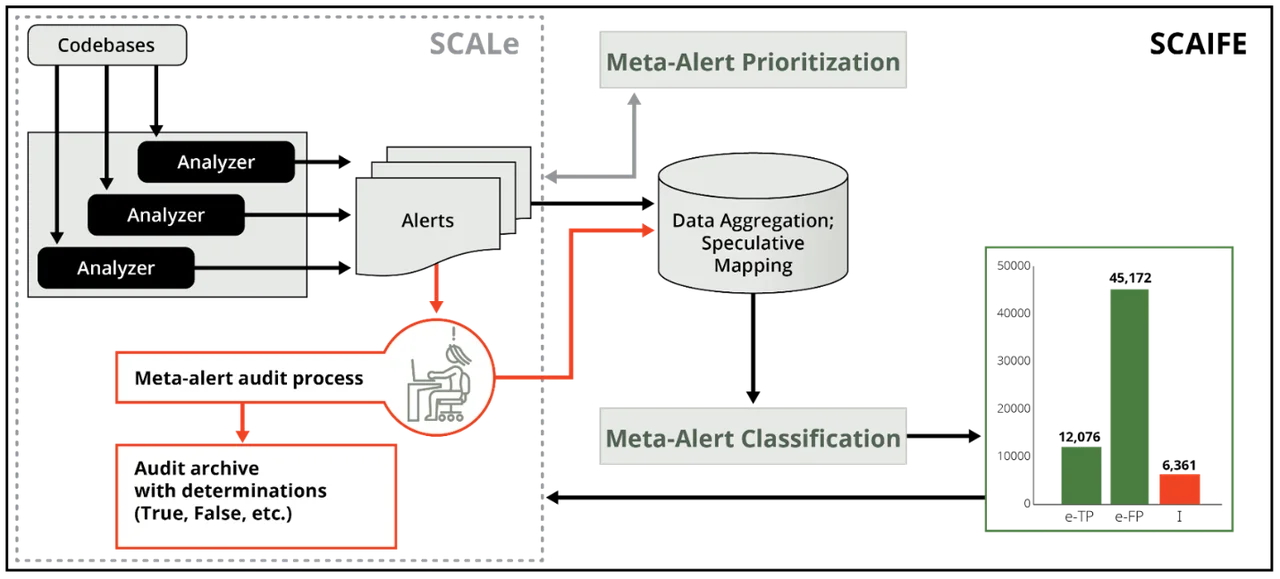

As part of my research project, we developed a version of the SEI CERT Division's SCALe (Source Code Analysis Laboratory) tool as a GUI front end for the SCAIFE system (shown at the top of Figure 1). Through SCALe, auditors can examine alerts from one or more static-analysis tools and the associated code, make determinations about a meta-alert (e.g., mark it as true or false), and export the project-audit information to a database or file. We released the full SCAIFE v 1.0.0 system to five DoD collaborator teams.
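To make the relationship between alerts and meta-alerts concrete, here is a minimal sketch. It assumes that a meta-alert groups the alerts, possibly from different tools, that report the same code-flaw condition at the same file and line. The `Alert` type, the tool names, and the grouping key are hypothetical illustrations, not SCALe's actual data model.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Alert(NamedTuple):
    """Hypothetical alert emitted by one static-analysis tool."""
    tool: str
    path: str
    line: int
    condition: str  # code-flaw condition, e.g., a CWE identifier


MetaAlertKey = Tuple[str, int, str]


def group_into_meta_alerts(alerts: List[Alert]) -> Dict[MetaAlertKey, List[Alert]]:
    """Group alerts that share a file, line, and condition (assumed key)."""
    groups: Dict[MetaAlertKey, List[Alert]] = defaultdict(list)
    for alert in alerts:
        groups[(alert.path, alert.line, alert.condition)].append(alert)
    return dict(groups)


alerts = [
    Alert("tool_a", "src/main.c", 42, "CWE-476"),
    Alert("tool_b", "src/main.c", 42, "CWE-476"),
    Alert("tool_a", "src/util.c", 7, "CWE-190"),
]
meta_alerts = group_into_meta_alerts(alerts)

# An auditor's determination is recorded once per meta-alert, not per alert.
verdicts = {("src/main.c", 42, "CWE-476"): "true"}
```

Under this assumption, the two tools that flag the same line for the same condition produce a single meta-alert, so the auditor adjudicates it once.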

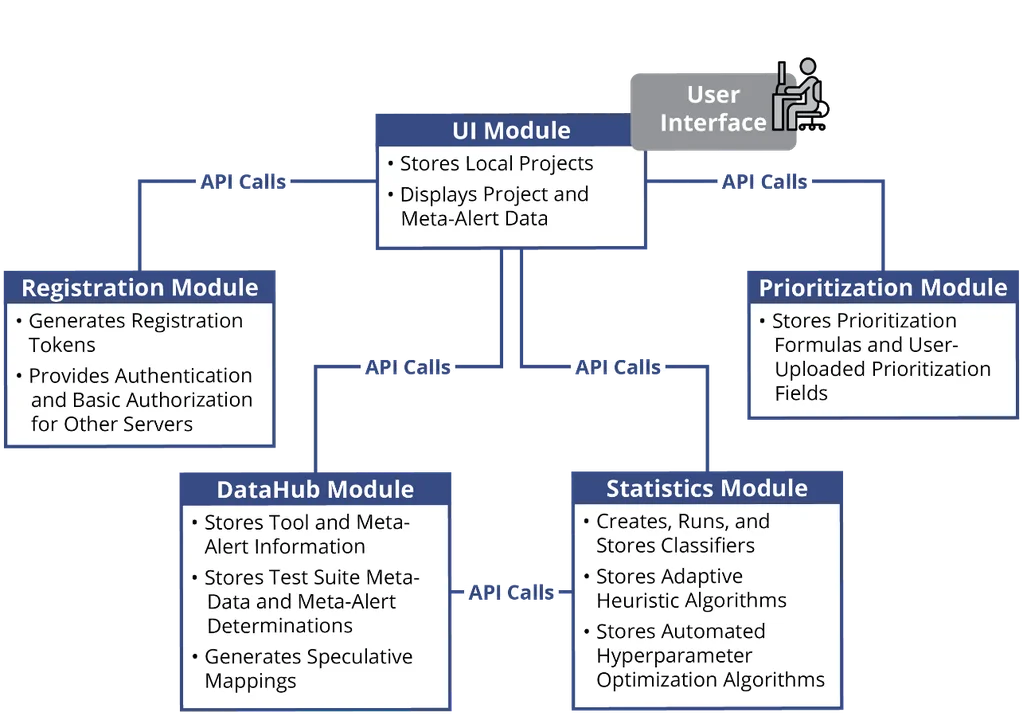

Although we cannot yet publicly publish the full SCAIFE system, we do publish some separable parts. We publish the version of the SCALe tool that is used as a SCAIFE user-interface (UI) module in the five-module SCAIFE system at https://github.com/cmu-sei/SCALe/tree/scaife-scale. That system can be used as a standalone static-analysis aggregator and adjudication tool, and can optionally be used as a pre-developed module in a different, new implementation of the SCAIFE architecture. The five SCAIFE API definitions are distributed with the full SCAIFE system, and we also distribute them publicly at https://github.com/cmu-sei/SCAIFE-API. Figure 1 shows the modules that comprise the SCAIFE system.

Figure 2 shows the flow of data between the SCALe GUI front end and the SCAIFE System v 1.0.0.

What Is New in SCAIFE System v 1.0.0?

The release includes SCAIFE API v 1.0.0 definitions (YAML, JSON, and HTML) for the DataHub, Statistics, Registration, Prioritization, and UI modules. The release contains much new code that instantiates this version of the SCAIFE API. This release also contains new code (and "how-to" information in the manual) that enables users to automatically regenerate the updated API definitions in YAML, JSON, and HTML after they modify the initial API .yaml files.

We encourage collaborators to enhance the API, SCALe code, SCAIFE code, and the manual, both to tailor them to their needs and to send the project useful feature enhancements from which the SEI and all collaborators can benefit. To facilitate such enhancements, this version of the SCAIFE system also includes software and how-to information for API updates, HTML/markdown SCAIFE/SCALe manual updates, and code updates.

SCAIFE version 1.0.0 includes a quick-start demo documented in the SCAIFE HTML manual. The encrypted VM file in which we released SCAIFE v 1.0.0 includes code and tool output for two SCAIFE demonstration projects. This release also contains bug fixes, performance enhancements, and many additional new features. In particular, new and modified content in this version of the API includes

- updated test suite and tool data structures

- new taxonomy, language, and secondary message models

- response messages with more data and precision (e.g., responses provide unique IDs of uploaded data, plus wider variety of error messages)

- new methods to edit previously uploaded data

The GitHub publication of the SCAIFE API for SCAIFE System v 1.0.0 (a publication separate from the SCAIFE system release) added a new README section, "How to get started with the API," and an explanation of access-token use in SCAIFE, both of which reviewers had requested.

Rationale Underlying the Release of SCAIFE System v 1.0.0

Before releasing SCAIFE v 1.0.0, we had released multiple beta versions of the SCAIFE system. Those beta versions (beta v1 in August 2019, beta v2 in September 2019, and beta v2.1 in October 2019) implemented many of the SCAIFE API-defined calls and much of the internal logic required for SCAIFE functionality. We demonstrated these beta versions with regression tests that we had implemented for automated continuous-integration testing during SCAIFE development. When we released the beta versions to our collaborators to demonstrate the functionality implemented so far, we gave them detailed manuals explaining how to run our set of automated tests and how to inspect the Mongo and SQLite3 databases directly to verify that they contained the expected data.
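The kind of manual database check described above can be sketched with Python's standard `sqlite3` module. The table name `meta_alerts`, its columns, and the in-memory stand-in database are hypothetical; the actual SCALe and SCAIFE schemas are documented in the release manual and are not reproduced here.

```python
import sqlite3


def count_rows(conn: sqlite3.Connection, table: str) -> int:
    """Return the number of rows in a table."""
    # Table names cannot be bound as SQL parameters, so validate before formatting.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


# Stand-in database so the sketch runs anywhere; a real check would open
# the project's SQLite3 file instead of ":memory:".
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE meta_alerts (id INTEGER PRIMARY KEY, verdict TEXT)")
conn.executemany(
    "INSERT INTO meta_alerts (verdict) VALUES (?)",
    [("true",), ("false",), ("unknown",)],
)
conn.commit()

print(count_rows(conn, "meta_alerts"))
```

Each such check had to be run by hand and compared against the value given in the manual, which is part of why this style of verification was error-prone.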

Although our collaborators were able to run these tests, this type of verification was not very user-friendly. Running the tests required many manual steps in the SCALe GUI combined with terminal commands, each of which had to be done exactly as specified. Our collaborators had varying levels of experience with database inspection, and a single mistake in the command-line sequence could throw off their entire set of results. Consequently, our collaborators asked for an all-GUI interface. Our goal was always to develop a research tool that code analysts (people adjudicating static-analysis meta-alerts) could interact with through a GUI. The beta releases did include some GUI features, and feedback from collaborators on early versions of those features helped us to improve the later versions. For example, as a result of feedback, we included more explanatory text, rearranged the placement of menu items, and added a "definitions" menu item.

Given the concerns expressed by our collaborators, SCAIFE version 1.0.0 represents a significant milestone: as of that release, users, testers, and collaborators have been able to work fully from the GUI to create a project, specify a classifier, run a classifier, and see the results of classification.

This method of releasing often to collaborators and getting their feedback is consistent with modern DevOps and Agile practices:

- soliciting public feedback on APIs and SCALe versions that have been publicly released

- soliciting collaborator feedback on APIs and full SCAIFE-system versions we have released to them

- using the feedback to improve the code and tool or system while developing it

- developing regression tests for use in automated testing while developing the code

- automating release builds. In this area, we have done a lot of work to containerize the SCAIFE system and to create automated VM scripts (Vagrantfiles), Dockerfiles, docker-compose files, and scripts that automatically add copyright markings and version numbers and remove proprietary data files. We have not yet completely automated our release builds, but we are currently working to finish that automation.

We design our code and APIs so they can be extended by others to tailor the tools for their use. We also invite them to contribute their code enhancements back to us by contacting us. In addition, we use these DevOps methods to help us during this project to maintain functionality in our complex systems.

Looking Ahead to Next Steps for SCAIFE

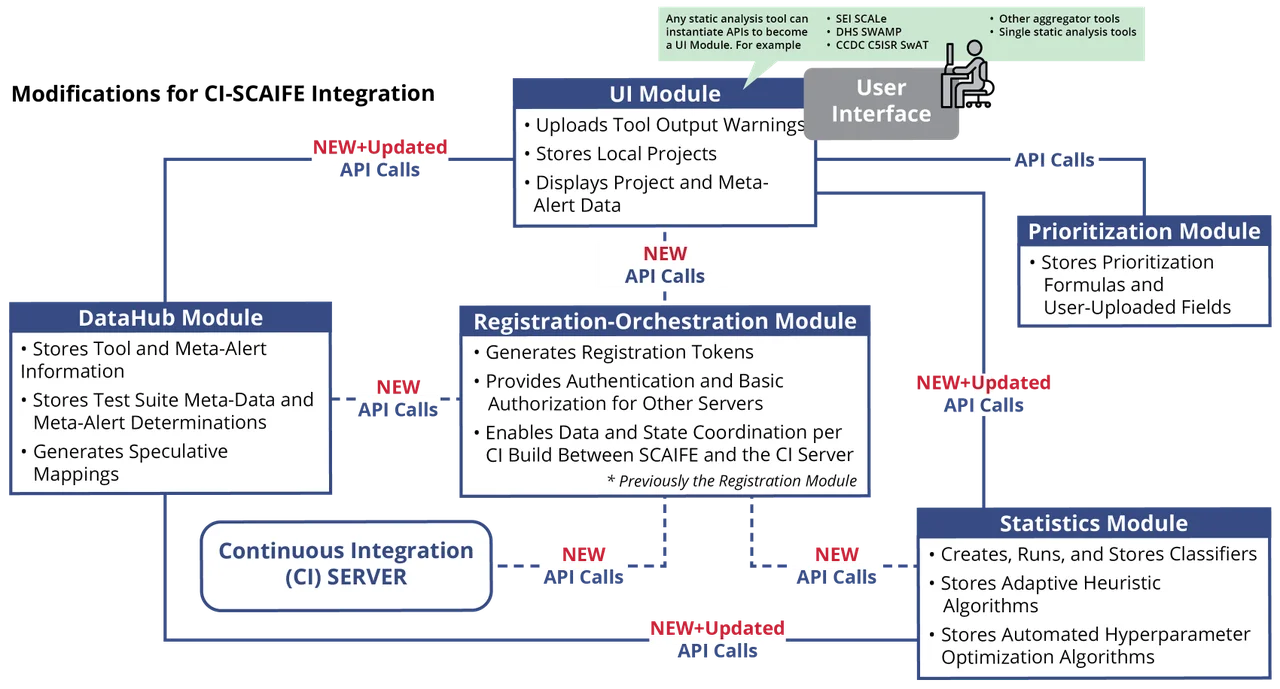

Since the initial release of v 1.0.0 in late March 2020, we and our collaborators have continued to drive the evolution of SCAIFE by releasing interim versions with growing sets of functionality that bring us closer to our next goal, which is to integrate SCAIFE with a continuous-integration (CI) system. Modifications for CI-SCAIFE integration are shown in Figure 3.

As with the v 1.0.0 release, we are implementing parts of this modified architecture, and our collaborators have been testing and providing feedback as we continue further development.

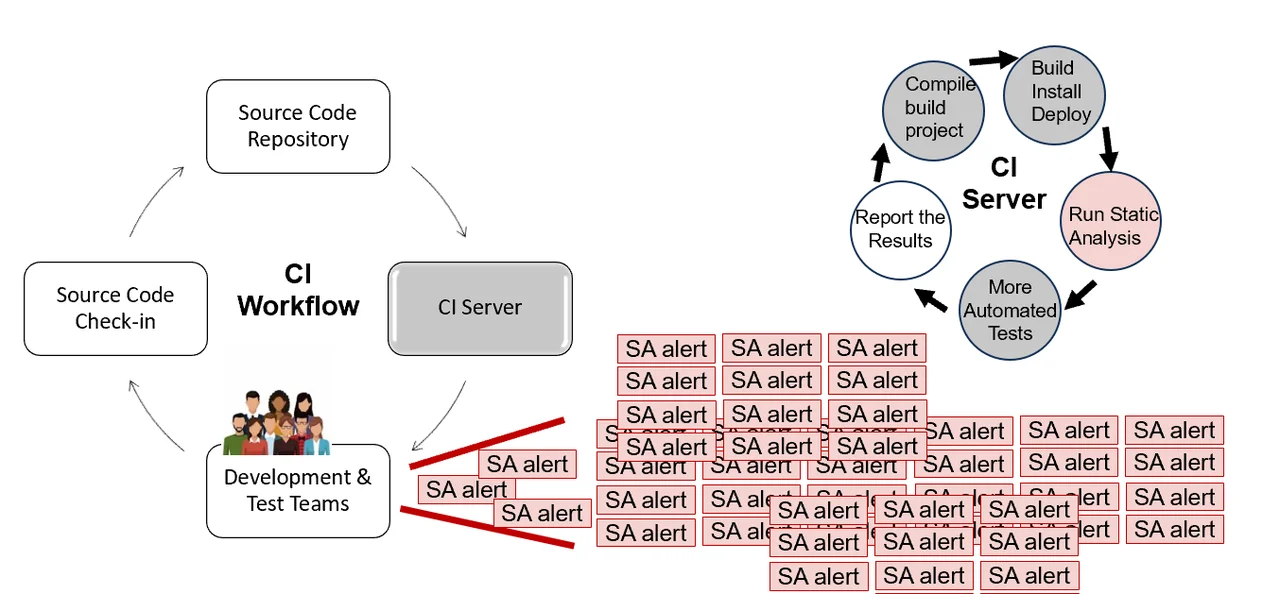

Figure 4 shows a vision that integrates classifier use with CI systems.

Figure 4 shows a CI workflow in which a member of the development and test teams develops code on a new branch (or branches) that implements a new feature or a bug fix. The coder checks their source code into the repository (e.g., git commit and git push). Next, the CI server tests that code, first setting up what is needed to run the tests (e.g., creating files and folders to record logs and test artifacts, downloading images, creating containers, running configuration scripts), then starting the automated tests. In the short CI timeframes, essential tests must be run. These include unit tests that check that small bits of functionality continue to work, integration tests that check that larger parts of the system functionality continue to interact as they should, and sometimes stress tests to ensure that the system performance has not become much worse.

Sometimes (but not always) static analysis is done during the CI-server testing. When this test occurs, it produces output with many alerts. Some meta-alerts may be false positives, and all of them must (normally) be examined manually to adjudicate true or false positives. In very short CI timeframes, however, dealing with static-analysis alerts is of low priority for development and test teams. Any failed unit or integration test must be fixed before the new code branch can be merged with the development branch, so those are of high priority. Beyond that, there are major time pressures from the CI cycle and the other developers or testers who need that bug fix or a new feature added so it does not block their own work or cause a merge conflict in the future.

I am currently leading a research project that is working to develop algorithms and system designs required to enable practical use of static-analysis classifiers during short CI cycles. The project aims to enable catching and fixing some code flaws identified by those tools early in the software-development lifecycle, and thus far more cheaply than later in the lifecycle. We are developing a CI-integrated version of SCAIFE to prototype it, which will be the focus of future blog posts.
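One simple way to picture classifier use under CI time pressure is to surface only the few meta-alerts the classifier is most confident are true positives. The sketch below is an illustrative assumption, not the algorithm under development in this project; the function name, the threshold, and the item cap are all hypothetical.

```python
from typing import List, Tuple


def select_for_review(
    scored: List[Tuple[int, float]],
    threshold: float = 0.8,
    max_items: int = 5,
) -> List[int]:
    """Return IDs of the highest-confidence meta-alerts, capped at max_items.

    `scored` holds (meta_alert_id, confidence) pairs, where confidence is the
    classifier's estimated probability that the meta-alert is a true positive.
    """
    likely_true = [(mid, conf) for mid, conf in scored if conf >= threshold]
    likely_true.sort(key=lambda pair: pair[1], reverse=True)
    return [mid for mid, _ in likely_true[:max_items]]


scored = [(1, 0.95), (2, 0.40), (3, 0.85), (4, 0.99)]
print(select_for_review(scored, max_items=2))
```

Capping the list keeps the review cost within a short CI cycle, at the price of deferring lower-confidence meta-alerts to a later, less time-constrained audit.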

Additional Resources

Download the scaife-scale branch of SCALe: https://github.com/cmu-sei/SCALe/tree/scaife-scale.

Examine the YAML specification of the latest published SCAIFE API version on GitHub.

Read the SEI blog post, Managing Static Analysis Alerts with Efficient Instantiation of the SCAIFE API into Code and an Automatically Classifying System

View the presentation, Static Analysis Classification Research FY16-20 for Software Assurance Community of Practice

Read the technical manual (and we hope you will follow the steps to test and instantiate SCAIFE code!), How to Instantiate SCAIFE API Calls: Using SEI SCAIFE Code, the SCAIFE API, Swagger-Editor, and Developing Your Tool with Auto-Generated Code.

Read the SEI blog post, A Public Repository of Data for Static-Analysis Classification Research.

Read the SEI blog post, Test Suites as a Source of Training Data for Static Analysis Alert Classifiers.

Read the SEI blog post, SCALe v. 3: Automated Classification and Advanced Prioritization of Static Analysis Alerts.

Read the SEI blog post, An Application Programming Interface for Classifying and Prioritizing Static Analysis Alerts.

Read the SEI blog post, Prioritizing Security Alerts: A DoD Case Study.

Read the SEI blog post, Prioritizing Alerts from Static Analysis to Find and Fix Code Flaws.

Read the SEI technical report, Integration of Automated Static Analysis Alert Classification and Prioritization with Auditing Tools: Special Focus on SCALe.

Review the 2019 SEI presentation, Rapid Construction of Accurate Automatic Alert Handling System.

Read other SEI blog posts about static analysis alert classification and prioritization.

Watch the SEI webinar, Improve Your Static Analysis Audits Using CERT SCALe's New Features.

Read the Software QUAlities and their Dependencies (SQUADE, ICSE 2018 workshop) paper, Prioritizing Alerts from Multiple Static Analysis Tools, Using Classification Models.

View the presentation, Challenges and Progress: Automating Static Analysis Alert Handling with Machine Learning.

View the presentation (PowerPoint), Hands-On Tutorial: Auditing Static Analysis Alerts Using a Lexicon and Rules.

Watch the video, SEI Cyber Minute: Code Flaw Alert Classification.

View the presentation, Rapid Expansion of Classification Models to Prioritize Static Analysis Alerts for C.

Look at the SEI webpage describing our research on static-analysis alert automated classification and prioritization.

Read other SEI blog posts about SCALe.
