Prioritizing Alerts from Static Analysis to Find and Fix Code Flaws

PUBLISHED IN

Secure Development

In 2015, the National Vulnerability Database (NVD) recorded 6,488 new software vulnerabilities, and the NVD documents a total of 74,885 software vulnerabilities discovered between 1988 and 2016. Static analysis tools examine code for flaws, including those that could lead to software security vulnerabilities, and produce diagnostic messages ("alerts") indicating the location of the purported flaw in the source code, the nature of the flaw, and often additional contextual information. A human auditor then evaluates the validity of the purported code flaws. The effort required to manually audit all alerts and repair all confirmed code flaws is often too much for a project's budget and schedule. Auditors therefore need tools that allow them to triage alerts, strategically prioritizing the alerts for examination. This blog post describes research we are conducting that uses classification models to help analysts and coders prioritize which alerts to address.

Static Analysis Alerts: Issues and Goal

Static analysis tools examine software without running it as an executable, as opposed to dynamic analysis tools that execute the program. Often static analysis tools inspect the source code files of the application, though binary static analysis is also possible. The result of this examination is a collection of alerts. At a bare minimum, alerts contain a source code location (file path and line number, for example), and a textual description of the alert. Many static analysis tools provide additional contextual information, such as example execution paths and variable values that may trigger the undesired behavior identified by the alert. For multi-line code flaws, some static analysis tools provide a starting line and an ending line.
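As a rough illustration of the minimum content of an alert described above, the following Python sketch models one alert as a small record. The class name `Alert`, its field names, and the sample values are hypothetical and do not come from any particular tool's output format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Alert:
    """One diagnostic message from a static analysis tool (hypothetical schema)."""
    tool: str                       # analyzer that produced the alert
    file_path: str                  # source file the alert points at
    line: int                       # starting line of the purported flaw
    message: str                    # textual description of the alert
    end_line: Optional[int] = None  # ending line, for tools that report multi-line flaws

    def span(self) -> range:
        """Lines covered by the alert; a single line when no end line is given."""
        last = self.end_line if self.end_line is not None else self.line
        return range(self.line, last + 1)


# Made-up example values, for illustration only.
alert = Alert("toolA", "src/parse.c", 42, "possible integer overflow", end_line=44)
print(list(alert.span()))
```

Real tools typically attach more context (for example, execution paths or variable values), which would become additional optional fields in a record like this one.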

The alerts produced by static analysis tools may be false positives, which incorrectly mark correct code as flawed. These false positives stem from a fundamental tradeoff of static analysis between correctness and performance. In particular, a tool may have to provide imprecise results to complete its analysis in a reasonable amount of time and with a reasonable amount of computation resources. Some static analysis tools, such as theorem provers like Z3, lean more towards correctness at the cost of performance. Beyond false positives, static analysis tools are also susceptible to false negatives (not producing alerts for flawed code). Some tools exhibit false negatives simply because they do not examine the code for that particular type of flaw.

Static analysis tool vendors (and open-source static analysis tool projects) have carefully negotiated these compromises to produce enough alerts that identify real issues, without drowning their tool users in false positives. Al Bessey, from Coverity, notably discusses the difficulty of this negotiation in his article, A Few Billion Lines of Code Later: Using Static Analysis to Find Bugs in the Real World.

Previous research has shown that widely-used static analysis tools have limited overlap in the types of code flaws they can find. One reason is that developing new static analyses is hard work, and tool makers may not yet have incorporated a particular analysis into their tools. Some analyses for particular code flaws also take a lot of computer memory, time, or disk space. These analyses may therefore not be incorporated in tools because that resource cost is unpopular with the tool's users.

By running multiple static analysis tools on a codebase, analysts can increase the number of types of code flaws found, ensuring a more comprehensive analysis. This approach, however, compounds the problem of generating too many alerts to deal with, including too many false positives.

Empirical results cited by Kremenek et al. show that tools that effectively identify software coding errors can have a false positive rate of 30 percent or higher. Our research in this area aims to automate the classification of true and false positives as much as possible. We also assign a confidence to the alerts that require human examination to help prioritize alerts and direct auditor efforts.

Our Approach

Related research by others ([Meng 2008], [Kong 2007], [Kremenek 2004a], and [Plakosh 2014]) on alerts from multiple static analysis tools uses statistical methods that take into account less complex potential correlations (for example, fewer features and/or simpler mathematical models) or lacks a comprehensive review of alerts from multiple analyzers. There are many papers on single-analyzer alert classification and prioritization techniques that use features considered for our classification models, including [Heckman 2011], [Ruthruff 2008], [Kremenek 2004b], and [Heckman 2007].

Previous research has demonstrated success in accurately determining confidence in alerts produced by single static analysis tools and in ordering alerts using that confidence and other factors. For example, Predicting Accurate and Actionable Static Analysis Warnings: An Experimental Approach generated models that were more than 85 percent accurate in predicting false positives for alerts from the FindBugs static analysis tool. The authors ordered alerts for auditing based on dynamically updated information about whether code repairers historically chose to fix that type of confirmed code flaw. Previous research papers use code contextual information, alert type selection, data fusion, machine learning, and mathematical and statistical models to tackle the problem of sorting true and false alerts. Our research includes all of these techniques. Additional methods that others have used include dynamic detection, graph theory, and model checking. Building on others' work, we gather a wide range of previously used features related to alert and code characteristics.

The approach we are exploring also builds on previous SEI research, such as Improving the Automated Detection and Analysis of Secure Coding Violations [Plakosh 2014], which resulted in three rule-level (for alerts mapped to SEI CERT coding rules) binary logistic regression models that use as a feature the pattern of which analyzers flagged a specific diagnostic. In other words, for three SEI CERT coding rules, classifiers were developed and trained on audit data from multiple static analysis tools. The classifiers were then validated on another set of alerts: each classifier made a prediction (True/False) for each alert, and the prediction accuracy was checked against the manually-audited "ground truth". This was the first published case of advanced statistical analyses using fused alerts from multiple static analysis tools to accurately predict whether an alert is true or false.
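The two ideas in this paragraph, the "which analyzers flagged it" feature and the validation against audited ground truth, can be sketched in a few lines of Python. This is only an illustration under assumptions: the function names, the 0/1 encoding, and the tool names are hypothetical, and the sketch does not reproduce the logistic regression models themselves.

```python
from typing import Iterable, List, Sequence


def analyzer_pattern(flagged_by: Iterable[str], analyzers: Sequence[str]) -> List[int]:
    """Encode which analyzers flagged a diagnostic as a 0/1 vector (assumed encoding)."""
    flagged = set(flagged_by)
    return [1 if name in flagged else 0 for name in analyzers]


def accuracy(predicted: Sequence[bool], audited: Sequence[bool]) -> float:
    """Fraction of alerts whose predicted verdict matches the manual audit verdict."""
    if len(predicted) != len(audited):
        raise ValueError("predicted and audited must have the same length")
    if not audited:
        raise ValueError("at least one audited alert is required")
    hits = sum(p == a for p, a in zip(predicted, audited))
    return hits / len(audited)


# Made-up tool names and verdicts, for illustration only.
print(analyzer_pattern(["toolB"], ["toolA", "toolB", "toolC"]))
print(accuracy([True, False, True, True], [True, False, False, True]))
```

Accuracy is only one possible summary; with far more false positives than true positives in the audit data, per-class measures such as precision and recall may be more informative.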

In the research described by this post, we extend the initial SEI research through evaluation of additional classification techniques and use a much larger set of features. We also analyze a larger and more heterogeneous set of data. We created rule-level classifiers and developed an initial classifier that uses the SEI CERT rule ID (consisting of a 3-letter prefix indicating the category, a number, a dash and the language, e.g., "INT33-C") as another feature for classification. We are continuing to hone our classification models by adjusting parameters used to create the classifier, adding new data to the data sets used to train and test the models, and experimenting with sets of features used as input to the classifier development. After each classifier is developed, we apply it to corresponding alerts in a test data set for evaluating the predictive accuracy of our models. Our scientific approach is novel because of its use of multiple analyzers, the large variety of features we use, and the competing advanced classification techniques whose performance we are comparing.
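The rule ID format described above (3-letter category, number, dash, language) is regular enough to split into separate features. The Python sketch below shows one way to do that; the names `RuleId` and `parse_rule_id` are hypothetical, and the pattern accepts any language tag made of letters or "+", which is an assumption rather than a statement about the official set of tags.

```python
import re
from typing import NamedTuple, Optional

# Three-letter category, a number, a dash, then a language tag, as in "INT33-C".
# Accepting any tag made of letters or '+' is an assumption of this sketch.
RULE_ID = re.compile(r"^([A-Z]{3})(\d+)-([A-Za-z+]+)$")


class RuleId(NamedTuple):
    category: str
    number: int
    language: str


def parse_rule_id(text: str) -> Optional[RuleId]:
    """Split a rule ID into its parts, or return None if it does not match."""
    match = RULE_ID.match(text.strip())
    if match is None:
        return None
    return RuleId(match.group(1), int(match.group(2)), match.group(3))


print(parse_rule_id("INT33-C"))
```

Splitting the ID this way would let a classifier treat the category and the language as separate categorical features, in addition to the full rule ID.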

The team of SEI researchers working with me on this project includes David Svoboda, Will Snavely, Robert Stoddard, David Zubrow, Jennifer Burns, Guillermo Marce-Santurio, Eli Kanal, Christine Baek, and Richard Qin. We are partnering with Claire Le Goues, an assistant professor at CMU's School of Computer Science, who is helping us in an advisory role. Her expertise is relevant to our project because her research focus is on software engineering and programming languages, particularly on how to construct, debug, and assure high-quality software systems. Our research aligns with goals set by the Department of Defense (DoD), which has recently prioritized rapid, assisted, and automated software analysis and validation. This research also aligns with one of the two technical areas of the SEI's strategic plan: Lifecycle Assurance of Software-Reliant Systems.

Our approach will enable software analysts and coders to order alerts to address by automatically:

- Calculating a confidence metric (confidence that a given alert is true or false).

- Partitioning alerts into three categories: expected true positive (e-TP), expected false positive (e-FP), and indeterminate (I). Note that the e-TP alerts can go directly to the code repairers after being classified, without manual auditing.

- Ordering the alerts in the indeterminate category, using the confidence metric. This ordering may take into account separately-calculated metrics related to the alert such as risk and cost.
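The three steps above can be sketched as a small triage function. This is a minimal Python sketch under assumptions: the confidence is taken to be an estimated probability that the alert is a true positive, the two thresholds (0.95 and 0.05) are hypothetical placeholders, and sorting indeterminate alerts from most to least likely true is just one possible ordering.

```python
from typing import Dict, List, Tuple


def triage(
    confidences: Dict[str, float],
    tp_threshold: float = 0.95,  # hypothetical cutoff for "expected true positive"
    fp_threshold: float = 0.05,  # hypothetical cutoff for "expected false positive"
) -> Tuple[List[str], List[str], List[str]]:
    """Partition alert IDs into (e-TP, e-FP, ordered indeterminate).

    `confidences` maps an alert ID to the estimated probability that the alert is true.
    """
    e_tp: List[str] = []
    e_fp: List[str] = []
    indeterminate: List[Tuple[str, float]] = []
    for alert_id, p_true in confidences.items():
        if p_true >= tp_threshold:
            e_tp.append(alert_id)
        elif p_true <= fp_threshold:
            e_fp.append(alert_id)
        else:
            indeterminate.append((alert_id, p_true))
    # Assumed ordering: audit the alerts most likely to be true first.
    indeterminate.sort(key=lambda item: item[1], reverse=True)
    return e_tp, e_fp, [alert_id for alert_id, _ in indeterminate]


# Made-up confidence values, for illustration only.
scores = {"a1": 0.99, "a2": 0.02, "a3": 0.60, "a4": 0.30}
print(triage(scores))
```

An ordering that also weighs risk and cost, as mentioned in the last bullet, could replace the sort key with a combined score.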

To create a classifier for a given rule from the SEI CERT coding rules, we use our archival data for all of the audited alerts that map to that rule, along with new data gathered for this project. To create a classifier based on the entire data set, we use our archival data for all the audited alerts plus the newly gathered data.

Using a review of the aforementioned previous related research (SEI and external), we identified candidate features for inclusion in our classification models. These features include the following (not a comprehensive list):

- depth

- significant lines of code (SLOC) in function/method, file, program

- lines of code (LOC) in function/method, file, program

- cyclomatic complexity

- coupling

- cohesion

- language

- fuse-able alerts (same line, file, and rule violation) from each analyzer tool used

- code churn

- alert count (file, function, etc.)

- token count in function/method

- count of functions/methods in file

- count of parameters in function/method

- average number of tokens

- average SLOC

- partially-shared filepaths

- classname (if applicable)

- method/function name

- package name (if applicable)

- many features specific to a particular tool, which vary per-alert

We are analyzing stored data on audited alerts along with the associated features listed above, using four different classification techniques:

For this research project, one of the data sets we are using includes audit data for 20 codebases representing 19,237 KLOC with 3,147 confirmed true positives and 11,772 confirmed false positives.

These 20 codebases were audited using CERT's Source Code Analysis Laboratory (SCALe), which is a framework that uses multiple commercial, open source, and experimental analysis tools to analyze codebases for potential flaws. By using multiple static code analysis tools, SCALe finds more code defects than a single static code analysis tool would find. Using multiple tools, however, increases the human work required to handle the large volume of diagnostic output. SCALe has been used to analyze more than 16 million lines of code, including codebases from the DoD, energy delivery systems, medical devices, and more.

An auditor who uses SCALe initially provides a collection of static analysis tool outputs and source code files via a graphical user interface. The SCALe application stores the alerts from the tools into a database using a common schema. Some additional attributes are computed and stored for each alert, such as the CERT coding rule to which it corresponds. These rules are drawn from CERT's Secure Coding Standards. The SCALe application includes mappings for each of the SCALe-integrated tools' alerts to CERT's Secure Coding Standards, and alerts from multiple tools may map to the same CERT coding rule. The mapping from alert to rule is vital to our per-rule classification.

An auditor can use the application to inspect the alerts. The web-browser based interface allows the auditor to filter and prioritize the alerts, to examine code related to a particular alert, and to set a verdict (e.g. true or false) for each alert. SCALe updates audit determinations in the database as the auditor works.

We have enhanced SCALe for this project. This "enhanced-SCALe" collects additional information for each alert and implements some additional functionality, such as data anonymization. The additional data is drawn from a number of sources. We gather source code metrics (such as cyclomatic complexity, significant lines of code) using a modified version of the Lizard tool. We extract additional fields from the static analysis tool outputs.

Another script we developed performs fusion and analysis, preparing final data to use as input to statistical software. This script converts enhanced-SCALe's multi-table database into a flat comma-separated value (.csv) file, which is useful for classifier tools. This script also fuses alerts for the same [rule, line number, file] tuple. In addition, the script performs more analysis and adds features to the alerts, including alert count per-file, alert count per-function, and depth of file in project, and it splits the file path so that partially-shared file paths can be used as features.
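The fusion and feature-derivation steps described above can be sketched in Python as follows. This is not the project's script: the function name `fuse_alerts`, the dictionary keys, and the tool names are hypothetical, the per-file alert count is computed after fusion (an assumption), and depth is taken to be the number of directories above the file.

```python
from collections import Counter, defaultdict
from pathlib import PurePosixPath
from typing import Dict, Iterable, List


def fuse_alerts(alerts: Iterable[Dict]) -> List[Dict]:
    """Fuse alerts sharing the same (rule, file, line) and add derived features."""
    tools_by_key = defaultdict(set)
    for alert in alerts:
        key = (alert["rule"], alert["file"], alert["line"])
        tools_by_key[key].add(alert["tool"])

    # Alert count per file, counted on fused alerts (assumption of this sketch).
    alerts_per_file = Counter(file for (_, file, _) in tools_by_key)

    fused = []
    for (rule, file, line), tools in sorted(tools_by_key.items()):
        parts = PurePosixPath(file).parts
        fused.append({
            "rule": rule,
            "file": file,
            "line": line,
            "tools": sorted(tools),                  # which analyzers flagged it
            "alerts_in_file": alerts_per_file[file],
            "depth": len(parts) - 1,                 # directories above the file
            # Partially-shared file paths: every leading directory prefix.
            "path_prefixes": ["/".join(parts[:i]) for i in range(1, len(parts))],
        })
    return fused


# Made-up alerts, for illustration only.
raw = [
    {"tool": "toolA", "rule": "INT33-C", "file": "src/net/parse.c", "line": 10},
    {"tool": "toolB", "rule": "INT33-C", "file": "src/net/parse.c", "line": 10},
    {"tool": "toolA", "rule": "EXP34-C", "file": "src/net/parse.c", "line": 25},
]
for row in fuse_alerts(raw):
    print(row)
```

Each fused row is a flat dictionary, so writing the result to a .csv file would amount to choosing a column order and serializing the list-valued fields.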

Our classifier development method could easily be extended to work with other standards and other frameworks for storing audit data. For example, the CERT coding rules and/or SCALe could be replaced with other related standards and platforms. The alert mapping within SCALe could easily be extended to map alerts to other coding rules and enumerated lists related to code flaws, such as Common Weakness Enumeration, OWASP Application Security Verification Standard Project, and MISRA (Motor Industry Software Reliability Association) rules. Similarly, audit data from other multiple-static-analysis-tool frameworks can potentially be transformed into a format amenable to our classifiers and classifier development tools.

Field Testing Our Approach with DoD Collaborators

In addition to the 20 CERT-audited code bases, we also are obtaining audit data from three DoD organizations (this collaboration will be the subject of a future blog post). Two of the organizations each reported a need to conduct security audits on in excess of 100 MSLOC (million significant LOC). Extrapolating from archival data from previous CERT audits (with a ratio of 3.31 alerts per 1,000 LOC), we expect the two organizations to collectively identify approximately 662,000 alerts. Our goal is to automatically classify 90 percent of flagged anomalies as true and false positives with 95 percent accuracy. If successful, our method (and the subsequent software tools) would significantly reduce the effort needed to inspect static analysis results and prioritize confirmed defects for repair.
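The extrapolation above is a single multiplication, reproduced here as a short Python check. The sketch assumes exactly 100 MSLOC per organization (the text says "in excess of") and applies the per-1,000-LOC ratio directly to that size; the variable names are illustrative.

```python
ALERTS_PER_KLOC = 3.31   # ratio from archival CERT audit data, per the text
ORGANIZATIONS = 2
MSLOC_PER_ORG = 100      # assumed exactly 100; the text says "in excess of"

total_kloc = ORGANIZATIONS * MSLOC_PER_ORG * 1_000   # 1 MSLOC = 1,000 KLOC
expected_alerts = round(ALERTS_PER_KLOC * total_kloc)
print(expected_alerts)
```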

An organization would use the automated classification system that we developed as follows:

- e-TPs (expected true positives) would be sent directly to a code repair team.

- I's (indeterminates) would be sent directly to a diagnostics analysis team for manual investigation.

- e-FPs (expected false positives) would be ignored.

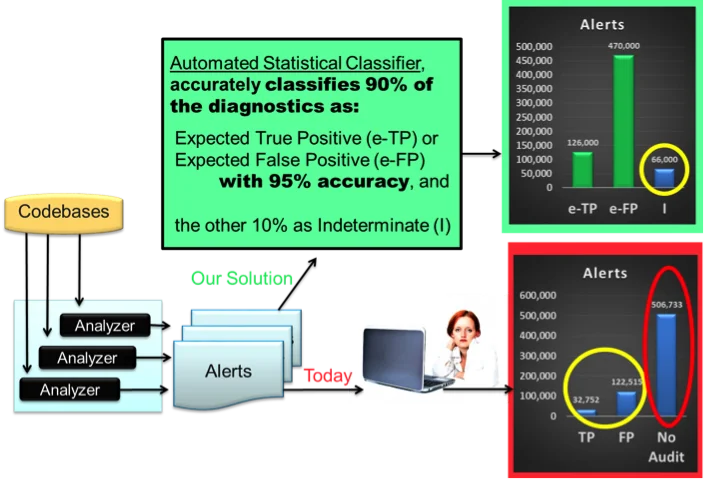

Further extrapolating from CERT's stored audit data to get a True:False ratio (of 1:3.74), in conjunction with our stretch goal of accurately classifying 90 percent of alerts as e-TP or e-FP, for the 200 MSLOC we would generate 126,000 e-TPs to send directly to the code repair teams to fix, 470,000 e-FPs to ignore, and 66,000 I's to manually investigate. These numbers describe a 90 percent reduction in time spent on manual investigation while still considering all of the alerts, without increasing code vulnerability (the 90 percent of alerts automatically classified as e-TP or e-FP directly translates into the 90 percent reduction in time manually auditing alerts). The confidence metric would help organizations prioritize which of the indeterminate alerts to audit first. In practice, an organization might still choose to not manually examine some of the lowest-priority indeterminate alerts.
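The split quoted above follows from the 90 percent goal and the 1:3.74 True:False ratio. The following Python sketch reproduces the arithmetic; the function name `partition_counts` is hypothetical, and it assumes the True:False ratio applies unchanged to the automatically classified alerts.

```python
from typing import Tuple


def partition_counts(
    total_alerts: int,
    auto_fraction: float = 0.90,   # share of alerts classified automatically
    false_per_true: float = 3.74,  # True:False ratio of 1:3.74
) -> Tuple[int, int, int]:
    """Return estimated (e-TP, e-FP, indeterminate) alert counts."""
    auto = total_alerts * auto_fraction
    e_tp = auto / (1 + false_per_true)   # one true alert per 4.74 classified alerts
    e_fp = auto - e_tp
    indeterminate = total_alerts - auto
    return round(e_tp), round(e_fp), round(indeterminate)


e_tp, e_fp, indeterminate = partition_counts(662_000)
print(e_tp, e_fp, indeterminate)
```

Rounded to the nearest thousand, the three results correspond to the 126,000, 470,000, and 66,000 figures in the text.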

Figure 1 shows the envisioned improvement. Multiple static analysis tools are run on the codebases and each tool outputs a set of static analysis alerts. Following the arrows from the alerts on the bottom of the figure, today a human auditor must manually examine the alerts and the associated code to determine if each alert is true or false. This effort often takes far too long to accomplish given many organizations' budgetary constraints. In the red-bordered chart, therefore, the alerts that could be completed in an estimated 12,939 hours of auditing work (using the 5 minutes per alert review estimated in [Hayward, 2008]) are circled in yellow, and the remaining 506,733 un-audited alerts are circled in red. Our goal is shown along the path with the upper arrows in the figure. By automatically and accurately classifying 90 percent of the alerts as e-TP or e-FP, a human auditor is only needed to audit the 66,000 remaining indeterminate alerts. That takes only 5,500 hours to complete, which is less than half the time required in the original scenario. Moreover, using our method, all the alerts requiring a manual audit would get it.

Figure 1: The goal of our research is to greatly reduce the need for manual auditing and number of un-audited alerts. Source: the image of woman and laptop ("Woman And Laptop") is taken from http://www.publicdomainpictures.net/view-image.php?image=47526&picture=woman-and-laptop.
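The hour figures in this scenario follow from the 5-minutes-per-alert estimate. A short Python check is given below; the helper names are hypothetical, and the inputs are the 662,000 total alerts, 12,939 auditing hours, and 66,000 indeterminate alerts quoted in the text.

```python
MINUTES_PER_ALERT = 5  # per-alert review estimate cited in the text


def audit_hours(alert_count: int) -> float:
    """Hours needed to manually audit the given number of alerts."""
    return alert_count * MINUTES_PER_ALERT / 60


def alerts_auditable_in(hours: float) -> int:
    """Number of alerts that can be audited in the given number of hours."""
    return int(hours * 60 / MINUTES_PER_ALERT)


audited_today = alerts_auditable_in(12_939)
unaudited_today = 662_000 - audited_today
hours_with_classifier = audit_hours(66_000)
print(audited_today, unaudited_today, hours_with_classifier)
```

The un-audited count computed this way agrees with the figure in the text to within one alert, and 5,500 hours is roughly 43 percent of 12,939 hours.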

Future Work

Recently published research, Automatically Learning Semantic Features for Defect Prediction, has shown that using semantic features can significantly improve code defect prediction. In the future we therefore plan to incorporate semantic features into our classification models. Moreover, we plan to use features from repository logfiles to develop classifiers. We also plan to use automated parameter optimization for classifier techniques, which recent research has shown can significantly improve classifiers (see Automated Parameter Optimization of Classification Techniques for Defect Prediction Models).

Our future work may also incorporate advanced analysis of the costs, risks, and benefits associated with the alert and use those in addition to confidence when specifying thresholds for the classification models. Such specifications would make the classification models more likely candidates for adoption by software development organizations.

Our model supports analysis with any coding rules, and in the future we want to develop classifiers for other coding standards. A limitation of our current work resides with the set of analyzers used and the willingness of potential adopters to invest in multiple analyzers.

Future work in this area could also involve integrating the results of this research with work on automated code repair being done at the SEI. With that work, our models would be used after automatic code repair of provably-correct code refactoring, which dispositions only a limited number of alerts about potential code flaws. Our method could be used to prioritize (for expert analysis) the potential automated-assistance type of repairs that are not provably correct, but which require human input (e.g., to determine if given the intent of the code, the automated repair would be correct). For all remaining alerts not associated with automated repairs, our method could be used to classify alerts in the usual way mentioned above (into e-TP, e-FP, and I categories).

The next post in this series will discuss our collaboration on this project with the three DoD organizations mentioned above.

We welcome your feedback on this research in the comments section below.

Additional Resources

View a recent presentation that I gave, Automating Static Analysis Alert Handling with Machine Learning: 2016-2018, to Raytheon's Systems And Software Assurance Technology Interest Group.

Read, comment on, and help us to improve our SEI CERT coding standards, which are developed on public-facing wikis.

Learn more about CERT's Source Code Analysis Laboratory.

Below is a list of resources cited in the blog post:

[Heckman 2011] Heckman, Sarah, and Laurie Williams. A systematic literature review of actionable alert identification techniques for automated static code analysis. Information and Software Technology 53.4 (2011): 363-387.

[Heckman 2007] Heckman, Sarah. Adaptively ranking alerts generated from automated static analysis, Crossroads 14.1, 2007.

[Kong 2007] Kong, Deguang, et al. ISA: a source code static vulnerability detection system based on data fusion. Proceedings of the 2nd international conference on Scalable information systems. ICST, 2007.

[Kremenek 2004] Kremenek, Ted, et al. Correlation exploitation in error ranking. ACM SIGSOFT Software Engineering Notes. Vol. 29. No. 6. ACM, 2004.

[Meng 2008] Meng, N., Q. Wang, Q. Wu, and H. Mei. An approach to merge results of multiple static analysis tools. Proceedings of the Eighth International Conference on Quality Software, Oxford, UK, August 12-13, 2008.

[Plakosh 2014] Plakosh, Daniel, Robert Seacord, Robert W. Stoddard, David Svoboda, and David Zubrow. Improving the Automated Detection and Analysis of Secure Coding Violations. (2014).

[Ruthruff 2008] Ruthruff, Joseph R., et al. Predicting accurate and actionable static analysis warnings: an experimental approach. Proceedings of the 30th international conference on Software engineering. ACM, 2008.

More By The Author

Release of SCAIFE System Version 2.0.0 Provides Support for Continuous-Integration (CI) Systems

• By Lori Flynn
