Prioritizing Security Alerts: A DoD Case Study

PUBLISHED IN

Secure Development

Federal agencies and other organizations face an overwhelming security landscape. The arsenal available to these organizations for securing software includes static analysis tools, which search code for flaws, including those that could lead to software vulnerabilities. The sheer effort required by auditors and coders to triage the large number of potential code flaws typically identified by static analysis can hijack a software project's budget and schedule. Auditors need a tool to classify alerts and to prioritize some of them for manual analysis. As described in my first post in this series, I am leading a team on a research project in the SEI's CERT Division to use classification models to help analysts and coders prioritize which vulnerabilities to address. In this second post, I will detail our collaboration with three U.S. Department of Defense (DoD) organizations to field test our approach. Two of these organizations each conduct static analysis of approximately 100 million lines of code (MLOC) annually.

An Overview of Our Approach

The DoD has recently made rapid, assisted, automated software analysis and validation a priority. Relying on previous research on techniques for optimizing alert handling for single static analysis tools, we identified candidate features to include in our classification models. Our approach uses multiple static analysis tools on codebases, an approach which finds more code flaws than using any of the static analysis tools alone. Moreover, we use results from the multiple tools as additional features for the classifiers, hypothesizing that these features can be used to develop more accurate classifiers. We unify outputs from multiple tools into one list, and we fuse alerts from different tools for the same code flaw at the same location.

Fusion requires mapping alerts from different tools to at least one code flaw taxonomy (we used CERT secure coding rules). For matching code locations of alerts that map to the same coding rule, we used matching filename (including directory path) and line numbers. The script we created to perform fusion also performs additional analysis by counting alerts per file, alerts per function, and the depth of the file (with the alert) within the code project. We use results of this analysis as "features" for the classifiers. Features are types of data that are analyzed by the mathematical algorithms for the classifiers. As I explained in the previous blogpost in this series, features we provide to our classifier algorithms include significant lines of code (SLOC) in the program/file/function/method, cyclomatic complexity metrics, coupling metrics, language, and many more.
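The fusion step described above can be sketched roughly as follows. This is a minimal illustration, not the team's actual script: the alert tuples, the tool and checker names, and the choice to count fused alerts per file are all assumptions made for the example.

```python
from collections import Counter, defaultdict
from pathlib import PurePosixPath

# Hypothetical alert records: (tool, checker, rule, path, line).
# The tool and checker names below are made up for illustration.
alerts = [
    ("tool_a", "NULL_DEREF", "EXP34-C", "src/net/io.c", 42),
    ("tool_b", "nullPointer", "EXP34-C", "src/net/io.c", 42),
    ("tool_a", "INT_OVERFLOW", "INT31-C", "src/net/io.c", 97),
    ("tool_b", "uninitvar", "EXP33-C", "src/main.c", 10),
]


def fuse(alerts):
    """Group alerts that share a coding rule, file path, and line number."""
    fused = defaultdict(list)
    for tool, _checker, rule, path, line in alerts:
        fused[(rule, path, line)].append(tool)
    return dict(fused)


def file_features(fused):
    """Derive per-file features: fused-alert count and directory depth."""
    per_file = Counter(path for (_rule, path, _line) in fused)
    return {
        path: {"alerts_in_file": n, "depth": len(PurePosixPath(path).parts) - 1}
        for path, n in per_file.items()
    }


fused = fuse(alerts)
features = file_features(fused)
```

Here the two alerts for EXP34-C at the same file and line collapse into one fused alert that remembers both tools, which is the kind of multi-tool agreement that could later serve as a classifier feature.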

We then use four classification techniques to classify alerts: Lasso logistic regression (LR), classification and regression trees (CART), random forest (RF), and eXtreme Gradient Boosting (XGBoost). Each of these classification techniques assigns membership to classes, but the techniques differ in their ease of implementation and their tendency to over-fit to the training data set. For most of our work to date, we classify alerts only as expected true positive (e-TP) or expected false positive (e-FP).

For some of our work, which we will be expanding upon in 2017, we have also classified alerts as belonging to a third class: indeterminate (I). To divide alerts into the three classes, we assign membership to one of the three classes by using probabilities the classifiers produce in conjunction with user-specified thresholds. Ideally, our approach would allow software analysts and coders to immediately put the e-TPs into a set of code flaws to be fixed (that list would undergo its own prioritization for fixing) and order the indeterminate alerts (using a heuristic combining confidence, cost to repair, and estimated risk if not repaired), which would save valuable resources and time.
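One way to picture the three-class assignment is the sketch below. It assumes the classifier outputs a probability that an alert is a true positive and that the user supplies a lower and an upper threshold; the function name and the default threshold values are hypothetical, chosen only for illustration.

```python
def assign_class(p_true, lower=0.25, upper=0.75):
    """Map a classifier's probability that an alert is a true positive
    to one of three classes using two user-specified thresholds.

    The default threshold values are illustrative assumptions only.
    """
    if not 0.0 <= lower <= upper <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= lower <= upper <= 1")
    if p_true >= upper:
        return "e-TP"  # expected true positive
    if p_true <= lower:
        return "e-FP"  # expected false positive
    return "I"  # indeterminate: left for manual analysis


labels = [assign_class(p) for p in (0.95, 0.50, 0.10)]
```

Moving the thresholds closer together shrinks the indeterminate class at the cost of more misclassified alerts, so the choice depends on how much manual auditing effort is available.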

The team that has worked with me on this project includes Will Snavely, David Svoboda, David Zubrow, Robert Stoddard, and Nathan VanHoudnos (all of the SEI) as well as CMU students Jennifer Burns, Richard Qin, and Guillermo Marce-Santurio. We also partnered with Claire LeGoues, an assistant professor at CMU's School of Computer Science whose research interest focuses on how software engineers can construct and evolve high-quality systems. Dr. LeGoues provided helpful feedback and advice as we structured the research project. Our DoD collaborators were an important part of the team, as described below.

Getting Sanitized Data from DoD Collaborators

As part of our research, we incorporated three sets of anonymized code analysis results from DoD collaborators. All three of our collaborators used enhanced-SCALe, a framework for auditing static analysis alerts from multiple static analysis tools based on the CERT Source Code Analysis Laboratory (SCALe) tool. SCALe maps the alerts to CERT secure coding rules for four coding languages (C, C++, Perl, and Java). It has a GUI that enables examination of the code related to an alert and marking a determination (e.g., true or false), and it enables easy export of audit project information for a codebase in SQLite or CSV format.

We enhanced SCALe to provide

- more data about the alerts and codebases for building classifiers

- multiple installation options for our collaborators

- data sanitization that our collaborators needed to be able to share their data with us

The DoD organizations had differing restrictions on the computing environments that they could (or were required to) use, and two of them had lengthy approval processes for new environments. Our collaborators required offline installations, so we developed offline installations for enhanced-SCALe (and for SCALe) that included third-party codebases with open-source licenses. Installation needs also varied: some collaborators could only install the software on particular operating systems (and particular versions of those), and others needed a virtual machine with enhanced-SCALe pre-installed on it. We have received three sanitized datasets from two collaborators so far, and the third collaborator recently installed enhanced-SCALe.

The DoD organizations performed the following steps:

1. Run static analysis tools on their codebases.

Detail: SCALe currently can work with a limited number of static analysis tools (and only particular versions of those tools), so we provided information on which tools they should use. For a particular programming language and compilation platform (e.g., MS Windows or Linux) for a codebase, a different subset of SCALe-compatible static analysis tools can be used to analyze the code. The DoD organizations were limited to a subset of the SCALe-compatible commercial tools. They used most of the SCALe-compatible cost-free static analysis tools, but none used the free Rosecheckers tool because of the time required to set it up. The static analysis tools could be run on different computers than those on which enhanced-SCALe was installed.

2. Install enhanced-SCALe.

Detail: Either they installed enhanced-SCALe from our offline install software package, or they installed enhanced-SCALe from the new virtual machine we created. All the collaborators installed enhanced-SCALe on computers that weren't connected to the Internet, due to concerns about sensitive data related to the codebases. For instance, the un-sanitized static analysis tool output indicates possible code flaws that attackers could take advantage of, along with details that could help an exploit writer.

3. Create enhanced-SCALe projects.

Detail: This step involved using the enhanced-SCALe graphical user interface (GUI) to upload the static analysis tools' outputs and the codebase on which the static analysis tools had been run, then naming the project. Enhanced-SCALe automatically runs a code metrics tool (an SEI-branched fork of the Lizard tool) as it creates the project. Code metrics analyzed by Lizard include the number of lines of code without comments, cyclomatic complexity, function token count, function parameter count, and more. A script developed by our team parses output from Lizard and stores data of interest so it can be correlated with the appropriate alerts.

When examining options for a code metrics tool, we looked for tools that are low-cost or free and simple to install (we assumed that if the software was too time-consuming to install a collaborator might drop out). We also wanted to use a tool that worked relatively quickly (because speed was also important to our collaborators) and to find a tool that would provide many of the metrics that we were seeking in our research.

4. Analyze the validity of alerts and make determinations using the enhanced-SCALe GUI.

Detail: Two of the DoD organizations were only auditing for a subset of the CERT C secure coding rules; they used the GUI to filter alerts, so they only saw alerts related to those rules. The third DoD organization audited any Java alerts. By selecting an alert, the auditor can view the related code in the GUI, and then analyze whether the alert is a true or false positive. The auditor used the GUI to enter that determination, which is stored, along with the other data, in the enhanced-SCALe auditing project.

5. Export each enhanced-SCALe project (one project per codebase) as an SQLite database.

Detail: The enhanced-SCALe GUI enables users to export the project. The database contains information about each alert (e.g., checker name, file, line number for code related to the alert, and filepath), mappings between alerts and coding rules, many additional features of the codebase (especially of the code related to the line with which the alert is associated), and audit determinations (e.g., true and false) for alerts that have been audited.

6. From the command line, run a sanitizing script (also developed by our team and part of the enhanced-SCALe software package) on the enhanced-SCALe database.

Detail: The script anonymizes fields of the database(s) to prevent leakage of sensitive information contained in class names, function and method names, package names, file names, file paths, and project names. The sanitizing script also discards diagnostic messages from the static analysis tool that sometimes contain snippets of code. The sanitized information is written to a new sanitized database, along with copies of the other data from the original database that was not potentially sensitive. At the request of one DoD collaborator, we created a second version of the sanitizing script, which outputs the sanitized data into 8 comma-separated value (CSV) files rather than the SQLite format.

We anonymized data by using SHA-256 hashing with a salt. The salt greatly increases the difficulty of creating lookup tables. Lookup tables have pre-hashed dictionary words (and variations), making hashes reversible by enabling quick lookup of words that each hash value could have come from. The salt should be a random string that is relatively long, which is concatenated to the original string meant to be hashed. Concatenating this string is intended to foil potential attackers by making the creation of a lookup table take too much time and space to be worthwhile. For the file path, we gave each directory a separate anonymized name, and used slashes to separate hashed names along a filepath.
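The per-directory hashing of file paths can be illustrated with a short sketch. It assumes the salt is prepended to each string before hashing (the text only says the two are concatenated) and uses hypothetical function names; it is not the sanitizing script itself.

```python
import hashlib


def salted_hash(text, salt):
    """Return the hex SHA-256 digest of the salt concatenated with text.

    Prepending the salt is an assumption; the order is not specified.
    """
    return hashlib.sha256((salt + text).encode("utf-8")).hexdigest()


def anonymize_path(path, salt):
    """Hash each path component separately, keeping the slashes."""
    return "/".join(
        salted_hash(part, salt) if part else "" for part in path.split("/")
    )


salt = "example-salt-not-for-real-use"  # placeholder; a real salt should be random
a = anonymize_path("src/net/io.c", salt)
b = anonymize_path("src/net/util.c", salt)
```

Because each directory name is hashed on its own, two files in the same directory still share the same anonymized prefix, so structural information such as directory depth survives sanitization while the names themselves do not.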

The database sanitization script performs the following tasks:

- It looks for a file named "salt.txt" in the sanitizer script's directory to check whether the collaborator has already created its own salt. If the file does not yet exist, the script generates a salt using the method described below.

- For each potentially sensitive field in the database to be sanitized (as opposed to discarded), it

a. concatenates the salt with whatever string it is about to hash

b. hashes the salted string (the concatenated text)

The salt should be generated using a cryptographically secure pseudo-random number generator. We instructed collaborators to create their own secret (secret-from-the-SEI) salts. If the collaborator didn't do that, then the script auto-generated a salt and put it into a file named "salt.txt." The following command could be used for generating the salt on a Linux computer from a bash terminal: head /dev/urandom -c 16 | base64
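The salt handling described above might look something like the following in Python. This is a sketch under assumptions: the function name is hypothetical, and it uses the standard library's `secrets` module as the cryptographically secure source, producing 16 random bytes encoded as base64 in the same spirit as the shell command.

```python
import base64
import secrets
import tempfile
from pathlib import Path


def load_or_create_salt(directory):
    """Return the salt stored in salt.txt, creating the file if it is missing."""
    salt_file = Path(directory) / "salt.txt"
    if salt_file.exists():
        # The collaborator (or an earlier run) already provided a salt.
        return salt_file.read_text(encoding="utf-8").strip()
    # 16 random bytes from the operating system's CSPRNG, base64-encoded.
    salt = base64.b64encode(secrets.token_bytes(16)).decode("ascii")
    salt_file.write_text(salt + "\n", encoding="utf-8")
    return salt


with tempfile.TemporaryDirectory() as tmp:
    first = load_or_create_salt(tmp)   # file missing: generates and saves a salt
    second = load_or_create_salt(tmp)  # file present: reuses the saved salt
```

Reusing the saved salt matters because the same names must hash to the same values across every database a collaborator sanitizes; a fresh salt per run would break that correspondence.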

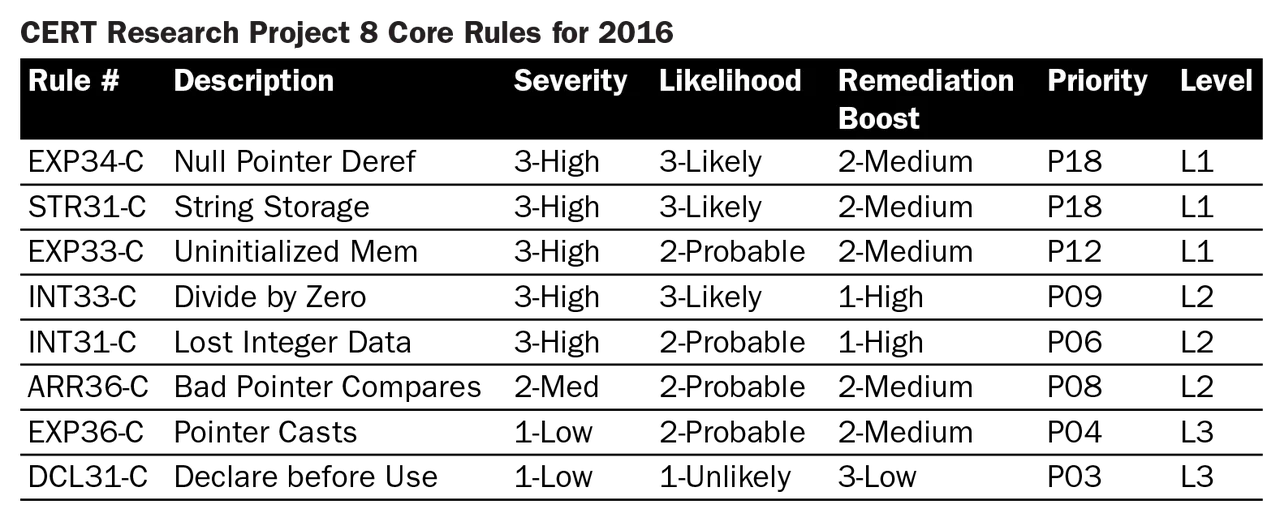

To best benefit from limited collaborator time to do auditing, for the two organizations that annually audit a combined 200 million LOC, we focused their efforts on auditing just 8 coding rules for the C language. The language was chosen by those two collaborators, based on availability of potential auditors already working with particular codebases, often as developers. The language chosen was also based on sensitivity of codebases, because some codebases were considered too sensitive and permissions might take too long to get.

In CERT-audited archives, alerts that map to 15 CERT secure coding rules have 20 or more audit determinations that all are one type (100 percent true or 100 percent false). We wanted collaborators instead to focus their auditing efforts on alerts mapped to coding rules that had mixed audit determinations in CERT audit archives. The audit determinations needed to be mixed true and false to create per-rule classifiers. Many of the coding rules have little or no CERT-audited archive data. There are 382 CERT secure coding rules that cover four languages, and we currently have audit data for 158 of those rules.
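Selecting rules with mixed determinations amounts to a simple grouping, sketched below. The archive entries are invented for illustration (the rule identifiers are real CERT C rule names, but the determinations are not real audit data), and the function name is hypothetical.

```python
from collections import defaultdict

# Hypothetical audit archive entries: (coding rule, determination).
archive = [
    ("INT31-C", True), ("INT31-C", False), ("INT31-C", True),
    ("EXP34-C", True), ("EXP34-C", True),
    ("STR31-C", False),
]


def mixed_rules(archive):
    """Return the rules that have at least one true and one false determination."""
    seen = defaultdict(set)
    for rule, determination in archive:
        seen[rule].add(determination)
    return sorted(rule for rule, values in seen.items() if values == {True, False})


candidates = mixed_rules(archive)
```

A rule whose archived determinations are all true or all false gives a per-rule classifier nothing to separate, which is why only the mixed rules are worth further auditing effort for that purpose.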

In the CERT-audited archives, eight of the coding rules for C had mixed audit determinations, some as few as one of each type. We verified one true and one false randomly selected audit determination from the CERT audit archives for each of these rules to make sure our collaborators' time would be used only on mixed-determination rules. At the time, only the third DoD organization was able to share results from auditing one codebase, which happened to be in Java. To maximize the audit data they could give us from auditing alerts from the single codebase, we didn't ask the third organization to limit audits to particular Java coding rules.

Audit Quality: Lexicon and Rules, Training, and Test

We developed a two-day training class intended to increase data quality. High data quality is obtained when different auditors make the same determination for any given alert. Auditors must use the same rules to make audit determinations. To ensure that happens, auditing rules must be explicitly defined and taught. Corner cases exist where experienced auditors with a good grasp of coding rules and the programming language could make different determinations with reasonable rationales.

Also, terms in an auditing lexicon mean different things to different people unless definitions are documented and taught to all auditors. Currently, however, there is no widely accepted standard lexicon for auditing rules. For example, even within a single DoD collaborator organization, different sub-organizations used the determination 'true' in different ways: some used it only to indicate that the flaw indeed existed, whereas others used it to indicate dangerous code constructs, whether or not the indicated flaw actually exists. No lexicon or auditing rules were documented or taught.

As part of our work on this project, we developed auditing rules and an auditing lexicon, and we published them in a conference paper ("Static Analysis Alert Audits: Formal Lexicon & Rules," IEEE SecDev, November 2016). The DoD collaborators provided helpful input as we developed the lexicon and rules. The two-day training class taught auditing rules, an auditing lexicon, how to use enhanced-SCALe, the eight CERT C secure coding rules that the trainees would audit for, and an overview of research methods related to efficient static analysis alert auditing.

Initial Results and Looking Ahead

Our long-term project goal is to develop an automated and accurate statistical classifier, intended to efficiently use analyst effort and to remove code flaws. If successful, our method would significantly reduce the effort needed to inspect static analysis results and prioritize confirmed defects for repair.

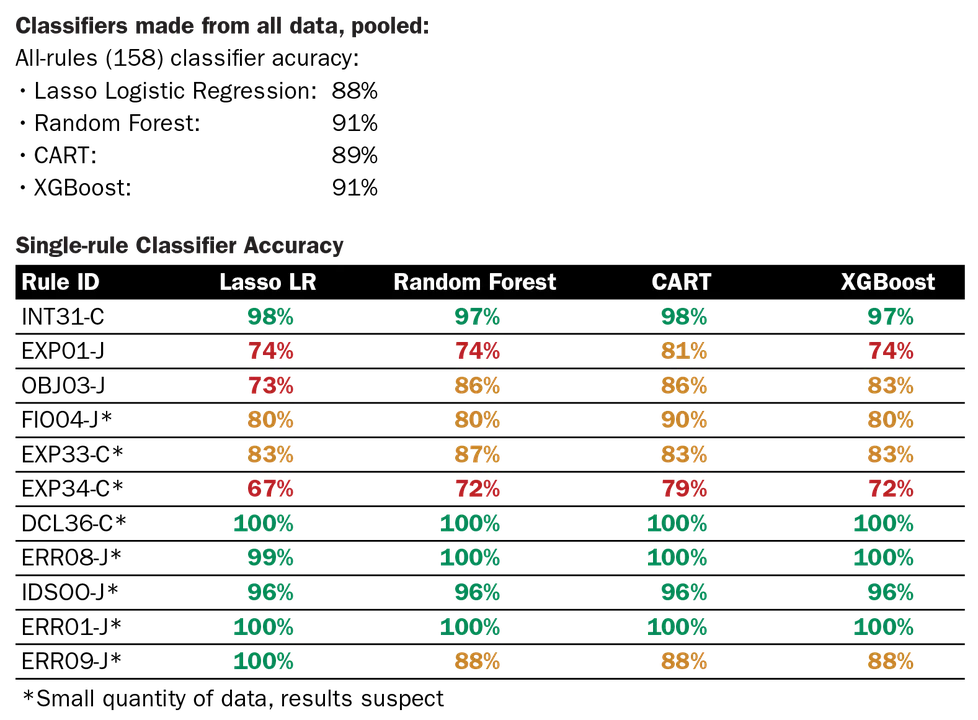

We were able to develop some highly accurate classifiers, but we lacked sufficient data for per-rule classifiers. We created two types of classifiers: (1) all-data, using all data but with the rule names used as a feature, and (2) per-rule, which use only data with alerts mapped to that particular coding rule.

We pooled data (CERT-audited and collaborator data) and segmented it so 70 percent of the data was used for training the classifier models, and 30 percent was used to test the accuracy of the classifier predictions. Results for both types of classifiers are shown below. Accuracy of the all-data classifiers ranged from 88 to 91 percent. For the per-rule classifiers, however, we were only able to construct 11 classifiers using our mathematical software, and of those only three had sufficient data to have confidence in the classifier predictions.
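The 70/30 segmentation and the accuracy measure can be sketched with the standard library alone. The function names, the fixed random seed, and the stand-in records are assumptions for illustration; the post does not say how the split was randomized or which software performed it.

```python
import random


def split_70_30(records, seed=0):
    """Shuffle records reproducibly and split them 70/30 into train and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 7 // 10  # integer arithmetic avoids float rounding
    return shuffled[:cut], shuffled[cut:]


def accuracy(predicted, actual):
    """Fraction of predictions that match the audited determinations."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


records = list(range(10))  # stand-ins for audited alerts
train, held_out = split_70_30(records)
score = accuracy([True, False, True, True], [True, False, False, True])
```

With few audited alerts for a given rule, the 30 percent held out for testing can be only a handful of records, which is one reason accuracy figures for small per-rule datasets deserve caution.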

In addition to the results shown in the charts above, we also created classifier variations on the dataset (per-language, with or without tools, with different ways of handling missing data, etc.). We also created classifiers using only the CERT-audited data, then tested them on the collaborator data to see if or how results might differ. An upcoming research paper that our team is currently working on will describe those results in detail.

A summary of the general results is listed below, with some terms used in the summary described here.

- All-pooled test data refers to classifiers developed and tested using all of the audit archive data (from CERT and from DoD collaborators, using all of the audited alerts no matter which CERT coding rules the alerts mapped to).

- Collaborator test data refers to classifiers developed and tested using only aggregated audit archive data using audited alerts only from DoD collaborators. Different types of classifiers had better accuracies for the two different datasets. Although our classifier development tool was able to build classifiers for 11 coding rules, only 3 of the coding rules really had sufficient data to build standard classifiers and the other results were suspect. The classifier for the CERT coding rule INT31-C had the highest accuracy of the per-rule classifiers.

- With-tools-as-feature classifiers refers to classifiers that use the static analysis tool ID as a feature. Our results show that there is a benefit to using multiple static analysis tools and using the tool IDs as a feature.

- A single language classifier is developed and tested using only audit archive data for a single coding language (e.g., only C or only Java).

- An all-language classifier is developed and tested using audit archive data without filtering out any coding languages.

- The results summarized below were not true for every single test, since there were many combinations of classifier variations.

General results (not true for every test)

- Classifier accuracy rankings for all-pooled test data:

XGBoost ≈ RF > CART ≈ LR

The above mathematical notation means that the accuracy of XGBoost is about equivalent to that of Random Forest and better than CART (which is about equivalent to that of Logistic Regression).

- Classifier accuracy rankings for collaborator test data:

LR ≈ RF > XGBoost > CART

The above mathematical notation means that the accuracy of Logistic Regression classifiers is about equivalent to that of Random Forest classifiers, which is better than that of XGBoost classifiers, which is better than that of CART classifiers.

- Per-rule classifiers were generally not useful because they lacked data, but the classifiers for 3 rules (INT31-C being the best) were exceptions.

- With-tools-as-feature classifiers had better accuracy than classifiers without tools as a feature.

- Accuracy of single language vs. all-languages data:

C > all-combined > Java

The above mathematical notation means that the accuracy of classifiers developed for alerts using only audited data for the C language was the best, which was better than classifiers developed using data from all languages, which was better than classifiers developed using only data for the Java language.

In the year to come, we look forward to continuing work with our three DoD collaborators to improve classifiers for static analysis alerts.

Additional Resources

View a recent presentation that I gave, Automating Static Analysis Alert Handling with Machine Learning: 2016-2018, to Raytheon's Systems And Software Assurance Technology Interest Group.

View the November 2016 slide presentation Prioritizing Alerts from Static Analysis with Classification Models.

View the poster Prioritizing Alerts from Static Analysis with Classification Models.

Read the paper Static Analysis Alert Audits: Lexicon & Rules, which I coauthored with David Svoboda and Will Snavely for the IEEE Cybersecurity Development Conference (IEEE SecDev), November 3-4, 2016.

Read my previous post in this series, Prioritizing Alerts from Static Analysis to Find and Fix Code Flaws.

For more information, see the SEI CERT Coding Standards.
