Release of SCAIFE System Version 2.0.0 Provides Support for Continuous-Integration (CI) Systems

PUBLISHED IN

Secure Development

The Source Code Analysis Integrated Framework Environment (SCAIFE) system is a research prototype for a modular architecture. The architecture is designed to enable a wide variety of tools, systems, and users to use artificial intelligence (AI) classifiers for static-analysis results (meta-alerts) at relatively low cost and effort. SCAIFE uses automation to reduce the significant manual effort required to adjudicate the large number of meta-alerts that static-analysis tools produce. In June 2021, we released Version 2.0.0 of the full SCAIFE system with new features for working with continuous-integration (CI) systems. In this blog post, I describe the key features in this new release and the status of evolving SEI work on SCAIFE.

SCAIFE for Continuous Integration

Continuous integration (CI) has traditionally been defined as “the practice of merging all developers’ working copies to a shared mainline several times a day” and usually includes automated builds and tests by a CI server. In a previous SEI blog post, Continuous Integration in DevOps, C. Aaron Cois wrote,

This continual merging prevents a developer's local copy of a software project from drifting too far afield as new code is added by others, avoiding catastrophic merge conflicts…If a failure is seen, the development team is expected to refocus and fix the build before making any additional code changes. While this may seem disruptive, in practice it focuses the development team on a singular stability metric: a working automated build of the software.

We have enabled the SCAIFE system to work with a range of CI variations, including those broader than daily merges of developer branches on a shared server with automated testing on the CI server. CI can range from daily merges, made when developers commit changes to a code-repository server, to less frequent merges. An example of a less frequent merge is when a CI build automatically tests a developer branch on the CI server until all tests pass, and only then is the developer branch merged into the mainline branch, which might take a week or longer. We also enable testing by development organizations that do not yet use a CI server but that have generated different versions of the codebase and static-analysis tool output for each version. This approach is a less automated version of the updates that a CI server provides to SCAIFE automatically and that SCAIFE uses to update its project information, including information about static-analysis results.

CI-SCAIFE Demo

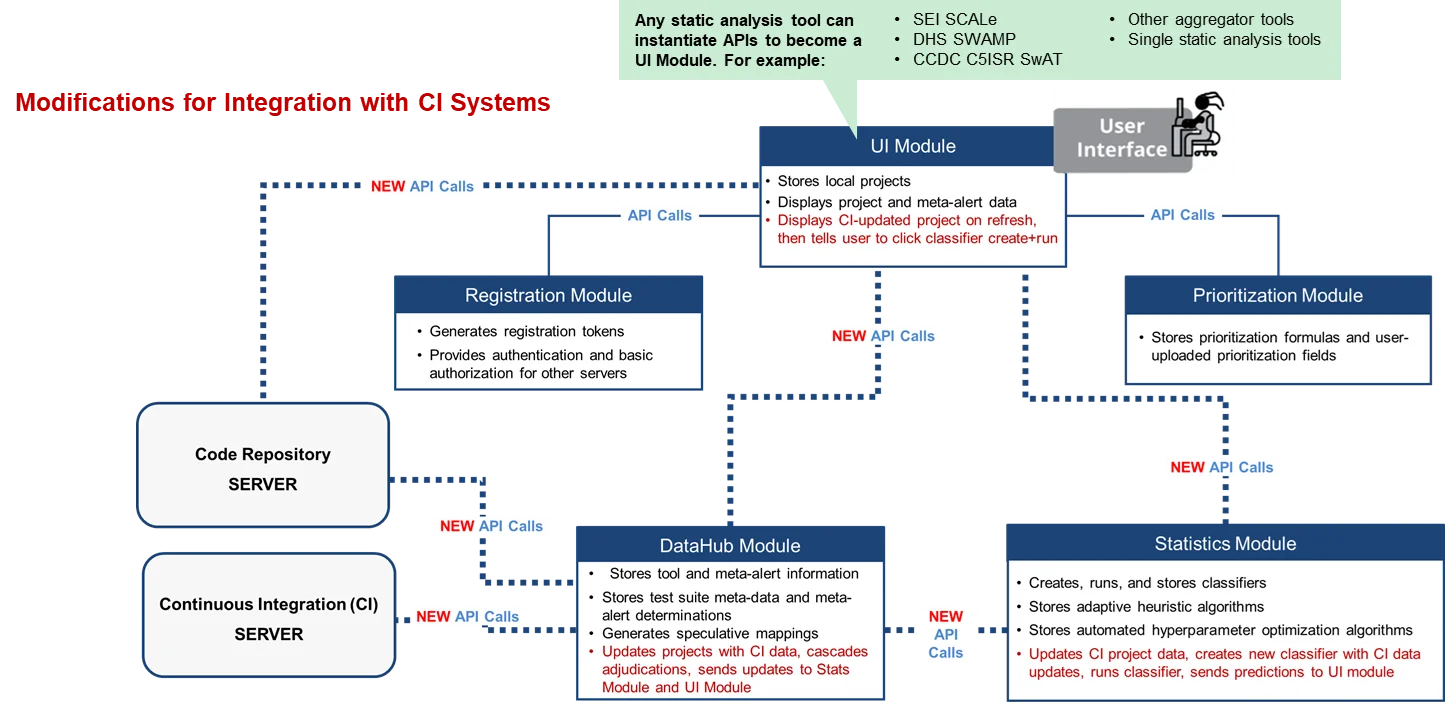

Version 2.0.0 of SCAIFE includes four versions of a hands-on CI-SCAIFE integration test that demonstrates features that enable SCAIFE to work with CI systems. The versions differ by the amount of automation that the tester is able to use. For example, some testers may not have a CI server available or may not have enough available time (about a half day) to run the full CI-server version of the demo. Users create a SCAIFE CI project using a git code repository, where a new code commit to the code repository automatically causes an update—code commit and new static-analysis tool output—to be sent to the SCAIFE DataHub module (see Figure 1 below), which processes the CI update.

Figure 1 below shows the architecture of the SCAIFE system with modifications for CI-SCAIFE integration:

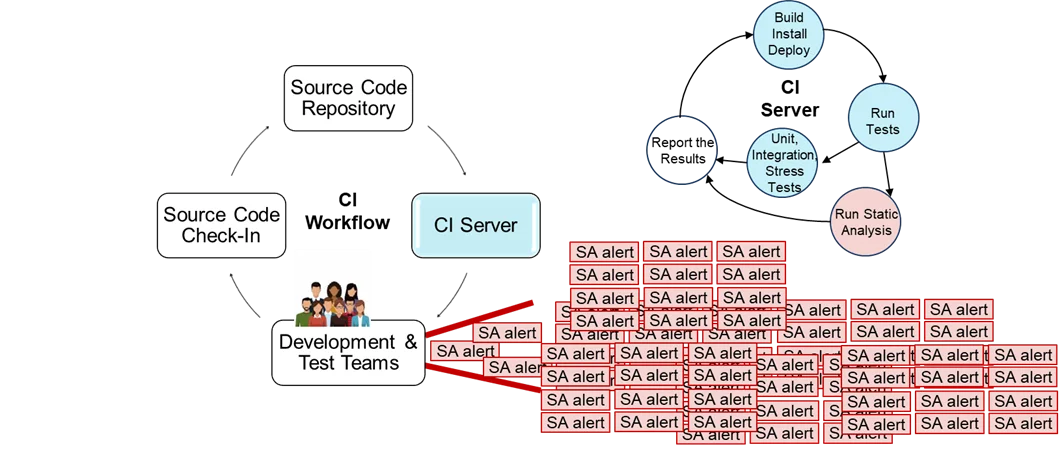

Figure 2 below shows a vision that integrates classifier use with CI systems.

Figure 2 shows a CI workflow, where a member of the development and test teams develops code on a new branch (or branches) that implements a new feature or a bug fix. The coder checks their source code into the repository (e.g., git commit and git push). Next, the CI server tests that code, first setting up what is needed to run the tests (e.g., creating files and folders to record logs and test artifacts, downloading images, creating containers, running configuration scripts), then starting the automated tests. In the short CI timeframes, essential tests must be run, including

- unit tests that check that small bits of functionality continue to work,

- integration tests that check that larger parts of the system functionality continue to interact as they should, and sometimes,

- stress tests to ensure that the system performance has not become much worse.

Sometimes (but not always) static analysis is done during the CI-server testing. When it is, it produces output with many alerts. Some meta-alerts may be false positives, and all of them must (normally) be examined manually to adjudicate them as true or false positives. In very short CI timeframes, however, dealing with static-analysis alerts is of low priority for development and test teams. Any failed unit or integration test must be fixed before the new code branch can be merged with the development branch, so those are of high priority. Beyond that, there are major time pressures from the CI cycle and from the other developers or testers who need that bug fix or new feature added so that it does not block their own work or cause a merge conflict in the future.

To make use of static analysis practical during short CI builds, we

- enabled diff-based adjudication cascading in the SCAIFE DataHub, static-analysis classification that automates the handling of some results;

- enabled the user to set thresholds for classification confidence, above which the results are considered high confidence and below which they are considered indeterminate; and

- described a method by which users can focus on a small number of code-flaw conditions during CI builds in short time frames. At other CI stages, users can widen such a set of code flaws and still use SCAIFE, in a static-analysis adjudication process that takes more time to address a wider range of potential code-flaw conditions reported from static-analysis results.
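The last two items above can be illustrated with a minimal sketch. It is not SCAIFE code: the `MetaAlert` record, the `triage` function, the bucket names, and the idea of a single symmetric threshold on the classifier's probability are all assumptions made for illustration. The condition names are arbitrary example labels.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class MetaAlert:
    """Hypothetical, simplified meta-alert record (not the SCAIFE data model)."""
    meta_alert_id: int
    condition: str   # code-flaw condition label, e.g. a CWE identifier
    p_true: float    # assumed classifier probability that the flaw is real


def triage(alerts: List[MetaAlert], threshold: float,
           focus_conditions: Set[str]) -> Dict[str, List[int]]:
    """Bucket meta-alerts for a short CI build.

    Alerts outside the focus set are deferred to a later, slower stage.
    Within the focus set, a prediction counts as high confidence only if
    the classifier's confidence reaches the user-set threshold; otherwise
    the alert is indeterminate and left for manual review.
    """
    if not 0.5 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0.5 and 1.0")
    buckets: Dict[str, List[int]] = {
        "expected-true": [], "expected-false": [],
        "indeterminate": [], "deferred": [],
    }
    for alert in alerts:
        if alert.condition not in focus_conditions:
            buckets["deferred"].append(alert.meta_alert_id)
            continue
        # Confidence in whichever label the classifier favors.
        confidence = max(alert.p_true, 1.0 - alert.p_true)
        if confidence < threshold:
            buckets["indeterminate"].append(alert.meta_alert_id)
        elif alert.p_true >= 0.5:
            buckets["expected-true"].append(alert.meta_alert_id)
        else:
            buckets["expected-false"].append(alert.meta_alert_id)
    return buckets
```

In this sketch, widening `focus_conditions` at a later CI stage moves alerts out of the deferred bucket without changing how the threshold is applied.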

The DataHub Module API provides a CI endpoint that automates analysis using SCAIFE if a package is configured to utilize CI integration. Configuring a package for CI integration means that the DataHub Module will directly connect to a git-based version-control system to analyze the source code used in the SCAIFE application. After static analysis runs on the source code, the results are sent to the DataHub API to begin automated processing with SCAIFE.
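As a rough illustration of what a CI job might hand to such an endpoint, the sketch below only builds an HTTP request and does not send it. The route `/packages/<id>/ci-update`, the JSON field names, and the bearer-token header are placeholders invented for this example, not the real DataHub API. The published SCAIFE API YAML specification is the authority on the actual route and payload.

```python
import json
import urllib.request


def build_ci_update_request(datahub_url: str, token: str, package_id: str,
                            commit_hash: str, tool_name: str,
                            tool_output: str) -> urllib.request.Request:
    """Build (but do not send) a POST carrying one CI update.

    Every path segment, field name, and header below is a hypothetical
    stand-in; consult the SCAIFE API specification for the real ones.
    """
    payload = {
        "git_commit_hash": commit_hash,   # identifies the code version analyzed
        "tool_name": tool_name,           # e.g. "rosecheckers"
        "tool_output": tool_output,       # raw static-analysis output text
    }
    url = f"{datahub_url.rstrip('/')}/packages/{package_id}/ci-update"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",   # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


# A CI job would then send it with urllib.request.urlopen(request).
```

Separating request construction from sending keeps the sketch testable without a running DataHub container.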

The DataHub updates per-project data, including all information about files and functions; the sets of static-analysis alerts and meta-alerts; and adjudication cascading. Adjudication cascading involves matching static-analysis results from the previous code version with new static-analysis results for the new code version. A matching meta-alert “cascades” any previous manual adjudication of true or false to the new meta-alert and sends the updated project data to the SEI CERT Division's SCALe (Source Code Analysis Laboratory) tool. SCALe is a graphical user interface (GUI) front end for the SCAIFE system (shown at the top of Figure 1 above) that auditors use to export project-audit information to a database or file.
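The idea behind diff-based cascading can be sketched for a single file as follows. This is a simplified illustration and not the DataHub's matching algorithm: it assumes a meta-alert is identified by a line number and a condition label, uses Python's `difflib` to find unchanged lines, and the function names and the "unknown" verdict are hypothetical.

```python
import difflib
from typing import Dict, List, Tuple

Alert = Tuple[int, str]  # (1-based line number, code-flaw condition label)


def map_unchanged_lines(old_lines: List[str],
                        new_lines: List[str]) -> Dict[int, int]:
    """Map old line numbers to new line numbers for lines the diff kept."""
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    mapping: Dict[int, int] = {}
    for tag, i1, i2, j1, _j2 in matcher.get_opcodes():
        if tag == "equal":
            for offset in range(i2 - i1):
                mapping[i1 + offset + 1] = j1 + offset + 1
    return mapping


def cascade(old_verdicts: Dict[Alert, str], new_alerts: List[Alert],
            line_map: Dict[int, int]) -> Dict[Alert, str]:
    """Carry earlier manual verdicts to matching alerts in the new version.

    An old verdict cascades only if its line survived unchanged and the new
    version reports the same condition at the mapped line. Anything else is
    marked "unknown" and still needs adjudication.
    """
    carried = {
        (line_map[line], condition): verdict
        for (line, condition), verdict in old_verdicts.items()
        if line in line_map
    }
    return {alert: carried.get(alert, "unknown") for alert in new_alerts}
```

For example, if a header line is inserted at the top of a file, an alert previously adjudicated on line 2 matches the same alert reported on line 3 of the new version and inherits its verdict, while an alert on a newly added line stays unadjudicated.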

We provide variations of the demo tests, to enable users to run the type of CI-SCAIFE demo test appropriate to their systems and testers. The different test version instructions are for testers who

- have their own CI systems and git code-repository servers,

- have git code-repository servers but no CI, or

- don't have access to a CI or git code-repository server.

There are four different types of demos that users can run:

1. If the user has a CI system and wants to fully exercise the demo, this version includes use of a CI server, a git repository, and the Rosecheckers static-analysis tool.

2. For users who have only a few minutes, this is the fastest demo, where a script does most of the steps: Users follow the steps shown here: Demo with Completely Automated Demo Script. The script uses the preset data with two code versions and Rosecheckers output that is provided for each version of source code, and the script itself creates a local git repository. Code-terminal output explains the significance of what happens at steps of the demo, verifies counts of meta-alerts for both versions of the codebase, and explains the adjudication-cascading results.

3. To exercise the fastest non-scripted demo requiring the smallest amount of effort, users use the preset data at Demo without using a CI Server and follow Approach 1 using the Rosecheckers output that is provided for each version of source code. This approach has the user edit a provided shell script to specify a token, URL, git commit hash, and other data gathered during specification of the CI Project in SCAIFE while following the instructions. The user then executes the shell script.

4. To exercise the second-fastest non-scripted demo requiring a bit more effort than (3), users use the preset data in Demo without using a CI Server and follow Approach 2 using the Rosecheckers output that is provided for each version of source code. This approach uses the DataHub container’s Swagger user interface, plus the static-analysis results, to submit static-analysis results to SCAIFE.

In creating the demos, we also published the Docker container image for the Rosecheckers static-analysis tool (accessible with command-line install command: docker pull ghcr.io/cmu-sei/cert-rosecheckers/rosebud:latest) and the code at https://github.com/cmu-sei/cert-rosecheckers, with an updated README file. Our project team created the new Docker-container-image publication, which enables would-be users to quickly and easily start to use Rosecheckers with a relatively low-bandwidth download and fast container start on any base machine. We published it to enable this tooling to be easy to access and set up as fast as possible, for our collaborators to run and test some versions of our SCAIFE-CI demo more quickly.

Status of Release and Planned Next Steps

We are in the process of sharing the full SCAIFE system with DoD organizations and DoD contractors so that we can receive feedback and review. We also provide access to SCALe (the user-interface (UI) module, one of five SCAIFE modules) and the SCAIFE API to the general public at https://github.com/cmu-sei/SCALe/tree/scaife-scale. We welcome test and review feedback, as well as potential collaborations! DoD and DoD contractor organizations interested in testing SCAIFE, please contact us and we will get you a copy of the full SCAIFE system.

Additional Resources

Download the scaife-scale branch of SCALe: https://github.com/cmu-sei/SCALe/tree/scaife-scale.

Examine the YAML specification of the latest published SCAIFE API version on GitHub.

Read the SEI blog post, Release of SCAIFE System Version 1.0.0 Provides Full GUI-Based Static-Analysis Adjudication System with Meta-Alert Classification.

Read the SEI blog post, Managing Static Analysis Alerts with Efficient Instantiation of the SCAIFE API into Code and an Automatically Classifying System.

View the presentation, Static Analysis Classification Research FY16–20 for Software Assurance Community of Practice.

Read the SEI blog post, A Public Repository of Data for Static-Analysis Classification Research.

Read the SEI blog post, Test Suites as a Source of Training Data for Static Analysis Alert Classifiers.

Read the SEI blog post, SCALe v. 3: Automated Classification and Advanced Prioritization of Static Analysis Alerts.

Read the SEI blog post, An Application Programming Interface for Classifying and Prioritizing Static Analysis Alerts.

Read other SEI blog posts about static analysis alert classification and prioritization.

Read other SEI blog posts about DevSecOps.

Watch the SEI webinar, Improve Your Static Analysis Audits Using CERT SCALe’s New Features.

Read the Software QUAlities and their Dependencies (SQUADE, ICSE 2018 workshop) paper, Prioritizing Alerts from Multiple Static Analysis Tools, Using Classification Models.

View the presentation, Challenges and Progress: Automating Static Analysis Alert Handling with Machine Learning.

View the presentation (PowerPoint), Hands-On Tutorial: Auditing Static Analysis Alerts Using a Lexicon and Rules.

Watch the video, SEI Cyber Minute: Code Flaw Alert Classification.

Look at the SEI webpage describing our research on static-analysis alert automated classification and prioritization.

Read other SEI blog posts about SCALe.

More By The Author

Release of SCAIFE System Version 1.0.0 Provides Full GUI-Based Static-Analysis Adjudication System with Meta-Alert Classification

• By Lori Flynn

Part of a Collection

Collection of Static Analysis Assets
