Don't Incentivize the Wrong Behaviors in Agile Development

All too often, organizations collect certain metrics just because those are the metrics that they've always collected. Ordinarily, if an organization finds the metrics useful, there is no issue. Indeed, the SEI has long advocated the use of metrics to support the business goals of the organization. However, consider an organization that has changed from waterfall to Agile development; all metrics related to development must be reconsidered to determine if they support the Agile development goal.

It is widely understood that, in software development, metrics incentivize behavior. Professionals commonly want to satisfy management by showing improvement in whatever is being measured. The sometimes forgotten corollary is that when project managers place too much emphasis on a metric and on efforts to improve it, other equally important areas of work receive less attention, which degrades the quality of the work. Another unfortunate result is that an incentive to solve a problem can produce unintended consequences (e.g., the cobra effect).

In this post--the first of two posts on the use of metrics to incentivize behaviors in Agile development--we start with a cautionary tale. We use metrics as incentives to induce desired outcomes, but if we don't consider the metrics carefully enough, the end result may not be what we expected. To demonstrate how a metric can create the wrong behavior, we will focus on defect phase containment metrics. The purpose of the defect phase containment metric is to encourage teams to catch and fix defects before they escape into later phases where, data tells us, the defects are many times more expensive to fix.

Defect Phase Containment Metrics

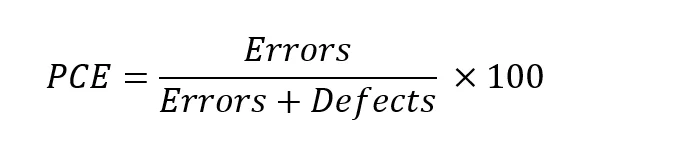

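Phase containment of defects is often measured in terms of phase containment effectiveness (PCE), which is typically defined, per phase, as the number of errors divided by the sum of errors and defects, expressed as a percentage:

$$\mathrm{PCE} = \frac{\text{Errors}}{\text{Errors} + \text{Defects}} \times 100\%$$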

This definition has very specific meanings: an Error is a fault that is generated, detected, and corrected all in the same phase, and a Defect is a fault that is generated in one phase and detected (and we hope corrected) in a later phase.
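To make the error/defect distinction concrete, here is a minimal Python sketch of how PCE could be computed from a list of fault records. It assumes the commonly used ratio PCE = errors / (errors + defects), taken per originating phase and expressed as a percentage. The `Fault` record, the phase names, and the sample data are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fault:
    """Hypothetical fault record: where a fault originated and where it was found."""
    introduced: str  # phase in which the fault was generated
    detected: str    # phase in which the fault was detected


def pce(faults, phase):
    """Return PCE (as a percentage) for faults that originated in `phase`.

    Assumes PCE = errors / (errors + defects) * 100, where an error is
    detected in its originating phase and a defect is detected later.
    Returns None when no faults originated in the phase.
    """
    originated = [f for f in faults if f.introduced == phase]
    if not originated:
        return None
    errors = sum(1 for f in originated if f.detected == phase)
    return 100.0 * errors / len(originated)


# Illustrative data only: three design faults caught in design (errors),
# one design fault that escaped to integration (a defect).
faults = [
    Fault("design", "design"),
    Fault("design", "design"),
    Fault("design", "design"),
    Fault("design", "integration"),
    Fault("implementation", "implementation"),
]

print(pce(faults, "design"))  # 75.0
```

Note that the calculation depends entirely on how each fault's originating and detecting phases are recorded, which is exactly where questions of interpretation arise.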

This careful distinction, however, leaves open questions of interpretation. For example, consider a fault that originated in the development phase of one component but can only be detected in the integration phase because it relies on interaction with some other system component. Should this fault be considered a defect even though it couldn't have been detected in the phase when it originated?

This distinction matters most when PCE is reported by a contractor to the Program Management Office and is used as part of a reward structure. An alternative interpretation would be that a fault is considered an error if it is corrected in the same phase in which it was detected, provided that it couldn't have been detected in any earlier phase. However, this alternative has the potential to postpone the search for faults until late phases to reduce the value of the PCE.

Four Behaviors Resulting from Phase Containment Metrics

The use of phase containment metrics in Agile development places too much emphasis on software development phases, which are the underpinning of the waterfall development method. Agile development does not rely on the phases dictated by waterfall development (e.g., a requirements phase, an architecture phase, a design phase). Moreover, a phase-based approach is risky and can invite failure, as noted in Managing the Development of Large Software Systems. Our concern is that the use of phase containment metrics incentivizes the four unproductive behaviors outlined in the remainder of this section.

Focus on Phase-Based Development. Clearly, a metric that is focused on a phase-based development model will lead to development in phases. However, phase-based development without redefinition of phases is incompatible with Agile development. Moreover, it has been documented many times that a phase-based approach is no predictor of success.

Phases are typically defined in terms of requirements, architecture, design, implementation, integration, testing, and operations. In an Agile development, the activities implied by these phases still occur, just not in single phases. They can occur continuously and even simultaneously. For example, if development has adopted a continuous integration mindset, then integrations occur frequently--perhaps many times per day--and are considered a part of implementation. Moreover, if development also includes automated testing then much of the testing phase becomes part of implementation. As the development cycle time reduces, increasingly smaller pieces of the system are defined, architected, designed, implemented, integrated, and tested. Consequently, the relative cost of collecting and reporting on phase-based metrics increases with respect to development cost.

Even the phase names become problematic from an Agile perspective. There isn't a one-to-one mapping from waterfall phase names to Agile terminology. For example, requirements definition is a typical waterfall phase and might be thought to map to Agile backlog grooming, but the latter involves elements of architecture and design.

Gaming a Phase-Based Metric. Because PCE is expressed as a ratio, its value may be raised either by increasing the number of errors detected in a given phase or by reducing the number of defects detected in later phases. PCE alone doesn't indicate which of these behaviors has occurred. Clearly, the desired behavior is a reduction in the number of defects discovered in later phases. However, an organization that wishes to show improvement in the value of PCE over time can simply classify more faults as errors, or be more careless in implementation as long as unit testing will uncover the resulting errors, a behavior memorialized by Scott Adams in a Dilbert cartoon.
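The arithmetic behind this gaming can be sketched in a few lines of Python. The example assumes the commonly used ratio PCE = errors / (errors + defects), expressed as a percentage. The function name `pce_from_counts` and all counts are hypothetical numbers chosen for illustration, not data from any project.

```python
def pce_from_counts(errors, defects):
    """Assumed PCE formula: errors / (errors + defects), as a percentage."""
    return 100.0 * errors / (errors + defects)


# Hypothetical baseline: 30 faults caught in phase, 10 escaped to later phases.
baseline = pce_from_counts(errors=30, defects=10)

# Desired behavior: better in-phase practice means only 5 faults escape.
fewer_escapes = pce_from_counts(errors=30, defects=5)

# Gaming by reclassification: 5 of the 10 escaped faults are relabeled as errors.
reclassified = pce_from_counts(errors=35, defects=5)

# Gaming by carelessness: 30 extra easy-to-catch faults are introduced and
# then caught by unit tests, while the same 10 faults still escape.
careless = pce_from_counts(errors=60, defects=10)

for name, value in [
    ("baseline", baseline),
    ("fewer escapes", fewer_escapes),
    ("reclassified", reclassified),
    ("careless", careless),
]:
    print(f"{name:>14}: {value:.1f}%")
```

Under these assumed numbers, the careless scenario yields the same PCE as the genuinely improved one, and reclassification scores higher still, even though twice as many faults escape in the careless case. The ratio by itself cannot tell these situations apart.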

Emphasis on Gates Between Phases. As discussed earlier in this post, the preferable method to increase the value of PCE is to reduce the number of defects found in subsequent phases by detecting and correcting errors in a single phase. However, such an approach leads to the creation of gates at the end of each phase to ensure that every possible fault has been detected and corrected.

It is an illusion to think that perfection can be achieved at each phase. As each phase is lengthened by the efforts to detect and correct errors, it is increasingly likely that the environment for which the system is intended will change. As the environment changes, the requirements for the system itself often will, too. Consequently, errors and defects are created simply by focusing on minimizing the number of defects that escape from a phase.

Perpetuation of False Positive Feasibility. Until the system or parts of the system are available for users to see and, preferably, experiment with, there is only an assumption that the system being constructed will satisfy the needs of the users or even perform as expected. In other words, everyone involved in the development is positive that the system being developed will behave appropriately, but no one can be sure that it will do so. This belief in the correctness of the work to date is called "false positive feasibility." Sure, there's a chance that the system may be exactly what is wanted, but there's also a chance that it won't be! The longer we wait before getting tangible pieces of the system in front of users, the longer we perpetuate this false positive feasibility.

Wrapping Up and Looking Ahead

As this post illustrates, the use of phase containment metrics will lead to behaviors incompatible with an Agile development process. In the next post in this series, we will present three alternative containment metrics for consideration in Agile development.

Additional Resources

Read the SEI Blog Post Agile Metrics: Seven Categories by Will Haye
