Agile Metrics: A New Approach to Oversight

There's been a widespread movement in recent years from traditional waterfall development to Agile approaches in government software acquisition programs. This transition has created the need for personnel who oversee government software acquisitions to become fluent in metrics used to monitor systems developed with Agile methods. This post, which is a follow-up to my earlier post on Agile metrics, presents updates on our Agile-metrics work based on recent interactions with government programs.

Opportunities and Challenges of Transitioning from Waterfall to Agile

Agile methods have enabled program offices to deliver software products more rapidly and in smaller increments than is customary in the environments in which they operate. Agile development approaches are becoming more prominent in government settings, and they create opportunities for rapid deployment of new capabilities. However, Agile approaches also pose challenges to traditional government oversight and management practices. For example, government acquirers often lack experience with the metrics required to assess progress, so they struggle to gain the insight needed. In particular, their challenge is to meet the needs of large-scale program management without disrupting the practices necessary for a team to succeed using Agile methods.

Changing the oversight approach in Agile settings means asking different questions, on a different cadence, than oversight organizations have asked in the past. This leads to different measurement and reporting approaches. Agile methods emphasize an empirical approach to software, so Agile metrics focus on demonstrable results. I summarized in an earlier post the findings from a technical note that we published on this topic: Agile Metrics: Progress Monitoring of Agile Contractors, which I co-authored with Suzanne Miller, Mary Ann Lapham, Eileen Wrubel, and Timothy A. Chick. In that technical note, we described three key views of Agile team metrics--velocity, sprint burn-down chart, and release burn-up chart--that are typical of most team-level implementations of Agile methods.
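As a rough illustration of how those three team-level views relate to one another, the Python sketch below derives each from story-point counts. The function names, the three-sprint averaging window, and the sample numbers are hypothetical and chosen only for illustration; they are not taken from the technical note, and teams define these measures in slightly different ways.

```python
from itertools import accumulate


def velocity(points_per_sprint, window=3):
    """Average story points completed over the most recent `window` sprints."""
    recent = points_per_sprint[-window:]
    return sum(recent) / len(recent)


def release_burn_up(points_per_sprint):
    """Cumulative story points delivered at the end of each sprint."""
    return list(accumulate(points_per_sprint))


def sprint_burn_down(committed_points, points_done_per_day):
    """Story points still open at the end of each day of one sprint."""
    remaining = []
    left = committed_points
    for done in points_done_per_day:
        left -= done
        remaining.append(left)
    return remaining


# Hypothetical data: four finished sprints, plus one five-day sprint in detail.
completed = [18, 22, 20, 24]
print("velocity:", velocity(completed))
print("release burn-up:", release_burn_up(completed))
print("sprint burn-down:", sprint_burn_down(20, [0, 5, 3, 7, 5]))
```

All three views rest on the same raw observation, completed work that has been demonstrated, which is why they fit the empirical emphasis described above.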

This prior work led us to identify seven means--software size, effort and staffing, schedule, quality and customer satisfaction, cost and funding, requirements, and delivery and progress--of monitoring progress in Agile developments that help programs account for the regulatory requirements that are common in the DoD. Our work with major DoD acquisition programs has allowed us to shift our focus to addressing these issues at scale rather than just at the team level. The remainder of this blog posting is focused on program-level measurement.

Failing According to Plan

Most approaches to metrics are dominated by a "conformance to plan" perspective in collecting and evaluating data. Stakeholders for software development legitimately care about questions such as

- Do deliverables arrive on time?

- Are we spending funds at the planned pace?

- Does the product work as specified in the requirements?

- ... and the list goes on.

All the questions above presume that the baseline requirements, budget, and schedule are (and remain) the correct targets for the program. When looking at most major acquisition programs in the DoD portfolio, however, we see episodic re-baselines for requirements, budget, and schedule throughout the life of the program. Managing a program through actions that force conformance to an outdated plan driven by requirements that have been supplanted by evolving user needs (and technological innovation) seems unwise. Yet the metrics we typically use drive us in that very direction!

Rather than treating the plan as a goal unto itself, successful Agile programs satisfy legitimate stakeholder questions while maintaining the focus on outcomes that the plan was devised to achieve. Adoption of an empirical process based on incremental delivery of working software enables a near-term view of end results. This iterative approach is a stark contrast to the exclusive reliance on proxies of progress against long-range targets, which is common in traditional waterfall approaches. Useful metrics for Agile organizations have a tactical focus, to motivate near-term and local actions that enhance outcomes while addressing the long-term roadmap and big-picture view of performance goals for the program.

Focus on Flow, Not Utilization

In our work with large acquisition programs, we have seen this emphasis on "conformance to plan" drive actions that undermine Agile success. For example, when a billion-dollar program is behind schedule, program managers' instincts often lead them to focus on the details, working to ensure that each task completes on time (according to the plan) and that resources are 100 percent utilized (even pressing people to go beyond normal capacity).

However, interventions intended to limit variation at the detailed level may over-correct for the uncertainty that is intrinsic in knowledge-intensive work. Large programs are an aggregation of many moving parts, with some tasks completing early relative to their estimates and others completing late. Conformance to plan at the program level is rarely achieved by increasing the fidelity of conformance to plan at the lowest levels. When a program is in trouble, our instincts lead us to micromanage the small tasks, hoping to get the whole program on track. In practice, however, no matter how well we plan, it is unrealistic to expect that each and every 15-minute task can be made to complete as prescribed by the plan.
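The way early and late tasks offset one another in aggregate can be sketched with a small simulation. This is a toy model under loud assumptions that do not come from any program data: every task is estimated at one unit of effort, actual effort is drawn uniformly between 0.5 and 1.5 units, and tasks are independent. Real tasks are coupled and their overruns are usually skewed, so the offsetting effect in practice is weaker than shown here.

```python
import random
import statistics


def spread_of_actual_to_plan(num_tasks, trials=2000, seed=1):
    """Standard deviation of (actual effort / planned effort) across trials.

    Assumption: each task is planned at 1.0 unit and its actual effort is an
    independent uniform draw between 0.5 and 1.5 units.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    ratios = []
    for _ in range(trials):
        actual = sum(rng.uniform(0.5, 1.5) for _ in range(num_tasks))
        ratios.append(actual / num_tasks)  # planned total equals num_tasks
    return statistics.pstdev(ratios)


single = spread_of_actual_to_plan(1)
program = spread_of_actual_to_plan(100)
print(f"spread for a single task:       {single:.3f}")
print(f"spread for a 100-task aggregate: {program:.3f}")
```

Under these assumptions, individual tasks routinely miss their estimates by a wide margin while the aggregate stays much closer to plan, which suggests that forcing every small task to hit its estimate is not what keeps the whole on track.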

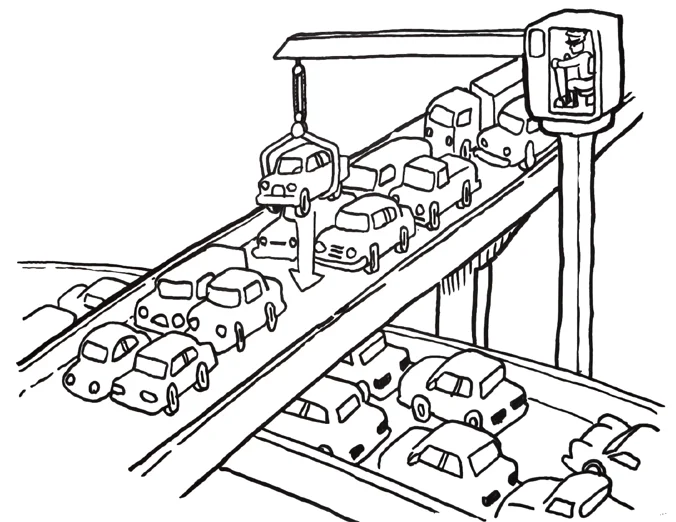

This deterministic treatment of an intrinsically variable process may be an artifact of our reliance on manufacturing examples to explain how software project management works. We behave as if there is a strict algebraic relationship between the micro and the macro, without appreciation for the coupling among the technical tasks or the wide range of engineering talents among the staff (among other things). Packing the schedules of the staff and asking them to multi-task under pressure does not result in speeding up the program. Consider the intervention depicted below:

This misplaced focus on utilization is almost certain to yield longer cycle times and introduce unwanted delay. Many software-development personnel slice their time into ever smaller intervals in an effort to accommodate the demand to get more done with less. In this new form of gridlock, we convert the long death-march programs of the past into a rapid succession of two-week endurance races we call sprints; they are actually marathons with every waking hour accounted for by a tyrannical focus on meeting detailed estimates.

The traffic lights you see at the on-ramp for some of our most crowded highways are there to promote traffic flow by introducing a small gap between the cars. Utilization, after a certain point, works against flow. While commercial parking lot operators strive for 100 percent utilization of pavement, we have a different goal for our highways. Evaluating development organizations exclusively by how well they meet their detailed estimates makes the project plan a goal unto itself, rather than focusing on successfully producing the capability under development.
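One way to put rough numbers on the highway analogy is the textbook single-server queue (M/M/1), in which the average time an item spends in the system equals the hands-on work time divided by one minus utilization. Treating a development team as such a queue is a simplifying assumption made here for illustration, not a model proposed in this post, and the function name is hypothetical.

```python
def cycle_time_multiplier(utilization):
    """Mean time in an M/M/1 queue as a multiple of the hands-on work time.

    Assumption: work arrives at random and is served one item at a time,
    so the mean time in system is service_time / (1 - utilization).
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be at least 0 and below 1")
    return 1.0 / (1.0 - utilization)


for rho in (0.50, 0.80, 0.90, 0.95, 0.99):
    multiplier = cycle_time_multiplier(rho)
    print(f"{rho:.0%} utilized: cycle time is about {multiplier:.0f}x the work time")
```

In this model, cycle time is about twice the work time at 50 percent utilization and about ten times at 90 percent, and it grows without bound as utilization approaches 100 percent.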

Focus on Outcomes, Not Project Management

We are seeing government personnel who oversee Agile programs increase their focus on the outcomes to be achieved through use of the software system. Evaluating the minimum viable products demonstrated by development teams requires a robust mission focus. Measures of the systems' performance (e.g., key performance parameters) have always been important to government acquirers, though their evaluation is often relegated to major milestones where they are examined retrospectively.

The timely delivery of prioritized capabilities, in accordance with the roadmap that governs developers' priorities, should be emphasized. Proxies for project-management performance, such as cost and schedule, are important, but our attention to them must not overshadow the importance of meeting operational needs.

Successful Agile organizations have found that managing teams by providing unambiguous short-term priorities--accompanied by a clear focus on acceptance criteria for the work--enables software-development professionals to pursue their chosen profession, rather than having to focus exclusively on matching the parameters found in the project plan. The intent of Agile is to enable short learning cycles so that developers can pivot, when needed, to move in a direction that is more beneficial to the operational needs of system users.

Getting closer to what is needed in a given solution requires a keen sense of the benefit that government seeks to achieve from a system. Achieving this goal, in turn, requires that government acquisition managers ask different questions about what they are getting from their software-development efforts, with an emphasis on mission needs and an unwavering focus on product quality. To take full advantage of the benefits of Agile development, government acquirers must align the oversight with iterative delivery of small batches of work--evaluated against mission needs, not budgets and schedules.

Wrapping Up and Looking Ahead

As we continue to serve those in the defense department working on the transition to Agile approaches, we will report lessons and insights they provide through outlets like this blog. In the near term, the influence of architecture--especially concepts relating to Open Systems--is starting to have a more prominent role in optimizing Agile approaches. In the long term, we are seeing a need to focus beyond software to integrate hardware and other aspects of the program with iterative and incremental approaches at the system level.

Additional Resources

Read my earlier post on Agile metrics, Agile Metrics: Seven Categories.

Download our technical note, Agile Metrics: Progress Monitoring of Agile Contractors.

Read our congressional testimony on the current state of the Social Security Administration's (SSA) Information Technology (IT) modernization plan and best practices for IT modernization, including oversight of agile software development.

Watch my webinar, Three Secrets to Successful Agile Metrics.
