The Seven Virtues of Reconciling Agile and Earned Value Management (EVM)

As more government acquisition programs adopt an Agile development methodology, there are more opportunities for Agile to interact with and perhaps contend with earned value management (EVM) principles. EVM has been a mainstay within the U.S. government acquisition community for longer than Agile has, but both are firmly entrenched in policy that mandates their use. While not all EVM programs are Agile and not all Agile programs use EVM, it is becoming more common that government programs use both methods. Professionals within the acquisition community are usually more comfortable with one methodology than they are with the other, and some even perceive them to be at odds. This perception is perhaps forgivable upon examination of the fundamental assumptions for both practices. This blog post will discuss the interactions between Agile and EVM.

To identify potential disconnects and to help acquirers gain maximum benefit from these methodologies, we discuss in this post some of the differences between Agile and EVM and explore ideas for how to make them work well together. We summarize the main source of incompatibility, perceived or otherwise, as follows:

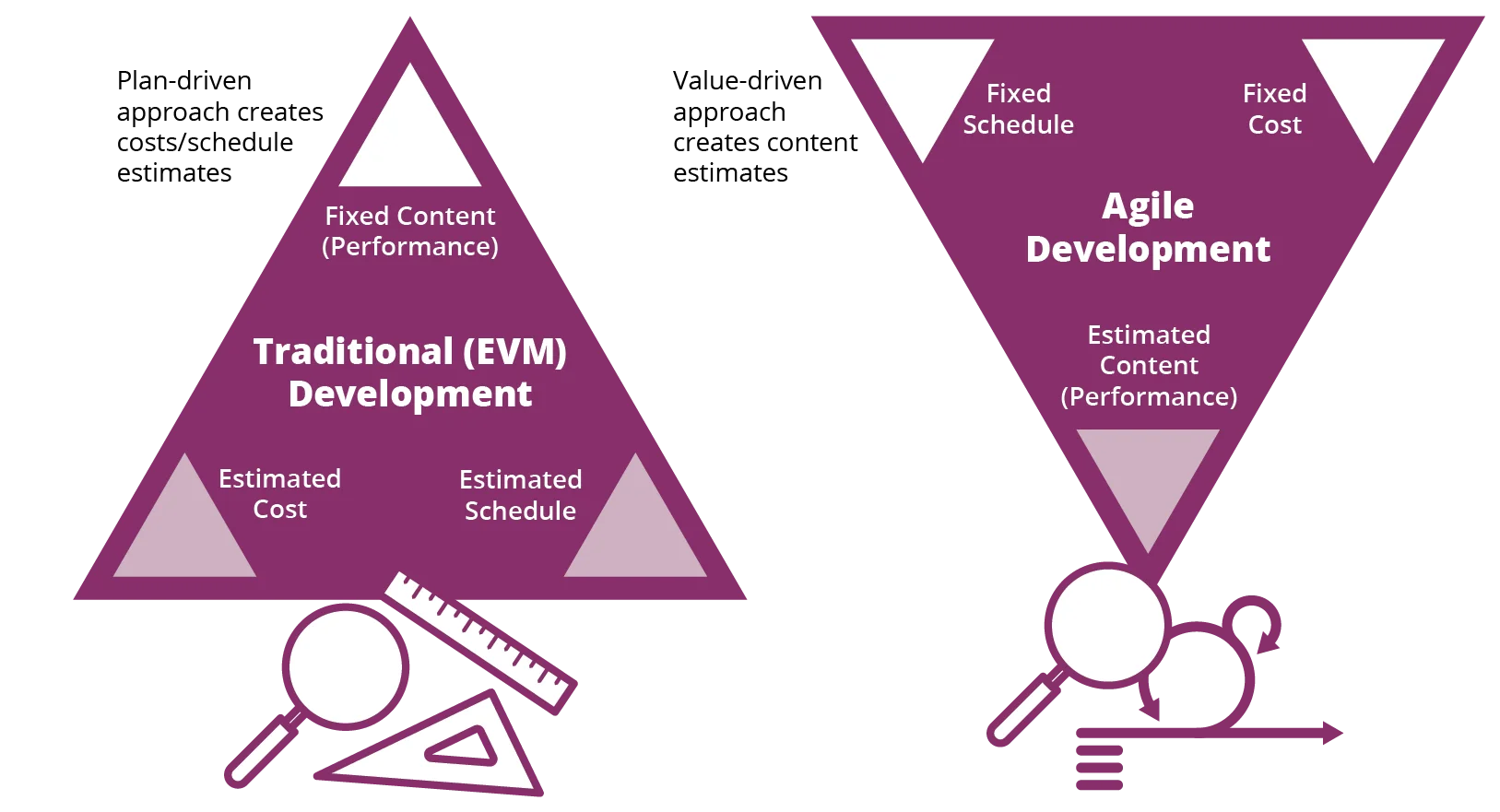

- EVM attempts to baseline the project management triangle of cost, schedule, and performance early in a program’s lifecycle. The capability evolution (performance) of the system being acquired is mapped over time (schedule) with the labor and materials (cost) needed to perform the development. These factors are captured in the performance measurement baseline (PMB). The program’s earned value management system (EVMS) assesses the actual cost and schedule to achieve the stated performance evolution against the predicted cost and schedule that’s baselined in the PMB. Essentially, with this methodology, the solution is fixed early and the EVMS assesses value by how well the program progresses to that solution based on how much cost and schedule it takes to achieve it.

- Agile inverts this PM triangle because it utilizes cadence-based planning instead of the capability-based planning used by EVM. The iterative and fixed timeboxes that Agile employs to develop the system being acquired welcome evolutionary changes in the system’s performance because learning is expected over time. With Agile, cost and schedule are relatively fixed within the iterative timeboxes, and the solution that emerges is assessed for value.

EVM and Agile both greatly influence how a program conducts operations and informs its decisions. Agile, though, is more concerned with the process, and EVM is more concerned with measuring the performance of that process, in terms of cost and schedule. These methods could and should support each other. Yet, anecdotal evidence captured in SEI engagements with programs reveals that many programs are struggling to follow Agile development processes and accurately measure their progress with EVM.

Acknowledging that both EVM and Agile techniques are usually tailored to meet the needs of each program, we will not take a one-size-fits-all approach in this post to solve this dilemma. (It's worth acknowledging that Agile concepts also apply well to ongoing evolution of a deployed system, where continuous delivery models are used. In those settings, constructs that derive from Kanban and eXtreme Programming will be more prominent than the familiar constructs of Scrum. Also, in those settings, EVM may not be used, or may be applied very differently.) Instead, we will highlight where SEI staff members have observed some of the more problematic interactions. The sections that follow describe real-world considerations that acquisition professionals should be aware of when working with programs and projects that use both Agile and EVM. Ideally, these considerations would be resolved early in a program’s lifecycle, but they can be encountered throughout the program’s evolution.

Big Design Up Front (BDUF) Versus Planning as Late as Possible

Traditional acquisition professionals are biased toward planning the future of the program with as much defined granularity in the schedule as possible to support the various systems engineering technical reviews (SETRs). The SETRs function as formal and comprehensive reviews where the program is supposed to convey how well the design is understood and justify the cost and schedule to develop. This approach encourages a program to forecast a mature, long-term plan and provide the artifacts to support and defend that plan, manifesting in a plan-driven, fixed-requirements approach, often referred to as big design up front (BDUF). The EVMS measures progress against that plan, and acquirers evaluate the program’s success based on its adherence to the plan. This traditional approach, which is almost muscle memory to many in the acquisition community, can discourage program agility throughout the performance period when new information, learning, or technological advances may suggest a better but different path forward.

Conversely, the Agile method generally presumes that the program doesn’t know as much now as it will later, and not only allows but also expects solutions to evolve over time with learning. Program pivots are made so long as evolutionary changes fit within the relatively fixed cost-and-schedule guiderails. Typically, Agile will use timeboxed planning that has relatively short windows of time to learn, develop, and evaluate the solution set. There is minimal detail planning beyond the current release-planning timebox, often referred to as the program increment/planning interval (PI) or the Agile cadence release. Ultimately these two mindsets (traditional acquisition BDUF and Agile) will clash early in the program, often as the first SETR approaches.

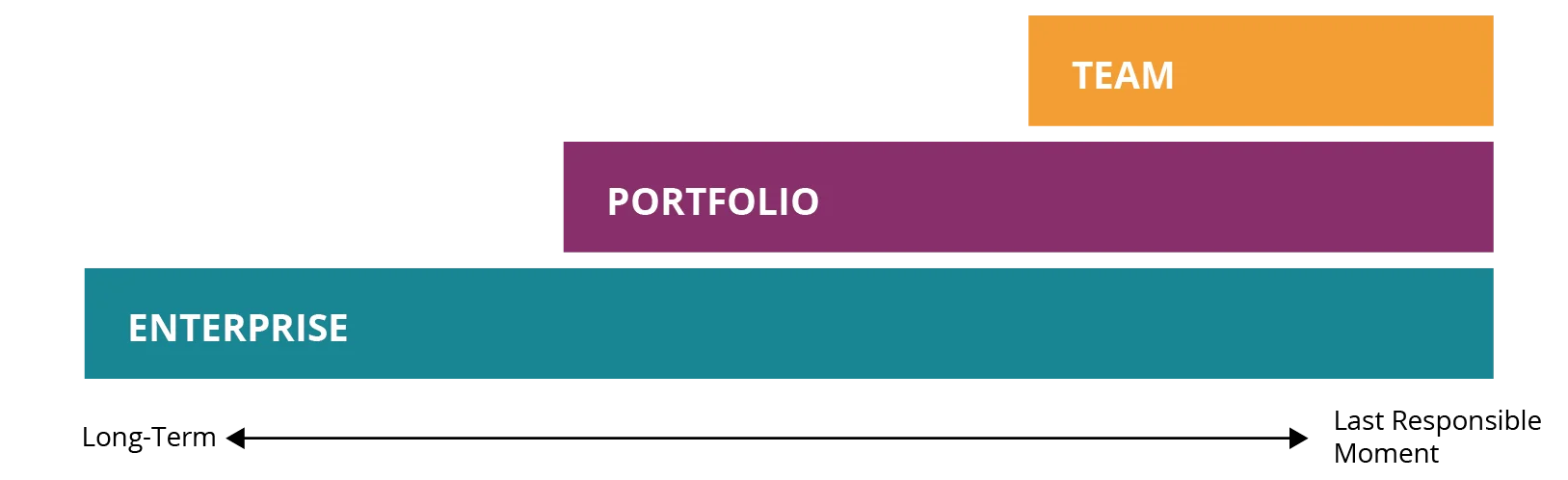

These two mindsets can be thought of as two ends of a planning continuum: big up-front (long-term) planning versus cadence-based short-term planning (sometimes referred to as rolling wave planning). A program should be aware of the pros and cons of each. The EVM mindset is often associated with a BDUF approach, and it will likely be more familiar to the organization’s professionals. But EVM is less able to accommodate the evolving solutions and requirements that are fundamental to Agile. Successful programs find a balance between these extremes: managing progress requires long-term but less-defined high-level plans together with short-term detail planning captured in the EVMS. It’s best that the program management, EVM, and engineering communities discuss this continuum early and ensure that they are synchronized to prevent confusion later.

As is usually the case, the optimal approach lies somewhere in between, and it is situational. In general, the larger the audience or the higher up in the organizational hierarchy that the decision directly affects, the sooner the decision and planning should be performed. Often this situation means that enterprise-wide planning happens before portfolio planning, and portfolio planning happens before team planning. This approach is particularly relevant with architectural planning decisions. For instance, in systems of systems, the architectural plan and vision must be sufficiently defined up front to enable teams to build compatible work. Things like communication protocols, operating systems, and timing, which affect the entire enterprise, are best determined up front. But architectural decisions that affect only a single team should be deferred until later to exploit potential tradeoffs before being documented and implemented.

Assessing Feasibility

Both EVM and Agile development place significant emphasis on assessing the feasibility of a program; however, there are significant differences in their approaches.

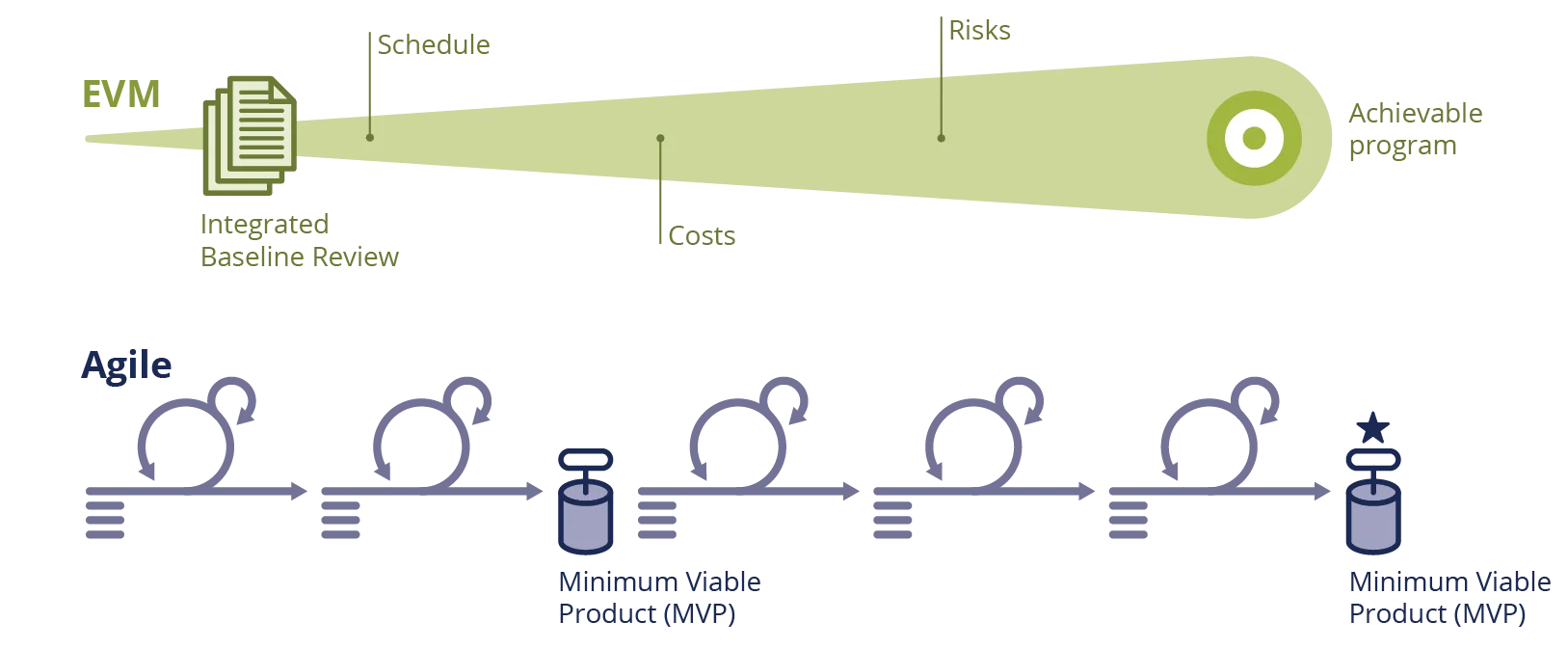

For programs that will use EVM, there is a requirement that an integrated baseline review (IBR) be conducted in which both work and organizational structures are examined in the context of schedule, budget, and other resources, as well as any known risks. The main purposes of the IBR are to identify additional risk and assess whether the baseline defines a program that can be achieved. The EVM team plays a significant role in assessing the feasibility of the program. In essence, the IBR is a forward-looking, multi-factor (e.g., cost, schedule, performance, risk) approach to assessing feasibility based on the plans developed for the program.

In contrast, Agile programs, particularly those following the Lean Startup model, focus on the development of a minimum viable product (MVP), which is software built to confirm or refute the hypothesis that the program (or some part of it) is feasible. Determining when an MVP can and should be produced is an engineering problem that depends on schedule and complexity. Since the MVP must be constructed first, feasibility is assessed in a backward-looking manner to determine whether the hypothesis was sustained.

In large, complex programs, an IBR may take place long before an MVP can be developed, particularly when the hypothesis to be tested is of a complex nature. Moreover, the IBR considers a broad range of factors whereas the typical MVP is limited to answering a smaller set of questions. The MVP, however, is a concrete demonstration, based on executing code, whereas the IBR is invariably based on projections into the future.

The two approaches are compatible with each other. For large programs that will use both Agile methods and EVM, it is likely that an IBR will be conducted as usual, though programs should consider including a demonstration of an MVP as part of the IBR (if it can be developed in time). Regardless of the presence of an MVP, the following questions should be answered no later than the IBR:

- How will the EVM and Agile structures be aligned so that the EVM coding structures [such as the work-breakdown structure (WBS) and organizational breakdown structure (OBS)] are reflected in the application lifecycle management tool’s hierarchy?

- How will the Agile roadmap be synchronized with EVM artifacts such as the integrated master schedule (IMS)?

- How are the Agile backlog(s), priorities, and commitments integrated with the authorized scope?

- How will the baseline schedule be aligned with the Agile cadence-based timeboxes?

- What mechanisms will be used to reflect Agile learning in the baseline schedule?

- How will rework be handled?

How Far Down into the Hierarchy of Agile Work Does the EVMS Track?

Historically, programs have followed the BDUF method, not only for the system to be built but also for all the associated management processes. The system isn’t the only thing designed up front; so are all organizational and management structures. These organizational designs typically follow the organizational construct and often are not seen in Agile developments, though recent work in team topologies suggests mechanisms for organizing the teams according to the desired structure of the system. For both the system and the organizational structure, though, there is a tension between essentially fixed structures in a traditional development and fluid structures in an Agile development.

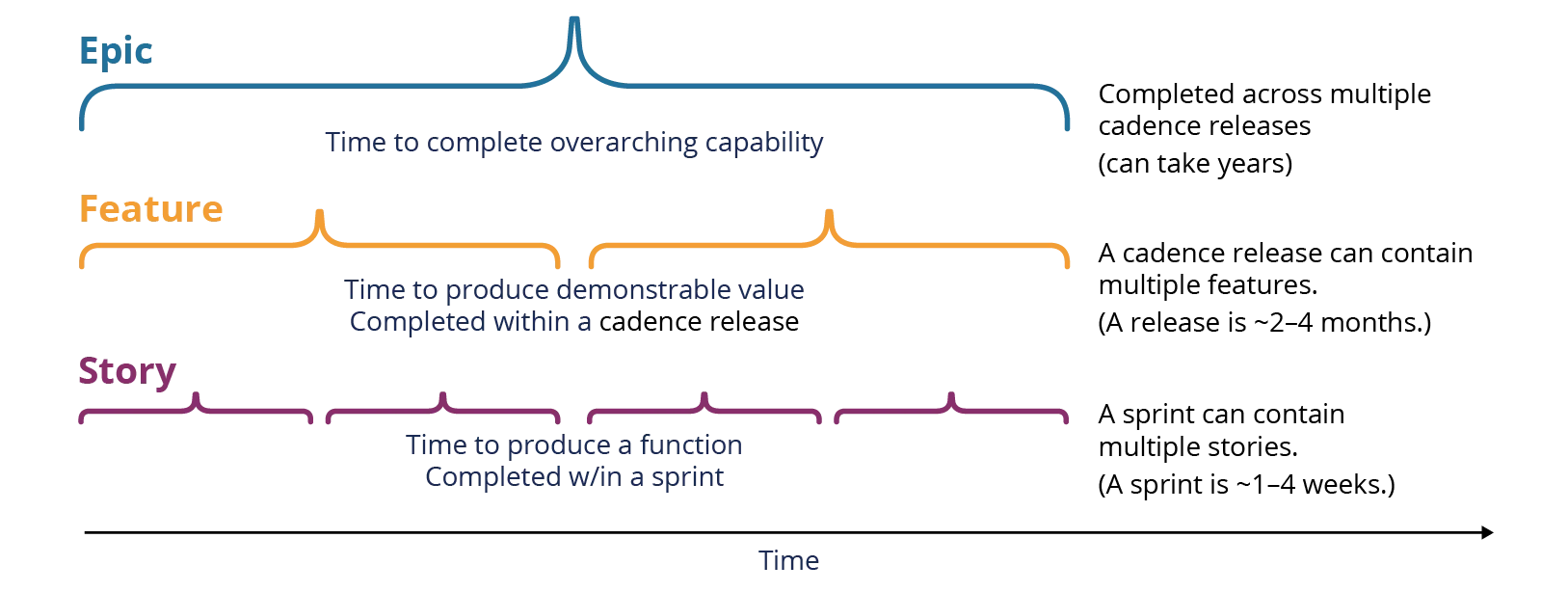

An Agile program’s development work is broken down into hierarchical categories based on their ontology. Typically, the highest levels of work, often called epics, will be the overarching capabilities or requirements that can take years to complete. The program will then break down that higher level work into smaller, more defined pieces that fit within the different timeboxed cadence releases and iterations. A commonly prescribed hierarchy would be to have epics at the top, then features, and finally stories at the lowest level.

Features are usually defined as detail planned work that fits within a program’s Agile cadence release or PI and provides demonstrable value. Stories are typically pieces of work that can be accomplished by an Agile team that fit within an iteration timebox, usually 1 to 4 weeks. However, this nominal hierarchy of epics→features→stories is often not practiced. Many programs have more than three levels of hierarchy and use different terminology and definitions for reasons unique to them. Often the terminology will evolve over a program’s development lifetime to accommodate changing business and engineering practices.

Regardless of terminology or how many levels, all Agile programs will have a breakdown of work that the EVMS will inevitably have to measure. Naturally, some may think that the EVMS should track the lowest level of work since it is usually the most defined and detail planned. However, this approach will likely be administratively burdensome and unnecessary because the lowest level of work is too detailed for the larger development context. The feature level of work (using the above paragraph’s nominal hierarchy and terminology) will almost certainly provide sufficient measurable value to the overall program requirements without having to add so many detail-planned work packages into the PMB.

The best level of tracking is to go no lower than is necessary, but low enough to gain the perspective needed for management to make the right decisions. A program will have to determine what works for them early and ensure that it can be applied uniformly. Otherwise, disconnects between the business and development team will occur.
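To make feature-level tracking concrete, the following minimal Python sketch rolls story status up to a feature, so that only one figure per feature would need to cross into the EVMS. It assumes the nominal epic, feature, story hierarchy, and it assumes the program has agreed to derive a feature's percent complete from completed story points, which is one possible convention rather than a rule. The class names, the feature name, and the point values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Story:
    points: int   # relative size assigned by the team (unitless)
    done: bool    # a story is treated as either done or not done


@dataclass
class Feature:
    name: str
    stories: List[Story] = field(default_factory=list)

    def percent_complete(self) -> float:
        """Share of the feature's story points that are done (assumed convention)."""
        total = sum(s.points for s in self.stories)
        if total == 0:
            return 0.0
        done = sum(s.points for s in self.stories if s.done)
        return 100.0 * done / total


# Hypothetical feature: the stories stay in the Agile tool, and only the
# rolled-up figure is reported at the work-package level.
login = Feature("User login", [Story(3, True), Story(5, True), Story(8, False)])
print(f"{login.name}: {login.percent_complete():.0f}% complete")
```

In this sketch the individual stories never appear in the PMB; they only feed the feature-level number that management reviews.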

Resolving the Tension Between Relative and Absolute Estimation

Estimation is used by both EVM and Agile development but with different goals in mind and, consequently, different approaches. In practice neither approach assumes perfect estimates. Estimation in EVM is intended to provide management with an assessment of how long it will take and how much it will cost to build a required artifact. Consequently, these estimates are usually given in units of time and costs associated with components to be built. Earned value is then assessed by comparison of actual time and costs along with reported progress to the estimates.
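The comparison described above is commonly expressed with a few standard earned value quantities: planned value (PV), earned value (EV), actual cost (AC), and the variances and indices derived from them. The following is a minimal Python sketch for a single work package. The class, the function name, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class WorkPackage:
    name: str
    budget: float            # budgeted cost for the whole work package
    planned_fraction: float  # share of the work scheduled by the status date
    earned_fraction: float   # share of the work reported complete
    actual_cost: float       # cost incurred by the status date


def earned_value_metrics(wp: WorkPackage) -> Dict[str, Optional[float]]:
    pv = wp.budget * wp.planned_fraction  # planned value
    ev = wp.budget * wp.earned_fraction   # earned value
    ac = wp.actual_cost                   # actual cost
    return {
        "PV": pv,
        "EV": ev,
        "AC": ac,
        "CV": ev - ac,                    # cost variance (negative: over cost)
        "SV": ev - pv,                    # schedule variance (negative: behind)
        "CPI": ev / ac if ac else None,   # cost performance index
        "SPI": ev / pv if pv else None,   # schedule performance index
    }


# Hypothetical work package: half the work was planned by now,
# 37.5 percent has been earned, and 400 has been spent.
wp = WorkPackage("Feature A", budget=1000.0, planned_fraction=0.5,
                 earned_fraction=0.375, actual_cost=400.0)
metrics = earned_value_metrics(wp)
for key, value in metrics.items():
    print(key, value)
```

An index below 1.0 indicates that the work package is earning less value than planned (SPI) or less than it is costing (CPI).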

In contrast, in Agile development, estimation is used almost exclusively for assessing the feasibility of near-term (and team-specific) objectives. However, these estimates are typically divorced from any units. The team performing the estimation identifies the smallest work item then assesses all other work items relative to the smallest work item; no units are implied by the estimate. Moreover, these estimates are typically created at the time work is about to begin and not at some period in advance, as is necessary for traditional EVM.

For both EVM and Agile, the estimates are based on historical performance. In the case of EVM the history comes from past programs, whereas in Agile the history is from recent timeboxes. In theory, the Agile estimates should be more accurate because they are based on up-to-date information, but these estimates can be flawed, particularly when there is management pressure to hit schedule targets. A final consideration is that the typical EVM estimate of effort is generally created in a top-down fashion constrained by the final negotiated contract, while Agile proponents recommend that the teams develop their estimates with a bottom-up approach.

Any Agile development that is also being tracked with EVM must contend with the issue of how to convert unitless measures to unit-based measures. There are several ways this might be accomplished:

- Agreement that each point equates to some number of hours of development work–This agreement is sometimes accomplished by estimating in “ideal days” as described well by Mike Cohn in his book Agile Estimating and Planning. Although this approach is practiced frequently, there are downsides to this approach because it encourages the team to think in absolute rather than relative estimates. The human brain is good at relative estimation. As an example, consider the different cup sizes on display at the coffee shop; without knowing the absolute quantities, we can still quickly decide which size we want. Another downside is that ideal days mean that the team must estimate not only task size but also task duration, whereas relative estimation is focused solely on size. Chapter 8 of Cohn’s book is an excellent resource for more detail on this topic.

- Using a variable mapping of points to hours—This mapping could be achieved by summing up all the points associated with a piece of work and then dividing the absolute estimate produced for EVM purposes by the points to get the mapping for this piece of the work. This would require that the Agile team commit to the initial estimate of all the work, which may discourage learning as the development progresses. Further, it would be meaningless to compare story-point velocities within a team from one piece of work to the next since it would be unlikely that the ratio of hours to points would be the same on any two pieces of work.

- Simply ignoring the differences between story points and hours (or ideal days)—The preceding suggestions point out difficulties with reconciling story points and hours. The question would then arise as to the value of using two different estimation techniques that, particularly for calculation of progress (percent complete) would be unlikely to give the same answers. Policy documents typically define how to use story points to compute percent complete of a feature but give no guidance with respect to calculation of costs that would be better focused on actual hours vs. planned hours for completed work. The issue is that, for good reasons of consistency, EVM requires that cost and schedule performance indicators be based on the same data and units. Therefore, it may make sense to allow Agile teams and EVM system users to use their own estimates and not try to reconcile them outside of the context for which they were intended.
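The variable mapping described in the second option amounts to simple arithmetic. The sketch below uses hypothetical hour estimates and story points to show how an hours-per-point ratio could be derived for each piece of work, and why the ratios seldom match from one piece of work to the next. The function name and the figures are assumptions made for illustration.

```python
from typing import Sequence


def hours_per_point(evm_hours: float, story_points: Sequence[int]) -> float:
    """Divide the absolute EVM estimate by the summed relative estimate."""
    total_points = sum(story_points)
    if total_points <= 0:
        raise ValueError("work must carry at least one story point")
    return evm_hours / total_points


# Hypothetical pieces of work: EVM estimate in hours, then points per story.
feature_a = hours_per_point(400.0, [3, 5, 8, 4])  # 20 points in total
feature_b = hours_per_point(300.0, [2, 3, 5])     # 10 points in total

print(feature_a)  # 20.0 hours per point
print(feature_b)  # 30.0 hours per point
```

Because a point is worth 20 hours in one feature and 30 hours in the other, the team's story-point velocity cannot be meaningfully compared across the two, which is the drawback noted above.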

Words Matter—Agree Early

Vocabularies are important and foster a common understanding. A shared vocabulary is particularly important in Agile–EVM discussions since the communities (developers and program management) are typically new to or not very familiar with each other’s terms. If people don’t take time to develop a common understanding of terms, they will believe that they have agreements when, in fact, they do not because of different interpretations of the words used.

Agile and EVM both bring an extensive list of not-so-common terminology to an already vocabulary-dense world of DoD acquisitions. It’s likely that two parties in the same program have nuanced interpretations of the same word, even after they’ve been on the program for a while. Worse, SEI Agile and EVM practitioners have observed that the Agile hierarchy terms and the definitions of each level can be a source of confusion and disconnect. These problems can happen because many programs will evolve their Agile hierarchy by adding or removing levels, which will drive updates to their definitions. The Agile hierarchy forms the architecture by which the EVMS will evaluate the program’s development progress (see How Far Down into the Hierarchy of Agile Work Does the EVMS Track?, above). Therefore, Agile terminology changes are analogous to engineering changes, and the operational definition of key terms may need to be controlled in a similarly rigorous fashion.

A word of caution: When common Agile terms, such as feature or epic, are used differently, there is a risk of misunderstanding with outside entities as well since those terms are often used by other programs.

What’s the Right Amount of Administrative Review When Doing Baseline Change Requests (BCRs)?

When an Agile program plans its work for the next cadence release or PI, work will be decomposed from the larger items in the hierarchy, and detail planning will occur with the most up-to-date information. Usually this is done collectively across the enterprise with subject-matter experts and stakeholders included for buy-in. The agreed-upon planned work then needs to be captured in the EVMS, which will require baseline change requests (BCRs).

With a traditional plan-driven approach [see Big Design Up Front (BDUF) Versus Planning as Late as Possible, above], BCRs are often viewed as fixes to mistakes in the plan: they are deviations from a long-term plan that isn’t supposed to change under the traditional acquisition paradigm. Because of this, the typical BCR process requires oversight by stakeholders relevant to the BCR, sometimes by a BCR board, who review the request to determine whether the change can be made to the PMB. Often, the experts who are required to review and approve the BCR were present in the PI planning that generated the BCR. Therefore, this BCR oversight by a board may be duplicative and unnecessary, especially if the EVM subject matter experts, like the control account managers (CAMs) and planners, are also a part of the release planning to ensure that EVM rules aren’t breached and unexpected schedule perturbations don’t occur.

Programs may want to have two different BCR approval processes:

- A streamlined process for the planning changes that are identified in the cadence-release/PI planning events when all stakeholders are present, and

- A traditional, more thorough review process (if needed) for changes that occur outside of the release-planning events.

Regardless of the approval process that is used, it’s also important to leverage application lifecycle management tools and real-time information flows to involve stakeholders in a timely and efficient way, and to ensure that the appropriate people are involved to approve a BCR.

Assessing Progress

EVM’s value is derived from its use of actual project-performance data to measure progress. This data is then used to determine the value of the work completed. The easiest and most common approach is physical percent complete. While it is straightforward and easy to understand because it is based on tangible evidence of work completion, it may not consider constant changes to the scope of the project, could be subject to interpretation, and may not provide a consistent view of progress across different teams.

Within the Agile philosophy, value is achieved only with working software. In the strictest implementation, there would be only two options: 0 percent or 100 percent complete. Likewise, EVM guidance suggests that if work packages will be completed within one reporting cycle, a 0/100 measure of completeness would be appropriate.

Large systems of systems often require involvement with organizations outside the control of the software developers, such as formal test organizations, certification authorities, and platform integration. In these situations, a strict 0/100 measure does not accurately represent completed work and makes accounting for rework difficult.

In this case, the use of a 0/X/…/Z/100 methodology makes more sense. Each stage or state is represented with a value of completion agreed to in advance. Programs will have to determine what the intermediate values should be. These values serve as indicators of stage or state completion rather than as an exact percentage complete.

For example, if the system required external testing and formal certification, a 0/30/75/100 valuation may be deemed appropriate. The work package would be determined to be 30 percent complete when it was ready for the external testing. It would then be assessed at 75 percent after testing and any required rework were completed. Finally, after certification (and any rework) was complete, it would be closed out at 100 percent complete.
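The 0/30/75/100 example can be sketched in a few lines of Python. The percentages come from the example above; the stage names, the function name, and the work-package budget are hypothetical, and a real program would agree on its own stages and values in advance.

```python
# Stage values agreed to in advance (taken from the 0/30/75/100 example).
# The stage names are hypothetical labels for illustration.
STAGE_PERCENT = {
    "not_started": 0,
    "ready_for_external_test": 30,
    "tested_and_reworked": 75,
    "certified": 100,
}


def earned_value(budget: float, stage: str) -> float:
    """Earned value credited to a work package that has reached a given stage."""
    return budget * STAGE_PERCENT[stage] / 100


budget = 2000.0  # hypothetical work-package budget, in hours or dollars
for stage in STAGE_PERCENT:
    print(f"{stage}: {earned_value(budget, stage):.0f}")
```

The work package earns credit only when it crosses an agreed stage boundary, so the reported value reflects the state it has reached rather than a subjective estimate of percent complete.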

Setting Up an EVM and Agile Program for Success: The Twain Shall Meet

All these considerations are just that—considerations. Each program has nuances that will determine what the best path forward is for their situation. It’s exciting to know that there is no one exact way to do this, but instead there are likely unlimited ways to set up an EVM and Agile program for success. The setup may even be more of an art than a science.

Our experience shows that practitioners of EVM and Agile will likely encounter all the tradeoffs detailed in this post (and probably more that weren’t listed). Even though there's not one right way to remedy these, there’s evidence that early engagement between EVM and Agile stakeholders can reduce the potential for either discipline to become burdensome and instead help them work together to provide valuable insight in managing the outcomes of effort. As with most meaningful things in life, teams will have to adapt through the period of performance, so it’s important to adopt a learning mindset and set up the Agile and EVM framework to allow for evolution.

We hope that this blog post highlights some of the important trade spaces early enough that practitioners can think about them before they present serious problems. All the different considerations enumerated in this post underscore the need to be mindful when using Agile and EVM together; it’s not just business as usual. It’s important to remember the intent of Agile and EVM and leverage the most useful portions of each while setting aside the components that take away from program execution and monitoring. When the two are combined appropriately, practitioners will enjoy the merits of both practices.

Additional Resources

Learn about the SEI’s work in Agile development.

Read other SEI blog posts about Agile.

Read other SEI blog posts about measurement and analysis.

Read the SEI technical report, An Investigation of Techniques for Detecting Data Anomalies in Earned Value Management Data.
