3 Metrics to Incentivize the Right Behavior in Agile Development

Using incentives to elicit particular behaviors in agile software development often produces unintended consequences. One trap that we have seen project managers fall into is introducing metrics simply because they are familiar. As we stated in our first post in this series, there are many examples where an incentive intended to solve a problem creates an unintended, undesirable behavior. Software project managers must instead consider the overall goals of the project, including its development goals, and then select metrics that encourage team members to pursue the behaviors needed to achieve those goals.

In this post, the second in our series on the use of metrics to incentivize behaviors in agile development, we present three containment metrics that could incentivize the right behaviors in agile software development.

Three Containment Metrics to Consider for Agile Development

In our previous post in this series, we presented an argument that demonstrates why phase-containment metrics often incentivize behavior that is at odds with agile development. Containment metrics do, however, have merit.

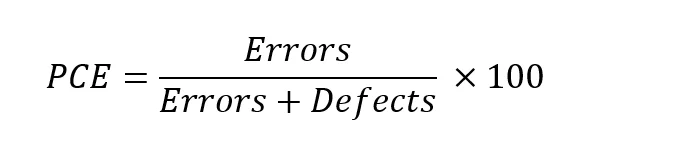

To recap, phase containment of defects is often measured in terms of phase-containment effectiveness (PCE), which is typically defined, per phase, as

$$\mathrm{PCE} = \frac{\mathrm{Errors}}{\mathrm{Errors} + \mathrm{Defects}}$$

This definition has very specific meanings: an Error is a problem or an issue that is generated, detected, and corrected all in the same phase, and a Defect is a problem that is generated in one phase and detected (and we hope corrected) in a later phase. Generally, containing problems to a single phase is better than allowing them to appear in a later one.
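To make the Error/Defect distinction concrete, here is a minimal sketch of a per-phase PCE calculation. It assumes PCE is the ratio of errors to all problems generated in a phase, and that each problem report records the phase where it was generated and the phase where it was detected. The `Problem` record, its field names, and the sample data are hypothetical, not taken from any particular tracking tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    """One problem report; both field names are hypothetical."""
    introduced_in: str  # phase in which the problem was generated
    detected_in: str    # phase in which the problem was detected


def phase_containment(problems):
    """Return {phase: PCE}, assuming PCE = errors / (errors + defects)."""
    errors = Counter()   # generated and detected in the same phase
    defects = Counter()  # generated in this phase, detected in another one
    for p in problems:
        if p.introduced_in == p.detected_in:
            errors[p.introduced_in] += 1
        else:
            # Assumption: a differing detection phase is always a later phase.
            defects[p.introduced_in] += 1
    phases = set(errors) | set(defects)
    return {ph: errors[ph] / (errors[ph] + defects[ph]) for ph in phases}


# Made-up sample: four design problems (one escaped), two code problems (one escaped).
sample = [
    Problem("design", "design"),
    Problem("design", "design"),
    Problem("design", "design"),
    Problem("design", "test"),
    Problem("code", "code"),
    Problem("code", "test"),
]
pce = phase_containment(sample)
print(pce)
```

With this sample, three of the four design problems and one of the two code problems are contained in their own phase.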

In the remainder of this post we explore three containment metrics that could prove valuable in agile development.

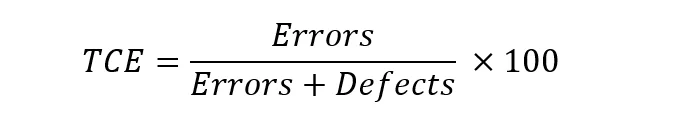

Total Containment Effectiveness (TCE). This metric is similar to PCE in that it is defined as

$$\mathrm{TCE} = \frac{\mathrm{Errors}}{\mathrm{Errors} + \mathrm{Defects}}$$

where Errors represents all problems discovered before a release is made and Defects represents all the problems discovered after a release. Clearly, this metric can be gamed in the same way as PCE. If it is not being gamed, however, the metric can usefully be compared with historical measures of TCE (assuming they exist), which provides one indication of how the agile development process is performing in comparison to past practices.
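The comparison against historical TCE can be sketched as follows. This is an illustration only: it assumes TCE is the fraction of all known problems that were found before release, and the release names and counts are made up.

```python
def total_containment(pre_release: int, post_release: int) -> float:
    """TCE, assumed to be pre-release problems over all known problems."""
    total = pre_release + post_release
    if total == 0:
        raise ValueError("no problems recorded; TCE is undefined")
    return pre_release / total


# Hypothetical counts: (problems found before release, problems found after).
history = {"R1": (180, 20), "R2": (150, 50)}
current = (90, 10)

# A simple unweighted mean of past TCE values serves as the baseline here.
baseline = sum(total_containment(*counts) for counts in history.values()) / len(history)
tce_now = total_containment(*current)

print(f"historical TCE {baseline:.3f}, current TCE {tce_now:.3f}")
```

The unweighted mean is just one possible baseline; a team might prefer to weight releases by size or compare only against the most recent releases.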

A note of caution: defining what is and what is not a defect isn't as simple as it seems. Consider the issue of problems related to missing functionality. It may be a problem that, in a given release, some specific piece of functionality is not, or is only partially, present. However, that problem should not be classified as a defect unless the functionality was expected to be present at that given release. This temporal aspect of missing functionality is further complicated when considering a situation where a piece of functionality is expected in a given release but, perhaps because of unpredictable circumstances, is not ready in time and is not released. It isn't immediately clear if this latter problem should be considered as a defect.
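One way to keep this classification consistent is to write the rule down explicitly. The sketch below is a hypothetical policy, not a recommendation: the function name, the release numbering, and the `knowingly_deferred` flag are all assumptions made for illustration, and the unclear case described above is deliberately left undecided rather than resolved.

```python
from enum import Enum


class Verdict(Enum):
    NOT_A_DEFECT = "not a defect"
    DEFECT = "defect"
    UNDECIDED = "needs an explicit team policy"


def classify_missing_feature(planned_release: int,
                             current_release: int,
                             knowingly_deferred: bool) -> Verdict:
    """Classify functionality that is absent from current_release."""
    if current_release < planned_release:
        # The functionality was never expected in this release.
        return Verdict.NOT_A_DEFECT
    if knowingly_deferred:
        # Expected here, but it slipped and was withheld from the release;
        # the text leaves open whether this counts as a defect.
        return Verdict.UNDECIDED
    # Expected in this release and missing without a recorded deferral.
    return Verdict.DEFECT


print(classify_missing_feature(planned_release=3, current_release=2,
                               knowingly_deferred=False).value)
```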

Activity Containment Metric. Clearly, any agile development comprises many activities that, typically, are performed in rapid cycles. Phase containment could be translated into activity containment, and errors and defects could be measured at the activity level.

There are a number of concerns, however, with respect to activity containment metrics:

- The metric depends on the precise nature of the activities and how those activities are coordinated to form the whole development process. Any changes in either the activities or their coordination are likely to invalidate any historical comparisons.

- Activities are small and tend to repeat frequently, so such a metric has the potential to flood those collecting the data and those to whom the metric is reported. In a government acquisition, an activity containment metric is probably better for the contractor's own use than for reporting to the government.

- The burden of collecting problem reports and classifying them as errors and defects is likely to outweigh the benefit of an activity containment metric.

Definition of Done Containment Metrics. A key concept found in most agile development processes is Definition of Done. This concept can be used to define attributes of the software being developed (e.g., must have a clean scan from a given code inspection tool) or artifacts that must accompany the software (e.g., updated user manuals when appropriate). Moreover, it is common to have different Definitions of Done that represent different collections of activities. For example, the Definition of Done for a sprint is likely to differ from the Definition of Done for a release. Regardless of the specific Definition of Done, we might consider problems discovered before the software has been signed off as "Done" to be errors and those discovered after the signoff to be defects.

The large number of problems likely present in a large development would cause a sprint-level Definition of Done containment metric to suffer from the same issues discussed for the activity containment metric. However, for higher-level concepts, such as a release, a Definition of Done containment metric may prove valuable. Assuming that the metric isn't being gamed, low containment effectiveness suggests either that the development process is causing the problems or that the Definition of Done is too weak.
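A release-level Definition of Done containment figure could be computed along the following lines. This is a minimal sketch under stated assumptions: problems are identified only by their discovery date, a problem found on or before the signoff date counts as an error, one found after it counts as a defect, and effectiveness is the same errors-over-total ratio used for the other containment metrics. The dates are invented.

```python
from datetime import date
from typing import Iterable, Optional


def done_containment(found_on: Iterable[date], signed_off: date) -> Optional[float]:
    """Fraction of problems discovered on or before the "Done" signoff date."""
    dates = list(found_on)
    if not dates:
        return None  # no problems recorded, so the ratio is undefined
    # Assumption: a problem found on the signoff day itself counts as an error.
    errors = sum(1 for d in dates if d <= signed_off)
    return errors / len(dates)


# Hypothetical release: signed off on 1 March, one problem reported afterwards.
signoff = date(2024, 3, 1)
discoveries = [date(2024, 2, 10), date(2024, 2, 20), date(2024, 2, 28), date(2024, 3, 5)]
effectiveness = done_containment(discoveries, signoff)
print(effectiveness)
```

How to treat problems found on the signoff day itself is a policy choice; whichever rule is picked should be applied consistently so that values remain comparable across releases.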

Wrapping Up and Looking Ahead

It is important to note that these metrics have not been tested to determine the behaviors that they encourage. We recommend piloting them before engaging in full-scale use.

As this series of posts details, traditional phase-containment metrics are incompatible with agile development. The metrics suggested in this post (Total Containment Effectiveness, Activity Containment Metric, and Definition of Done Containment Metric) instead focus on containing defects to concepts consistent with agile development.

Additional Resources

Visit https://www.sei.cmu.edu/go/agile to learn more about the SEI's work in helping the DoD and other government agencies adopt Agile software development approaches.
