Rapid Software Composition by Assessing Untrusted Components

PUBLISHED IN

Software Architecture

Today, organizations build applications on top of existing platforms, frameworks, components, and tools; no one constructs software from scratch. Hence today's software development paradigm challenges developers to build trusted systems that include increasing numbers of largely untrusted components. Bad decisions are easy to make and have significant long-term consequences. For example, decisions based on outdated knowledge or documentation, or skewed to one criterion (such as performance), may lead to substantial quality problems, security risks, and technical debt over the life of the project. But there is typically a tradeoff between decision-making speed and confidence. Confidence increases with more experience, testing, analysis, and prototyping, but all of these are costly and time consuming. In this blog post, I describe research that aims to increase both speed and confidence by applying existing automated-analysis techniques and tools (e.g., code and software-project repository analyses) and mapping the extracted information to common quality indicators from DoD projects.

We have applied our approach to the assessment and comparison of security frameworks. This example helps to illustrate the methodology and shows examples of some of the tooling that we can apply for quality attributes in addition to security, with the end goal of supporting rapid software composition.

Data-Driven Selection of Security Frameworks During Architectural Design

The selection of application frameworks is an important aspect of architectural design. Selection often requires satisficing; that is, searching a potentially large space of design alternatives until an acceptable solution is found. In our work, we focused on the criteria used by practicing software architects in selecting security frameworks. We also proposed how information associated with some of the criteria that are important to architects can be obtained manually or in an automated way from online sources, such as GitHub.

In the case of the security-frameworks comparison, we did a combination of manual and automated analyses as part of our process of learning what to do and what questions to ask and answer. Our eventual goal is to automate as much as possible, to enhance rigor and repeatability.

Architectural design can be performed systematically using a method such as Attribute-Driven Design (ADD). Generally, software architects reuse proven solutions to address recurring design problems. These proven solutions can be conceptual in nature, such as design patterns, or they can be concrete, such as application frameworks or runtime components and services.

An application framework (commonly known as just a framework) is a collection of reusable software elements that provide generic functionality addressing recurring domain and quality attribute concerns across a broad range of applications. There are application frameworks for many problem domains including user interfaces, object-to-relational-mapping, generation of reports, and security. Selecting an appropriate framework is an important design decision that may be costly to revert. Our aim was to help software architects make better and more informed design decisions, particularly regarding the selection of components such as application frameworks during the architectural design process.

Application frameworks can be categorized according to different characteristics, or criteria, that are important for their selection. We based the list of criteria below on informal interviews with software architects, and later validated it with a survey (of a different, larger group of architects). The criteria we selected are

- Functional completeness. The framework offers the functions that are needed.

- Ease of integration. The framework integrates easily with other technologies that are used in the project.

- Community engagement. Bugs are fixed quickly.

- Quality of documentation. Good documentation and examples are available.

- Cost. The framework is free or reasonably priced.

- Usability. The framework is easy to use.

- Learnability. The framework is easy to learn.

- Support. The vendor or community provides support, answers questions quickly.

- Familiarity. I or my team was already familiar with it.

- Popularity. Lots of other projects were already using this framework.

- Runtime performance. The framework does not introduce an unacceptable performance penalty.

- Evolution. New, useful features can be easily added to the framework.

We chose to focus on open-source security frameworks for the Java programming language, selecting 11 popular frameworks for comparison. We focused on these topics because the domain of security frameworks is rich, with many products available, and Java has been the most popular programming language for enterprise applications for well over a decade. This selection simply scoped our analysis efforts: none of the techniques that we applied are specific to either the Java language or the domain of security.

We asked our survey participants to select preferences among the 11 candidate security frameworks and to identify the criteria that they considered when selecting their preferred frameworks. We then examined in more detail four criteria that had influenced their choices: functional completeness, community engagement, evolution, and popularity. We chose these four even though survey participants rated some other criteria more highly, because the purpose of this examination was to explore strategies for partially or fully automated data collection from online sources. Criteria such as ease of integration and total cost of ownership are difficult to measure by gathering data solely from online sources.

Functional Completeness

We measured functional completeness in terms of coverage of the domain. For this reason we scrutinized each framework to determine how many distinct areas of security concerns the framework addresses.

Security tactics exhaustively define the facets of software security that a framework could be architected to address. Tactics are generic design primitives that have been organized according to the quality attribute that they primarily affect: availability, modifiability, security, usability, testability, and so forth. Tactics have been used to guide both design and analysis. The way that we employ tactics here is as a kind of analysis: tactics describe the space of possible design objectives with respect to a quality attribute.

By determining which tactics a framework realizes, we get a measure of the functional completeness of the framework. In this way we can rank the frameworks according to the degree of each framework's coverage of security tactics. Security tactics abstract the complete domain of design choices for software security.

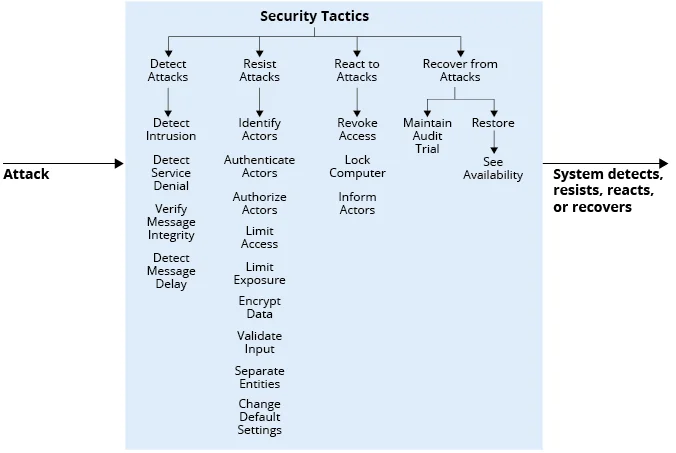

Figure 1 below shows the security tactics hierarchy. There are four broad software-based strategies for addressing security: detecting, resisting, reacting to, and recovering from attacks. These are the top-level design choices that an architect can make when considering how to address software security. The leaf nodes further refine these top-level categories. For example, to resist an attack, an architect may choose to authorize users, authenticate users, validate input, encrypt data, etc. Each of these is a separate design choice that must be implemented, either by custom coding or by employing a software component such as a framework.

Figure 1: Security Tactics

By understanding which specific tactics are addressed by a security framework, we measure its functional coverage (reported as the number of tactics that are realized by the framework). For example, a security framework may specialize in providing encryption features and hence is only implementing the "Encrypt Data" tactic shown in Figure 1 above. The functional coverage of the framework is therefore quite limited since it only covers a single tactic.

To measure functional coverage, we first reviewed the published descriptions of all the frameworks under investigation. These descriptions were primarily obtained from the frameworks' homepages. An example is the description from the Spring Security framework:

Spring Security is a framework that focuses on providing both authentication and authorization to Java applications. Like all Spring projects, the real power of Spring Security is found in how easily it can be extended to meet custom requirements.

We also looked for additional materials such as online articles and tutorials. This initial review gave us an idea of the overall emphasis of each framework in its coverage. We then delved into the individual application programming interfaces (APIs) that support specific security tactics to verify the claims made in the frameworks' descriptions. Finally, we captured results in a template that served as a checklist of all known security tactics.

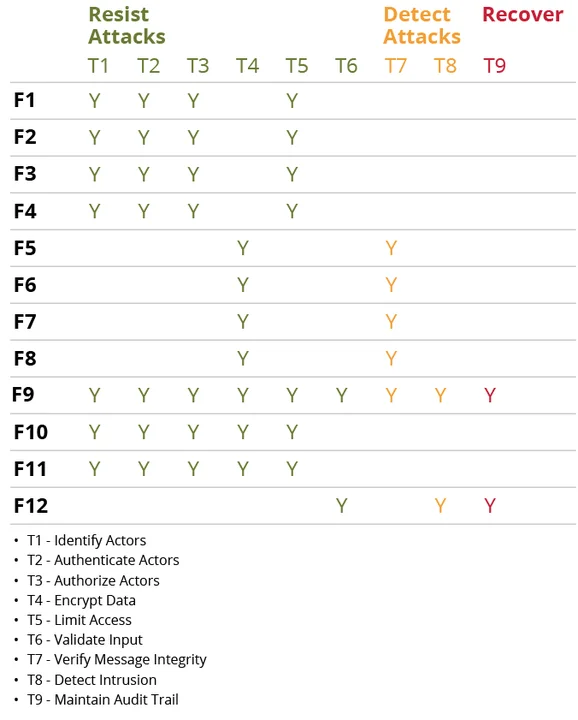

Table 1 summarizes the functional coverage of all the security frameworks we considered in this study (Y's mean the tactic is covered by the framework; the numbers F1 to F12 correspond to the frameworks we considered). Note that in the table we omitted the tactics not covered by any of the frameworks we reviewed. Thus the tactics that are covered are the following:

- T1 - Identify actors

- T2 - Authenticate actors

- T3 - Authorize actors

- T4 - Encrypt data

- T5 - Limit access

- T6 - Validate input

- T7 - Verify message integrity

- T8 - Detect intrusion

- T9 - Maintain audit trail

Table 1: Functional Coverage

We manually gathered the information for the functional completeness criterion. While it is possible to create a tool that performs text analysis of each framework's homepage and identifies keywords that can be connected to specific tactics (such as authorization), this is currently beyond the scope of our research.
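Once the checklist is filled in, the coverage measure is a simple count. The following is a minimal Python sketch of that count, not the tooling used in the study. The tactic names are those listed above; the framework names (`framework_a`, `framework_b`) and their coverage sets are hypothetical placeholders, not results from Table 1.

```python
# Tactic names are taken from the list above (T1 to T9).
TACTICS = [
    "Identify actors",
    "Authenticate actors",
    "Authorize actors",
    "Encrypt data",
    "Limit access",
    "Validate input",
    "Verify message integrity",
    "Detect intrusion",
    "Maintain audit trail",
]


def functional_coverage(covered):
    """Return how many known tactics a framework realizes."""
    covered = set(covered)
    unknown = covered - set(TACTICS)
    if unknown:
        raise ValueError(f"unknown tactics: {sorted(unknown)}")
    return len(covered)


def rank_by_coverage(frameworks):
    """Rank frameworks from most to fewest tactics covered (ties by name)."""
    counts = [(name, functional_coverage(t)) for name, t in frameworks.items()]
    return sorted(counts, key=lambda pair: (-pair[1], pair[0]))


# Hypothetical checklist entries, for illustration only.
example_checklist = {
    "framework_a": {"Authenticate actors", "Authorize actors", "Encrypt data"},
    "framework_b": {"Encrypt data"},
}

ranking = rank_by_coverage(example_checklist)
print(ranking)
```

A framework that only provides encryption, like the hypothetical `framework_b`, scores 1 and ranks below one that also authenticates and authorizes actors.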

Community Engagement

Unlike functional completeness, data for community engagement (an important criterion for architects) can be gathered in a relatively simple way based on publicly available information. We used three different measures to evaluate the community engagement for frameworks:

- The ratio of resolved issues vs. open issues. Issues include reported bugs that must be fixed and feature-enhancement requests. A high resolution ratio indicates that the community that develops the framework actively works toward improving its quality.

- The average time it takes for issues to be resolved. A smaller number on this measure indicates that the members of the community actively work toward quickly addressing issues and improving the quality of the framework.

- The number of contributors (and committers). A high number of contributors also indicates that there is a vigorous community committed to the development of the framework.

The calculation of these measures requires that the framework have a publicly accessible issue-tracking system. We used the official GitHub API v3 to obtain the issue-tracking data for six of the candidate frameworks that use GitHub to handle project issues. Two of the frameworks use JIRA, and two more use sourceforge.net. Data collection from such sites is easily automated via a web-crawler. Two other projects do not have publicly accessible issue-tracking systems because they are developed as part of the Java API.
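To make the first two measures concrete, here is a minimal Python sketch that computes them from issue records that have already been downloaded. It is an illustration under stated assumptions, not the scripts used in the study: it assumes each record carries the `state`, `created_at`, and `closed_at` fields that the GitHub issues API returns, treats "closed" as "resolved", and reports the resolved share as closed / (closed + open). The sample records are invented.

```python
from datetime import datetime
from statistics import mean


def parse_ts(ts):
    """Parse a GitHub-style UTC timestamp such as 2016-01-03T00:00:00Z."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def engagement_measures(issues):
    """Compute the resolved share and the mean resolution time in days."""
    # GitHub's issues endpoint also returns pull requests; they carry a
    # "pull_request" key, so we drop them here.
    issues = [i for i in issues if "pull_request" not in i]
    closed = [i for i in issues if i["state"] == "closed" and i.get("closed_at")]
    open_count = sum(1 for i in issues if i["state"] == "open")
    total = len(closed) + open_count
    days = [
        (parse_ts(i["closed_at"]) - parse_ts(i["created_at"])).total_seconds() / 86400
        for i in closed
    ]
    return {
        "resolved_share": len(closed) / total if total else None,
        "mean_days_to_resolve": mean(days) if days else None,
    }


# Invented sample records, for illustration only.
sample_issues = [
    {"state": "closed", "created_at": "2016-01-01T00:00:00Z",
     "closed_at": "2016-01-03T00:00:00Z"},
    {"state": "closed", "created_at": "2016-01-01T00:00:00Z",
     "closed_at": "2016-01-05T00:00:00Z"},
    {"state": "open", "created_at": "2016-01-02T00:00:00Z", "closed_at": None},
    {"state": "open", "created_at": "2016-01-02T00:00:00Z", "closed_at": None,
     "pull_request": {}},
]

measures = engagement_measures(sample_issues)
print(measures)
```

The third measure, the number of contributors, needs a separate data source (for example, the repository's commit history) and is not covered by this sketch.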

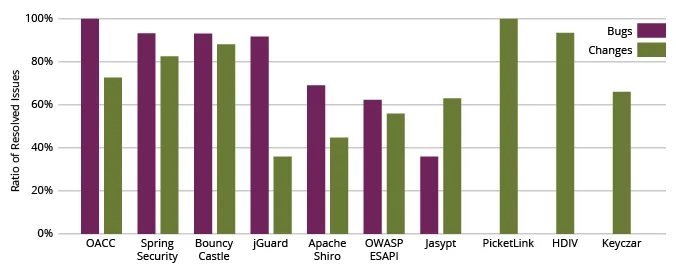

Figure 2 shows the ratios of resolved issues including bug fixes and change requests (new features) for each of the frameworks evaluated.

Figure 2: Ratio of Resolved Issues

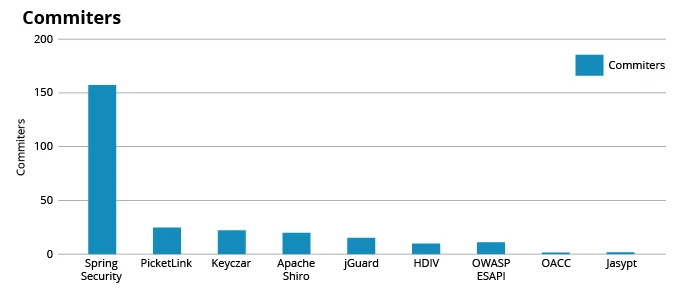

Next, we tracked the distribution of issue-resolution time for the security frameworks. If issues found in a security framework are resolved promptly, this becomes another measure indicating community engagement. Finally, Figure 3 displays the results with respect to the number of committers for the different frameworks.

Figure 3: Number of Committers

Evolution

We consider that the evolution criterion is related to maintainability--how easy it is for a community of developers to modify a framework to fix bugs (including newly emerging security threats), and to add features (corresponding to new security requirements or variants on existing security requirements). While these terms are not identical, they are strongly related.

The degree to which a system can be easily evolved is the degree to which it is easy to find the location of a bug or feature, and independently modify the code responsible for that bug or feature. A system that is not maintainable typically suffers from problems such as high coupling, low cohesion, large monolithic modules, and complex code. All of these characteristics inhibit the evolution of the system, which is why we consider these two terms to be largely interchangeable.

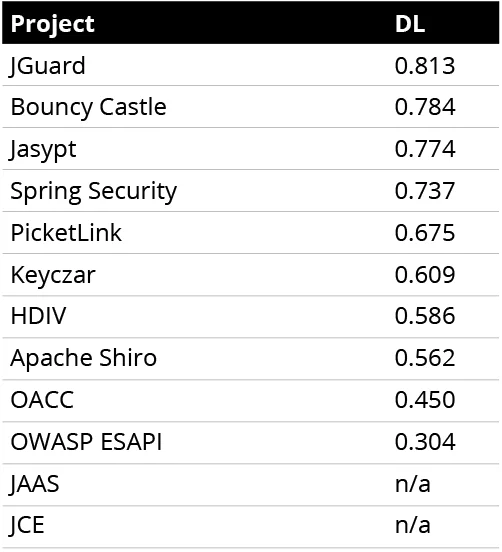

For the purposes of this work, we examined the architectural complexity of each of our candidate frameworks, as measured by its decoupling level (DL)--an architecture-level coupling metric. DL measures how well a system's modules are decoupled from each other and has been shown to strongly correlate with true maintenance costs. This metric was calculated using the Titan tool suite.

Table 2 presents the results of the DL calculations for 10 of the 12 frameworks. (We were unable to obtain the source code for the other two, and so could not calculate their DL values.)

Table 2: Results of the DL Calculations

With the DL metric, the higher the value the better. In our analysis of 129 large-scale software projects covering a broad range of application areas (108 open source and 21 industrial projects), 60 percent of these projects were shown to have DLs between 0.46 and 0.75, with 20 percent having DLs above 0.75 and 20 percent having DL values below 0.46.
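One way to read a single DL value against that distribution is to place it in one of the three bands. The sketch below does only that; the function name `dl_band` and the band labels are hypothetical, and treating the boundary values 0.46 and 0.75 as part of the middle band is an assumption.

```python
# Band boundaries taken from the distribution reported above.
LOWER_DL = 0.46
UPPER_DL = 0.75


def dl_band(dl):
    """Place a decoupling level value in the reported distribution."""
    if dl < LOWER_DL:
        return "bottom 20 percent"
    if dl > UPPER_DL:
        return "top 20 percent"
    return "middle 60 percent"


for value in (0.30, 0.60, 0.80):
    print(value, dl_band(value))
```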

Popularity

Popularity is also an important criterion for evaluating a security framework. While the respondents to our survey did not rank popularity as a major criterion, we postulate that more widespread adoption and popularity implies higher quality, better support, greater likelihood of longevity, and better usability. We therefore included it in our survey as a factor worth measuring. Of course, a more popular framework may be inferior in some other ways, such as having less coverage than newer or less known frameworks. Once again, this is why we have chosen orthogonal measures of framework quality in our evaluation method.

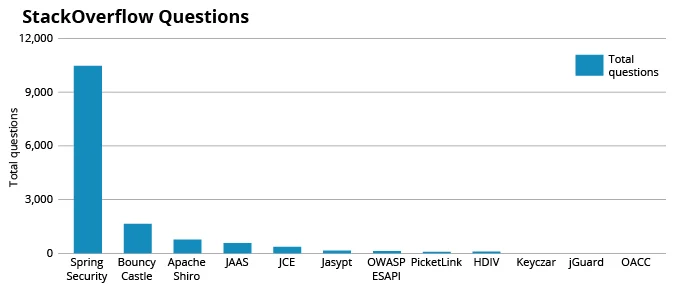

We extracted data, based on search results from Stack Overflow, to quantify the security frameworks' popularity. Figure 4 below shows the overall results for the popularity criterion.

Figure 4: Number of Questions on Stack Overflow

Wrapping Up and Future Work

This study of criteria that are useful to architects in the selection of application frameworks has allowed us to understand which criteria are important to practitioners and how data associated with some of these criteria can be gathered from online sources in a partially or fully automated fashion.

At this point we have gathered data for only a small number of criteria. Our future work includes identifying ways to gather data for additional criteria, although this may be challenging for some of them, such as learnability or ease of integration. Future work also includes creating a decision-support tool that supports architects in the application-framework selection process, based on the information that is gathered from online sources.

While the goals of our study are rather narrow--looking at decisions affecting adoption of security frameworks for Java applications--the methodology that we applied is not specific to either Java or security; these were simply our initial targets. We believe that the reasoning, criteria, and tools we used to collect data in this study are generic. We therefore claim that our research represents a first step towards creating scorecards for third-party components, supporting the rapid selection of such components.

In our current work, we have created a reasoning framework and supporting tooling for rapidly identifying suitable software components (such as libraries, APIs, and modules) within a continuous-integration climate. Our main result is that a suitably aggregated set of quality attribute indicators (e.g., latency, maintainability, security threats) provides a quick and efficient way to evaluate component-integration decisions. It shortens decision time while increasing confidence.

Component selection and evaluation have been recognized as important since at least the 1980s. We capture this activity as a four-stage process: establish criteria, identify candidates, triage, and conduct detailed analysis and evaluation. Our recent work has focused on examining the costs and benefits of ways to rapidly accomplish Stage 3, triage. Triage shortens decision time by dramatically reducing the number of components to evaluate, as well as the quality attributes to evaluate for each of them.

We have now reified our reasoning framework as a set of automated and semi-automated tools that, at the press of a button, provide quality-attribute-specific scores that can then be aggregated into overall component scores by weighting each of the individual measures according to stakeholder preferences.
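The aggregation step can be illustrated with a weighted average. The following Python sketch is one plausible reading of "weighting each of the individual measures," not the actual tool: it assumes every per-attribute score has already been normalized to the range 0 to 1 with higher being better, and the attribute names, scores, and weights shown are invented.

```python
def component_score(scores, weights):
    """Aggregate per-attribute scores into one weighted-average score.

    scores:  attribute name -> score, assumed normalized to [0, 1]
    weights: attribute name -> non-negative stakeholder weight
    """
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"no score for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[name] * w for name, w in weights.items())
    return weighted_sum / total_weight


# Invented example: a stakeholder who weights security twice as heavily
# as maintainability or latency.
example_scores = {"security": 0.8, "maintainability": 0.6, "latency": 0.4}
example_weights = {"security": 2, "maintainability": 1, "latency": 1}

overall = component_score(example_scores, example_weights)
print(round(overall, 2))
```

Dividing by the total weight keeps the overall score on the same 0 to 1 scale as the inputs, so scores computed under different weightings remain comparable.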

Additional Resources

Read Insights from 15 Years of ATAM Data: Towards Agile Architecture by Stephany Bellomo, Ian Gorton, and Rick Kazman in IEEE Software, September/October, 2015.

Read Component selection in software engineering - which attributes are the most important in the decision process? by Chatzipetrou et al in Proceedings of the EUROMICRO Conference on Software Engineering and Advanced Applications, 2018.

Read Choosing component origins for software intensive systems: In-house, COTS, OSS or outsourcing?--a case survey in IEEE Transactions on Software Engineering, 44(3):237-261, 2018.
