Cost-Effective Software Security Assurance Workflows

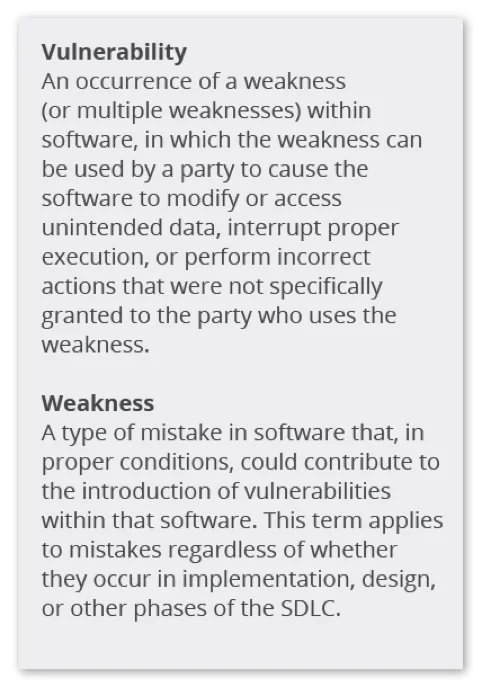

Software developers are under increasing pressure to rapidly deliver cutting-edge software at an affordable cost. Security, meaning that the software resists malicious attacks, is an increasingly important software attribute. Software becomes vulnerable when one or more weaknesses can be exploited by an attacker to modify or access data, interrupt proper execution, or perform incorrect actions.

Building secure code requires not only good design, but also sound implementation. Vulnerabilities, after all, result from weaknesses, and those weaknesses include a subset of implementation defects that can contribute to a vulnerability. Recent research indicates that between one and five percent of the defects discovered in at least some types of deployed software contribute to vulnerabilities. This relationship suggests that reducing defects in general may also reduce weaknesses and, therefore, vulnerabilities.

This blog post, the first in a two-part series, describes research aimed at developing a model to help the DoD predict the effectiveness and cost of selected software security assurance tools. Rather than studying security-enhancing tools as researchers use them under controlled conditions, we study the tools' costs and benefits when professional developers use them in production environments. The study is timely because DoD guidance now essentially requires the use of such tools.

A DoD Mandate

Software vulnerabilities have become so pervasive that Congress has issued a mandate to secure software from attack (see Section 933). In response, the DoD issued guidance requiring program managers to document verification for assurance in the program protection plan. Enclosure 3, section 13(b), states that program managers should include software assurance (SwA) as a countermeasure to mitigate risks to mission-critical components in accordance with DoDI 5200.44. This guidance essentially requires the use of automated weakness and vulnerability scanning tools.

One challenge is that vulnerabilities represent a small portion of defects. A typical system of 50,000 lines of code (LOC) will contain, on average, 3 vulnerabilities but 125 released defects. Some defects cause a weakness that could potentially compromise the system, and a weakness that can be directly exploited is a vulnerability. Put another way, vulnerabilities are rare and hard to find, but defects are common and easy to count. If the two are correlated, the count of defects found can be a better predictor of the actual vulnerability level than the count of vulnerabilities discovered.
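
The arithmetic behind these figures can be made explicit. The sketch below converts the quoted 50,000 LOC, 3 vulnerabilities, and 125 released defects into densities and a vulnerability-to-defect ratio, then bounds the expected vulnerability count from a defect count. It assumes the one-to-five-percent range cited earlier holds as a fixed ratio, which is an illustrative assumption rather than a measured law; `vulnerability_range` is a hypothetical helper.

```python
# Figures quoted in the text for a typical 50,000-LOC system.
LOC = 50_000
VULNERABILITIES = 3
RELEASED_DEFECTS = 125

kloc = LOC / 1000                                # 50.0 KLOC
defect_density = RELEASED_DEFECTS / kloc         # released defects per KLOC
vuln_density = VULNERABILITIES / kloc            # vulnerabilities per KLOC
vuln_share = VULNERABILITIES / RELEASED_DEFECTS  # fraction of defects that are vulnerabilities


def vulnerability_range(defects: int, low: float = 0.01, high: float = 0.05) -> tuple[float, float]:
    """Hypothetical helper: bound the expected vulnerability count from a
    defect count, ASSUMING a fixed 1-5% vulnerability-to-defect ratio."""
    return defects * low, defects * high


print(f"{defect_density:.2f} defects/KLOC")        # 2.50 defects/KLOC
print(f"{vuln_density:.2f} vulnerabilities/KLOC")  # 0.06 vulnerabilities/KLOC
print(f"{vuln_share:.1%} of released defects")     # 2.4% of released defects
print(vulnerability_range(RELEASED_DEFECTS))       # (1.25, 6.25)
```

The 2.4 percent ratio falls inside the one-to-five-percent range, which is what makes a defect count a plausible proxy for a vulnerability count that is too small to measure directly.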

Some of our collaborating software development groups are writing new code and others are enhancing legacy systems. They have chosen to use static analysis tools on both source code and binaries. Their choices dictate our focus in this initial phase of our research.

Software developers, who are under tremendous pressure to produce and deploy, often lack the time or data to track which tools they are using and how effective those tools are. One of our initial challenges is to acquire enough metrics from our collaborators to establish a reliable ground truth. Moreover, different development groups often measure outcomes differently, so we need consistent metrics to compare one tool or approach with another.

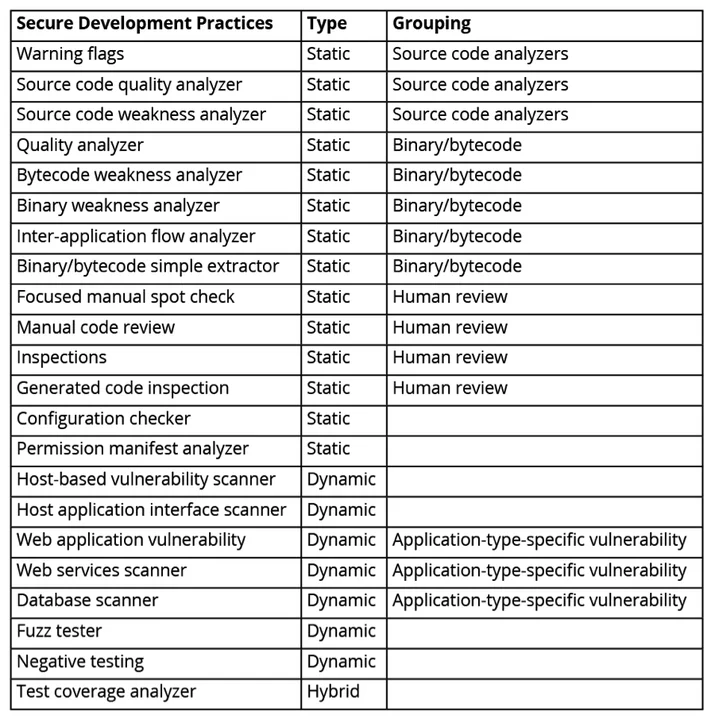

There are many tools that can be applied throughout the software development lifecycle to assure critical assets such as requirements, designs, source code, test cases, binaries, and executables. Some tools scan an artifact for known exploits or common weaknesses. Other tools execute programs to discover weaknesses in running code. The table below lists some of these tools and practices by category:

One challenge for federal agencies charged with integrating these tools into the software development lifecycle is that, although many tools are available, there is scant empirical data on their effectiveness, cost, or constraints. Without a clear understanding of the real-world cost, impact, and effectiveness of these tools, DoD program managers may be able to integrate them but will not be able to make informed cost-benefit tradeoffs. As a result, they will neither realize the tools' full benefits nor know their full cost.

Our Approach

Our approach is to conduct field effectiveness studies in which we gather software engineering data from about 30 projects that develop software under normal conditions, that is, with minimal intervention or guidance from us. We use their data to build a prediction model that compares potential workflows before development begins. With grounded data and a model, software developers can make informed trade-offs on the cost and effectiveness of techniques in various combinations.

One advantage of our approach is that we may not need to distinguish between defects and vulnerabilities, because the two appear to be correlated. Most software vulnerabilities begin as some kind of software defect, and half of those originating defects are conventional coding defects. In previous work, we successfully used a model of defect injection and removal to plan software development work. We are adapting this model to include the injection and removal of the subset of defects found by security tools. If this approach succeeds, we could use the model to evaluate alternative approaches for meeting cost and assurance goals.
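
To make the idea of a defect injection and removal model concrete, here is a minimal sketch, not the SEI model itself. Each phase injects some defects and removes a fixed fraction (its yield) of the defects present; a security tool is modeled simply as one more removal phase. The phase names, injection counts, and yields are hypothetical, and the sketch omits the effort and fix-cost terms a planning model would also carry.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    injected: float       # defects injected during this phase (hypothetical)
    removal_yield: float  # fraction of the defects present that this phase removes


def simulate(phases: list[Phase]) -> tuple[float, dict[str, float]]:
    """Run defects through the phases in order.

    Returns the number of defects that escape the last phase and the number
    removed in each phase. As a simplification, defects injected in a phase
    are exposed to that same phase's removal step.
    """
    present = 0.0
    removed_by_phase: dict[str, float] = {}
    for phase in phases:
        present += phase.injected
        removed = present * phase.removal_yield
        removed_by_phase[phase.name] = removed
        present -= removed
    return present, removed_by_phase


baseline = [
    Phase("design", 40.0, 0.0),
    Phase("design review", 0.0, 0.5),
    Phase("code", 60.0, 0.0),
    Phase("code review", 0.0, 0.5),
    Phase("test", 0.0, 0.5),
]
# Same workflow with a hypothetical static analysis step after code review.
with_static_analysis = baseline[:4] + [Phase("static analysis", 0.0, 0.25)] + baseline[4:]

escaped_base, _ = simulate(baseline)
escaped_sa, removed_sa = simulate(with_static_analysis)
print(escaped_base, escaped_sa)  # 20.0 15.0
```

Because each workflow is just an ordered list of phases, comparing alternatives before development begins amounts to simulating each candidate list and comparing escaped defects (and, in a fuller model, effort).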

To conduct this study, we needed to obtain data for software development projects before and after security tools were introduced. The majority of our collaborators are fairly mature software development organizations. We therefore have reliable data on the types of practices they are using and how much time they spend verifying software using various tools.

For each project, we first establish a baseline of its practice before inserting additional tools into the development workflow. We then calibrate the model using data from the added tools, use it to predict future behavior, and validate that those predictions are accurate. For each project, we examine which tools were used, where they were applied, the effort required to use them, the number of issues found at each stage of development, and the cost to find and fix those issues.
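
One plausible way to turn these per-stage records into model parameters is sketched below; the post does not specify the calibration method, so the approach is an assumption. A stage's yield is approximated as the defects it found divided by the defects found in that stage or any later stage (including the field), and find and fix costs are normalized per defect. `PhaseRecord`, `calibrate`, and the numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PhaseRecord:
    name: str
    defects_found: int
    find_hours: float  # effort to run the activity or tool and triage its output
    fix_hours: float   # effort to fix the defects it found


def calibrate(records: list[PhaseRecord], index: int) -> dict[str, float]:
    """Estimate yield and per-defect costs for records[index].

    Yield is approximated as the defects found in this stage divided by the
    defects found in this or any later stage. This ASSUMES no defects were
    injected after the stage ran and ignores defects that were never found.
    """
    rec = records[index]
    found = rec.defects_found
    present = found + sum(r.defects_found for r in records[index + 1:])
    return {
        "yield": found / present if present else 0.0,
        "find_hours_per_defect": rec.find_hours / found if found else math.nan,
        "fix_hours_per_defect": rec.fix_hours / found if found else math.nan,
    }


records = [
    PhaseRecord("static analysis", 12, 6.0, 3.0),
    PhaseRecord("system test", 24, 40.0, 48.0),
    PhaseRecord("field", 4, 0.0, 0.0),
]
print(calibrate(records, 0))
# {'yield': 0.3, 'find_hours_per_defect': 0.5, 'fix_hours_per_defect': 0.25}
```

Because defects that are never found do not appear in any record, this estimate tends to overstate a stage's true yield; it is a starting point, not a definitive calibration.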

We use the data outlined above to estimate the effectiveness and cost of tools as applied in context. We then validate that the model can accurately predict cost, effort, and escaped weaknesses by comparing the model predictions with actual performance on future development cycles for each collaborator's software development project.
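
The validation step compares predictions with what actually happened in a later cycle. A minimal sketch, assuming relative error as the accuracy measure and a hypothetical 25 percent tolerance (the post does not state which measure or threshold is used), might look like this:

```python
import math


def relative_error(predicted: float, actual: float) -> float:
    """Absolute prediction error as a fraction of the actual value."""
    if actual == 0:
        return 0.0 if predicted == 0 else math.inf
    return abs(predicted - actual) / abs(actual)


def validate(predicted: dict[str, float], actual: dict[str, float],
             tolerance: float = 0.25) -> dict[str, bool]:
    """Report, per metric, whether the prediction is within `tolerance`
    (a hypothetical threshold) of the actual value."""
    return {metric: relative_error(predicted[metric], actual[metric]) <= tolerance
            for metric in predicted}


# Hypothetical predicted vs. actual values for one development cycle.
predicted = {"effort_hours": 900.0, "escaped_weaknesses": 5.0}
actual = {"effort_hours": 1000.0, "escaped_weaknesses": 8.0}
print(validate(predicted, actual))
# {'effort_hours': True, 'escaped_weaknesses': False}
```

Escaped weaknesses are small counts, so a relative measure is harsh on them (3 out of 8 is a 37.5 percent miss); a real validation might prefer absolute error or intervals for such metrics.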

Most of our current collaborators are primarily interested in using static analysis tools, so that is our initial focus. Our collaborators face constraints on which tools they can adopt, so they typically use only a narrow range of tools.

Our initial data analysis does not attempt to compare one tool against another. Rather, we focus on helping our collaborators with the following tasks:

- selecting tools to insert into their process

- inserting tools into the development workflow

- automating use and instrumentation of tools

- interpreting and analyzing tool output

Ideally, when our collaborators identify defects, we track their defect levels, vulnerability locations, the tools used, and the percentage of defects removed by the chosen tools. We then use this data to populate our defect prediction model. If we can map our collaborators' data to Common Weakness Enumeration (CWE) entries, we can demonstrate more directly how effectively and efficiently the tools remove security defects.
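
Once findings are tagged with CWE identifiers, the share of each weakness type that a given tool removed is a simple tally. The sketch below assumes a hypothetical defect log of (CWE ID, activity that found the defect) pairs; the log format and `share_found_by` are illustrative, while the CWE IDs are real entries.

```python
from collections import Counter

# Hypothetical defect log: (CWE ID, activity that found the defect).
findings = [
    ("CWE-476", "static analysis"),  # NULL Pointer Dereference
    ("CWE-476", "system test"),
    ("CWE-787", "static analysis"),  # Out-of-bounds Write
    ("CWE-787", "static analysis"),
    ("CWE-89", "code review"),       # SQL Injection
    ("CWE-89", "field"),
]


def share_found_by(findings: list[tuple[str, str]], activity: str) -> dict[str, float]:
    """Fraction of each CWE's recorded defects that `activity` found."""
    totals = Counter(cwe for cwe, _ in findings)
    by_activity = Counter(cwe for cwe, found_by in findings if found_by == activity)
    return {cwe: by_activity[cwe] / totals[cwe] for cwe in sorted(totals)}


print(share_found_by(findings, "static analysis"))
# {'CWE-476': 0.5, 'CWE-787': 1.0, 'CWE-89': 0.0}
```

The denominators include only defects that some activity eventually found, so these shares describe removal among recorded defects rather than among all defects present.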

Early Results and Looking Ahead

We have early results that will be discussed in detail in a subsequent blog post. A short summary of our findings follows:

- As used in practice, each of the tools was modestly effective.

- The tools cannot (or should not) replace other old-school techniques.

- Relying on tools as a crutch may crowd out other effective practices; this is an area where additional research is needed.

- Rather than slowing development, the code scanning tools save time by finding bugs early and reducing the fix time in test.

- Although the tools work as soon as the product is ready (e.g., code must be written before a static code checker can analyze it), they also work later. There may be some advantages to not using automated tools immediately.

We expect our research will serve as a catalyst to acquire ground truth data on the real-world cost and effectiveness of including these tools in the software development process. We have used a defect injection and removal model in the past to plan defect removal activities to achieve quality goals. Ideally, a similar model can be useful for planning the activities to remove weaknesses and vulnerabilities.

The lack of quantitative data prevents software developers in defense and industry from making educated tradeoffs on cost, schedule, and security. In an ideal world, this research project will net data on static analysis tools and, ultimately, on the effectiveness and use of dynamic analysis tools. Eventually, we plan to create a tool based on the model we developed that will allow software developers to decide which tools and techniques are most effective for removing defects. If our approach proves successful, we can apply it to classes of tools beyond static analysis.

We welcome your feedback in the comments section below.

Additional Resources

Read the SEI technical report that explores this work in greater detail, Composing Effective Software Security Assurance Workflows.

Read the SEI technical note, Predicting Software Assurance Using Quality and Reliability Measures.
