Containerization at the Edge

Containerization is a technology that addresses many of the challenges of operating software systems at the edge. It is a virtualization method in which an application’s software files (including code, dependencies, and configuration files) are bundled into a package that a container runtime engine executes on a host. The package is called a container image, and a running instance of an image is called a container. Unlike virtual machines (VMs), containers do not virtualize the operating system kernel (usually Linux); instead, they share the host’s kernel. This approach removes some of the resource overhead associated with full virtualization, but it also makes containers less isolated and less portable than VMs.

While the concept of containerization has existed since the chroot system call was introduced in Unix in 1979, containers have grown rapidly in popularity since Docker was introduced in 2013. Containers are now widely used across all areas of software and are instrumental in many projects’ continuous integration/continuous delivery (CI/CD) pipelines. In this blog post, we discuss the benefits and challenges of using containerization at the edge. This discussion can help software architects analyze tradeoffs while designing software systems for the edge.

Container Benefits for the Edge

Our previous blog post about edge computing, Operating at the Edge, discusses several key quality attributes that are important to the edge. In this section, we discuss how software systems at the edge can leverage containers to improve a few of these quality attributes, including reliability, scalability, portability, and security.

- Reliability—One key aspect of reliability at the edge is building software systems that avoid fault scenarios. Because all of an application’s dependencies are packaged within its container, they cannot conflict with software in other containers or on the host system. Container applications can therefore be developed and tested in the cloud or on other servers with high confidence that they will operate as expected when deployed to the edge. This isolation is especially valuable when updating containers at the edge, because developers can upgrade applications without worrying about conflicts with the host or other container applications.

Another aspect of reliability is the ability of software systems to recover and continue operating under fault conditions. Containers enable microservice architectures, so if a container application crashes, only a single capability goes down rather than the whole system. In addition, container-orchestration systems, such as Kubernetes (or edge-oriented distributions like MicroK8s or k3s), can route traffic through virtual IP addresses so that requests fail over to a backup container if the primary container goes down. Orchestration systems can also automatically restart or redeploy failed containers for long-term stability. Finally, containers can easily be spread across multiple edge systems to increase the chance that operation will continue if one of the systems gets disconnected or destroyed.
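The failover behavior described above can be pictured as a stable virtual address that forwards requests to whichever backing container is healthy. The following is a minimal Python sketch; the `Backend` and `VirtualEndpoint` names are hypothetical, and the ordered primary/backup policy is a simplification (Kubernetes Services, for example, spread traffic across all ready endpoints rather than preferring one).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Backend:
    """One container instance behind the virtual address (hypothetical model)."""
    name: str
    healthy: bool = True  # in practice, updated by a health or readiness probe


@dataclass
class VirtualEndpoint:
    """A stable address that hides which container actually serves a request."""
    backends: List[Backend] = field(default_factory=list)

    def route(self) -> Optional[str]:
        # Walk backends in priority order (primary first); pick the first healthy one.
        for backend in self.backends:
            if backend.healthy:
                return backend.name
        return None  # no healthy backend: requests fail until one recovers


vip = VirtualEndpoint([Backend("primary"), Backend("backup")])
print(vip.route())               # primary
vip.backends[0].healthy = False  # simulate the primary container crashing
print(vip.route())               # backup
```

Clients keep using the same address throughout; only the mapping behind it changes, which is what lets a crashed container be replaced without reconfiguring its callers.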

- Scalability—Software systems at the edge must scale to support connecting and coordinating a large number of computing nodes. Designing containers as microservices with single capabilities allows these capabilities to be distributed across heterogeneous nodes and started or stopped as the load changes. In addition, container-orchestration tools greatly ease coordination and scaling across nodes: if the load increases, the orchestrator can autoscale to meet demand.
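As one concrete example of orchestrator autoscaling, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A minimal Python sketch of that rule, with a 0.1 tolerance band (the documented default) and min/max clamping; the default bounds here are illustrative assumptions, and real HPA behavior such as stabilization windows and pod-readiness handling is omitted.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds (e.g., what a small edge cluster can host).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, current_metric=90, target_metric=50))  # 4 (scale out)
print(desired_replicas(4, current_metric=20, target_metric=50))  # 2 (scale in)
print(desired_replicas(3, current_metric=52, target_metric=50))  # 3 (within tolerance)
```

On an edge cluster, `max_replicas` is where SWaP limits enter: the rule will not ask for more replicas than the hardware can actually host.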

This scalability also enables container-based systems at the edge to adapt as mission priorities shift from moment to moment. Containers can easily be started or stopped depending on which capabilities are required at the current stage of the mission. Given computation and memory limitations at the edge, systems can also save resources by temporarily shutting down containers that are not needed at the moment or by limiting the resources allocated to them.
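Deciding which capabilities to keep running as priorities shift can be sketched as a selection problem under a resource budget. The Python below is a hypothetical policy, not part of any orchestrator: each capability carries an assumed priority and memory estimate, and the highest-priority containers that fit within the device’s memory budget are kept.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Capability:
    name: str
    priority: int   # higher = more important in the current mission phase
    memory_mb: int  # estimated memory footprint of the capability's container


def select_running(capabilities: List[Capability], budget_mb: int) -> List[str]:
    """Greedily keep the highest-priority containers that fit the memory budget."""
    running, used = [], 0
    for cap in sorted(capabilities, key=lambda c: c.priority, reverse=True):
        if used + cap.memory_mb <= budget_mb:
            running.append(cap.name)
            used += cap.memory_mb
    return running


phase_1 = [
    Capability("video-analytics", priority=5, memory_mb=600),
    Capability("telemetry", priority=9, memory_mb=100),
    Capability("mapping", priority=7, memory_mb=300),
    Capability("logging", priority=2, memory_mb=150),
]
print(select_running(phase_1, budget_mb=800))
# ['telemetry', 'mapping', 'logging']

# The mission shifts: video analytics becomes the top priority.
phase_2 = [replace(c, priority=10) if c.name == "video-analytics" else c for c in phase_1]
print(select_running(phase_2, budget_mb=800))
# ['video-analytics', 'telemetry']
```

Greedy selection is not optimal in general (choosing containers under a memory budget is a knapsack problem), but it is predictable. The returned list would then be reconciled with what is actually running, for example by scaling unselected Kubernetes Deployments to zero replicas.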

- Portability—A major benefit of containers is that they are isolated and portable units of execution, which allows developers to create and test them on one platform and then move them to another platform. Because edge devices usually have size, weight, and power (SWaP) constraints, they are not always the best fit for performing development and testing. One possible CI/CD workflow is to develop and test containers in the cloud or on powerful servers and then transfer their images to the edge at deployment time.

Replicating the functionality of servers with edge devices typically requires many more machines, with work coordinated among them. Given the large number of devices, maintaining a consistent environment across them becomes increasingly hard and time-consuming. Containerization allows every device to be deployed from the same container image, which can be shared easily between devices.

While containers are not inherently portable across hardware architectures (e.g., amd64 to arm64), a container can often be ported merely by swapping its base image for an equivalent base image built for the target architecture and rebuilding. As we’ll discuss in the next section, there are also cases where containers are not portable.
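Multi-architecture images automate this base-image swap: a registry can serve an OCI image index (often called a manifest list) that lists one image manifest per platform, and the client pulls the entry matching its host. The sketch below assumes the index has already been fetched and parsed into a Python dict; the digests and sizes are placeholders, not real images.

```python
from typing import Optional

# A parsed OCI image index. Digests and sizes are placeholders.
INDEX = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "digest": "sha256:" + "a" * 64, "size": 1024,
         "platform": {"architecture": "amd64", "os": "linux"}},
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "digest": "sha256:" + "b" * 64, "size": 1024,
         "platform": {"architecture": "arm64", "os": "linux", "variant": "v8"}},
    ],
}


def select_manifest(index: dict, os_name: str, architecture: str,
                    variant: Optional[str] = None) -> Optional[dict]:
    """Return the first manifest descriptor whose platform matches the target."""
    for descriptor in index.get("manifests", []):
        plat = descriptor.get("platform", {})
        if plat.get("os") != os_name or plat.get("architecture") != architecture:
            continue
        # Only compare variants (e.g., arm "v7" vs "v8") when the caller asks for one.
        if variant is not None and plat.get("variant") != variant:
            continue
        return descriptor
    return None  # no build for this platform: the image must be rebuilt for it


print(select_manifest(INDEX, "linux", "arm64")["digest"][:15])  # sha256:bbbbbbbb
```

A `None` result is the case the paragraph describes: no image exists for the target architecture, so someone has to rebuild from an equivalent base image.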

- Security—In many edge environments—especially in tactical edge environments that operate near adversaries who are attempting to compromise the mission and gain access to software running on devices—securing applications running on edge devices is vital because there are more attack vectors available for compromise. While not as secure as VMs, containers do offer an added layer of isolation from the host operating system that can provide security benefits. Developers can choose which files are shared and which ports are exposed to the host and other containers.

Container security is important in many fields, so many specialized security tools have emerged, such as Anchore and Clair. These tools can scan containers to find vulnerabilities and assess the overall security of a container. In addition, the DoD has an Enterprise DevSecOps Initiative (DSOP) that has defined a container-hardening process to help organizations meet security requirements and achieve authority to operate (ATO).

However, security is not a win across the board for containers at the edge, and we’ll discuss below some downsides to edge container security.

- Open-Source Ecosystem—Containerization has a rich ecosystem of open-source software and community collaboration that helps support the mix of software and devices at the edge. The core of the most popular containerization platform, Docker, is open source (Docker Engine is developed through the Moby project), although Docker Desktop for Mac and Windows is proprietary. In addition, the most popular container-orchestration platform, Kubernetes, is an open-source project maintained by the Cloud Native Computing Foundation (CNCF).

Vital to both of these projects is the Open Container Initiative (OCI), which was founded in 2015 by Docker, CoreOS, and other container leaders to create industry standards for container format and runtime. The OCI GitHub page (https://github.com/opencontainers) maintains the OCI image format (image-spec), runtime specification (runtime-spec), and distribution specification (distribution-spec). A wide variety of open-source tools and libraries exist that use the OCI specifications and are backed by both industry partners and community, including podman, buildah, runc, skopeo, and umoci.
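A central idea in the OCI image format is content addressing: every blob (layer, config, or manifest) is referenced by a descriptor carrying its media type, digest, and size, so any compliant tool can verify what it pulled. A minimal Python sketch of building and checking such a descriptor; it handles only SHA-256 digests, and the tiny config below is hypothetical and incomplete.

```python
import hashlib
import json

CONFIG_MEDIA_TYPE = "application/vnd.oci.image.config.v1+json"


def make_descriptor(blob: bytes, media_type: str) -> dict:
    """Build an OCI-style content descriptor: the blob is named by its SHA-256 digest."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def verify(blob: bytes, descriptor: dict) -> bool:
    """Check a fetched blob against the descriptor that referenced it."""
    algorithm, _, expected = descriptor["digest"].partition(":")
    if algorithm != "sha256":
        raise ValueError(f"unsupported digest algorithm: {algorithm}")
    return (len(blob) == descriptor["size"]
            and hashlib.sha256(blob).hexdigest() == expected)


# A tiny, hypothetical image config; real configs carry more fields.
config = json.dumps({"architecture": "arm64", "os": "linux"}).encode()
desc = make_descriptor(config, CONFIG_MEDIA_TYPE)
print(verify(config, desc))                               # True
print(verify(config.replace(b"arm64", b"amd64"), desc))   # False (content changed)
```

Because the name of a blob is derived from its bytes, identical content gets the same digest no matter which tool or vendor produced it.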

Container Challenges at the Edge

Despite all the benefits that containers bring to managing quality attributes related to edge computing, they are not always appropriate and do have some challenges at the edge. In particular, portability and security have both benefits and challenges.

Storage

One downside of containerization is that because containers package all of an application’s software files and dependencies, container images tend to be larger (sometimes as much as 10 times larger) than what the application needs to run. As we have posted previously, edge devices deployed at the humanitarian and tactical edge have SWaP constraints. The scarcity of resources at the edge directly conflicts with storage waste in container images. For storage-limited edge devices, these constraints could prevent new capabilities from being deployed or require extensive manual developer effort to reduce container sizes.

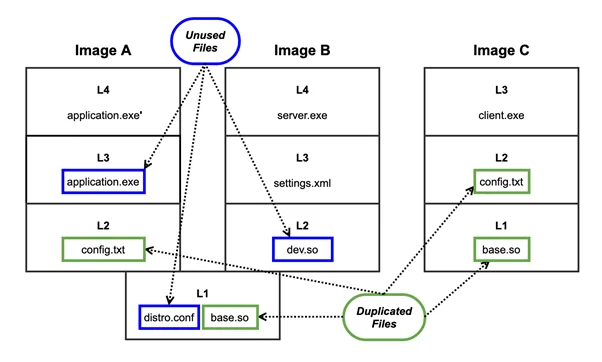

Container images are composed of layers, which are sets of files that are merged at runtime to form the container’s file system. One source of container storage waste is unused files. These are files that exist in container layers but are neither needed nor used during application runtime. One class of unused files is development files. It is common practice for container image builds to pull in development dependencies (such as compilers and headers) to build the end application for the container. These development dependencies are not required at runtime, so they remain unused unless the developer deliberately strips them out of the container.
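The merge can be modeled by applying each layer as a changeset on top of the ones below it. The following Python sketch follows the OCI image-spec whiteout convention (a `.wh.<name>` entry deletes `<name>` from lower layers, and `.wh..wh..opq` makes a directory opaque) but models each layer as a hypothetical `{path: size}` dict; real runtimes perform this merge with union filesystems such as overlayfs rather than in application code.

```python
import posixpath
from typing import Dict, List

WHITEOUT_PREFIX = ".wh."          # ".wh.<name>" deletes <name> from lower layers
OPAQUE_WHITEOUT = ".wh..wh..opq"  # hides everything lower layers put in this directory


def _remove_under(view: Dict[str, int], directory: str) -> None:
    prefix = directory.rstrip("/") + "/"
    for path in [p for p in view if p.startswith(prefix)]:
        del view[path]


def merge_layers(layers: List[Dict[str, int]]) -> Dict[str, int]:
    """Apply layers bottom (index 0) to top; return path -> size as the container sees it."""
    view: Dict[str, int] = {}
    for layer in layers:
        # Pass 1: whiteouts affect only lower layers, so apply them before this layer's files.
        for path in layer:
            directory, name = posixpath.split(path)
            if name == OPAQUE_WHITEOUT:
                _remove_under(view, directory)
            elif name.startswith(WHITEOUT_PREFIX):
                target = posixpath.join(directory, name[len(WHITEOUT_PREFIX):])
                view.pop(target, None)
                _remove_under(view, target)  # in case the target was a directory
        # Pass 2: regular files; a same-path file replaces the lower layer's copy.
        for path, size in layer.items():
            if not posixpath.basename(path).startswith(WHITEOUT_PREFIX):
                view[path] = size
    return view


layers = [
    {"/bin/sh": 100, "/etc/app.conf": 10, "/usr/share/doc/README": 50},  # base image
    {"/app/main": 200, "/usr/include/dev.h": 30},                      # build step
    {"/usr/share/doc/.wh.README": 0, "/etc/app.conf": 12},             # cleanup step
]
print(sorted(merge_layers(layers)))
# ['/app/main', '/bin/sh', '/etc/app.conf', '/usr/include/dev.h']
```

In this example the whiteout hides `/usr/share/doc/README` from the running container, yet its 50 bytes still ship in the base layer, and the build-only header `/usr/include/dev.h` stays in the final view unless a build step removes it.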

Another class of unused files is unused distribution files from the base image. Base images, such as Ubuntu and CentOS, can contain 100 MB or more of files before the end application is even added; while some of these files can be useful for development or debugging, many go unused. The last class of unused files is overwritten files: files added in a lower layer that are later replaced by a file with the same path in a higher layer. Because container layers are immutable and are composed to form the final container image, these overwritten files remain as hidden bloat in the image wherever it goes. While this bloat is fairly rare, poor container-build practices involving large files could yield significant storage waste.
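A rough way to find this hidden bloat is to look for paths that appear in more than one layer, since only the topmost copy is visible. The Python sketch below models layers as hypothetical `{path: size}` dicts (a real tool would read each layer’s tar archive); the file names and sizes are illustrative, and it ignores files hidden by whiteouts, which waste space the same way.

```python
from typing import Dict, List, Tuple


def overwritten_waste(layers: List[Dict[str, int]]) -> Tuple[int, List[str]]:
    """Sum the bytes of files that a later layer replaces at the same path.

    Layers are ordered bottom (index 0) to top and map path -> size in bytes.
    """
    top_layer_for: Dict[str, int] = {}
    for index, layer in enumerate(layers):
        for path in layer:
            top_layer_for[path] = index  # highest layer containing this path wins
    waste, shadowed = 0, []
    for index, layer in enumerate(layers):
        for path, size in layer.items():
            if top_layer_for[path] > index:  # a later layer replaces this copy
                waste += size
                shadowed.append(f"{path} (layer {index})")
    return waste, shadowed


layers = [
    {"/bin/sh": 1_000_000, "/opt/model.bin": 400_000_000},  # model copied early
    {"/app/main": 5_000_000},
    {"/opt/model.bin": 410_000_000},                         # updated model copied again
]
waste, shadowed = overwritten_waste(layers)
print(f"{waste / 1e6:.0f} MB hidden in {shadowed}")
# 400 MB hidden in ['/opt/model.bin (layer 0)']
```

The usual fix is at build time: copy large files once, in the layer where their final version is known, rather than patching them in later layers.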

A second source of container storage waste is duplicated files: identical files stored in different layers of multiple container images that are deployed on the same device. Storing each such file once, in a shared base layer, would avoid this waste, so much of the duplication can be prevented by building shared dependencies into a common base container image. However, more complex systems may deploy containers developed by different organizations that use different build practices, and adopting a shared base image could require modifying or integrating those practices, which can be very costly.
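The potential savings from file-level deduplication can be estimated by hashing file contents, so that identical bytes count once even when they sit in different layers or at different paths. The following Python sketch uses hypothetical image names and toy file contents. Identical *layers* are already stored once on disk because they share a content digest, so the savings measured here come from duplicates that live in different layers.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


def dedup_stats(images: Dict[str, Dict[str, bytes]]) -> Tuple[int, int]:
    """Return (stored_bytes, unique_bytes) for files across images on one device.

    `images` maps image name -> {path: file contents}. Files are duplicates when
    their contents hash to the same SHA-256 digest, even if their paths differ.
    """
    by_digest: Dict[str, List[int]] = defaultdict(list)
    for files in images.values():
        for data in files.values():
            by_digest[hashlib.sha256(data).hexdigest()].append(len(data))
    stored = sum(sum(sizes) for sizes in by_digest.values())
    unique = sum(sizes[0] for sizes in by_digest.values())
    return stored, unique


images = {
    "sensor-fusion": {"/usr/lib/libssl.so.3": b"L" * 1000, "/app/fusion": b"F" * 300},
    "comms-relay": {"/lib/libssl.so.3": b"L" * 1000, "/app/relay": b"R" * 200},
}
stored, unique = dedup_stats(images)
print(stored, unique, stored - unique)  # 2500 1500 1000
```

Here the two hypothetical images were built by different teams with different base images, so their identical library ends up at different paths in different layers, which layer-level sharing cannot catch.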

Some strategies exist for minimizing container sizes, such as using multi-stage builds to keep only runtime dependencies, starting from minimal base images (e.g., Alpine Linux, distroless images, and scratch images), and sharing base layers between images. In addition, a few tools, such as minicon and DockerSlim, analyze existing container images to discover which files are required for application operation and then remove any unnecessary files.
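Tools such as DockerSlim work roughly by running the application, recording which files it touches, and keeping only those. A simplified Python sketch of that final filtering step, assuming the set of accessed paths has already been collected by some file-access tracer (the tracing itself is out of scope); the paths and sizes are illustrative. The `keep_prefixes` parameter is a hypothetical escape hatch for files that tests may not exercise, which is the main risk of this approach: anything not touched during analysis is removed.

```python
from typing import Dict, Iterable, Set, Tuple


def minimize(image_files: Dict[str, int], accessed: Set[str],
             keep_prefixes: Iterable[str] = ()) -> Tuple[Dict[str, int], int]:
    """Keep files observed in use (plus protected paths); report bytes removed.

    image_files maps path -> size; `accessed` is the set of paths seen during
    representative test runs.
    """
    prefixes = tuple(keep_prefixes)

    def needed(path: str) -> bool:
        return path in accessed or path.startswith(prefixes)

    kept = {p: s for p, s in image_files.items() if needed(p)}
    removed_bytes = sum(s for p, s in image_files.items() if not needed(p))
    return kept, removed_bytes


files = {
    "/app/server": 5_000_000,
    "/lib/libc.so.6": 2_000_000,
    "/usr/bin/gcc": 30_000_000,          # build-only dependency
    "/usr/share/man/man1/ls.1": 10_000,  # unused distribution file
    "/etc/ssl/certs/ca.pem": 200_000,    # read only on rare code paths
}
kept, removed = minimize(files, {"/app/server", "/lib/libc.so.6"},
                         keep_prefixes=["/etc/ssl/"])
print(sorted(kept), removed)
# ['/app/server', '/etc/ssl/certs/ca.pem', '/lib/libc.so.6'] 30010000
```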

Update Size

In addition to being storage-limited, edge devices in tactical edge scenarios commonly operate over disconnected, intermittent, and limited (DIL) networks, which can be delayed, intermittently connected, or low-bandwidth. When operating on DIL networks, minimizing network traffic is essential both to save bandwidth for all required messaging and to keep communications concealed. While containers make updating applications simple, larger-than-necessary container images also produce larger container updates. For edge systems where application updates must be pushed to the edge, this larger update size can strain the DIL networks over which the updates must be transmitted.

Container images are built from layers, which can make updates faster because only the changed layers must be transmitted. If a base layer is updated, however, every layer built on top of it typically must be rebuilt and thus retransmitted. The same minimization strategies mentioned in the previous section also help address this update-size issue.
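The effect of layer reuse on a DIL link comes down to simple arithmetic: only layers whose digests are missing on the device need to be sent. The Python sketch below uses placeholder digests (such as `sha256:base-v1`) in place of real SHA-256 values, assumes a hypothetical 256 kbps link, and treats sizes as compressed layer sizes.

```python
from typing import List, Set, Tuple


def update_plan(new_layers: List[Tuple[str, int]], on_device: Set[str],
                link_kbps: float) -> Tuple[List[str], int, float]:
    """Return (layers to send, bytes to send, estimated seconds) for an image update.

    new_layers lists (digest, compressed size in bytes) for the new image;
    on_device holds digests the edge device already stores.
    """
    to_send = [(digest, size) for digest, size in new_layers if digest not in on_device]
    total = sum(size for _, size in to_send)
    seconds = total * 8 / (link_kbps * 1000)  # ignores protocol overhead and retries
    return [digest for digest, _ in to_send], total, seconds


on_device = {"sha256:base-v1", "sha256:deps-v1", "sha256:app-v1"}
# App-only change: only the top layer is new.
print(update_plan([("sha256:base-v1", 60_000_000), ("sha256:deps-v1", 40_000_000),
                   ("sha256:app-v2", 5_000_000)], on_device, link_kbps=256))
# (['sha256:app-v2'], 5000000, 156.25)
# Base-image change: every layer above it is rebuilt and must be resent.
print(update_plan([("sha256:base-v2", 60_000_000), ("sha256:deps-v2", 40_000_000),
                   ("sha256:app-v3", 5_000_000)], on_device, link_kbps=256))
# (['sha256:base-v2', 'sha256:deps-v2', 'sha256:app-v3'], 105000000, 3281.25)
```

Under these assumptions, the same application change costs under three minutes of link time when only the top layer changes, but close to an hour when the base image changes underneath it.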

Multi-Node Orchestration

Edge systems may operate over DIL networks where steady communication between nodes is not guaranteed. Nodes may disconnect and reconnect to the network randomly. The containers and their applications must be robust to network disconnects so that they can still work toward mission objectives. Likewise, the software that orchestrates containers must be robust to DIL network communication.

One weakness of existing solutions, such as Kubernetes, is that they are designed primarily for containers running on servers or in the cloud, where network connectivity is highly reliable. Kubernetes relies on a control plane, backed by an etcd key-value store, that coordinates all the nodes in the cluster. On a DIL network, a node that gets disconnected must be able to operate on its own as a single-node cluster and then merge back into the larger cluster when it reconnects. Kubernetes is not designed for this pattern: etcd uses the Raft consensus algorithm, which can elect a leader and commit changes only while a majority (quorum) of its members can communicate. An etcd cluster therefore needs at least three members to survive the loss of even one, and a disconnected node cannot keep updating cluster state on its own. New and innovative solutions are needed to achieve seamless container orchestration at the edge.
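The quorum arithmetic behind this limitation is short. The etcd documentation gives the quorum of an n-member cluster as floor(n/2) + 1; the Python sketch below (the function names are illustrative) shows why two members tolerate no more failures than one, and why an isolated node cannot keep updating shared cluster state by itself.

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft cluster such as etcd."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can be lost while a quorum remains."""
    return members - quorum(members)


def can_commit(reachable: int, members: int) -> bool:
    """A partition can keep updating cluster state only if it holds a quorum."""
    return reachable >= quorum(members)


for n in (1, 2, 3, 5):
    print(f"members={n} quorum={quorum(n)} tolerates={fault_tolerance(n)}")
# members=1 quorum=1 tolerates=0
# members=2 quorum=2 tolerates=0
# members=3 quorum=2 tolerates=1
# members=5 quorum=3 tolerates=2

# A 3-member cluster splits when one edge node loses its link:
print(can_commit(reachable=2, members=3))  # True: the connected pair continues
print(can_commit(reachable=1, members=3))  # False: the isolated node cannot
```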

Real-Time Requirements

Many edge-software use cases involve systems that have real-time requirements. Even if these systems could receive many benefits from containers, some aspects of container technology can be a barrier. Most container technology is built to work on top of the Linux kernel, which is not a real-time operating system (RTOS). In addition, containerization introduces additional runtime overhead, which may not be noticeable for powerful servers, but might be prohibitive for SWaP-constrained embedded real-time edge systems. Containers with real-time scheduling require coordination among the RTOS, the container runtime engine, and the container configurations, which makes this a challenging problem.

There is active research in this area, however, and some groups have already made progress. VxWorks, a popular RTOS from Wind River, announced support for OCI-compliant containers in 2021. Others have tried to adapt Docker to work with real-time Linux kernels, such as kernels built with the PREEMPT_RT patches maintained under the Linux Foundation’s Real-Time Linux project.

Portability

Containers do not virtualize the host operating system or the underlying hardware, so they cannot run seamlessly on every platform. For example, Docker can run natively on both Linux and Windows, but on macOS it works only by running a Linux virtual machine that hosts the Docker containers. Moreover, because a container runs directly on the host’s kernel and CPU, the binaries in a container image are built for a specific architecture (e.g., amd64 or arm64), and the image runs natively only on hosts with that architecture. For low-power edge devices that do not run Linux, containers are likely not compatible. However, they may still be useful in the CI/CD toolchain used to develop the software that runs on those devices.
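Whether an image can run natively therefore reduces to comparing the image’s target OS and CPU architecture with the host’s. The Python sketch below uses the standard `platform` module; the alias table is a partial, assumed mapping to OCI architecture names, and the check deliberately ignores user-mode emulation (e.g., QEMU registered through binfmt_misc), which can run foreign-architecture images at a substantial performance cost.

```python
import platform
from typing import Optional, Tuple

# Partial, assumed mapping from platform.machine() values to OCI/Go architecture names.
ARCH_ALIASES = {
    "x86_64": "amd64", "amd64": "amd64",   # Linux/macOS report x86_64; Windows reports AMD64
    "aarch64": "arm64", "arm64": "arm64",  # Linux reports aarch64; macOS reports arm64
    "armv7l": "arm",
}


def host_platform() -> Tuple[str, str]:
    """Describe this host as (os, architecture) using OCI naming."""
    machine = platform.machine().lower()
    return platform.system().lower(), ARCH_ALIASES.get(machine, machine)


def runs_natively(image_os: str, image_arch: str,
                  host: Optional[Tuple[str, str]] = None) -> bool:
    """True if an image built for (image_os, image_arch) runs without a VM or emulation."""
    host_os, host_arch = host if host is not None else host_platform()
    return image_os == host_os and image_arch == host_arch


print(runs_natively("linux", "arm64", host=("linux", "arm64")))   # True
print(runs_natively("linux", "amd64", host=("linux", "arm64")))   # False: wrong CPU architecture
print(runs_natively("linux", "arm64", host=("darwin", "arm64")))  # False: needs a Linux VM on macOS
```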

Security

While containers provide some security benefits, containerization also has security downsides and concerns. All containers on a host share the same kernel, so a rogue process in one container could trigger a kernel panic and take down the host machine. In addition, user namespaces are often not enabled, in which case the root user inside a container is the same as root on the host: if an application running as root breaks out of the container, it has root privileges on the host machine. Many containers are built to run as the “root” user for ease or convenience, which compounds this risk. Finally, containers run on a container runtime engine (e.g., the Docker runtime or runc), so the runtime engine can be a single point of failure if it is compromised.
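Some of these risks can be caught before deployment by checking container configurations. The Python sketch below inspects a container spec using field names from the Kubernetes container `securityContext` API (`privileged`, `runAsNonRoot`, `runAsUser`, `allowPrivilegeEscalation`, `readOnlyRootFilesystem`); the rules themselves are a minimal, assumed policy, not a substitute for image scanners or a full hardening process.

```python
from typing import Dict, List


def lint_security_context(container: Dict) -> List[str]:
    """Flag risky settings in a Kubernetes-style container spec (minimal, assumed policy)."""
    ctx = container.get("securityContext", {})
    findings = []
    if ctx.get("privileged", False):
        findings.append("privileged: container gets broad access to host devices")
    # Without runAsNonRoot (or with UID 0), the process may run as root.
    if ctx.get("runAsUser") == 0 or not ctx.get("runAsNonRoot", False):
        findings.append("may run as root: set runAsNonRoot and a non-zero runAsUser")
    if ctx.get("allowPrivilegeEscalation", True):
        findings.append("privilege escalation not explicitly disabled")
    if not ctx.get("readOnlyRootFilesystem", False):
        findings.append("writable root filesystem")
    return findings


hardened = {"name": "telemetry", "securityContext": {
    "runAsNonRoot": True, "runAsUser": 1000,
    "allowPrivilegeEscalation": False, "readOnlyRootFilesystem": True}}
legacy = {"name": "legacy-app"}  # no securityContext at all

print(lint_security_context(hardened))     # []
print(len(lint_security_context(legacy)))  # 3
```

Configuration checks like these reduce the damage a breakout can do; they do not address shared-kernel risks such as a kernel panic triggered from inside a container.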

The Future of Containerization at the Edge

Containerization brings many benefits to edge-computing use cases. However, there are still challenges and areas for improvement, such as

- container build and deployment processes with built-in container minimization

- container orchestration that is robust to DIL networks

- container runtime engines for microcontrollers and Internet of Things (IoT) devices

- integration of container runtimes with real-time operating systems

To help address the first challenge, the SEI is currently researching strategies for improving single-container minimization technologies and combining them with cross-container file deduplication. The goal of the research is to create a minimization technology that integrates seamlessly into existing CI/CD processes and enables smaller, more secure containers for resource-constrained edge deployments.

Additional Resources

Read the SEI blog post, Operating at the Edge.

Read the SEI blog post, 11 Leading Practices When Implementing a Container Strategy.

Read the SEI blog post, Virtualization via Containers.

Read the SEI blog post, 7 Quick Steps to Using Containers Securely.

Read other SEI blog posts about edge computing.
