Software Engineering for Machine Learning: Characterizing and Detecting Mismatch in Machine-Learning Systems

PUBLISHED IN

Artificial Intelligence Engineering

There is growing interest today in incorporating artificial intelligence (AI) and machine-learning (ML) components into software systems. This interest results from the increasing availability of frameworks and tools for developing ML components, as well as their promise to improve solutions to data-driven decision problems. In industry and DoD alike, putting systems that include ML components into production can be challenging. Developing an ML system is more than just building an ML model: The model must be tested for production readiness, integrated into larger systems, monitored at run time, and then evolved as data changes and redeployed. Because of this complexity, software engineering for machine learning (SE4ML) is emerging as a field of interest.

This blog post describes how we at the SEI are creating and assessing empirically validated practices to guide the development of ML-enabled systems as part of AI engineering—an emergent discipline focused on developing tools, systems, and processes to enable the application of artificial intelligence in real-world contexts. AI engineering comprises development and application of practices and techniques that ensure development and adoption of transformative AI solutions that are human-centered, robust, secure, and scalable.

An ML-enabled system is a software system that relies on one or more ML components to provide capabilities. ML-enabled systems must be engineered such that

- Integration of ML components is straightforward.

- The system is instrumented for runtime monitoring of ML components and production data.

- The cycle of training and retraining these systems is accelerated.

Many existing software engineering practices apply directly to these requirements, but these practices typically are not used in data science, the field of study that focuses on development of ML algorithms and models that are incorporated into software systems. Other software engineering practices will require adaptation or extension to deal with ML components.

ML Mismatch

In a blog post we published last June, Detecting Mismatches in Machine-Learning Systems, we observed that the ability to integrate ML components into applications is limited by, among other factors, mismatches between different system components. One reason is that development and deployment of ML-enabled systems involves three distinct disciplines: data science, software engineering, and operations. The distinct perspectives of these disciplines, when misaligned, cause ML mismatches that can result in failed systems.

For example, if an ML model is trained on data that is different from data in the production environment, the performance of the ML component in the field will be reduced dramatically. We at the SEI have been working to develop new ways to detect and prevent mismatches in ML-enabled systems. Our goal is to ensure that ML can be adopted with greater success and achieve the functional improvements that motivate inclusion of ML components in systems.

What makes ML components different from the traditional components in software systems is that they are highly data dependent. Their performance in production thus depends on how similar the production data is to the data that was used to train the ML model. A gap between the two is often called training-serving skew. To succeed, ML-enabled systems must provide a way to know when model performance is degrading, and they must provide enough information to effectively retrain the models when it does. The more comprehensive and detailed the information gathered, the quicker a model can be retrained and redeployed.
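As a rough illustration of what such a check could look like, the following Python sketch compares a training sample of one numeric feature with a production sample using the population stability index (PSI). PSI is a swapped-in, general-purpose drift statistic, not a technique taken from our study. The function name, the equal-width binning, and the 0.2 alert threshold are all assumptions made for this example.

```python
import math
from typing import List, Sequence


def population_stability_index(train: Sequence[float],
                               prod: Sequence[float],
                               bins: int = 10) -> float:
    """PSI for one numeric feature, using equal-width bins over the training range."""
    lo, hi = min(train), max(train)
    # Fall back to a width of 1.0 when every training value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Production values outside the training range fall into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # floor that avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(train), proportions(prod)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical alert threshold; a real system would tune this per feature.
PSI_ALERT_THRESHOLD = 0.2

if __name__ == "__main__":
    training_sample = [i / 100 for i in range(100)]
    production_sample = [0.5 + v for v in training_sample]  # shifted distribution
    psi = population_stability_index(training_sample, production_sample)
    if psi > PSI_ALERT_THRESHOLD:
        print("possible training-serving skew; consider retraining")
```

A check of this kind only flags that the input data has moved. It does not measure model accuracy, which still requires labeled production data.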

Characterizing and Detecting Mismatch in ML-Enabled Systems

Here are some examples of mismatch in ML-enabled systems:

- computing-resource mismatch—poor system performance because the computing resources that are required to execute the model are not available in the production environment

- data-distribution mismatch—poor model accuracy because the training data doesn’t match the production data

- API mismatch—the need to generate a lot of glue code because the ML component is expecting different inputs and outputs than what is provided by the system in which it is integrated

- test-data mismatch—inability of software engineers to properly test a component because they don’t have access to test data or don’t fully understand the component or know how to test it

- monitoring mismatch—inability of the monitoring tools in the production environment to collect ML-relevant metrics, such as model accuracy

As part of our work on SE4ML, we developed a set of machine-readable descriptors for elements of ML-enabled systems that externalize and codify the assumptions made by different system stakeholders. The goal for the descriptors is to support automated detection of mismatches at both design time and run time.
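To make the idea concrete, here is a minimal Python sketch of design-time mismatch detection between two descriptors. It is not the descriptor format developed in our work: the class names, the fields (such as `required_memory_mb` and `collects_model_accuracy`), and the rules in `detect_mismatches` are hypothetical and chosen only to mirror the mismatch examples listed above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrainedModelDescriptor:
    # Hypothetical attributes that a data-science team might record.
    name: str
    required_memory_mb: int
    requires_gpu: bool


@dataclass
class ProductionEnvironmentDescriptor:
    # Hypothetical attributes that operations personnel might record.
    available_memory_mb: int
    has_gpu: bool
    collects_model_accuracy: bool


def detect_mismatches(model: TrainedModelDescriptor,
                      env: ProductionEnvironmentDescriptor) -> List[str]:
    """Compare the two descriptors and report each violated assumption."""
    findings = []
    if model.required_memory_mb > env.available_memory_mb:
        findings.append(
            f"computing-resource mismatch: {model.name} needs "
            f"{model.required_memory_mb} MB, environment offers "
            f"{env.available_memory_mb} MB")
    if model.requires_gpu and not env.has_gpu:
        findings.append(
            f"computing-resource mismatch: {model.name} requires a GPU")
    if not env.collects_model_accuracy:
        findings.append(
            "monitoring mismatch: model accuracy is not collected in production")
    return findings


if __name__ == "__main__":
    model = TrainedModelDescriptor("classifier", 4096, True)
    env = ProductionEnvironmentDescriptor(2048, False, False)
    for finding in detect_mismatches(model, env):
        print(finding)
```

Because each team fills in only its own descriptor, a comparison like this can run at handoff time, before anyone discovers the problem in production.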

As an example of how we could use these descriptors, imagine that multiple stakeholders are part of the ML-enabled system development. A project or product team would create the descriptor for the model task and purpose; a data-science team would create the descriptors for the trained model and the training data; data owners would create the descriptor for the raw data to train the model; operations personnel would create descriptors for the production environment and the production data; and software engineers would create the descriptors for the development environment.

As parts of the system are developed and the trained model is handed off from one team to another, these descriptors would ensure that all the information needed to avoid mismatch is available, explicit, and visible to all stakeholders. For program-office personnel, the descriptors would also provide examples of information to request or requirements to impose.

Study to Characterize and Codify ML Mismatches

To inform the development of these machine-readable descriptors, we conducted a study to learn more about ML mismatches. The study had two phases. In Phase 1, we interviewed practitioners to elicit mismatches and consequences. We asked two questions: “Can you tell us an example of a mismatch that occurred because you or someone you worked with made an incorrect assumption?” and “What information should have been shared that would have avoided that mismatch?” After we grouped the identified mismatches into categories, we followed with a practitioner survey that assessed the importance of sharing information in each of these categories to avoid mismatches. In parallel, we conducted a literature review in search of documented successful practices for developing ML systems, looking in particular for reports of effective information sharing among disparate stakeholders.

Phase 2 of the study consisted of mapping the validated mismatches that we identified in the interviews to the system attributes identified in the literature review that would enable detection of each mismatch. When there was not a clear mapping between a mismatch and an attribute, or vice versa, we conducted a gap analysis to identify additional attributes that would be necessary for detection. Finally, to support our goal of automation, we codified the resulting attributes into descriptors specified using JSON schema.
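The sketch below suggests how a descriptor codified this way might be checked automatically. The schema fragment is hypothetical: attribute names such as `model_name` and `input_format` are invented for illustration and are not taken from the descriptors in our study. The checker handles only the `required` and `type` keywords using the Python standard library, so it is a simplified stand-in for a full JSON Schema validator.

```python
import json
from typing import List

# Hypothetical schema fragment for a trained-model descriptor.
SCHEMA = json.loads("""
{
  "type": "object",
  "required": ["model_name", "input_format", "required_memory_mb"],
  "properties": {
    "model_name": {"type": "string"},
    "input_format": {"type": "string"},
    "required_memory_mb": {"type": "integer"}
  }
}
""")

# Python types that correspond to the JSON Schema type names used above.
JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
}


def check_descriptor(descriptor: dict, schema: dict) -> List[str]:
    """Report missing required attributes and attributes of the wrong type."""
    problems = []
    for key in schema.get("required", []):
        if key not in descriptor:
            problems.append(f"missing attribute: {key}")
    for key, rule in schema.get("properties", {}).items():
        if key not in descriptor:
            continue
        value = descriptor[key]
        expected = rule["type"]
        # bool is a subclass of int in Python, so reject it for numeric types.
        is_bool_as_number = (isinstance(value, bool)
                             and expected in ("integer", "number"))
        if is_bool_as_number or not isinstance(value, JSON_TYPES[expected]):
            problems.append(f"wrong type for {key}: expected {expected}")
    return problems


if __name__ == "__main__":
    incomplete = {"model_name": "classifier", "required_memory_mb": "512"}
    for problem in check_descriptor(incomplete, SCHEMA):
        print(problem)
```

A missing attribute reported by a check like this corresponds to information that one stakeholder assumed and never wrote down, which is the kind of gap the descriptors are meant to expose.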

Phase 1: Categorizing Mismatches

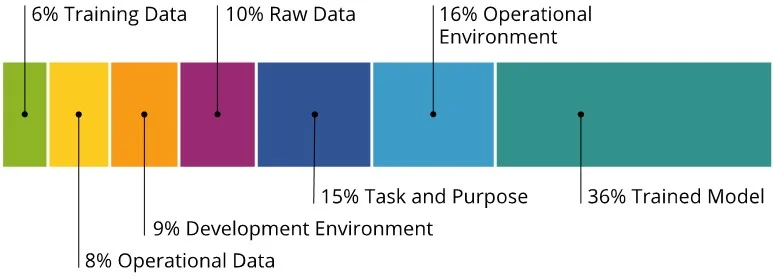

We conducted 20 interviews and identified 140 mismatches, which yielded 232 distinct examples of information that led to a mismatch because it was not effectively communicated. We grouped the mismatches into the categories shown in Figure 1.

Most identified mismatches refer to incorrect assumptions about the trained model (36 percent), which is the model trained by data scientists that is passed to software engineers for integration into a larger system. The next category is operational environment (16 percent), which refers to the computing environment in which the trained model executes (i.e., the model-serving or production environment).

The categories that follow are task and purpose (15 percent), which are the expectations and constraints for the model, and raw data (10 percent), which is the operational or acquired data from which training data is derived. Finally, in smaller proportions, are the development environment (9 percent) used by software engineers for model integration and testing; the operational data (8 percent), which is the data processed by the model during operations; and the training data (6 percent) used to train the model.

Here are some observations about the findings in these categories:

- trained model—Mismatches in this category split evenly between information related to test cases and test data, and lack of information about the API and specifications. One participant said, “I had many attempts, but was never able to get from the [data scientists] a description of what components exist, what are their specifications, what would be some reasonable test we could run against them so we could reproduce all their results.” In this example, the participant notes the absence of a formal or even informal specification of the model that would help integrate that model into the system.

- operational environment—Many of the mismatches in this category were related to runtime metrics and data. The model was put into operation, and the operations staff did not know what they were supposed to monitor. The data scientists had assumed that the operations staff would know how to do the monitoring and that they were collecting the needed runtime metrics and data.

- task and purpose—This category is related to requirements: What must be communicated between project owners and data scientists so that data scientists build the model that project owners expect? Twenty-nine percent of these mismatches had to do with unclear business goals. One data scientist said, “It feels like the most broken part of the process because the task that comes to a data scientist frequently is, ‘Hey, we have a lot of data. Go do some data science to it…like go!’ And then that leaves a lot of the problem specification task in the hands of the data scientist.” Again, sharing complete information from the beginning would eliminate this kind of inefficiency.

- raw data—These are the datasets that data scientists or data engineers transform into training data. Most raw-data mismatches were associated with a lack of metadata (how the data was collected, when it was collected, how it was distributed, its geographic location, and the time frames during which it was collected) and a lack of descriptions of data elements (field names, descriptions, values, and the meaning of missing or null values). One of our participants said, “Whenever they had data documentation available, that was amazing because you can immediately reference everything, bring it together, know what’s missing, know how it all relates. In the absence of that, it gets incredibly difficult because you never know exactly what you’re seeing; like is this normal? Is it not normal? Can I remove outliers? What am I lacking? What do some of these categorical variables actually mean?” Access to information of this kind helps the data scientist do a better job, and many participants in our study said that such information is not often shared.

- development environment—These mismatches most often had to do with programming languages. Mismatches can result from data scientists failing to share information about the programming language that was used to develop the model or software engineers failing to share information about the programming language used in the actual system. In one instance, a group was trying to reproduce a model in Python that had been developed in R, and then realized that there was an underlying, low-level difference between the way the two different libraries were handling floating-point numbers, resulting in errors. The ideal environment for an ML system should require no porting between different programming languages. If porting is necessary, however, this need should be shared from the outset so that the porting step can be factored into the project schedule.

- operational data—Most mismatches here stemmed from lack of operational data statistics—the data that the data scientist trained the model with did not accurately represent the data in the operational environment.

- training data—Most of these mismatches arose from lack of details of data preparation pipelines to derive training data from raw data. In one example, a group developed an architecture for an ML pipeline, but the pipeline went into feature engineering as well, which precluded the team from exploring feature-engineering alternatives and locked them into a specific architecture. This example illustrates the need for a clean separation between the data pipeline and the model component.
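The porting problem described under development environment suggests one inexpensive safeguard: compare the outputs of the original and the ported model on the same inputs, using an explicit numeric tolerance. The Python sketch below assumes that predictions from both implementations are available as lists of floats. The function name and the default tolerances are illustrative choices, not values reported by participants in our study.

```python
import math
from typing import List, Sequence, Tuple


def compare_predictions(reference: Sequence[float],
                        ported: Sequence[float],
                        rel_tol: float = 1e-9,
                        abs_tol: float = 1e-12) -> List[Tuple[int, float, float]]:
    """Return (index, reference, ported) for every pair that differs beyond tolerance."""
    if len(reference) != len(ported):
        raise ValueError("prediction lists must have the same length")
    return [
        (i, r, p)
        for i, (r, p) in enumerate(zip(reference, ported))
        if not math.isclose(r, p, rel_tol=rel_tol, abs_tol=abs_tol)
    ]


if __name__ == "__main__":
    # Hypothetical outputs: the first pair differs only by floating-point rounding.
    original_outputs = [0.1 + 0.2, 0.75]
    ported_outputs = [0.3, 0.7501]
    for index, ref, new in compare_predictions(original_outputs, ported_outputs):
        print(f"prediction {index} differs: {ref} vs {new}")
```

Stating the tolerance in a shared descriptor, rather than leaving it implicit, tells software engineers how closely a reimplementation is expected to reproduce the data scientists' results.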

To validate these findings, we conducted a survey where the main question was, “How important is it for you as a practitioner to be aware of this information in order to avoid mismatch?” Although we had only 31 responses to our survey, fewer than we wanted, the survey clearly affirmed that the information that we had gathered was representative of information that these respondents thought should be shared. Different information was deemed more or less important depending on the role of the respondent (data scientist, software engineer, or operations staff member), but taken as a whole, the results of the survey affirm the importance of communication to avoid mismatches.

A future blog post will report in detail on Phase 2 of our study. We will also introduce our follow-on work:

- development of an automated mismatch-detection tool based on the descriptors

- extension of the descriptors to support ML component testing

- development of an ML Component Testing Assistant that leverages these extensions.

Challenges in Deploying, Operating, and Sustaining Machine-Learning-Enabled Systems

Empirically validated practices and tools to support software engineering of ML-enabled systems are still in their infancy. In this post, we presented the results of Phase 1 of our study to understand the types of mismatch that occur in the development and deployment of ML-enabled systems caused by incorrect assumptions made by different stakeholders. Understanding how to deploy, operate, and sustain these models remains a challenge. The seven categories of ML mismatch that we identified, along with their 34 subcategories, contribute to codifying the nature of the challenges. The Phase 1 results of our study demonstrate that improved communication and automation of ML-mismatch awareness and detection can help improve software engineering of ML-enabled systems.

Our goal is to make the descriptors publicly available and create a community around tool development and descriptor extensions to improve the state of the engineering practices for developing, deploying, operating, and evolving ML-enabled systems.

Additional Resources

Read the SEI blog post, Detecting Mismatches in Machine-Learning Systems.

Read the paper, Characterizing and Detecting Mismatch in Machine-Learning-Enabled Systems by Grace A. Lewis, Stephany Bellomo, and Ipek Ozkaya.

Watch the SEI webinar, Software Engineering for Machine Learning.

Read more SEI blog posts about artificial intelligence and machine learning.

Join the National AI Engineering Initiative.
