Managing Software Complexity in Models

PUBLISHED IN

Software Architecture

By Julien Delange

Member of the Technical Staff

Software Solutions Division

For decades, safety-critical systems have become increasingly software intensive in every domain: avionics, aerospace, automobiles, and medicine. Software acquisition is now one of the biggest production costs for safety-critical systems. These systems are made up of many software and hardware components, with software executing on different hardware components that are interconnected using various buses and protocols. For instance, cars are now equipped with more than 70 electronic control units (ECUs) interconnected with different buses and require about 100 million source lines of code (SLOC) to provide driver assistance, entertainment systems, and all necessary safety features. This blog post discusses the impact of complexity in software models and presents our tool that produces complexity metrics from software models.

The Challenges of Increasing Use of Software

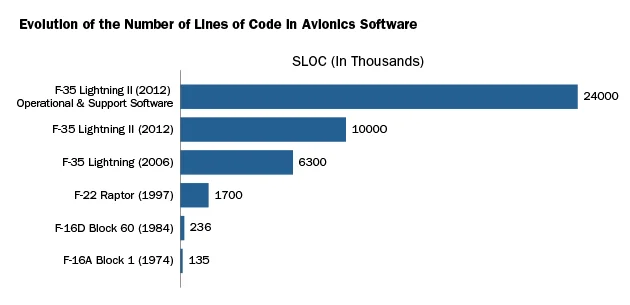

Figure 1 illustrates the increasing use and complexity of software in avionics: from 135 KSLOC to 24,000 KSLOC in the last 40 years.

Figure 1: Evolution of the Number of Lines of Code in Avionics Software
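To put the growth shown in Figure 1 in perspective, the short Python sketch below computes the compound annual growth rate implied by the two endpoints. It assumes steady exponential growth between 135 KSLOC and 24,000 KSLOC over 40 years, which the figure itself does not claim; actual growth was uneven.

```python
import math

# Endpoints quoted in the text (avionics software size, in KSLOC).
start_ksloc = 135
end_ksloc = 24_000
years = 40

growth_factor = end_ksloc / start_ksloc                   # overall multiplier
annual_rate = growth_factor ** (1 / years) - 1            # compound annual growth rate
doubling_years = math.log(2) / math.log(1 + annual_rate)  # years for size to double

print(f"growth factor: {growth_factor:.1f}x")
print(f"annual growth: {annual_rate:.1%}")
print(f"doubling time: {doubling_years:.1f} years")
```

Under that assumption, the code base grew roughly 178-fold, or about 13.8 percent per year, doubling roughly every 5.4 years.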

This increasing use of software brings new challenges for safety-critical systems. In particular, having so many interconnected components makes system design, implementation, testing, and validation more difficult. Because the functions of safety-critical systems operate at different criticality levels (such as the Design Assurance Levels of DO-178C), system designers must ensure that low-criticality functions do not affect those at a higher criticality (e.g., so that the entertainment system of your car does not impact the cruise control or brakes). Despite rigorous development methods, safety-critical systems still experience safety and security issues: in July 2015, almost 2 million cars were recalled because of software issues (1.4 million for Chrysler and more than 400,000 for Ford).
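One way to make the isolation requirement concrete is to check every data flow between functions against their assurance levels. The Python sketch below is a hypothetical illustration, not part of the SEI tooling: it orders the five DO-178C levels from A (most critical) to E (least critical) and flags any flow in which a lower-criticality function feeds data into a higher-criticality one. The function names and level assignments are invented for the example.

```python
from dataclasses import dataclass

# DO-178C Design Assurance Levels, from most critical (A) to least critical (E).
DAL_ORDER = "ABCDE"

@dataclass(frozen=True)
class Function:
    name: str
    dal: str  # one of "A".."E"

def more_critical(a: Function, b: Function) -> bool:
    """True if function a has a strictly higher criticality than function b."""
    return DAL_ORDER.index(a.dal) < DAL_ORDER.index(b.dal)

def risky_flows(flows):
    """Return the (source, destination) flows in which a lower-criticality
    function feeds a higher-criticality one; a designer would need to
    isolate or justify each of them."""
    return [(src, dst) for src, dst in flows if more_critical(dst, src)]

# Hypothetical functions and levels, for illustration only.
entertainment = Function("entertainment", "E")
navigation = Function("navigation", "C")
flight_control = Function("flight_control", "A")

flows = [
    (entertainment, navigation),     # E feeds C: flagged
    (navigation, flight_control),    # C feeds A: flagged
    (flight_control, entertainment), # A feeds E: allowed
]
for src, dst in risky_flows(flows):
    print(f"{src.name} (DAL {src.dal}) -> {dst.name} (DAL {dst.dal})")
```

A real analysis would also have to consider shared resources such as processors, memory, and buses, not only explicit data flows.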

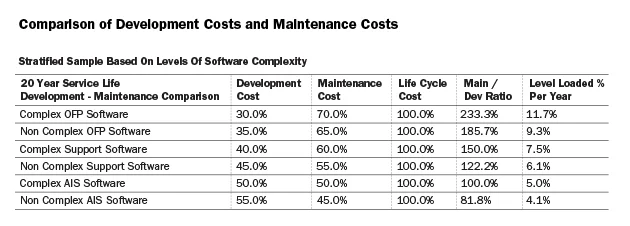

Software complexity has a large impact on software acquisition costs, for both development and maintenance activities. Some studies suggest that software complexity can increase maintenance costs by 25 percent, which is significant since maintenance represents about 70 percent of total acquisition costs (cf. Figure 2). Thus, on a $4.5 million project, where maintenance accounts for roughly $3.15 million, managing software complexity could save at least $750,000 on maintenance activities, or about 16.7 percent of total lifecycle costs. This is a fairly low estimate, especially for safety-critical systems, where maintenance requires more testing and other verification activities than in most other domains. For this reason, being able to measure and keep software complexity under control is of paramount importance.

Figure 2: Comparison of Development Costs and Maintenance Costs
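The cost estimate can be reproduced with a few lines of Python. The sketch assumes, as a simplification, that the 25 percent complexity overhead applies directly to the maintenance budget; under that assumption the savings come to $787,500 (17.5 percent of the total), so the $750,000 figure (about 16.7 percent) reads as a conservative floor.

```python
# Figures quoted in the text.
total_cost = 4_500_000      # lifecycle (acquisition) cost of the example project, USD
maintenance_share = 0.70    # maintenance is about 70% of total cost
complexity_overhead = 0.25  # complexity can add about 25% to maintenance cost

maintenance_cost = total_cost * maintenance_share
# Simplifying assumption: the 25% applies directly to the maintenance budget.
potential_savings = maintenance_cost * complexity_overhead

print(f"maintenance cost:  ${maintenance_cost:,.0f}")
print(f"potential savings: ${potential_savings:,.0f} "
      f"({potential_savings / total_cost:.1%} of total)")
print(f"quoted floor:      $750,000 ({750_000 / total_cost:.1%} of total)")
```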

The work of several researchers (for example, McCabe, Halstead, and Zage) has focused on measuring software complexity. These complexity metrics quantify not only overall software complexity but also how complexity evolves over the lifecycle (e.g., complexity increasing as pieces of code are updated). This work has mostly targeted specific third-generation programming languages, such as C and Java. Safety-critical systems, however, are moving toward model-based development, which relies on several different tools and languages. System behavior is now implemented using tools such as ANSYS SCADE or MATLAB Simulink, and implementation code is generated automatically from models. For example, the control and display system of the Airbus A380 was designed with SCADE. However, the use of models brings new challenges to addressing complexity-related issues:

- As modeling languages rely on different semantics, we need to adapt and tailor existing complexity metrics to modeling languages.

- Modeling tools need to provide a user-friendly environment that helps users make good design choices and avoid design patterns that might incur additional complexity.

Model-Based Complexity Metrics

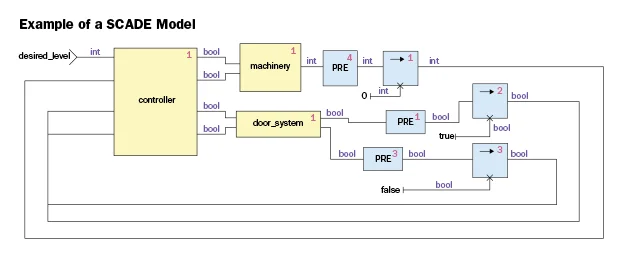

Modeling languages use different paradigms and notations from traditional programming languages. In addition, modeling languages use graphical representations, whereas traditional languages use textual notation. Models are composed of blocks that exchange data through connections, as shown in Figure 3, and blocks can also define their behavior using state machines. We can interpret a block as a function (or procedure) and connections as function calls (exchanges of data). Mapping programming concepts such as functions, function calls, and data types from third-generation programming languages to modeling notations is a first step toward adapting the complexity metrics created for specific programming languages to model-based development.

Figure 3: Example of a SCADE Model
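The mapping from blocks and connections to functions and calls can be sketched as a small data structure. The Python below is a hypothetical illustration, not SCADE's API or the plugin's internal representation: the names Block, Connection, and Model are invented, and the code treats a connection from a producer block to a consumer block as a "call" from producer to consumer, which is one of several reasonable readings.

```python
from dataclasses import dataclass, field

@dataclass
class Block:
    """A model block, read as a function: input ports are its parameters,
    output ports are its results."""
    name: str
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)

@dataclass(frozen=True)
class Connection:
    """Data flowing from one block's output port to another block's input
    port, read as a function call (an exchange of data)."""
    src: str
    src_port: str
    dst: str
    dst_port: str

@dataclass
class Model:
    blocks: dict[str, Block] = field(default_factory=dict)
    connections: list[Connection] = field(default_factory=list)

    def add(self, block: Block) -> None:
        self.blocks[block.name] = block

    def connect(self, src: str, src_port: str, dst: str, dst_port: str) -> None:
        # Reject unknown ports, as a compiler rejects a call with a bad parameter.
        if src_port not in self.blocks[src].outputs:
            raise ValueError(f"{src} has no output {src_port}")
        if dst_port not in self.blocks[dst].inputs:
            raise ValueError(f"{dst} has no input {dst_port}")
        self.connections.append(Connection(src, src_port, dst, dst_port))

    def callees(self, name: str) -> set[str]:
        """Blocks that consume data produced by `name` (its 'calls')."""
        return {c.dst for c in self.connections if c.src == name}

# Hypothetical three-block model: Sensor -> Controller -> Actuator.
m = Model()
m.add(Block("Sensor", outputs=["speed"]))
m.add(Block("Controller", inputs=["speed"], outputs=["torque"]))
m.add(Block("Actuator", inputs=["torque"]))
m.connect("Sensor", "speed", "Controller", "speed")
m.connect("Controller", "torque", "Actuator", "torque")
print(m.callees("Sensor"))  # {'Controller'}
```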

With this mapping in mind, we tailored several existing complexity metrics and implemented a plugin in the SCADE modeling framework to evaluate model complexity. We implemented the following complexity metrics:

- The McCabe complexity metric measures the number of distinct data flow paths in a model. More data flow paths mean more dependencies between inputs and outputs: the more paths there are, the more component interfaces are connected. Keeping this value from increasing therefore keeps component and interface dependencies under control.

- The Halstead metric uses the number of operators and operands in a program to compute its volume, difficulty, and effort. In a modeling language, operators are represented by components, and operands are represented by interfaces. The Halstead metric is a good way to estimate the complexity within a component (also known as internal complexity).

- Zage provides internal and external complexity metrics. The internal complexity metric uses factors such as the number of invocations, calls to inputs/outputs, and uses of complex data types. The external complexity metric depends on the number of inputs, outputs, and fan-in or fan-out. The external complexity metric is useful when looking at a component as a black box: one can then track the complexity related to this component without having to consider its implementation.
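For the McCabe metric, a minimal sketch of cyclomatic complexity over a data flow graph follows. It assumes that blocks are graph nodes and connections are edges, and applies McCabe's standard formula V(G) = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components. The plugin's exact mapping onto SCADE constructs may differ.

```python
def cyclomatic_complexity(nodes, edges):
    """McCabe's V(G) = E - N + 2P, with blocks as nodes, connections as edges,
    and P the number of connected components (edge direction ignored)."""
    adjacency = {n: set() for n in nodes}
    for src, dst in edges:
        adjacency[src].add(dst)
        adjacency[dst].add(src)

    # Count connected components with an iterative depth-first search.
    seen, components = set(), 0
    for start in nodes:
        if start in seen:
            continue
        components += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(adjacency[node] - seen)

    return len(edges) - len(nodes) + 2 * components

# Hypothetical model: the input splits into two paths that merge again.
nodes = ["In", "Filter", "Limit", "Merge", "Out"]
edges = [("In", "Filter"), ("In", "Limit"),
         ("Filter", "Merge"), ("Limit", "Merge"), ("Merge", "Out")]
print(cyclomatic_complexity(nodes, edges))  # 2: two independent paths

# One more connection adds one more independent path.
print(cyclomatic_complexity(nodes, edges + [("In", "Merge")]))  # 3
```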
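For the Halstead metric, the standard formulas are vocabulary n = n1 + n2, length N = N1 + N2, volume V = N log2 n, difficulty D = (n1 / 2)(N2 / n2), and effort E = D × V, where n1 and n2 count distinct operators and operands and N1 and N2 count their total occurrences. The sketch below applies them under the mapping described in the text (components as operators, interfaces as operands); how occurrences would be counted in a real SCADE model is an assumption here.

```python
import math

def halstead(operators, operands):
    """Halstead volume, difficulty, and effort from occurrence lists.

    operators: one entry per component occurrence (Halstead operators)
    operands:  one entry per interface occurrence (Halstead operands)
    """
    if not operators or not operands:
        raise ValueError("need at least one operator and one operand")
    n1, n2 = len(set(operators)), len(set(operands))  # distinct counts
    N1, N2 = len(operators), len(operands)            # total occurrences
    volume = (N1 + N2) * math.log2(n1 + n2)
    difficulty = (n1 / 2) * (N2 / n2)
    return {"volume": volume, "difficulty": difficulty,
            "effort": difficulty * volume}

# Hypothetical component: Add, Gain, and Saturation blocks over four interfaces.
operators = ["Add", "Gain", "Add", "Saturation"]
operands = ["a", "b", "k", "sum", "a", "sum"]
metrics = halstead(operators, operands)
print({name: round(value, 2) for name, value in metrics.items()})
```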
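For the Zage metrics, the commonly cited form is De = e1(inflows × outflows) + e2(fan-in × fan-out) for external complexity and Di = i1(invocations) + i2(complex data type uses) + i3(I/O accesses) for internal complexity, where e1, e2, i1, i2, and i3 are weighting factors. The sketch below defaults every weight to 1, which is an assumption; a project would calibrate them, and the plugin may count these factors differently.

```python
from dataclasses import dataclass

@dataclass
class ComponentCounts:
    """Counts gathered for one component (field names are hypothetical)."""
    # External, black-box factors
    inflows: int
    outflows: int
    fan_in: int
    fan_out: int
    # Internal factors
    invocations: int        # calls to other components
    io_accesses: int        # calls to inputs/outputs
    complex_data_uses: int  # uses of complex data types

def zage_external(c: ComponentCounts, e1: float = 1.0, e2: float = 1.0) -> float:
    """De = e1 * (inflows * outflows) + e2 * (fan_in * fan_out)."""
    return e1 * c.inflows * c.outflows + e2 * c.fan_in * c.fan_out

def zage_internal(c: ComponentCounts, i1: float = 1.0, i2: float = 1.0,
                  i3: float = 1.0) -> float:
    """Di = i1 * invocations + i2 * complex_data_uses + i3 * io_accesses."""
    return i1 * c.invocations + i2 * c.complex_data_uses + i3 * c.io_accesses

# Hypothetical component with 3 inputs, 2 outputs, 1 caller, and 4 callees.
counts = ComponentCounts(inflows=3, outflows=2, fan_in=1, fan_out=4,
                         invocations=5, io_accesses=2, complex_data_uses=1)
print(zage_external(counts), zage_internal(counts))
```

Because De uses only interface-level counts, it can be computed for a component treated as a black box, without inspecting its implementation.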

We developed a new plugin for the SCADE modeling environment that computes these metrics directly within the environment. The plugin is simple to install and helps designers evaluate how complex their models are. While there is no definitive threshold for these metrics in a modeling environment, having a means to measure and keep track of overall system complexity represents a major step forward and will help system designers track system complexity throughout the lifecycle.
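Tracking complexity over the lifecycle largely comes down to comparing measurements between model versions. The sketch below is hypothetical: it assumes metric snapshots stored as nested dictionaries of the form {component: {metric: value}}, which is not the plugin's actual output format, and reports every value that grew by more than a chosen relative tolerance.

```python
def complexity_regressions(previous, current, tolerance=0.0):
    """Compare two snapshots of the form {component: {metric: value}}.

    Returns (component, metric, old, new) for every metric present in both
    snapshots whose value grew by more than `tolerance` (relative, so 0.10
    means more than 10 percent). Components or metrics missing from either
    snapshot are skipped.
    """
    regressions = []
    for component, new_metrics in current.items():
        old_metrics = previous.get(component, {})
        for metric, new in new_metrics.items():
            if metric in old_metrics and new > old_metrics[metric] * (1 + tolerance):
                regressions.append((component, metric, old_metrics[metric], new))
    return regressions

# Hypothetical snapshots of two model versions.
v1 = {"Controller": {"mccabe": 4, "zage_external": 10}, "Filter": {"mccabe": 2}}
v2 = {"Controller": {"mccabe": 6, "zage_external": 10}, "Filter": {"mccabe": 2}}
print(complexity_regressions(v1, v2, tolerance=0.10))
```

A relative tolerance avoids flagging small fluctuations while still surfacing steady growth, which suits metrics that have no absolute threshold.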

Our plugin is available under a BSD license, its source code is available in the SEI GitHub space, and we have also documented the installation procedure and usage.

Avoiding Complexity in Modeling Tools

Some development environments and tools, including Eclipse, Visual Studio, and Understand, provide functionality to detect and avoid software complexity. For example, when a function contains an unused variable, some editors suggest removing it. Likewise, when a switch/case statement does not handle every possible value, the editor can automatically generate the missing cases. These suggestions help developers avoid coding errors and reduce complexity. As system development shifts from traditional languages to modeling tools, we need to understand how modeling environments can be improved to detect potential modeling errors, suggest design patterns to fix them, and ultimately reduce model complexity.
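A modeling environment could offer analogous checks. As a hypothetical example (not a feature of SCADE or of the SEI plugin), the sketch below flags input ports that nothing drives and output ports that nothing consumes, the model counterparts of uninitialized and unused variables. The dictionary-based model representation is invented for illustration.

```python
def unconnected_ports(blocks, connections):
    """Model analogue of 'unused variable' and 'uninitialized variable' warnings.

    blocks:      {block_name: (input_ports, output_ports)}
    connections: iterable of (src_block, src_port, dst_block, dst_port)
    Returns (undriven_inputs, unused_outputs) as sorted lists of
    (block, port) pairs.
    """
    connections = list(connections)
    driven = {(dst, dp) for _, _, dst, dp in connections}
    consumed = {(src, sp) for src, sp, _, _ in connections}
    undriven, unused = [], []
    for name, (inputs, outputs) in blocks.items():
        undriven += [(name, p) for p in inputs if (name, p) not in driven]
        unused += [(name, p) for p in outputs if (name, p) not in consumed]
    return sorted(undriven), sorted(unused)

# Hypothetical model: Controller.mode is never driven, Sensor.temp never used.
blocks = {
    "Sensor": ([], ["speed", "temp"]),
    "Controller": (["speed", "mode"], ["torque"]),
    "Actuator": (["torque"], []),
}
connections = [
    ("Sensor", "speed", "Controller", "speed"),
    ("Controller", "torque", "Actuator", "torque"),
]
undriven, unused = unconnected_ports(blocks, connections)
print("undriven inputs:", undriven)
print("unused outputs: ", unused)
```

In practice, a check like this would need to exempt the model's top-level inputs and outputs, which legitimately connect to the environment rather than to other blocks.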

In that context, the SEI performed an experiment to understand how modeling tools are used. The study involved four groups defined by two criteria: students or professionals, each with or without experience in modeling tools and languages. The experiment was executed in three steps. First, ANSYS, the SCADE tool vendor, delivered training on the SCADE modeling tool to ensure that all participants had the knowledge needed to complete the rest of the study. Then, participants reviewed a model and answered several questions that gauged their understanding of the model and how comfortable they were with the tool. In the last part of the study, we provided a textual description of a system and asked participants to implement it as a model. We are currently analyzing the results, and our findings will help us understand not only how users work with modeling technologies but also how we can improve the tools to detect and automatically avoid complexity in software models.

Conclusion

As systems become more software reliant, managing software complexity is of paramount importance and a potential source of savings. Software complexity affects not only development but also maintenance, and research has shown that keeping complexity under control can reduce costs: project managers could reasonably expect savings of about 17 percent over a system's lifecycle. Because the development of safety-critical systems is moving toward model-based tools, we need to design metrics and tools and adapt development environments to detect and avoid complexity as much as possible.

To address these challenges, we adapted existing complexity metrics to a model-based environment. While we collaborated with ANSYS and implemented our metrics in SCADE, the adapted measures can be implemented in other model-based environments. Because language semantics differ among modeling tools, users can calibrate the metrics and establish thresholds to determine which values indicate a design issue for a system under development.
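Calibration could start from measurements of models a team already considers acceptable. The sketch below is one hypothetical approach, not a method from the study: it sets a threshold at a chosen percentile (90th by default) of reference values using Python's statistics.quantiles and then flags components that exceed it. It needs at least two reference values.

```python
import statistics

def calibrate_threshold(reference_values, percentile=90):
    """Threshold = the given percentile of metric values measured on
    reference models the team considers acceptable."""
    if not 1 <= percentile <= 99:
        raise ValueError("percentile must be between 1 and 99")
    # With n=100, quantiles() returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(reference_values, n=100, method="inclusive")
    return cuts[percentile - 1]

def flag(values, threshold):
    """Names of components whose metric value exceeds the threshold."""
    return sorted(name for name, v in values.items() if v > threshold)

# Hypothetical McCabe values from ten reference components.
reference = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
threshold = calibrate_threshold(reference)
flagged = flag({"A": 5, "B": 9.5, "C": 12}, threshold)
print(threshold, flagged)
```

A percentile-based threshold adapts to each modeling language's semantics, at the cost of assuming the reference models are themselves acceptable.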

We also performed an experiment to understand how users perceive software complexity in models, how they use a modeling environment, and how they introduce complexity when designing software models. We expect the results will show us how to improve modeling tools and languages to automatically detect complexity in models. We will present our findings at the SCADE User Group Conference in Paris, October 15-16, and later in an SEI technical report, which will be available online.

Additional Resources

SEI complexity tools are available in the SEI GitHub space: https://github.com/cmu-sei/eraces.
