Verifying Evolving Software

When we verify a software program, we increase our confidence in its trustworthiness. We can be confident that the program will behave as it should and meet the requirements it was designed to fulfill. Verification is an ongoing process because software continuously undergoes change. While software is being created, developers upgrade and patch it, add new features, and fix known bugs. When software is being compiled, it evolves from program language statements to executable code. Even during runtime, software is transformed by just-in-time compilation. Following every such transformation, we need assurance that the change has not altered program behavior in some unintended way and that important correctness and security properties are preserved. The need to re-verify a program after every change presents a major challenge to practitioners--one that is central to our research. This blog post describes solutions that we are exploring to address that challenge and to raise the level of trust that verification provides.

As we strive to ease the burden of effort surrounding verification for practitioners, we attempt to answer this question:

How can we ensure that the amount of verification work is proportional to the size of the change, as opposed to the size of the system?

It is possible for even a small change to a program to trigger a complete re-verification of it.

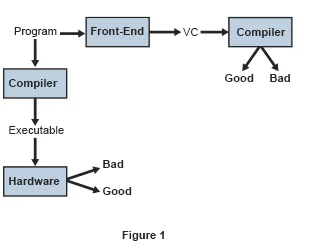

In our project, we study this problem of evolving verification in the context of a compiler. Two standard tools play a role in the process we describe here. The first is the compiler, which takes the source code of a program entered by the programmer and transforms it into an executable. The second is a verifier that takes a program and a property and determines whether the program satisfies the property. Figure 1 below shows a typical interaction of the compiler and the verifier. Both take the source code of a program in and manipulate it.

While to the user, the compiler is a black box that takes source code and produces binaries, internally it is structured as a series of transformations. Each transformation makes a small refinement to the program at each pass. This optimizes the program--that is, effects some improvement. For example, compilation optimizations might eliminate useless code or enable the program to use fewer resources. This iterative improvement is comparable to what happens to a program as it evolves over time. Thus, compilation provides a well-defined software evolution that we have chosen to study in our research.

One of the main hazards to verification results is that the compiler and verifier might use incompatible interpretations (semantics) of the source code. We refer to this hazard as the semantics gap. The semantics gap is as a major hazard to the validity of formal verification, and mitigating this hazard is one of our research objectives.

Towards our goals of overcoming the semantics gap, expanding the information provided to the practitioner, and automating the verification process, we are developing a certified compiler, which tightly integrates the compilation and verification tool chains. When verification is successful, this compiler provides a certificate--documenting a rigorous formal proof that shows why the software will behave as expected regarding a desired condition. The certificate provides an extra layer of trust to the user.

One major advantage of our approach is that it does not involve building the system from scratch. Instead, we have found a way to combine an existing compiler and verifier. We use an LLVM compiler, which is designed as a set of reusable libraries with well-defined interfaces and is written in C++. Our process also involves an intermediate representation in LLVM bitcode. It is this intermediate representation that allows the compiler and verifier to overcome their semantic differences and "talk to each other." Our verifier is UFO, which we have recently pioneered; it is a framework and tool for verifying (and finding bugs in) sequential C programs. UFO is a new brand of verifier and a major reason we are hopeful for success in our endeavor. As the winner in four categories of the 2013 Software Verification Competition, it currently represents the state-of-the-art in software verification.

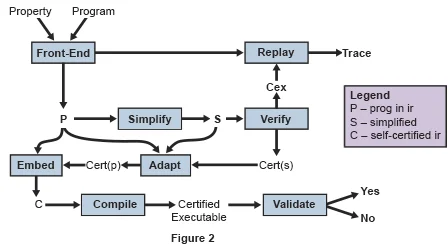

Figure 2 below represents the architecture of our proposed certified compiler. Key to the process is the common front end that translates the input program into a formally defined intermediate representation. As the figure shows, that representation is simplified for verification, then proceeds on to be verified. If an error is detected, it is communicated to the user through an error report. Otherwise, a certificate is generated, and the compilation tool-chain takes over.

The compiler first embeds the verification certificate into the program. When the program is compiled, the resulting executable is validated against this embedded certificate. If the validation passes, the certificate is removed, and the executable is returned to the user. The compiler and the verifier work on the same formal intermediate representation, so there is no semantic gap.

Impact

We anticipate that the certifier+compiler will provide significant benefit because it

- presents the user with more understandable verification messages

- allows for validation of correctness of compiler optimization

- allows for producing self-certifiable executable code

Our verifier+compiler architecture will simplify the verification effort considerably, as it automatically propagates verification results across the compilation tool chain. This propagation enables safe use of optimization in safety-critical systems and will have widespread impact. If successful, our toolset will enable many new applications of verification techniques in safety- and mission-critical environments such as defense and commercial aviation domains.

The verifier+compiler will also significantly speed system development and make it more efficient. Moreover, the optimized code better utilizes available resources and so will aid in fulfilling resource requirements. Development cost will decrease because automatic optimization is cheaper than the manual optimization that is currently performed. Verification costs will also decrease; for several reasons, code manually optimized for resource usage is much harder to verify than unoptimized code. For example, the (manual) optimization process often trims the code, which makes it less complete and harder to verify. In addition, many compilers have been designed to generate code that runs quickly, rather than code that can be verified quickly. Automated verification that occurs before automatic optimization resolves these issues.

Looking well into the future, we see possibilities for exciting applications of our research. If we were able to achieve optimal speed and efficiency for the verifier+compiler, we might apply it, for example, to an autonomous system. Such a system uses sensors to determine what is happening in the environment and changes its behavior accordingly. For example, it could rewrite its own program as it runs. With sufficient runtime performance, our verifier+compiler could verify the system as it runs and evolves.

Qualifying Tools

Other new applications of verification techniques in safety- and mission-critical setting could involve qualification of tools. The DoD and related government agencies require that safety-critical systems meet certain standards, for example, as described in DO-178 B, which is guidance used to determine whether the software will perform adequately in a safety-critical airborne environment. When the system satisfactorily meets these standards, the agency declares it certified (not to be confused with software certified by the verifier as described above). Government agency certification also requires that tools used to build safety-critical systems be qualified: tools used to develop or verify safety-critical software must not introduce errors or fail to detect errors, respectively. So, to build a certifiable avionics system, for example, you must use tools that you can demonstrate to be qualified. For system analysis tools, the new verifier+compiler architecture would make such qualification easier than it is currently. In particular, closing the semantics gap with the new architecture would have a significant impact both on how the development tools are qualified and how compilers are used in safety-critical domains.

Conclusion

If successful, we believe that our research will have a broad impact and numerous applications, including improving scalability of automated verification, simplifying software certification, and enabling novel architectures for adaptive and/or autonomous systems that are re-verified on-the-fly. We also expect that the prototype currently under development will be valuable towards demonstrating the technology to stakeholders and find new applications in safety-critical domain.

We welcome your feedback in our work in the comments section below.

Additional Resources

To read the paper, UFO: Verification with Interpolants and Abstract Interpretation (Competition Contribution) by Aws Albarghouthi, Arie Gurfinkel, Yi Li, Sagar Chaki, and Marsha, Chechik, please visit https://link.springer.com/chapter/10.1007%2F978-3-642-36742-7_52.

To read the paper, Synthesizing Safe Bit-Precise Invariants, by Arie Gurfinkel, Anton Belov, and João Marques-Silva, please visit

https://rd.springer.com/content/pdf/10.1007%2F978-3-642-54862-8_7.pdf.

To read the paper FrankenBit: Bit-Precise Verification with Many Bits - (Competition Contribution) by Arie Gurfinkel and Anton Belov, please visit

https://link.springer.com/content/pdf/10.1007%2F978-3-642-54862-8_32.pdf.

To read the paper, Incremental Verification of Compiler Optimizations, by Grigory Fedyukovich, Arie Gurfinkel, and Natasha Sharygina, please visit

http://verify.inf.usi.ch/sites/default/files/nfm2014.pdf.

To view the presentation, Trust in Formal Methods Toolchains, please visit

https://arieg.bitbucket.io/pdf/2013-07-14-VeriSure.pdf.

Get updates on our latest work.

Sign up to have the latest post sent to your inbox weekly.

Subscribe Get our RSS feedGet updates on our latest work.

Each week, our researchers write about the latest in software engineering, cybersecurity and artificial intelligence. Sign up to get the latest post sent to your inbox the day it's published.

Subscribe Get our RSS feed