SCALe: A Tool for Managing Output from Static Analysis Tools

PUBLISHED IN

Secure Development

Experience shows that most software contains code flaws that can lead to vulnerabilities. Static analysis tools used to identify potential vulnerabilities in source code produce a large number of alerts with high false-positive rates, and an engineer must painstakingly examine those alerts to find the legitimate flaws. In this blog post, I describe the SCALe (Source Code Analysis Laboratory) tool, which we in the SEI's CERT Division developed while researching and prototyping methods to help analysts audit static analysis alerts more efficiently and effectively. In August 2018, we released a version of SCALe to the public as open-source software on GitHub.

SCALe provides a graphical user interface (GUI) front end that auditors use to examine alerts from one or more static analysis tools along with the associated code, make determinations about each alert (e.g., mark it as true or false), and export the project audit information to a database or file. The publicly released version of SCALe can be used to audit software written in four languages (C, C++, Java, and Perl) against two code-flaw taxonomies [SEI CERT Coding Rules and MITRE's Common Weakness Enumeration (CWE)].

Development of the SCALe tool began in 2010 as a project in the SEI's Secure Coding Initiative, and its first release outside the SEI (SCALe version 1) was in 2015. Until this public release, the SEI had shared SCALe only with DoD organizations and with private companies that had service agreements with the SEI. SCALe has been used to analyze software for the DoD, energy delivery systems, medical devices, and more.

The first GitHub release was SCALe version 2.1.4.0. We have recently released SCALe version 3.0.0.0 to our research project collaborators. We will soon publish an SEI technical report describing the new features in SCALe 3.0.0.0, many of which are also in the GitHub version of SCALe. We are also producing a new series of video tutorials on SCALe, and we will update this post with links to these tutorials and the technical report as soon as they are available.

For the past three years, almost all development of the SCALe tool has been done as part of the alert classification and prioritization research projects that I have led, adding the features and functionality those projects needed. These research projects focus on (1) developing accurate automated classifiers for static analysis alerts, to reduce the manual effort required to examine alerts, and (2) developing (and automating) sophisticated alert-prioritization schemes intended to let organizations prioritize manual alert auditing according to the factors important to them.

My project teams added and modified features in SCALe so that project collaborators could audit their own codebases with it and produce audit archives (which they sent to us) containing the features needed by the classifier-development methods we were researching and comparing. Collaborators also tested new auditing methods incorporated into SCALe (e.g., the new determination types and alert fusion discussed below) and provided user feedback (plus audit data) to the research projects. This initial GitHub release is based on a version of my research project's code from around February 2018, plus some bug fixes and performance improvements made by the wider Secure Coding team to prepare the code for release.

Using output from multiple flaw-finding static code analysis tools usually identifies more code defects than using a single such tool. Using multiple tools, however, increases the human work required to handle the large volume of alert output. A human expert must examine each alert produced by the flaw-finding tools and determine whether it represents an actual code defect (true positive) or not (false positive). The SCALe system incorporates features designed to make this process easier, and my research projects are adding features designed to automate much of it.

SCALe uses filters to screen for alerts that can be mapped to CWE categories or CERT coding rules, thereby reducing the number of alerts an analyst might need to examine. SCALe also supports the auditor's process by providing an easy-to-use GUI for examining alerts, identifying true positives, and saving that information to a database. SCALe provides many scripts and other software to help run static analysis tools, create a code audit report, and manage information about the analysis (and about the source code being analyzed).

Static analysis tools analyze code without executing it. SCALe uses output from two kinds of static analysis tools: flaw-finding tools and code metrics tools. These tools can be open-source or proprietary. Each flaw-finding tool produces output (often copious) containing alerts, that is, potential problems in the source code identified by the tool. Some, but not all, of these alerts indicate actual code flaws (such as buffer overflows).

Flaw-finding tools may also report problems that relate to style (such as insufficient comments) or performance (such as calling a slow function when a faster one would suffice) rather than security. Only security-related alerts are mapped to CERT coding rules. Any alert that does not have at least one mapping to either a CWE or a CERT coding rule is filtered from the results.
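The filtering rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SCALe's implementation: the `Alert` fields, the tool and checker names, and the `CHECKER_MAP` table are hypothetical stand-ins for the per-tool checker-to-condition mappings that SCALe maintains. The CERT-rule and CWE names are real conditions used only as sample data.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    """One alert from a flaw-finding tool (hypothetical fields)."""
    tool: str
    checker: str
    filepath: str
    line: int
    message: str


# Hypothetical mapping from (tool, checker) to the CERT rules and CWEs it
# corresponds to. Style-only checkers map to nothing.
CHECKER_MAP: dict[tuple[str, str], list[str]] = {
    ("toolA", "int-overflow"): ["INT32-C", "CWE-190"],
    ("toolA", "null-deref"): ["EXP34-C", "CWE-476"],
    ("toolA", "missing-comment"): [],
}


def conditions_for(alert: Alert) -> list[str]:
    """Return the conditions this alert's checker maps to (empty if unmapped)."""
    return CHECKER_MAP.get((alert.tool, alert.checker), [])


def filter_mapped(alerts: list[Alert]) -> list[Alert]:
    """Keep only alerts with at least one CERT-rule or CWE mapping."""
    return [a for a in alerts if conditions_for(a)]
```

Checkers missing from the table are treated the same as style-only checkers, so alerts from unrecognized checkers are dropped rather than shown unmapped.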

The remaining alerts and the code metrics are then placed into a database, where one or more auditors examine the alerts. For each alert, an auditor must decide whether it is correct (a true violation of a secure coding rule), incorrect (a false positive), or whether a different verdict applies (from the set of primary and supplemental determinations described in our paper, e.g., the Dependent determination). Typically, alerts are too numerous for the auditors to examine all of them. SCALe addresses this issue in two ways:

- The first method prioritizes alerts for manual examination; auditors then spend their available time auditing the prioritized list in order.

- A second method partitions alerts into buckets; each bucket contains all the alerts associated with a single secure-coding rule.

For each bucket in the second method, the auditor analyzes alerts until a confirmed violation is found or the bucket is exhausted. If the auditor finds a confirmed violation, all remaining unconfirmed alerts in that bucket are marked as suspicious (probable violations), and the auditor moves on to the next bucket. The result of the second method is a classification of the security alerts into true violations, probable violations, and false positives.
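The bucket procedure can be written as a short loop. In this sketch, which is an illustration rather than SCALe code, alerts are `(alert_id, rule)` pairs, and the human auditor is replaced by a hypothetical callback `is_violation` that returns `True` when an alert is confirmed as a real violation. The labels `"true"`, `"suspicious"`, and `"false"` are also assumptions chosen for readability.

```python
from collections import defaultdict
from typing import Callable


def bucket_audit(
    alerts: list[tuple[str, str]],        # (alert_id, rule) pairs
    is_violation: Callable[[str], bool],  # stands in for the human auditor
) -> dict[str, str]:
    """Classify alerts as 'true', 'suspicious', or 'false' using per-rule buckets."""
    # Partition alerts into one bucket per secure-coding rule, preserving order.
    buckets: dict[str, list[str]] = defaultdict(list)
    for alert_id, rule in alerts:
        buckets[rule].append(alert_id)

    verdicts: dict[str, str] = {}
    for ids in buckets.values():
        for i, alert_id in enumerate(ids):
            if is_violation(alert_id):
                verdicts[alert_id] = "true"
                # A confirmed violation ends the bucket: every remaining,
                # unexamined alert in it is marked as a probable violation.
                for rest in ids[i + 1:]:
                    verdicts[rest] = "suspicious"
                break
            # Examined and not confirmed: a false positive.
            verdicts[alert_id] = "false"
    return verdicts
```

The payoff is in the `break`: once a rule is shown to be violated, the auditor stops spending time on that rule's bucket, which is why the method yields probable violations rather than a fully audited set.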

The SCALe application simplifies the process of auditing alerts. It takes as input the source code and the output from the various tools that can be run on the code. With this information, it provides a web-based interface to the alerts that lets auditors quickly view all information associated with an alert, including the source code it applies to. It makes auditor work more efficient by fusing alerts into a single meta-alert view, where alerts for the same filepath, line, and condition are shown together. It also enables auditors to check whether previous audit determinations or notes have been provided for the same checker, filepath, and line but different condition(s).
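Alert fusion amounts to grouping alerts by the 3-tuple (filepath, line, condition). Because a single alert can map to several conditions (for example, a CERT rule and a CWE), one alert can contribute to several meta-alerts. The sketch below assumes hypothetical `Alert` fields and a plain dictionary of determinations keyed by meta-alert; it does not reflect SCALe's database schema. The related-determination lookup interprets "same checker, filepath, and line but different condition" as the alert's other mapped conditions.

```python
from collections import defaultdict
from typing import NamedTuple


class Alert(NamedTuple):
    alert_id: int
    checker: str
    filepath: str
    line: int
    conditions: tuple[str, ...]  # every CERT rule and CWE this alert maps to


MetaKey = tuple[str, int, str]  # (filepath, line, condition)


def fuse(alerts: list[Alert]) -> dict[MetaKey, list[int]]:
    """Fuse alerts into meta-alerts: one entry per (filepath, line, condition)."""
    meta: dict[MetaKey, list[int]] = defaultdict(list)
    for alert in alerts:
        # An alert mapped to several conditions contributes to several meta-alerts.
        for condition in alert.conditions:
            meta[(alert.filepath, alert.line, condition)].append(alert.alert_id)
    return dict(meta)


def related_determinations(
    alert: Alert, condition: str, determinations: dict[MetaKey, str]
) -> dict[str, str]:
    """Determinations already recorded for this alert's checker, filepath, and
    line under a different condition (i.e., the alert's other conditions)."""
    return {
        other: determinations[(alert.filepath, alert.line, other)]
        for other in alert.conditions
        if other != condition and (alert.filepath, alert.line, other) in determinations
    }
```

For example, if two tools flag integer overflow on line 42 of `src/a.c` and both map to CWE-190, `fuse` places both alert IDs under the single key `("src/a.c", 42, "CWE-190")`, so the auditor makes one determination instead of two.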

SCALe allows auditors to mark a meta-alert's (single) verdict, mark any combination of supplemental verdicts, add notes, and add a flag. SCALe also lets auditors export the alerts (and meta-alerts, code metrics, and all other SCALe database data) for work outside SCALe, such as classifier development or building a spreadsheet of the alerts marked as true positives. Finally, for the last couple of years, SCALe code has included a script that creates a sanitized version of an exported SCALe database, anonymizing (and in some cases deleting) fields that may contain sensitive data.

Adding sanitization support to SCALe was necessary so that research project collaborators could share their audit data with us, allowing us to create classifiers and compare their accuracy. However, the sanitization functionality has not been fully updated for the sqlite3 database and .csv file exports produced by the GitHub version. So for now, GitHub SCALe users should not depend on the sanitizer to protect their sensitive data.
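When fully functional, the sanitizer either hashes each potentially sensitive field with a salted SHA-256 hash or removes the field completely. The sketch below shows that idea for one exported row using Python's standard `hashlib` and `secrets` modules. It is not the SCALe sanitizer and is no substitute for it: the field names in `HASHED_FIELDS` and `REMOVED_FIELDS` are hypothetical, and the choice of salt length and salt-then-value ordering are assumptions.

```python
import hashlib
import secrets

# Hypothetical field lists; a real sanitizer decides per database column.
HASHED_FIELDS = {"filepath", "message"}
REMOVED_FIELDS = {"notes"}


def salted_sha256(value: str, salt: bytes) -> str:
    """Hex-encoded SHA-256 digest of the salt followed by the UTF-8 value."""
    return hashlib.sha256(salt + value.encode("utf-8")).hexdigest()


def sanitize_row(row: dict[str, str], salt: bytes) -> dict[str, str]:
    """Return a copy of one exported row with sensitive fields hashed or dropped."""
    clean: dict[str, str] = {}
    for key, value in row.items():
        if key in REMOVED_FIELDS:
            continue  # removed completely
        clean[key] = salted_sha256(value, salt) if key in HASHED_FIELDS else value
    return clean


def new_salt() -> bytes:
    """Generate a random 16-byte salt; keep it secret and reuse it for one export."""
    return secrets.token_bytes(16)
```

Reusing one salt across an export keeps equal values hashing to equal digests, so rows that share a filepath can still be grouped after sanitization. The salt must stay private: values such as filepaths are low-entropy, so anyone who knows the salt could confirm guesses by hashing candidate paths.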

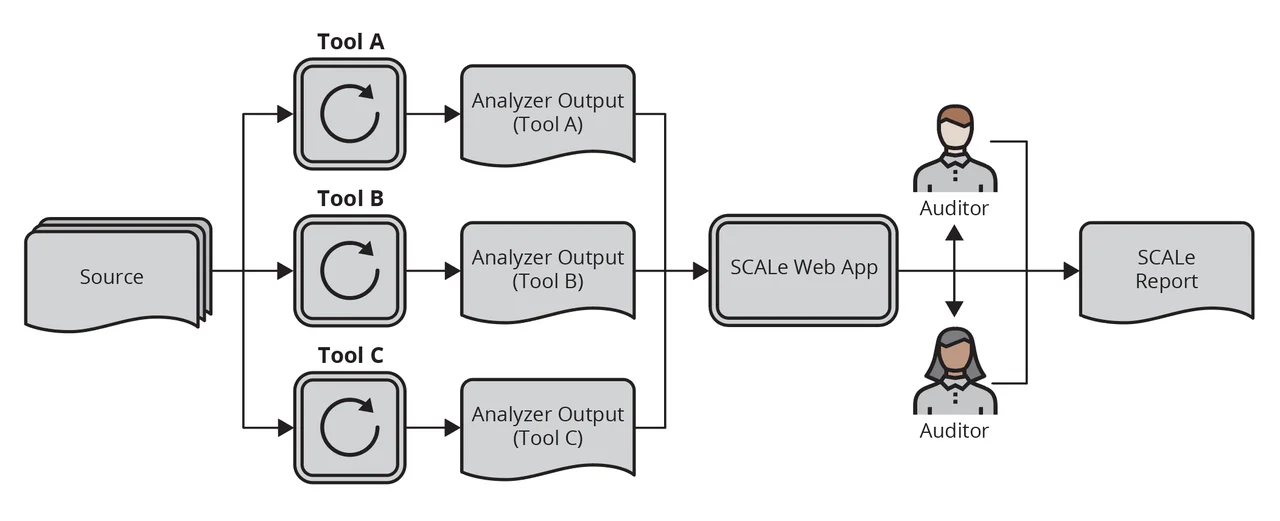

The diagram above gives a high-level overview of the SCALe audit process. A source codebase is analyzed with various static analysis tools, and the output from those tools is uploaded to the SCALe web app. Auditors use the web app to evaluate the alerts and make determinations (e.g., true or false). The results of the auditors' evaluations can be exported to a database or other file, which can then be compiled into a report. The SCALe web app recognizes output from many popular static analyzers.

More About the New Version of the SCALe Tool

New functionality in the GitHub release that my research projects added over the past three years includes the following:

- Different set of determination options, consisting of the primary and supplemental verdicts recommended by our paper Static Analysis Alert Audits: Lexicon & Rules, to support using the auditing rules and lexicon so different auditors will make consistent determinations.

- Ability to map alerts to multiple conditions that can be in one or more taxonomies. (A condition is a code flaw identified by a code-flaw taxonomy. In this version of SCALe, a single alert can be mapped to any number of CERT secure coding rules, and it may also be mapped to any number of CWEs.)

- Fusion of alerts into meta-alerts, making auditor work more efficient. Alerts are fused into a single meta-alert view, where alerts for the same [filepath, line, and condition] 3-tuple are shown together.

- Inclusion of a hyperlinked meta-alert ID; selecting it enables auditors to check if previous audit determinations or notes have been provided for the same checker, filepath, and line but different condition(s).

- Ability to upload code-metrics tool output [from tools such as Lizard and C Code Source Metrics (CCSM)] to a project archive.

- Ability to use output from more flaw-finding static analysis tools.

- Addition of Notes field (for auditors to add information about alerts, code, and/or conditions).

- Modified database structures for easy extensibility to more code-flaw taxonomies (such as CWE, CERT coding rules, and MISRA rules).

- Addition of CWE taxonomy to SCALe.

- New per-alert fields for CWE metrics (CWE likelihood) and condition name (e.g., "CWE-190").

- New filters that allow limiting alert views to CWEs or CERT rules.

- New filter that allows limiting alert views by filepath.

- Ability to add static analysis (flaw-finding and/or code metrics) tool output via the GUI after the project has been created.

- Ability to substitute new output from a tool for old output from a tool, via the GUI after the project has been created.

- Addition of per-alert fields to the GUI for classifier confidence and alert priority. These fields are placeholders in this first GitHub release; the latest version of SCALe uses them.

- Reconfigured database structures intended for incorporating confidence and prioritization. These structures are placeholders in this first GitHub release; the latest version of SCALe uses them.

- Database sanitizer script (partially functional; see above). When the sanitizer is fully functional, all potentially sensitive fields are either hashed (using a SHA-256 hash with a salt) or removed completely.

- More project data provided in exported versions of the project (database and .csv file versions).
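The new view filters (limiting alert views to CWEs or CERT rules, and by filepath) can be pictured as predicates over meta-alerts. In this sketch, inferring the taxonomy from the condition-name prefix (`"CWE-190"` versus a CERT name such as `"STR31-C"`) and matching filepaths by prefix are both assumptions made for illustration; SCALe's own filters are implemented in its web app and database, and the `MetaAlert` fields are hypothetical.

```python
from typing import NamedTuple, Optional


class MetaAlert(NamedTuple):
    filepath: str
    line: int
    condition: str  # condition name, e.g. "CWE-190" or "INT32-C"


def taxonomy_of(condition: str) -> str:
    """Infer the taxonomy from a condition name (assumed naming convention)."""
    return "CWE" if condition.startswith("CWE-") else "CERT"


def filter_view(
    meta_alerts: list[MetaAlert],
    taxonomy: Optional[str] = None,
    path_prefix: Optional[str] = None,
) -> list[MetaAlert]:
    """Return the meta-alerts that pass every filter that was supplied."""
    return [
        m for m in meta_alerts
        if (taxonomy is None or taxonomy_of(m.condition) == taxonomy)
        and (path_prefix is None or m.filepath.startswith(path_prefix))
    ]
```

Leaving a filter as `None` disables it, so filters compose: an auditor can ask for CERT-rule meta-alerts under one directory with a single call.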

We have included in this release an updated SCALe manual (an HTML-viewable document referred to in the README) that explains in great detail what functionality is provided and how to use it, plus instructions on how to install SCALe and information that can be used to extend the SCALe software (e.g., for integrating output from new static analysis tools).

Next Steps, and How You Can Help

We are continuing to develop SCALe and are close to releasing SCALe v3 as a replaceable component of a system that enables sophisticated alert classification and prioritization. This release will be limited at first, but we hope to eventually release that system and SCALe v3 to the general public.

We encourage users to share their SCALe extensions. Please submit pull requests via the GitHub repository for features you add that you think may be useful to the wider public.

For some users who have licenses to proprietary static analysis tools, the SEI will be able to provide third-party tool integration files; please contact us. We also welcome collaborations or testing using more recent (non-public) versions of SCALe.

Additional Resources

Read SEI press release, SEI CERT Division Releases Downloadable Source Code Analysis Tool.

Read the SEI blog post, Static Analysis Alert Test Suites as a Source of Training Data for Alert Classifiers.

Read the Software QUAlities and their Dependencies (SQUADE, ICSE 2018 workshop) paper Prioritizing Alerts from Multiple Static Analysis Tools, Using Classification Models.

Read the SEI blog post, Prioritizing Security Alerts: A DoD Case Study. (In addition to discussing other new SCALe features, it details how the audit archive sanitizer works.)

Read the SEI blog post, Prioritizing Alerts from Static Analysis to Find and Fix Code Flaws.

View the presentation, Challenges and Progress: Automating Static Analysis Alert Handling with Machine Learning.

View the presentation (PowerPoint), Hands-On Tutorial: Auditing Static Analysis Alerts Using a Lexicon and Rules.

Watch the video, SEI Cyber Minute: Code Flaw Alert Classification.

View the presentation, Rapid Expansion of Classification Models to Prioritize Static Analysis Alerts for C.

View the presentation, Prioritizing Alerts from Static Analysis with Classification Models.

Visit the SEI webpage focused on our research on automated classification and prioritization of static analysis alerts.

Read the SEI paper, Static Analysis Alert Audits: Lexicon & Rules, presented at the IEEE Cybersecurity Development Conference (IEEE SecDev), which took place in Boston, MA on November 3-4, 2016.

Read the SEI Technical Note, Improving the Automated Detection and Analysis of Secure Coding Violations.

Read the SEI Technical Note, Source Code Analysis Laboratory (SCALe).

Read the SEI Technical Report, Source Code Analysis Laboratory (SCALe) for Energy Delivery Systems.

Watch the SEI Webinar, Source Code Analysis Laboratory (SCALe).

Read the SEI Technical Note, Supporting the Use of CERT Secure Coding Standards in DoD Acquisitions.

Watch the SEI Webinar, Secure Coding - Avoiding Future Security Incidents.

More By The Author

Release of SCAIFE System Version 2.0.0 Provides Support for Continuous-Integration (CI) Systems

• By Lori Flynn
