Revealing True Emotions Through Micro-Expressions: A Machine Learning Approach

Micro-expressions (involuntary, fleeting facial movements that reveal true emotions) hold valuable information for scenarios ranging from security interviews and interrogations to media analysis. They occur on various regions of the face, last only a fraction of a second, and are universal across cultures. In contrast to macro-expressions like big smiles and frowns, micro-expressions are extremely subtle and nearly impossible to suppress or fake. Because micro-expressions can reveal emotions people may be trying to hide, recognizing them can aid DoD forensics and intelligence mission capabilities by providing clues to predict and intercept dangerous situations. This blog post, the latest in a series highlighting research in machine emotional intelligence from the SEI Emerging Technology Center, describes our work on developing a prototype software tool to recognize micro-expressions in near real time.

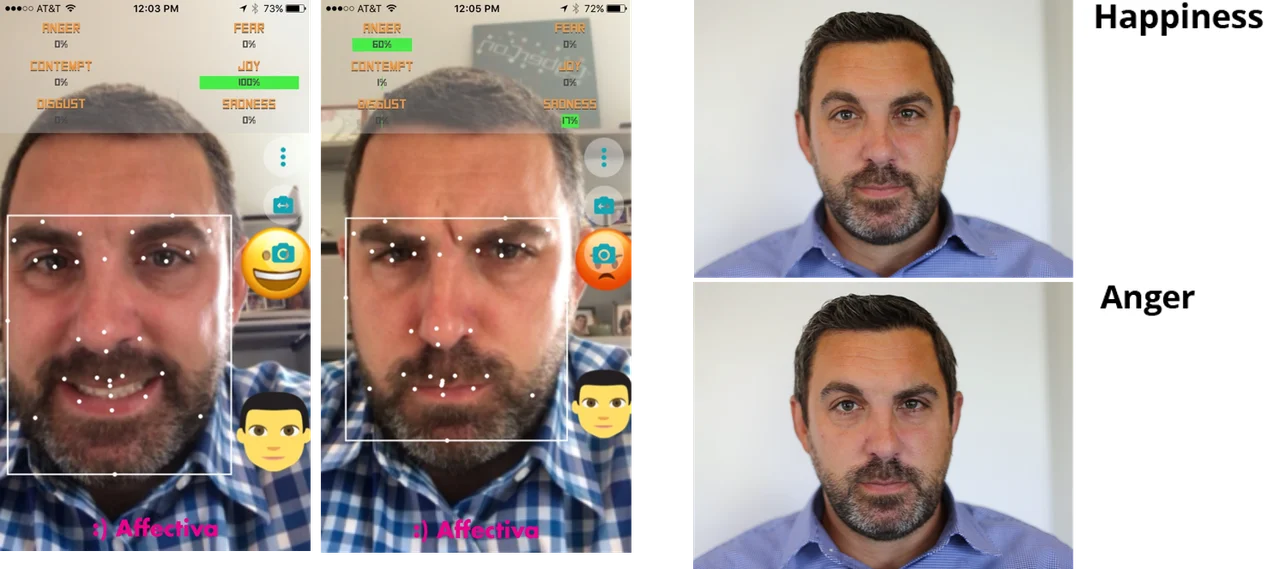

Macro-Expressions vs. Micro-Expressions: Tools like Affectiva detect macro-expressions (left). Micro-expressions (right) are subtle and difficult even for humans to recognize.

Recognizing Micro-Expressions: Foundations and Current State of the Art

Dr. Paul Ekman, a psychologist and pioneer in micro-expression recognition, linked micro-expressions with deception in the 1960s while working with clinical patients who tried to disguise strong negative feelings. Ekman set out to determine whether he could identify deception in depressed patients to prevent suicide. He found that some patients suffering from depression would lie in interviews and say they were improving. By replaying films of these interviews, he identified instances where micro-expressions revealed despair. That work led to the Facial Action Coding System, which formed the basis for training humans to detect micro-expressions. Human interviewers can learn to read faces, but only through specialized, continuous training: the skill degrades over time, so interviewers must be retrained periodically. Such training is costly and time-consuming.

The difficulty and promise of recognizing micro-expressions inspired us to investigate whether computers could reveal the emotions hidden in these subtle facial movements. In 2016, we began developing a prototype software tool to recognize micro-expressions. The project builds on our interest in machine emotional intelligence, the research area that focuses on teaching machines to detect, understand, and respond to human emotions. Machine emotional intelligence is key to improving how machines and humans work together (human-machine teaming), a priority for the Department of Defense (DoD).

Affective computing, the study of systems that can recognize, detect, and respond to human emotions, is a growing field. One recent report, which noted increased demand for facial feature extraction software, predicted that the global affective computing market would grow from $12.2 billion in 2016 to $53.98 billion by 2021.

Current software tools like Affectiva can successfully identify emotions based on macro-expressions like broad smiles, exaggerated frowns, and obviously narrowed eyes and pursed lips. However, these movements are easy to fake.

Current approaches for recognizing micro-expressions use hand-crafted features and treat each video frame as a stand-alone image. These approaches are brittle and slow, and their accuracy is limited.

Our Approach: Designing and Building a Micro-Expression Recognition System

In contrast to tools that use traditional hand-crafted features or techniques that search pre-defined areas of the face for facial action units, our approach uses machine-learned features that treat the whole face as a canvas. One challenge we faced for this project was finding a dataset with accurately labeled data to establish ground truth. Few existing databases capture subjects' suppressed reactions, and even fewer capture those reactions at a consistent quality (e.g., video resolution).

For this project, we selected the Chinese Academy of Sciences Micro-Expression (CASME) database of spontaneous micro-expressions, in which participants were shown a series of videos and asked to show no emotion. The database includes five emotion classes: happiness, surprise, disgust, repression, and other. Because clips in different expression categories vary in duration, we normalized the videos to a common length using time interpolation.
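The post does not say which interpolation scheme was used. As a minimal sketch, assuming plain linear interpolation in time and frames stored as flat lists of grayscale values (both assumptions), the hypothetical helper `normalize_length` below stretches or shrinks a clip to a fixed number of frames by blending the two nearest source frames for each output frame:

```python
from typing import List, Sequence

Frame = List[float]  # assumed representation: one flattened grayscale frame


def normalize_length(frames: Sequence[Frame], target_len: int) -> List[Frame]:
    """Hypothetical helper: resample a clip to exactly `target_len` frames.

    Uses linear interpolation in time. The first and last output frames are
    aligned with the first and last source frames, and each frame in between
    is a weighted blend of its two nearest source frames.
    """
    if not frames:
        raise ValueError("clip must contain at least one frame")
    if target_len < 1:
        raise ValueError("target_len must be positive")
    n = len(frames)
    out: List[Frame] = []
    for i in range(target_len):
        # Fractional position of output frame i on the source time axis.
        pos = i * (n - 1) / (target_len - 1) if target_len > 1 else 0.0
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        w = pos - lo  # weight given to the later source frame
        out.append([(1 - w) * a + w * b for a, b in zip(frames[lo], frames[hi])])
    return out
```

For example, `normalize_length([[0.0], [10.0], [20.0]], 5)` returns `[[0.0], [5.0], [10.0], [15.0], [20.0]]`. Aligning the endpoints keeps the onset and offset of the expression in place while the frames in between are resampled, so short and long clips end up with the same number of frames.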

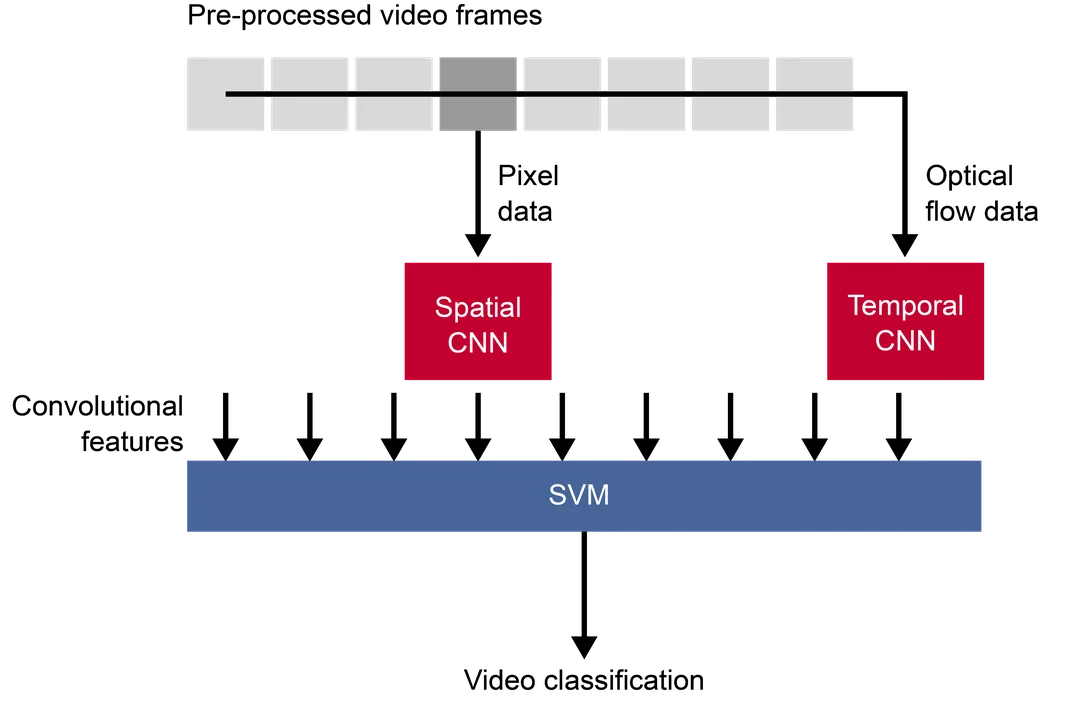

Our approach uses two convolutional neural networks (CNNs): a spatial CNN that has been pre-trained on faces from ImageNet, and a temporal CNN for analyzing changes over time. We feed pre-processed video frames into the CNNs, which use both pixel data and optical flow data to capture spatial and temporal information. The CNNs generate machine-learned features, and both streams of data are integrated into a single classifier that predicts the emotion associated with the micro-expression. Our approach is 67.7% accurate at recognizing micro-expressions, on par with the state of the art.
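The post does not detail how the two streams are merged. One common option is feature-level fusion: concatenate the feature vectors from both streams and feed the result into one classifier. The sketch below assumes that design, with a single linear layer and a softmax over the five CASME classes. The function names, feature vectors, and weights are hypothetical placeholders; in a real system the features would come from the trained CNNs and the weights from training.

```python
import math
from typing import List, Sequence, Tuple

# The five CASME classes named in the post.
EMOTIONS = ["happiness", "surprise", "disgust", "repression", "other"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(
    spatial_feat: Sequence[float],
    temporal_feat: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> Tuple[str, List[float]]:
    """Hypothetical feature-level fusion: concatenate both streams, then one linear softmax layer.

    `weights` holds one row per emotion class; each row must be as long as the
    concatenated feature vector.
    """
    fused = list(spatial_feat) + list(temporal_feat)
    if len(weights) != len(EMOTIONS) or len(bias) != len(EMOTIONS):
        raise ValueError("need one weight row and one bias per emotion class")
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, fused)) + b for row, b in zip(weights, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return EMOTIONS[best], probs


# Usage with placeholder values: a 2-D spatial feature and a 2-D temporal feature.
weights = [[0.0] * 4 for _ in EMOTIONS]
weights[1][3] = 2.0  # treat the last temporal feature as evidence for "surprise"
label, probs = fuse_and_classify([1.0, 0.0], [0.0, 1.0], weights, [0.0] * 5)
```

Here `label` is `"surprise"`, because only that class receives a nonzero score. Concatenating features lets the classifier learn interactions between appearance and motion; an alternative is late fusion, which averages the class probabilities produced separately by each stream.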

Mission-Practical Applications of Our Work

An overarching principle behind what we do is making our work mission-practical. We are actively working to improve our results and make them applicable in real-world settings, including polygraph testing, media analysis and exploitation, and detection of post-traumatic stress disorder.

To make our approach practical for defense missions and other real-world scenarios, we need to make improvements in the following areas:

- Handle long-running videos. The system must be able to process long-running videos and, eventually, real-time data.

- Combine detection with recognition. We aim for accuracy in both detecting micro-expressions (finding when one occurs) and recognizing them (classifying the emotion shown). Right now, our focus is on recognition. Detection is especially useful for long-running videos, in which the system must first locate the subtle changes that signal a micro-expression.

- Migrate to GPUs for faster performance. We can speed up our tool by taking advantage of graphics processing units (GPUs) and other high-performance computing platforms.

- Combine with other modalities for more accurate emotion recognition. By combining micro-expression recognition with analysis of other physiological signals (e.g., heart rate), we can gain a fuller picture of a subject's emotional state.
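To illustrate how detection differs from recognition in a long video, the sketch below flags short bursts of facial motion so that only those segments would need to be passed to a recognizer. It is a deliberately simple stand-in, not the method described in this post: it uses mean absolute frame differencing rather than learned features, and the function names, the threshold, and the 0.5-second upper bound on a micro-expression's duration are all assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Frame = Sequence[float]  # assumed representation: one flattened grayscale frame


def frame_differences(frames: Sequence[Frame]) -> List[float]:
    """Mean absolute pixel change between each pair of consecutive frames."""
    return [
        sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
        for prev, cur in zip(frames, frames[1:])
    ]


def spot_candidates(
    frames: Sequence[Frame],
    fps: float,
    threshold: float,
    max_duration_s: float = 0.5,  # assumed upper bound on micro-expression length
) -> List[Tuple[int, int]]:
    """Hypothetical spotter: return (first_frame, last_frame) pairs for short motion bursts.

    A burst is a run of consecutive frame differences above `threshold`.
    Runs lasting longer than `max_duration_s` are discarded as more likely to be
    macro-expressions or head movement than micro-expressions.
    """
    diffs = frame_differences(frames)
    max_steps = int(max_duration_s * fps)
    spans: List[Tuple[int, int]] = []
    start: Optional[int] = None
    # A -inf sentinel guarantees that a run reaching the end of the clip is closed.
    for i, d in enumerate(diffs + [float("-inf")]):
        if d > threshold and start is None:
            start = i
        elif d <= threshold and start is not None:
            # diffs[start:i] were above threshold, so frames start..i changed.
            if i - start <= max_steps:
                spans.append((start, i))
            start = None
    return spans
```

For a one-pixel clip `[[0.0], [0.0], [2.0], [4.0], [2.0], [0.0], [0.0], [0.0]]` at 10 frames per second with a threshold of 1.0, `spot_candidates` returns `[(1, 5)]`, the brief rise and fall in the middle of the clip. A steady change that lasts longer than the duration bound produces no candidates. The threshold would need tuning on labeled data.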

Looking Ahead: A DoD Mission of Human-Machine Teaming

Micro-expression recognition is part of a larger portfolio of SEI work in the field of machine emotional intelligence, a research area focused on using physiological characteristics to enable machines to better understand humans. Our previous work in this field focused on the development of a tool to extract heart rate from video. Future work includes exploring how computers can analyze other physiological information--from posture and gait to changes in the human voice--to provide a better understanding of human bodies and emotions.

Our work in machine emotional intelligence aligns with the DoD's focus on human-machine teaming and how we might make the most of human capabilities in cooperation with the computational power of machines. Advances in this field can revolutionize media and video analysis, interrogations, and security checkpoint encounters.

Additional Resources

Read our previous blog post, Real-Time Extraction of Biometric Data from Video.
