Information Technology Systems Modernization

PUBLISHED IN

Software Architecture

Legacy systems represent a massive operations and maintenance (O&M) expense. According to a recent study, 75 percent of North American and European enterprise information technology (IT) budgets are expended on ongoing O&M, leaving a mere 25 percent for new investments. Another study found nearly three quarters of the U.S. federal IT budget is spent supporting legacy systems. For decades, the Department of Defense (DoD) has been attempting to modernize about 2,200 business systems, which are supported by billions of dollars in annual expenditures that are intended to support business functions and operations.

Many of these legacy systems were built decades ago using technologies available at the time and have been operating successfully for many years. Unfortunately, these systems were built with components that are becoming obsolete and have accompanying high-licensing costs for commercial off-the-shelf (COTS) components, awkward user interfaces, and business processes that evolved based on expediency rather than optimality. In addition, new software engineers familiar with current technology are unfamiliar with the domain, and documentation is scarce and outdated. Other problematic factors include business rules that are embedded in code written in obsolete languages using obsolete data structures and the fact that the cadre of aging domain experts maintaining legacy systems are unfamiliar with newer technologies. This blog post provides a case study of a modernization effort conducted for a federal agency by SEI researchers on such a large-scale, legacy IT system.

The Horseshoe Model

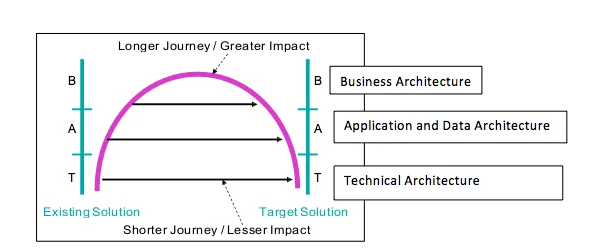

A general approach to modernization is based on the horseshoe model shown in the figure below.

The basic principle of the horseshoe model is that transformations are more costly at the higher levels. Merely changing technologies (e.g., moving from one commercial database management system [DBMS] to another) is done at the technical level, and there are many commercial tools to assist in this transformation. If there are significant changes to business processes, roles, and user interfaces, the modernization will take greater effort, as measured in terms of cost, performance, and risk.

Recently, a number of SEI engineers were tasked with planning a proposed modernization of a large-scale, legacy IT system (mainframe, hierarchical database, COBOL, job control language [JCL], green screens, spaghetti code) to a modern architectural framework (commodity hardware, Linux OS, Java, relational database, well-defined framework for distributed processing, four-layer applications). The modern architectural framework was defined by a technical reference architecture (TRA) and a common platform infrastructure (CPI) satisfying the TRA. The TRA included many constraints on the development, operation, and support for both the data structures and the applications. The CPI included the computing device, software language and associated tools, operating system, relational database and associated tools and services, web services, and other services. The CPI would also be used by other modernization projects within the federal agency.

We defined a plan with four phases but were involved directly with only the first two phases. The first phase consisted of only SEI engineers. An SEI engineer led the second phase, which included five customer representatives. The phases are described in detail below:

First Phase. The team analyzed 14 responses to a request for information (RFI) from commercial IT contractors on migrating the legacy software to the modern framework. The migration was to make as few changes as possible to get the system onto the new TRA/CPI: move it to the CPI in an infrastructure minimally satisfying the TRA; use the CPI, including a specific COTS relational database with associated tools; leave the COBOL and green screens in place; and make as few changes as possible to the spaghetti code, the legacy applications, and the architecture of the data structures. This approach was designated a "lift and shift" (L&S).

The RFI mentioned six issues to be addressed:

a. migration from hierarchical database to a relational database

b. migration of application code

c. integration and testing of the modernized system

d. maintenance of the application code

e. acquisition and contracting constraints

f. past performance

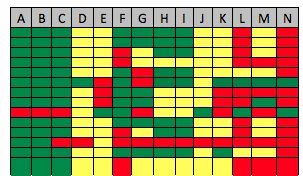

These responses form the top six rows in the table shown below.

The team also considered whether the proposals included sufficient technical detail to resolve these six issues and these considerations formed the next six rows of the table. We included two more issues that form the bottom two rows.

Each proposal also included an expected cost and schedule. The table below shows how the proposals (A through N) were judged to have addressed the issues.

The results for each response are shown in the adjacent table. The green color indicates that an issue was addressed in a satisfactory manner, yellow that it was addressed somewhat, and red that it was not addressed. The cost and schedule numbers were not included in the table, but the costs varied by a factor of two. The schedules were all about the same.

Proposers A, B, and C are almost completely "green," indicating that they both understood the issues and knew how to tackle them technically. Proposers F, G, H, and I clearly understood the issues but did not describe in sufficient detail the technology they would use to resolve them. Proposers L, M, and N more or less indicated "we are good at this sort of thing" but failed to address the issues and gave no technical detail. Proposers D, E, J, and K were rather vague on both the issues and the technology.

The analysis convinced the team that most responders considered a lift and shift to be feasible, so we proceeded on that basis.

The team recommended that an effort be started immediately to better understand the structure of the legacy software and data structures and how the different classes of users interacted with these structures. We called this process discovery and analysis (D&A), and it consisted of the following activities: review the documentation (it was sparse and dated); analyze the code and data structures for connectivity relationships using commercial tools, such as Lattix; determine how business processes and user roles accessed the code and data structures; and collect the real-time traffic patterns already being measured on the system. In addition, the programmers knew that there were existing issues with the system, such as dead code, dead tables, dead attributes, redundant copies of attributes, and cloned-and-owned procedures. These issues can also be identified with the same tools.
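The idea behind finding dead code and dead tables can be illustrated with a small reachability check. This is only a minimal sketch and not how Lattix or any other commercial tool works: it assumes the connectivity relationships have already been extracted into a simple "who references what" mapping, and every program and table name below is hypothetical.

```python
from typing import Dict, Set


def find_dead_elements(
    defined: Set[str],
    references: Dict[str, Set[str]],
    entry_points: Set[str],
) -> Set[str]:
    """Return defined elements that no entry point can reach.

    defined      -- every program, table, or attribute found in the inventory
    references   -- element -> elements it calls, reads, or writes
    entry_points -- elements known to be started by users or schedulers
    """
    reachable: Set[str] = set()
    stack = [element for element in entry_points if element in defined]
    while stack:
        node = stack.pop()
        if node in reachable:
            continue
        reachable.add(node)
        # Follow every outgoing reference that has not been visited yet.
        stack.extend(references.get(node, set()) - reachable)
    return defined - reachable


# Hypothetical inventory: two live programs, one retired report, three tables.
defined = {"PAYROLL", "BILLING", "OLDRPT", "EMP_TABLE", "INV_TABLE", "ARCHIVE_TABLE"}
references = {
    "PAYROLL": {"EMP_TABLE"},
    "BILLING": {"INV_TABLE", "EMP_TABLE"},
    "OLDRPT": {"ARCHIVE_TABLE"},
}
entry_points = {"PAYROLL", "BILLING"}

dead = find_dead_elements(defined, references, entry_points)
print(sorted(dead))
```

In this toy inventory the retired report and the table only it touches are flagged. On a real system the list of entry points would itself have to come from the D&A (job schedules, screen definitions, measured traffic), so candidates flagged this way would still need review by the domain experts.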

Analysis of the relationships between software elements (data files, programs) can then be used to form clusters of data files, programs, and users with minimal interactions. These clusters would then form the basis for a phased lift and shift effort.
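As a rough illustration of forming such clusters, the sketch below treats data files, programs, and user classes as nodes of a graph, ignores interactions below a chosen strength, and takes the connected components that remain. The interaction counts, the threshold, and all names are assumptions made for the example; the clustering method actually used on the project is not described here.

```python
from typing import Dict, List, Set, Tuple


def cluster_elements(
    interactions: Dict[Tuple[str, str], int],
    min_weight: int,
) -> List[Set[str]]:
    """Group elements into clusters joined by interactions >= min_weight."""
    adjacency: Dict[str, Set[str]] = {}
    for (a, b), weight in interactions.items():
        # Register both elements even if the edge is too weak to keep.
        adjacency.setdefault(a, set())
        adjacency.setdefault(b, set())
        if weight >= min_weight:
            adjacency[a].add(b)
            adjacency[b].add(a)

    clusters: List[Set[str]] = []
    seen: Set[str] = set()
    for start in sorted(adjacency):  # sorted for a repeatable result
        if start in seen:
            continue
        component: Set[str] = set()
        stack = [start]
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        seen |= component
        clusters.append(component)
    return clusters


# Hypothetical interaction counts (e.g., calls or accesses per day).
interactions = {
    ("PAYROLL", "EMP_FILE"): 120,
    ("HR_CLERK", "PAYROLL"): 40,
    ("BILLING", "INV_FILE"): 95,
    ("AR_CLERK", "BILLING"): 30,
    ("BILLING", "EMP_FILE"): 2,  # weak cross-cluster coupling
}

for cluster in cluster_elements(interactions, min_weight=10):
    print(sorted(cluster))
```

The weak BILLING to EMP_FILE interaction is what remains between the two clusters. Couplings like this are the ones a phased lift and shift has to bridge while one cluster is on the modern system and the other is still on the legacy system.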

Other important considerations that require management involvement included the following:

- Can the authoritative data be split between the legacy system and the modern system?

- Is there a need to fail back to the legacy system when a failure occurs in the modern system?

- How much of the transformation can be accomplished using tools, and how much manual intervention is required? It was recommended that a small task be initiated to transform one of the clusters.

In addition, some constraints must be placed on any solution, including

- ensuring that the most critical users are not inconvenienced by the requirement that they log in to and switch between multiple systems to accomplish their work

- avoiding transactions that will require atomicity across the legacy and modern systems

However, a D&A could not be initiated without performing a return on investment (ROI) analysis for the whole migration. This ROI analysis was done by a customer expert together with SEI input.

Second Phase. Based on the RFI responses (but not on D&A results, because the D&A had not yet been conducted), the team proposed six alternative approaches ranging from doing nothing (as a baseline) to completely re-engineering the system.

The team viewed the lift and shift as a transformation at the technical architectural level, a tool-based transformation of the applications and replacement of green screens as occurring at an applications architectural level, and a re-engineering effort as being at the business architecture level.

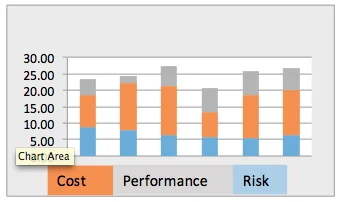

The team developed a set of 23 factors to compare between alternatives. These factors can be found in the Additional Resources section at the end of this blog. The factors were initially based on the team's architectural knowledge and experience and the knowledge of the software engineers on the team tasked with sustaining the legacy system. The factors were then reviewed against those from the OMB Exhibit 300, which the Office of Management and Budget (OMB) uses (along with other factors) to analyze proposed and ongoing programs. This comparison led to the introduction of a few more factors. The factors were grouped by cost, performance, and risk. We developed a simple set of measures (1, 3, 5 and 8), which were applied to each factor with 1 being worst and 8 being best. Because the factors were unevenly split among the groups, we also normalized the measures over the groups. The ranking of the alternatives is shown in the graphic below.

As the chart above indicates, higher numbers were the best options
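To make the scoring arithmetic concrete, here is a minimal sketch of one plausible reading of "normalized over the groups": average the measures within each group, then average the group results, so that a group with many factors does not outweigh a group with few. The exact normalization the team used is not spelled out in this post, and the measures below are invented for illustration rather than taken from the study.

```python
from statistics import mean
from typing import Dict, List

ALLOWED_MEASURES = {1, 3, 5, 8}  # 1 is worst, 8 is best


def normalized_score(measures_by_group: Dict[str, List[int]]) -> float:
    """Average within each group, then across groups, so groups weigh equally."""
    group_means = []
    for group, measures in measures_by_group.items():
        if not measures:
            raise ValueError(f"group {group!r} has no factors")
        for measure in measures:
            if measure not in ALLOWED_MEASURES:
                raise ValueError(f"measure {measure} not in {sorted(ALLOWED_MEASURES)}")
        group_means.append(mean(measures))
    return mean(group_means)


# Hypothetical measures for two alternatives; groups have unequal factor counts.
alternatives = {
    "lift and shift": {"cost": [8, 5], "performance": [3, 3, 5, 1], "risk": [5, 8, 5]},
    "re-engineer": {"cost": [1, 3], "performance": [8, 8, 5, 8], "risk": [1, 3, 3]},
}

ranking = sorted(alternatives, key=lambda name: normalized_score(alternatives[name]), reverse=True)
for name in ranking:
    print(f"{name}: {normalized_score(alternatives[name]):.2f}")
```

Without the per-group averaging, the four performance factors in this example would carry twice the weight of the two cost factors simply because there are more of them.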

The decision makers decided on a hybrid approach:

i. Conduct a D&A

ii. Lift and shift

iii. Transform

iv. Re-engineer

In addition, the team needed to meet with users to discover which business processes were cumbersome and time consuming and should be re-engineered.

Third Phase. Build an end-state architecture on top of the modern architectural framework, defining applications as services, defining data structures, and mapping them to the following:

- legacy applications and data files

- transformation method to be used

- legacy COTS tools and the CPI COTS tools (developmental, test, and operational)

- external interfaces

In addition, the end-state architecture must account for use case sequences and business case sequences.

The end-state architecture must be given a thorough review by stakeholders, and a registry of technical risks must be established based on the evaluation and subsequent upgrades and decisions.

Fourth Phase. Build a multi-phase architectural roadmap that allows deviations from the framework constraints at the intermediate phases. This roadmap should start with some pilot projects to better understand the difficulties in all approaches: lift and shift, transform, and re-engineer. The phasing should take into account the following:

- the progress in the development of the CPI and its impact on the migration

- choosing low-hanging fruit early

- organizational boundaries that will impact the development

- splitting of authoritative data

- process to be followed: move data first, move code first, or move a grouping of code and data together

- efficacy of tool support

Each phase of the target architecture must have views showing the following:

- which legacy elements are to be replaced and a mapping of where they are to be introduced in this phase

- what end-state elements are to be introduced

- cross coupling between systems

- additions and removals of interfaces between the legacy system and the PTA

- how each class of user will interact with the mixed system

As progress is made on a phase, especially the first or pilot phase, more and more information will become available to modify the roadmap, both within the phase currently being implemented and in the next phase. For example, if a new CPI capability is unstable and this affects the move of a particular application, then the move should be rescheduled until the CPI stabilizes.

Wrapping Up and Looking Ahead

A basis for modernizing the system was determined, and criteria were created for choosing between alternative approaches. A way of clustering the software elements was described, and the basis for a target architecture was defined. The factors to be used in a modernization effort will depend somewhat on

- the attributes of the legacy system

- the attributes of the TRA and CPI

- business drivers of the program office funding the migration.

The factors developed for this migration effort can serve as a basis for developing factors for another migration effort.

Building a roadmap, however, relies on choosing the order in which the clusters are moved and the milestones at which some class of users will be cut over from the legacy system to the modernized one. Building a roadmap is a complex optimization problem, in which many issues must be resolved and decisions made at different levels:

- For example, whether or not to have authoritative data split between the two systems is a high-level decision made at the program management level. If the split is allowed, then at specific milestones some users can start using the modern system, while others still use the legacy system, demonstrating progress against a schedule. If the split is not allowed, then progress can only be measured by progress against tests conducted at milestones.

- The efficacy of tool support can only be determined by using the tools to migrate a portion of a cluster and gaining tool familiarity; then writing a lessons-learned report to provide guidelines to later engineers on how best to use the tools.

- Ordering the migration of clusters is an optimization problem with a large search space. First, there is the problem of defining the utility function to minimize, expressing the problem mathematically, and defining the feasible solution space where all hard constraints are satisfied. An example of a hard constraint might be that critical users conducting critical capabilities must always use either the legacy system or the modernized system, but not both.
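The last point can be made concrete with a toy formulation. The sketch below is only an illustration under stated assumptions, not the formulation being studied at the SEI. The utility function to minimize is assumed to be the coupling between two clusters multiplied by the number of steps they spend on different systems. The hard constraint is simplified to requiring that clusters used by the same critical user class be adjacent in the order, so that those users can be cut over at one milestone. All cluster names and weights are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Optional, Sequence, Set, Tuple


def straddle_cost(order: Sequence[str], coupling: Dict[Tuple[str, str], int]) -> int:
    """Utility to minimize: coupling weight times steps spent on different systems."""
    position = {cluster: index for index, cluster in enumerate(order)}
    return sum(weight * abs(position[a] - position[b]) for (a, b), weight in coupling.items())


def is_feasible(order: Sequence[str], critical_groups: List[Set[str]]) -> bool:
    """Hard constraint: each critical group's clusters must be adjacent in the order."""
    position = {cluster: index for index, cluster in enumerate(order)}
    for group in critical_groups:
        slots = sorted(position[cluster] for cluster in group)
        if slots[-1] - slots[0] != len(slots) - 1:
            return False
    return True


def best_order(
    clusters: Sequence[str],
    coupling: Dict[Tuple[str, str], int],
    critical_groups: List[Set[str]],
) -> Tuple[Optional[Tuple[str, ...]], Optional[int]]:
    """Exhaustively search all orders; only practical for a handful of clusters."""
    best: Optional[Tuple[str, ...]] = None
    best_cost: Optional[int] = None
    for order in permutations(clusters):
        if not is_feasible(order, critical_groups):
            continue
        cost = straddle_cost(order, coupling)
        if best_cost is None or cost < best_cost:
            best, best_cost = order, cost
    return best, best_cost


# Hypothetical clusters, couplings, and one critical user class spanning two clusters.
clusters = ["payroll", "billing", "reporting", "archive"]
coupling = {("payroll", "billing"): 5, ("billing", "reporting"): 3, ("payroll", "archive"): 1}
critical_groups = [{"payroll", "reporting"}]

order, cost = best_order(clusters, coupling, critical_groups)
print(order, cost)
```

The exhaustive search grows factorially with the number of clusters, which is one way to see why the search space is described as large. A realistic roadmap would need a heuristic or a solver, and a richer utility function than this one.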

Some of these issues are being addressed in a new research effort underway at the SEI.

We welcome your feedback on this research. Please leave comments below.

Additional Resources

See the presentation Architectural Insights into Planning a Legacy Systems Migration, which I co-presented with Michael Gagliardi and Philip Bianco at the 2014 TSP Symposium.
