How to Use Static Analysis to Enforce SEI CERT Coding Standards for IoT Applications

PUBLISHED IN

Secure Development

The Jeep hack, methods to hack ATMs, and even the hack of a casino's fish tank provide stark evidence of the risks associated with the Internet of Things (IoT). High-end automobiles today have more than 100 million lines of code. Connectivity between cars and the outside world, for example through infotainment systems and the Global Positioning System (GPS), exposes a number of interfaces that attackers can use to communicate with an automobile in unintended and potentially dangerous ways. In Part 1 of this two-part blog post on the use of SEI CERT Coding Standards to improve the security of the IoT, I wrote about how these standards work and how they can reduce risk in Internet-connected systems. In this post, I describe how developers of large-scale systems, such as IoT systems in automobiles, can use static analysis to enforce these coding standards.

Static-analysis tools examine software without executing it, whereas dynamic-analysis tools run the program. Static-analysis tools often inspect the source-code files of the application, and the result of this examination is a collection of alerts. At a minimum, an alert contains a source-code location (a file path and line number, for example) and a textual description of the problem. Many static-analysis tools provide additional contextual information, such as example execution paths and variable values that may trigger the undesired behavior identified by the alert. For code flaws that span multiple lines, some static-analysis tools provide both a starting line and an ending line.
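The following minimal C sketch makes the shape of an alert concrete. It holds the fields described above: a file path, a starting line, an ending line for multi-line flaws, and a textual description. The `struct alert` type and the `format_alert` function are hypothetical illustrations. Every tool defines its own output format, and many add rule identifiers, execution paths, and variable values.

```c
#include <stdio.h>

/* Hypothetical alert record; real tools each define their own format. */
struct alert {
    const char *file;     /* source-code file path */
    int start_line;       /* first line of the flagged code */
    int end_line;         /* last line; equal to start_line for one-line flaws */
    const char *message;  /* textual description of the alert */
};

/*
 * Writes the alert as "file:line: message", or "file:start-end: message"
 * for multi-line flaws. Returns snprintf's result: the length of the
 * full text, which may exceed size if the buffer was too small.
 */
int format_alert(const struct alert *a, char *buf, size_t size) {
    if (a->end_line > a->start_line) {
        return snprintf(buf, size, "%s:%d-%d: %s",
                        a->file, a->start_line, a->end_line, a->message);
    }
    return snprintf(buf, size, "%s:%d: %s", a->file, a->start_line, a->message);
}
```

For example, an alert spanning lines 10 through 14 of a hypothetical `can_bus.c` with the message "resource leak on error path" would be rendered as `can_bus.c:10-14: resource leak on error path`.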

Securing code without static or dynamic analysis requires peer review to identify deviations from coding standards. In peer review, one or more people whose experience is comparable to that of the code's authors evaluate the code manually. For an automobile or other large-scale IoT system, which may have a hundred million lines of code, relying on peer review alone is not feasible. Beyond the sheer impossibility of manually examining that much code, peer review is prone to human error and to inconsistencies between reviewers. Peer review also makes it hard to find repeated instances of the same error.

Static analysis, on the other hand, is enforceable. Tools apply the same checks for vulnerabilities unambiguously and repeatably across the entire code base, at a much lower cost in human effort. Static analysis can therefore serve as an enforcement tool for a coding standard, identifying violations in a practical and consistent way.
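The following C sketch illustrates the kind of violation a checker enforces. It shows a pattern that tools commonly flag under SEI CERT rule STR31-C ("Guarantee that storage for strings has sufficient space for character data and the null terminator"), together with one compliant alternative. The function name `copy_device_id` is hypothetical. Rejecting oversized input is only one of several acceptable remediations.

```c
#include <stddef.h>
#include <string.h>

/*
 * Noncompliant pattern that a checker for STR31-C would typically flag:
 *
 *     char id[16];
 *     strcpy(id, input);  // overflows id if input has 16 or more characters
 *
 * Compliant sketch: verify that the string and its null terminator fit
 * before copying. Returns 0 on success, -1 if src does not fit in dst.
 */
int copy_device_id(char *dst, size_t dst_size, const char *src) {
    size_t len = strlen(src);
    if (dst_size == 0 || len >= dst_size) {
        return -1;  /* refuse rather than overflow the destination */
    }
    memcpy(dst, src, len + 1);  /* copies the terminating '\0' as well */
    return 0;
}
```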

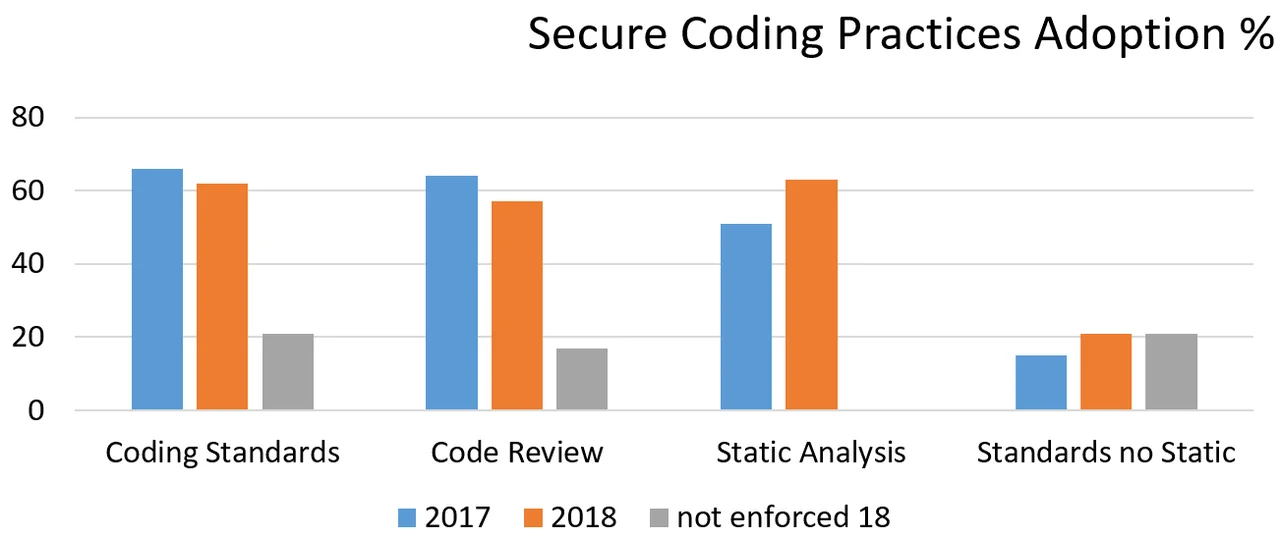

The disparity between the use of standards, such as the SEI CERT Coding rules, and the use of static analysis tools to identify violations of coding rules is illustrated by data from the Barr Group Embedded Security Safety Report from 2017 and 2018 (see Figure 1 below).

Figure 1: Barr Group Embedded Security Safety Report 2017 and 2018

The bars on the right show a gap between two groups: the developers who use coding standards, such as the SEI CERT standards, and the developers who run a static-analysis tool.

Static analysis is best approached not just as the application of a tool, but as a way of enforcing policies within an organization. This approach requires several decisions. The organization must decide which individuals or organizational functions are responsible for running static-analysis tools on code and which projects use them. It must also decide which rules form the basis for analysis, such as the SEI CERT rules, the Motor Industry Software Reliability Association (MISRA) guidelines, or a proprietary rule set.

The SEI CERT Coding Standards distinguish between rules and recommendations. This distinction allows organizations to decide, for example, to enforce rules strictly and recommendations more loosely, if there is a good reason to do so. The priorities and levels assigned to CERT rules also let organizations establish a range of policies based on the priority of the violations that analysis tools identify. Such policies are necessary because static-analysis tools can flag many thousands of issues when they are run on large-scale systems. An organization will therefore need to triage which issues to fix immediately and which can be fixed later, as time and money permit.
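The priority and level of a CERT rule come from its risk assessment. Severity, likelihood, and remediation cost are each scored from 1 to 3, and the priority is their product. Remediation cost is scored inversely: a low cost scores 3. The C sketch below encodes that arithmetic and the standard's level bands: L1 for priorities 12 to 27, L2 for 6 to 9, and L3 for 1 to 4. The function names are hypothetical. A real policy would normally take these values from the published rule pages rather than recompute them.

```c
/*
 * SEI CERT risk assessment: each rule is scored 1-3 on three factors.
 *   severity:         1 = low,      2 = medium,   3 = high
 *   likelihood:       1 = unlikely, 2 = probable, 3 = likely
 *   remediation cost: 1 = high,     2 = medium,   3 = low
 * The priority is the product of the three scores (1-27).
 */
int cert_priority(int severity, int likelihood, int remediation_cost) {
    return severity * likelihood * remediation_cost;
}

/*
 * Maps a priority to its level: 1 (L1, priorities 12-27),
 * 2 (L2, 6-9), or 3 (L3, 1-4). Returns 0 for out-of-range input.
 */
int cert_level(int priority) {
    if (priority < 1 || priority > 27) return 0;
    if (priority >= 12) return 1;
    if (priority >= 6) return 2;
    return 3;
}
```

Each factor is 1, 2, or 3, so only ten distinct priorities are possible: 1, 2, 3, 4, 6, 8, 9, 12, 18, and 27. This is why the level bands have gaps.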

Organizations will also need to establish policies for the use of static-analysis tools on legacy code. If time and resources allow, one policy is to clean up all legacy code. However, running 100 rules on a million lines of code that has been in operation for five years or more may be neither feasible nor wise. Another policy is to fix only code that has changed, or only code for which a bug has been reported in the field. Priority levels and other metrics, such as those in the SEI CERT Coding Standards, help in defining policies. For example, an organization can require that all errors flagged by tools as "major" be fixed. It can also require that errors violating a CERT rule at level L1 (the highest-priority level) be fixed immediately.
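The legacy-code policies described above can be written down as an explicit triage function, which makes them easy to review and apply consistently. This C sketch is hypothetical. The `triage_input` fields, the three outcomes, and the order of the checks are illustrative choices that combine the example policies from the text. They are not a prescribed CERT procedure.

```c
#include <stdbool.h>

/* Hypothetical triage outcomes for a single alert. */
enum triage { FIX_NOW, FIX_SCHEDULED, DEFER };

/* Hypothetical alert summary; the fields are illustrative, not a tool's format. */
struct triage_input {
    int cert_level;        /* 1, 2, or 3 (L1 is the highest priority) */
    bool tool_major;       /* the tool's own severity rating is "major" */
    bool in_changed_code;  /* the alert lies in code changed since a baseline */
    bool field_bug;        /* the code is tied to a bug reported in the field */
};

/*
 * One possible combination of the policies in the text:
 *  - L1 violations are fixed immediately;
 *  - "major" alerts must be fixed, but the fix can be scheduled;
 *  - other alerts in legacy code are fixed only where the code changed
 *    or a field bug was reported; everything else is deferred.
 */
enum triage triage_alert(const struct triage_input *a) {
    if (a->cert_level == 1) return FIX_NOW;
    if (a->tool_major) return FIX_SCHEDULED;
    if (a->in_changed_code || a->field_bug) return FIX_SCHEDULED;
    return DEFER;
}
```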

Employing static-analysis tools during development is a way of implementing a test-as-you-go strategy to reduce costs and improve software quality. Such a strategy is an example of the principle of secure-by-design: rather than building software and then trying to add security into it, software is designed to be secure from the outset. For example, if one of the functions of software is to collect data, a secure-by-design approach would require encrypting collected data by default, with decisions not to encrypt data allowed only by exception. Creating high-quality software and creating secure software are solutions to the same problem; it is easier and far less costly to protect software that is free of defects than it is to protect software that is riddled with vulnerabilities.
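The secure-by-design default from the data-collection example can be expressed in code in two steps. The safe option is the starting state, and turning it off requires an explicit, documented exception. The `collector_options` type and both functions below are hypothetical. The sketch models only the configuration decision, not the encryption itself.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical options for a data-collection component. */
struct collector_options {
    bool encrypt;                 /* encrypt collected data */
    const char *plaintext_reason; /* required justification when encrypt is false */
};

/* Secure by default: every caller starts from options with encryption on. */
struct collector_options collector_default_options(void) {
    struct collector_options opts = { true, NULL };
    return opts;
}

/*
 * Opting out is an explicit exception, accepted only with a non-empty,
 * documented reason. Returns true if encryption was disabled.
 */
bool collector_disable_encryption(struct collector_options *opts,
                                  const char *reason) {
    if (reason == NULL || reason[0] == '\0') {
        return false;  /* no justification: keep the secure default */
    }
    opts->encrypt = false;
    opts->plaintext_reason = reason;
    return true;
}
```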

Training is another key to successfully using static analysis in an organization. Effective training goes beyond simple instruction in the mechanics of using the chosen static-analysis tool. It should also include the basic principles of secure coding, as well as how to use and interpret the chosen coding standard based on the organizational policy for its use.

Developers need to understand when they are allowed to suppress rules and standards, what their documentation responsibilities are, and which violations they can safely ignore. Such training also helps ensure that developers who fix the most egregious problems identified by static analysis do not introduce new problems in the process.

How to Use Static-Analysis Tools Effectively

Some reluctance or resistance to the use of static-analysis tools comes from a belief that they are "noisy," that is, that they produce an abundance of what are commonly referred to as false positives. In many cases, tools do produce an unmanageable number of alerts. Often this is not because of a flaw in the tool but because of the way the tool is configured and used. In other cases, the rule that the tool is enforcing might generate alerts on code that is not really vulnerable, or the tool's implementation of the rule may be incorrect. Finally, developers may incorrectly dismiss an alert as a false positive because they do not understand the alert or the underlying secure-coding principles. A tool with many false positives invites developers to ignore more, or even all, of its alerts.

When using a static-analysis tool, it is important to understand whether the tool has been set to enforce the right rules and to flag vulnerabilities at the right levels of severity for the organization's purposes. For example, if an organization were using a tool for the first time, an effective strategy might be to use the SEI CERT C Coding Standard and to turn on only the checkers that enforce the rules and not the recommendations.
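In the SEI CERT C Coding Standard, rules and recommendations share one identifier format (for example, `STR31-C`). However, rules are numbered 30 to 99 and recommendations 00 to 29. The minimal C sketch below shows a filter that keeps only rule checkers. `is_cert_c_rule` is a hypothetical helper. A real deployment would make this choice through whatever configuration mechanism the chosen tool provides.

```c
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/*
 * CERT C identifiers look like "STR31-C": a three-letter category,
 * a two-digit number, and the "-C" language suffix. Rules are numbered
 * 30-99 and recommendations 00-29. Returns true only for well-formed
 * CERT C rule identifiers, so recommendation checkers stay disabled.
 */
bool is_cert_c_rule(const char *id) {
    if (strlen(id) != 7) return false;
    for (int i = 0; i < 3; i++) {
        if (!isupper((unsigned char)id[i])) return false;
    }
    if (!isdigit((unsigned char)id[3]) || !isdigit((unsigned char)id[4])) {
        return false;
    }
    if (strcmp(id + 5, "-C") != 0) return false;
    int number = (id[3] - '0') * 10 + (id[4] - '0');
    return number >= 30;
}
```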

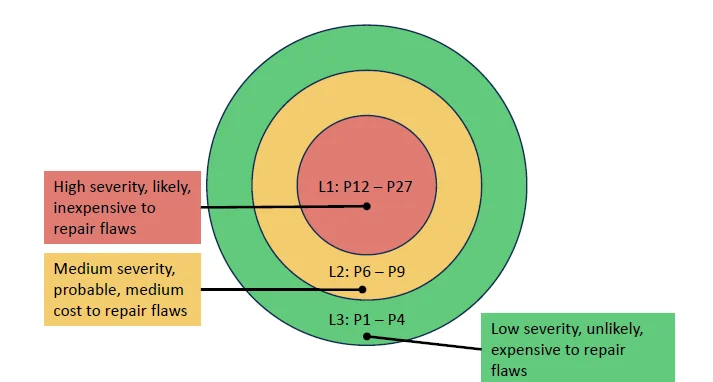

Figure 2: Priority Levels

Static analysis is an incremental process. One option is to confine the analysis to Level 1 (L1) violations and, once only a small number of L1 violations remain, to then include Level 2 (L2) violations. The solution to the noise or false-positive problem is often to adjust how the tool is used. Many so-called false positives can be attributed to improper configuration, inadequate training, or poor internal documentation.
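The incremental approach can be captured as a small rule for when to widen the scope of analysis. In this hypothetical C sketch, the team starts at level 1. It adds the next level once the number of open violations at the current scope is at or below a threshold the team chooses. The function name and the threshold parameter are assumptions for illustration.

```c
/*
 * Hypothetical scope-widening rule. Levels run from 1 (L1 only) to 3
 * (L1 through L3). The scope widens by one level once open violations
 * at the current scope fall to or below the team's chosen threshold.
 */
int next_analysis_level(int current_level, int open_violations, int threshold) {
    if (current_level < 3 && open_violations <= threshold) {
        return current_level + 1;  /* e.g., add L2 once L1 is nearly clean */
    }
    return current_level;  /* keep working at the current scope */
}
```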

I give a simple piece of advice to people who are just beginning to use static analysis: turn off rules that not everyone agrees with. Then run the tool and show the flagged violations to everybody on the team. If anyone argues that a result is not pertinent or useful, turn that rule off for now. This approach is not necessarily a good long-term strategy. In the beginning, however, it is important that the tool find violations that everyone on the team considers important. The team can then fix them immediately and appreciate the value that the tool adds.

The problem of false positives can be more psychological than technical. The important thing is to build comfort, belief, and trust in the tool within the development team and then, over time, increase the rigor of the tool with the goal of eventually producing error-free code. To put this another way, go into the shallow end of the pool and then work your way to the deep end. Don't search for issues that you don't plan on fixing immediately; doing so feeds the negative view about noise and false positives.

In matching tool usage to organizational needs, it is important to distinguish between a bad configuration, a lack of training, and a rule or implementation that is incorrect. When reviewing results produced by a tool, you might understand a result but conclude that the flagged issue is not important to you or your organization. That is a configuration decision, and the rule can be turned off or deprioritized. On the other hand, if you agree with a rule but believe that the tool has flagged a violation incorrectly, you will want to contact the tool vendor to get the tool fixed.

Next Steps: Ensuring the Safety of IoT Applications Through Compliance Auditing

As IoT appliances in the home become increasingly common, concerns about their safety are well founded, as I discussed in the first post in this series. I would like to enjoy the functional benefits of having IoT products in my home without exposing myself to an unacceptable level of risk.

In addition to supporting developers, the use of static analysis on IoT applications also supports auditors, whose job is to monitor the safety of these applications. Static-analysis tools enable auditors to select which rules to enforce and provide full traceability from each flagged issue to the fix that ensures compliance. An example policy might require that static analysis be used on an application. It might also require that all flagged issues with a priority of 12 or higher (that is, all L1 violations) be fixed.
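An auditor's policy like the one in this example reduces to a simple compliance check over the traced alerts. The C sketch below is hypothetical. The `audited_alert` record and the `count_blocking_alerts` function are illustrative names. A real audit would draw priorities and fix status from the tool's traceability records.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical record tying a flagged issue to its resolution. */
struct audited_alert {
    const char *rule_id;  /* CERT rule identifier, e.g., "STR31-C" */
    int priority;         /* CERT priority, 1-27 */
    bool fixed;           /* a fix has been recorded for this alert */
};

/*
 * Counts alerts at or above min_priority that have no recorded fix.
 * The application passes the audit when the result is 0.
 */
size_t count_blocking_alerts(const struct audited_alert *alerts, size_t n,
                             int min_priority) {
    size_t blocking = 0;
    for (size_t i = 0; i < n; i++) {
        if (alerts[i].priority >= min_priority && !alerts[i].fixed) {
            blocking++;
        }
    }
    return blocking;
}
```

Passing 12 as `min_priority` implements the example policy. Because 12 is the lowest L1 priority, this is equivalent to requiring that every L1 violation be fixed.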

Judicious use of static analysis by developers and auditors promises to increase consumer confidence in IoT applications and products. Security is achievable with a proactive approach that builds software to be secure at the outset (secure-by-design) rather than relying on adding security after the software is developed.

Additional Resources

Read the first post in this series, Using the SEI CERT Coding Standards to Improve Security of the Internet of Things.

Access current versions of SEI CERT Secure Coding Standards.

Watch the webinar How Can I Enforce the SEI CERT C Coding Standard Using Static Analysis by David Svoboda and Arthur Hicken.

Read other SEI blog posts related to secure coding.

Read SEI blog posts related to the Secure Coding Analysis Laboratory (SCALe) tool.
