How to Mitigate Insider Threats by Learning from Past Incidents

PUBLISHED IN

Insider Threat

A growing remote workforce and a wave of resignations in recent years have exacerbated risks to a company’s confidential information from insider threats. A recent report from Workforce Security Software provider DTEX Systems highlights the rise of insider threats as a result of the trend toward working from anywhere, catalyzed by the pandemic. According to recent data, 5 million Americans currently define themselves as digital nomads or gig workers, and surveys consistently find that employees are opting to keep their flexible working patterns after the pandemic.

These trends present cybersecurity challenges, particularly with respect to insider threat. “While most industries made the shift to remote work due to the pandemic, it created new attack surfaces for cybercriminals to take advantage of, such as home devices being used for business purposes,” Microsoft explained in their recent Digital Defense Report.

The SEI CERT Division defines insider threat as “the potential for an individual who has or had authorized access to an organization’s critical assets to use their access, either maliciously or unintentionally, to act in a way that could negatively affect the organization.” Although the methods of attack can vary, the primary types of incidents—theft of intellectual property (IP), sabotage, fraud, espionage, unintentional incidents, and misuse—continue to put organizations at risk. In our work with public and private industry, we continue to see that insider threats are influenced by a combination of technical, behavioral, and organizational issues.

In this blog post, I introduce our newly published 7th edition of the Common Sense Guide to Mitigating Insider Threats, and highlight and summarize a new best practice that we have added to this edition of the guide: Practice 22, Learn from Past Insider Threat Incidents. Gathering such data, analyzing it, and engaging with external information-sharing bodies can bolster an organization’s insider risk-mitigation program. This activity is essential to ensuring that analytics operate effectively and that risk determinations are being made using the best available data. It also forms the foundation for return-on-investment cases to be made for insider threat programs.

What's New in the Latest Guide

The Common Sense Guide consists largely of 22 best practices that organizations can use to manage insider risk. Each practice includes recommendations for quick wins and high-impact solutions, implementation guidance, and additional resources. The practices are also mapped to the CERT Resilience Management Model (CERT-RMM) and to security and privacy standards, including ISO/IEC 27002, the National Institute of Standards and Technology (NIST) Cybersecurity Framework, and, new to this edition, the NIST Privacy Framework. These mappings help identify the alignment between insider threat programs and broader cybersecurity, privacy, and risk-management frameworks, which is key to fostering enterprise-wide collaboration.

The Common Sense Guide springs from more than 20 years of insider threat research at the SEI, much of it underpinned by the CERT Division’s insider threat database, which is drawn from public records of more than 3,000 insider threat incidents. In 2005, the U.S. Secret Service sponsored the SEI’s first published study of the topic. Since then, CERT research has helped mature the organizational practices for mitigating insider threats and managing their risk. The guide has evolved with changes in the threat landscape, technological mitigations, and shifts in data-privacy policies. The 7th edition continues this evolution with new and updated practices, improved layout and imagery to enhance usability, and more refined terms. It has also added research from management science to its multidisciplinary approach.

Learning from Past Insider Threat Incidents

New to the 7th edition of the guide is Best Practice 22, Learn from Past Insider Threat Incidents. The practice offers guidance for developing a repository of insider trends within an organization and its sector. In the remainder of this post, I present excerpts and summaries from the full description of the practice in the guide.

Organizations that learn from the past are better prepared for the future. Understanding how previous incidents unfolded, whether in the organization or elsewhere, provides crucial insight into the efficacy of current insider risk-management practices; potential gaps in prevention, detection, and response controls; and areas of emphasis for insider threat awareness and training efforts.

Developing the capability to collect and analyze insider incident data is a key component of an effective insider risk management program (IRMP) and should inform its operations, including risk quantification, analysis, and incident response.

Designing an Insider Incident Repository

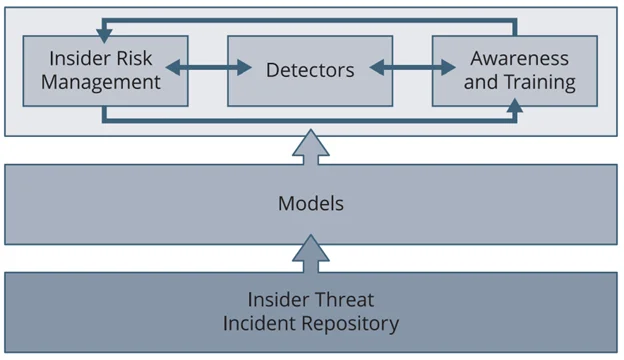

Figure 1 below shows how an insider threat incident repository provides a foundation for organizational preparedness.

Having information available about previous insider incidents enables the organization to derive insider threat models, make risk decisions based on historical information, and use examples of insider threat incidents for awareness campaigns and training. Those who are responsible for risk management must collect this information, and they typically search for examples only as the need arises. This reactive approach is time-consuming, however, and can result in duplicated effort every time previous incident data is needed. To lessen these issues, the organization should design its own insider threat incident repository.

Internal development of an insider threat incident repository helps inform IRMP operations and, in turn, improves operational resilience more broadly. For example, supply-chain security management processes can also be informed by previous incidents captured in an insider threat incident repository. Moreover, the repository can help limit reputation risk by supporting the faster detection of incidents. Aggregated data from an insider threat incident repository can highlight potential high-risk networks or environments where enhanced monitoring or specialized tools should be deployed, or where additional mitigations should be implemented.

Developing and maintaining an insider threat incident repository can be as simple or complex as required to meet the organization’s needs. In all cases, leveraging existing standards and practices to implement incident collection and information sharing makes the effort associated with those activities more manageable.

In its simplest form, an insider threat incident repository is a collection of information (e.g., files, media reports) that is organized in one place. For example, some organizations have a de facto repository of internal incidents in their case-management system. In a more complex example, the organization uses the formal knowledge-management roles and responsibilities of its workforce to collect and store information in a dedicated repository.

Regardless of its format, several knowledge-management activities are involved in developing and maintaining an insider threat incident repository:

- Define the purpose and use cases for the insider threat incident repository—The repository is a tool for meeting operational needs. Those needs should be documented so that the repository stewards can ensure that the repository is developed and maintained to meet those needs. Designers of the initial repository must consider both the insights needed from the data in the repository and use cases that show how users need to interact with the repository (e.g., analyze data directly on the repository platform, pull information into separate analysis tools).

- Build a container for an insider threat incident repository—The repository’s container can be a database, code repository, document repository, or incident tracker/management system. The container should have a documented structure that reflects the data needs for use cases. These use cases should be documented in a data code book that (1) describes the data so that users can understand what it tells them and (2) defines the data expectations for the repository. For example, if the repository is a database, then the code book should provide structural information about each field (e.g., datatype). If the repository contains only files, then the code book should define expectations for different file types. The organization should also establish expectations for the repository’s use and maintenance (e.g., access control, update schedules, data cleaning, and allowed and prohibited information such as whether or not personally identifiable information or disciplinary actions are permissible data points).

- Collect information—To fully support the IRMP, the information collected should come from both internal and external sources. Examples of external sources that are publicly available include court records, media reports, social media, online forums, and information-security bulletins. This information might include incident-specific information, or best practice or trend information that can be applied to updating repository management. For internal information, the organization should capture information from incident investigations and insights gathered from post-mortem evaluations of responses to incidents.

- Share incident data as appropriate—Since the purpose of the insider threat incident repository is to help the organization and its members make better insider risk decisions, it is important that the repository be used to derive insights, and that those insights are shared with the people who need them. Since information from the organization is seldom enough to understand the breadth of insider threats, it is important to also gather and share incident data with the broader counter-insider threat practitioner community. In addition to providing general insider risk insights, sharing this information can lead to the exchange of indicators of compromise, tools, tactics, or procedures, and even approaches for prevention, detection, mitigation, or response.
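The container and code book activities in the list above can be made concrete with a small example. What follows is a minimal sketch in Python, not a structure prescribed by the guide: the `IncidentRecord` fields, the 1-to-5 severity scale, the `CODE_BOOK` entries, and the `validate` and `undocumented_fields` helpers are all hypothetical names chosen for illustration. An organization would substitute the fields and rules that its own use cases require.

```python
from dataclasses import dataclass, fields
from datetime import date
from enum import Enum


class IncidentType(Enum):
    """Incident types named earlier in this post."""
    IP_THEFT = "theft of intellectual property"
    SABOTAGE = "sabotage"
    FRAUD = "fraud"
    ESPIONAGE = "espionage"
    UNINTENTIONAL = "unintentional incident"
    MISUSE = "misuse"


@dataclass(frozen=True)
class IncidentRecord:
    """One repository entry. All field names are hypothetical."""
    incident_id: str
    incident_type: IncidentType
    detected_on: date
    source: str    # assumed values: "internal" or "external"
    severity: int  # assumed scale: 1 (low) to 5 (high)
    summary: str


# Hypothetical code book: describes each field and states its data expectation.
CODE_BOOK = {
    "incident_id": "Unique identifier assigned by the repository steward (text).",
    "incident_type": "One of the IncidentType categories.",
    "detected_on": "Calendar date on which the incident was detected.",
    "source": "Where the record came from: 'internal' or 'external'.",
    "severity": "Integer from 1 (low) to 5 (high).",
    "summary": "Short narrative; assumed here to exclude personal identifiers.",
}

ALLOWED_SOURCES = {"internal", "external"}


def undocumented_fields():
    """Return record fields that the code book does not describe."""
    return [f.name for f in fields(IncidentRecord) if f.name not in CODE_BOOK]


def validate(record):
    """Return code book violations; an empty list means the record passes."""
    problems = []
    if record.source not in ALLOWED_SOURCES:
        problems.append(f"source must be one of {sorted(ALLOWED_SOURCES)}")
    if not 1 <= record.severity <= 5:
        problems.append("severity must be between 1 and 5")
    if not record.summary.strip():
        problems.append("summary must not be empty")
    return problems


# Made-up example record, for illustration only.
example = IncidentRecord(
    incident_id="INC-0001",
    incident_type=IncidentType.FRAUD,
    detected_on=date(2021, 3, 4),
    source="internal",
    severity=3,
    summary="Expense reports altered over several months.",
)
print(validate(example))
print(undocumented_fields())
```

Keeping the code book next to the record definition, and checking that every field is documented, is one way to keep the container's structure and its documentation from drifting apart as the repository evolves.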

Insight that benefits the organization can be derived from an insider threat incident repository in many ways. The most straightforward way is using incidents as case studies or examples for increasing workforce awareness of insider threat and training the workforce to recognize and respond to insider threat. Other ways include root-cause analysis, summary statistics, trend identification, and correlations. Each of these has its own use cases for the insights they provide.

Each organization should perform some foundational analyses of its repository data, especially the parts that are related to incidents inside the organization and within its information-sharing partnerships. Foundational practices for deriving insights from repository data can be qualitative or quantitative. An example of a qualitative foundational practice is creating incident-repository case studies for use in training and awareness activities. A quantitative example is providing trends on how the number and severity of insider incidents are changing over time, which can influence threat likelihood and impact calculations.
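The quantitative example above, tracking how the number and severity of incidents change over time, can be sketched in a few lines of Python. This is an illustrative sketch only: the `incidents` list is made-up sample data, the 1-to-5 severity scale is an assumption, and `yearly_trend` is a hypothetical helper rather than anything defined in the guide.

```python
from collections import defaultdict
from statistics import mean

# Made-up sample data: (year detected, severity on an assumed 1-5 scale).
incidents = [
    (2019, 2), (2019, 3),
    (2020, 3), (2020, 4), (2020, 2),
    (2021, 4), (2021, 5), (2021, 3), (2021, 4),
]


def yearly_trend(records):
    """Group incidents by year and return the count and mean severity per year."""
    by_year = defaultdict(list)
    for year, severity in records:
        by_year[year].append(severity)
    return {
        year: {"count": len(severities), "mean_severity": mean(severities)}
        for year, severities in sorted(by_year.items())
    }


trend = yearly_trend(incidents)
for year, stats in trend.items():
    print(year, stats["count"], stats["mean_severity"])
```

A summary like this is only a starting point. In practice the counts would be read alongside context such as workforce size or changes in detection capability before they feed into likelihood and impact estimates.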

Performing advanced analysis practices requires specialized knowledge or tools. These practices can enable automatic processing (e.g., ingestion) of incident data into the insider threat incident repository. They can also provide insights that are more difficult to derive, such as complex (or hidden) correlations between data points. For organizations using technical controls, such as user activity monitoring or user and entity behavioral analytics, advanced analyses should be used to refine and implement the threat models and risk-scoring algorithms the controls provide.

Many organizations that rely on out-of-the-box configurations of these controls quickly find that they must tailor them to their organization’s specific risk appetite, priorities, and cultural norms. An insider threat incident repository is a vital resource that an organization can use to ensure that the IRMP’s detective capability aligns (and continues to stay aligned) with the continuously changing threat landscape.

Preventing Insider Incidents

In the COVID era, with increasing numbers of employees working remotely, mitigating insider threat is more important than ever. The 22 recommendations in the Guide—including the one described in this post—are designed for decision makers and stakeholders to work together to effectively prevent, detect, and respond to insider threats. In a future post, I will map the Guide to the NIST Privacy Framework.

Additional Resources

Download the Common Sense Guide to Mitigating Insider Threats, Seventh Edition, from the SEI’s Digital Library.

Find out more about the SEI’s insider threat and insider risk research on our website.

Read other SEI Blog posts about insider threat.
