Evaluating Static Analysis Alerts with LLMs

PUBLISHED IN

Cybersecurity EngineeringFor safety-critical systems in areas such as defense and medical devices, software assurance is crucial. Analysts can use static analysis tools to evaluate source code without running it, allowing them to identify potential vulnerabilities. Despite their usefulness, the current generation of heuristic static analysis tools require significant manual effort and are prone to producing both false positives (spurious warnings) and false negatives (missed warnings). Recent research from the SEI estimates that these tools can identify up to one candidate error (“weakness”) every three lines of code, and engineers often choose to prioritize fixing the most common and severe errors.

However, less common errors can still lead to critical vulnerabilities. For example, a “flooding” attack on a network-based service can overwhelm a target with requests, causing the service to crash. However, neither of the related weaknesses (“improper resource shutdown or release” or “allocation of resources without limits or throttling”) is on the 2023 Top 25 Dangerous CWEs list, the Known Exploited Vulnerabilities (KEV) Top 10 list, or the Stubborn Top 25 CWE 2019-23 list.

In our research, large language models (LLMs) show promising initial results in adjudicating static analysis alerts and providing rationales for the adjudication, offering possibilities for better vulnerability detection. In this blog post, we discuss our initial experiments using GPT-4 to evaluate static analysis alerts. This post also explores the limitations of using LLMs in static analysis alert evaluation and opportunities for collaborating with us on future work.

What LLMs Offer

Recent research indicates that LLMs, such as GPT-4, may be a significant step forward in static analysis adjudication. In one recent study, researchers were able to use LLMs to identify more than 250 kinds of vulnerabilities and reduce those vulnerabilities by 90 percent. Unlike older machine learning (ML) techniques, newer models can produce detailed explanations for their output. Analysts can then verify the output and associated explanations to ensure accurate results. As we discuss below, GPT-4 has also often shown the ability to correct its own mistakes when prompted to check its work.

Notably, we have found that LLMs perform much better when given specific instructions, such as asking the model to resolve a particular issue on a line of code rather than prompting an LLM to find all errors in a codebase. Based on these findings, we have developed an approach for using LLMs to adjudicate static analysis alerts. The initial results show an improvement in productivity in handling the many alerts from existing static analysis tools, though there will continue to be false positives and false negatives.

Our Approach in Action

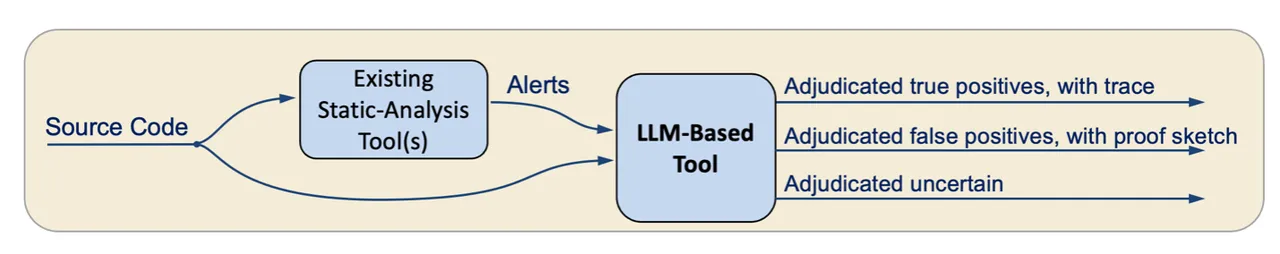

In our approach, illustrated in Figure 1, an LLM-based tool ingests source code and static analysis alerts. From there, for each alert, the tool creates a prompt that includes the alert from the static analyzer, the source code of the relevant function, and instructions on how to adjudicate the alert. The prompt is provided as input to the LLM, which then adjudicates the alert (as true positive, false positive, or uncertain) and provides its final answer in a JSON format.

To test this approach, we asked GPT-4 to adjudicate an alert for vulnerability CVE-2022-41674, an integer overflow that leads to a buffer overflow in Linux. Our script added comments to identify the code locations from the alert and gave GPT-4 the following prompt:

I want you to adjudicate whether a static-analysis alert is correct or a false alarm. The alert warns of a buffer overflow during memcpy on the line ending with "// ALERT-2" that happens if there is an integer overflow on the line ending with "// ALERT-1".

We then included the source code of the function we wanted GPT-4 to adjudicate. Finally, our prompt ended with the following text:

If you can determine whether the alert is correct or a false alarm, please indicate this determination and explain your reasoning, and at the end of your response, say either {"answer": "true positive"} or {"answer": "false positive"}. First identify whether integer overflow can happen. If it can't, then report the alert is false positive. If it can happen, then examine whether it can lead to a buffer overflow. Note: u8 here denotes an unsigned 8-bit integer type.

The full prompt and output are available online.

In response, GPT-4 identified where and how an integer overflow could occur:

For an integer overflow to occur, we need the sum of

mbssid[1]and2to exceed the bounds of theu8type (unsigned 8-bit integer).Given that

u8can represent values between 0 and 255, the maximum valuembssid[1]can have is 255. However, ifmbssid[1]was 255, adding 2 would indeed cause an overflow, resulting in a value of 1.Line:

cpy_len = mbssid[1] + 2; // ALERT-1This line can cause an integer overflow if

mbssid[1]is equal to 255.

GPT-4 then explained how an integer overflow can lead to a buffer overflow, concluding that the alert is a true positive:

If the integer overflow occurs,

cpy_lenwould be set to 1. The next line of interest is:memcpy(pos, mbssid + cpy_len, ((ie + ielen) - (mbssid + cpy_len))); // ALERT-2

The third argument of memcpy is the number of bytes to copy. If cpy_len is set to 1 due to an overflow, the value being copied from will start 1 byte after mbssid.

The amount being copied ((ie + ielen) - (mbssid + cpy_len)) would then be almost the entirety of the buffer, minus just a few bytes.

The destination buffer, pos, is a part of new_ie, which has been allocated with a size of new_ie_len.

The crucial question is: can (ie + ielen) - (mbssid + cpy_len) exceed new_ie_len?

However, due to previous operations, we've adjusted new_ie_len with statements like new_ie_len -= trans_ssid[1] and new_ie_len -= mbssid[1]. In the case where mbssid[1] is 255 (and overflows), this will reduce new_ie_len by 255.

The code is trying to ensure that new_ie_len is always correctly adjusted to represent the total expected size. But if mbssid[1] overflows, we're subtracting 255 from new_ie_len and then adding almost the entire length of the buffer back due to the overflow in cpy_len, potentially copying more data into new_ie than it can hold.

Finally, we prompted GPT-4 with a patched version of the code. The LLM declared that the vulnerability was no longer present and returned that the alert was a false positive.

LLM Limitations and Mitigations

Limited Context Window

A significant limitation for LLMs is the narrow context window. While LLMs can ingest a single function, they typically cannot ingest an entire codebase. To ensure the LLM has the necessary context, prompts need to summarize the most relevant parts of a codebase. Strategies for providing this context include using outputs from traditional static analysis and using the LLM itself to generate function summaries.

We have also tested additional strategies to mitigate the limited context window. In one test, we prompted GPT-4 to ask for required information, such as definitions of called functions. We then supplied the function’s definition, and the LLM properly adjudicated the alert as a false positive.

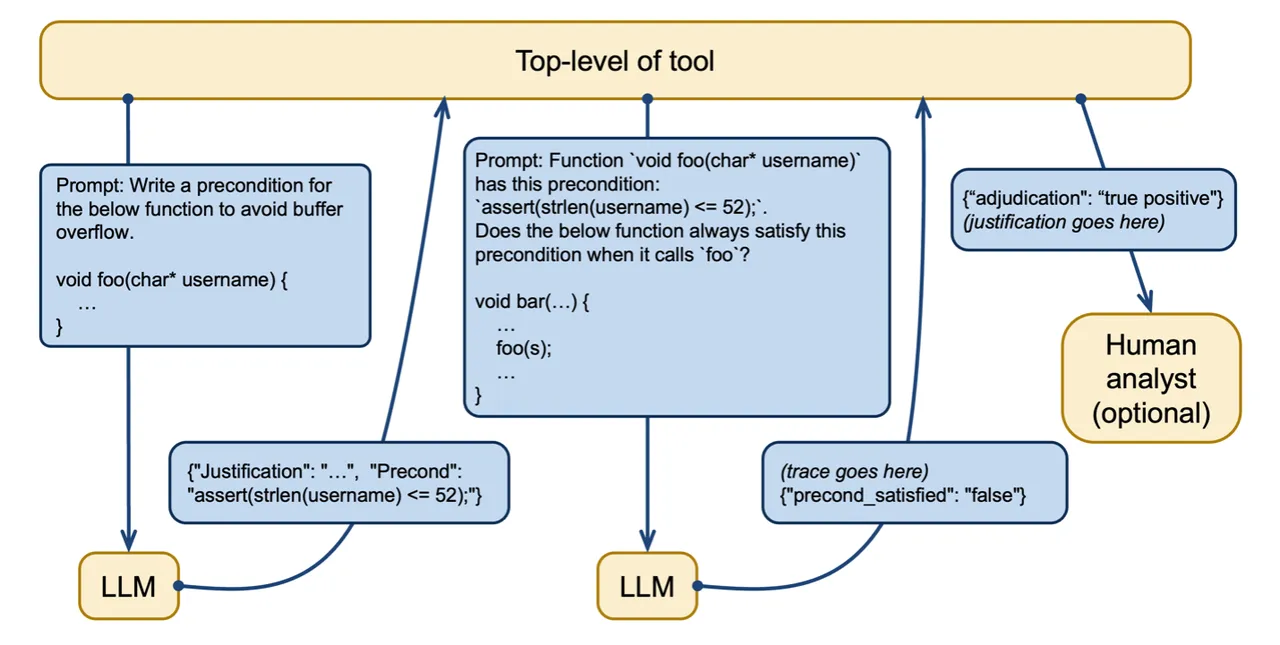

In another test, we used the LLM to generate a precondition necessary and sufficient for proving the absence of buffer overflow. We then asked the LLM to check if the precondition was fulfilled. In this example, we prompted GPT-4 with a function called greet_user that takes a string as an argument and asked it to adjudicate an alert about a buffer overflow. GPT-4 determined that a buffer overflow could occur if the input string was too long and returned a precondition for avoiding a buffer overflow. In this case, the string needed to be less than or equal to 52 characters in length.

GPT-4 was then prompted to analyze a function that calls the greet_user function to determine if it met the precondition. In this case, the LLM correctly determined that the function could violate the precondition. Figure 2 illustrates this process of creating and using preconditions in LLM prompts. As an extra check, a human analyst can evaluate the LLM’s output to assess accuracy.

Mitigating LLM Mistakes

Another barrier is the well-known tendency of LLMs to sometimes make mistakes. In our research, we found that one generally effective mitigation strategy is to ask the LLM to double-check its work. In one experiment, GPT-4 incorrectly identified a use-after-free vulnerability. We then asked GPT-4 to check its work with this prompt:

OK, now please double-check that the trace is correct, stepping thru the code in detail.

The LLM responded with a walkthrough of the code and then correctly concluded that there was no vulnerability present:

Upon a detailed step-by-step walkthrough, the trace provided previously appears to be incorrect. The use-after-free scenario doesn't occur because when an item from full_list is copied to sub_list using the 'C' command, a duplicate of the string is created with strdup(), ensuring that sub_list entries are independent of any reallocations or frees that might occur with full_list entries.

Therefore, there is no use-after-free vulnerability with the described trace in the provided code.

Deleting the strdup call in the above example introduces a real use-after-free bug. We removed this call to strdup and separately (i.e., in a new conversation) asked GPT-4 to analyze this version of the code. GPT-4 correctly identified the use-after-free bug and correctly reported that it is a real bug when asked to double-check the trace in detail.

Using an LLM to Write Proof Annotations

We also used a similar technique when asking the LLM to verify a proof. Some tools such as Frama-C can verify hand-written proofs of certain properties of programs. Since writing these proofs can be time-consuming and often requires specific expertise, we wanted to test the accuracy of GPT-4 performing this function and potentially speeding up the verification process.

We asked GPT-4 to write a precondition and verify that no buffer overflow is present in the following function when the precondition is satisfied:

Initially, the LLM produced an invalid precondition. However, when we prompted GPT-4 with the error message from Frama-C, we received a correct precondition along with a detailed explanation of the error. While GPT-4 doesn’t yet have the capabilities to write program annotations consistently, fine-tuning the LLM and providing it with error messages to help it to correct its work may improve performance in the future.

Work with Us

Over the next two years, we plan to build on these initial experiments through collaborator testing and feedback. We want to partner with organizations and test our models in their environments with input on which code weaknesses to prioritize. We are also interested in collaborating to improve the ability of LLMs to write and correct proofs, as well as enhancing LLM prompts. We can also help advise on the use of on-premise LLMs, which some organizations may require due to the sensitivity of their data.

Reach out to us to discuss potential collaboration opportunities. You can partner with the SEI to improve the security of your code and contribute to advancement of the field.

Additional Resources

Read the blog post Using ChatGPT to Analyze Your Code? Not So Fast.

Read the paper Using LLMs to Automate Static-Analysis Adjudication and Rationales.

View the presentation Static Analysis Classification and Automated Code Repair.

Read the paper Static Analysis Alert Audits: Lexicon & Rules.

More By The Authors

Release of SCAIFE System Version 2.0.0 Provides Support for Continuous-Integration (CI) Systems

• By Lori Flynn

A Technique for Decompiling Binary Code for Software Assurance and Localized Repair

• By William Klieber

More In Cybersecurity Engineering

PUBLISHED IN

Cybersecurity EngineeringGet updates on our latest work.

Sign up to have the latest post sent to your inbox weekly.

Subscribe Get our RSS feedMore In Cybersecurity Engineering

Get updates on our latest work.

Each week, our researchers write about the latest in software engineering, cybersecurity and artificial intelligence. Sign up to get the latest post sent to your inbox the day it's published.

Subscribe Get our RSS feed