Designing Great Challenges for Cybersecurity Competitions

PUBLISHED IN

Cyber Workforce Development

Recently, the Department of Homeland Security (DHS) identified the need to encourage hands-on learning through cybersecurity competitions to address a shortage of skilled cyber defenders. Likewise, in 2019, Executive Order 13870 addressed the need to identify, challenge, and reward the United States government’s best cybersecurity practitioners and teams across offensive and defensive cybersecurity disciplines. Well-developed cybersecurity competitions offer a way for government organizations to fulfill that order.

The Software Engineering Institute (SEI) has been working with the DHS Cybersecurity & Infrastructure Security Agency (CISA) to bring unique cybersecurity challenges to the federal cyber workforce. This blog post highlights the SEI’s experience developing cybersecurity challenges for the President’s Cup Cybersecurity Competition and general-purpose guidelines and best practices for developing effective challenges. It also discusses tools the SEI has developed and made freely available to support the development of cybersecurity challenges. The SEI technical report Challenge Development Guidelines for Cybersecurity Competitions explores these ideas in greater detail.

The Purpose and Value of Cybersecurity Challenges

Cybersecurity challenges are the heart of cybersecurity competitions. They provide the hands-on tasks competitors perform as part of the competition. Cybersecurity challenges can take several forms and can involve different responses, such as performing actions on one or many virtual machines (VMs), analyzing various types of files or data, or writing code. A single cybersecurity competition might comprise several different challenges.

The goal of these cybersecurity challenges is to teach or assess cybersecurity skills through hands-on exercises. Consequently, when building challenges, developers select mission-critical work roles and tasks from the National Initiative for Cybersecurity Education Workforce Framework for Cybersecurity (NICE Framework), a document published by the National Institute of Standards and Technology (NIST) and the National Initiative for Cybersecurity Careers and Studies (NICCS). The NICE Framework defines 52 work roles and provides detailed information about the specific knowledge, skills, and abilities (KSAs) required to perform tasks in each.

Using the NICE Framework helps developers focus challenges on critical skills that best represent the cybersecurity workforce. Each challenge clearly states which NICE work role and tasks it targets. Because each challenge identifies the knowledge and skills it targets, competitors can easily focus on challenges that address their strengths during the competition and isolate learning opportunities when using challenges for training.

Challenge Planning

Creating successful cybersecurity challenges begins with comprehensive planning to determine the level of difficulty for each challenge, assess the points available for each challenge, and identify the tools required to solve the challenges. In terms of difficulty, competition organizers want participants to feel engaged and challenged. Challenges that are too easy will make more advanced participants lose interest, and challenges that are too hard will frustrate competitors. Competitions generally should include challenges that are suitable for all levels: beginner, intermediate, and advanced.

Scoring

Points systems are used to reward competitors for the time and effort they spend solving each challenge. Moreover, competition organizers can use points to determine competitor placement: competitors with higher scores can advance to future rounds, and organizers can recognize those with the highest points as winners. Points should be commensurate with the difficulty posed by the challenge and effort required to solve it. Point allocation can be a subjective process, a matter we will return to in the Challenge Testing and Review section below.

Challenge Tooling

Identifying the tools required to solve a challenge is an important step in the development process for two reasons:

- It ensures that challenge developers install all required tools in the challenge environment.

- It is good practice to provide competitors a list of tools available in the challenge environment, especially for competitions in which organizers provide competitors with the analysis environment.

Developers should be careful to build challenges that do not require the use of paid or licensed software. Open source or free tools, applications, and operating systems are vital because some competitors might not have access to certain software licenses, which would put them at a disadvantage or even prevent them from competing altogether.

Challenge Development

Developers must be well-versed in cybersecurity subject matter to devise innovative approaches to test competitors. Not only must developers identify the skills the challenge will target and the scenario it will simulate, they must also develop the technical aspects of the challenge, implement an automated and auditable grading system, incorporate variability, and write documentation for both the testers and the competitors.

Pre-Development Considerations

Developers should begin by identifying the work roles and skills their challenge aims to assess. By so doing, they can build more precise challenges and avoid including tasks that do not assess applicable skills or that test too wide an array of skills. After they’ve defined the work role associated with a given challenge, developers can form a challenge idea.

The challenge idea includes the technical tasks competitors must complete and the location in which the challenge scenario will take place. All challenge tasks should resemble the tasks that professionals undertake as part of their jobs. Developers are free to be as creative as they wish when building the scenario. Topical challenges based on real-world cybersecurity events offer another way to add unique and creative scenarios to challenges.

Technical Component Considerations

The technical components of challenge development generally involve VM, network, and service configuration. This configuration ensures the challenge environment deploys correctly when competitors attempt the challenge. Development of technical components might include:

- Configuring VMs or services to incorporate known vulnerabilities

- Configuring routers, firewalls, services, etc., to the state developers want

- Staging attack artifacts or evidence throughout networks or logs

- Completing other actions that prepare the environment for the challenge

Developers might also purposefully misconfigure aspects of the environment if the challenge targets identifying and fixing misconfigurations.

Best Practices for Developing Challenges

Each challenge targets different skills, so there is no standard process for developing a cybersecurity challenge. However, developers should apply the following best practices:

- Ensure the technical skills assessed by the challenge are applicable in the real world.

- Ensure the tools required to solve the challenge are free to use and available to the competitors.

- Make a list of the tools available to competitors in the hosted environment.

- Ensure challenges do not force competitors down a single solution path. Competitors should be able to solve challenges in any realistic manner.

- Remove unnecessary hints or shortcuts from the challenge, including command history, browsing data, and other data that could allow competitors a shortcut to solving the challenge.
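The last practice in the list above lends itself to a simple pre-release check. The following is a minimal sketch, assuming a Linux-style home directory inside the challenge VM. The function name `find_leftover_hints` and the contents of `HINT_FILES` are illustrative assumptions, not part of any SEI tool, and the right list depends on the operating system and tools used in the challenge.

```python
from pathlib import Path
from typing import List

# Assumed examples of files that commonly record a developer's activity.
# Adjust this list for the operating system and tools in the challenge.
HINT_FILES = [
    ".bash_history",
    ".zsh_history",
    ".python_history",
    ".viminfo",
    ".lesshst",
]


def find_leftover_hints(home: Path) -> List[Path]:
    """Return the hint files under `home` that exist and are not empty."""
    leftovers = []
    for name in HINT_FILES:
        candidate = home / name
        # An empty history file reveals nothing, so only flag non-empty ones.
        if candidate.is_file() and candidate.stat().st_size > 0:
            leftovers.append(candidate)
    return leftovers
```

A developer could run a check like this against each user account before saving the challenge and clear anything it reports. It does not cover browsing data or application-specific logs, which need their own review.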

Challenge Grading

In general, developers should automate grading through an authoritative server that receives answers from the competitors and determines how many points to award the submission. The submission system should generally ignore differences in capitalization, white space, special characters, and other variations that are ultimately irrelevant to correctness. Doing so ensures competitors aren’t unfairly penalized for immaterial errors.
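As an illustration, a lenient comparison along these lines might look like the sketch below. The function names and the `keep` parameter are our own assumptions for this example and do not describe how any particular submission system works.

```python
import re
import unicodedata


def normalize_answer(raw: str, keep: str = "") -> str:
    """Reduce a submission to a canonical form before comparison.

    `keep` lists punctuation characters that matter for this question
    and therefore must survive normalization (for example, "." for IPs).
    """
    # Fold Unicode look-alikes (such as full-width letters) together.
    text = unicodedata.normalize("NFKC", raw)
    # Ignore differences in capitalization.
    text = text.casefold()
    # Drop white space and every character that is not a letter, a digit,
    # or explicitly listed in `keep`.
    return re.sub(f"[^0-9a-z{re.escape(keep)}]", "", text)


def is_correct(submitted: str, expected: str, keep: str = "") -> bool:
    """Compare two answers after normalizing both sides the same way."""
    return normalize_answer(submitted, keep) == normalize_answer(expected, keep)
```

For example, `is_correct("C:\\Windows\\System32", "c:/windows/system32")` returns `True`. Stripping every special character is not always safe, though: without `keep="."`, the addresses `10.0.0.1` and `1.0.0.01` normalize to the same string. Developers would need to decide per question which characters are immaterial.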

Ignoring these errors might seem to contradict an assessment of operational readiness in cases where exact precision is required. However, cybersecurity competitions have goals and considerations beyond evaluating operational proficiency, such as ensuring a fair competition and encouraging broad participation.

Developers may apply different grading methods, including the following:

- Token discovery. In token-discovery grading, competitors must find a string or token that follows a defined format (these tokens can also be called “flags”). Developers can place the token in any part of the challenge where the competitor will find it by completing the challenge tasks.

- Question-and-answer problems. For question-and-answer problems, the competitor must find the correct answer to one or more questions by performing challenge tasks. The answers to the challenge questions can take several forms, such as entering file paths, IP addresses, hostnames, usernames, or other fields and formats that are clearly defined.

- Environment verification. In environment verification grading, the system grades competitors based on changes they make to the challenge environment. Challenges can task competitors with fixing a misconfiguration, mitigating a vulnerability, attacking a service, or any other activity where success can be measured dynamically. When the grading system verifies changes to the environment state, it provides competitors with a success token.
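To make environment verification more concrete, the sketch below grades a hypothetical challenge in which the competitor must disable SSH root login. The check function, the grading function, and the token value are all assumptions made for illustration. How a real grading system collects the environment state and delivers the token is outside the scope of this example.

```python
from typing import Callable, Optional


def root_login_disabled(sshd_config: str) -> bool:
    """Hypothetical check: does the configuration set PermitRootLogin to no?"""
    for line in sshd_config.splitlines():
        # Discard comments, then split the directive from its value.
        parts = line.split("#", 1)[0].split()
        if len(parts) >= 2 and parts[0].lower() == "permitrootlogin":
            # sshd uses the first value it obtains for a keyword,
            # so the first matching line decides the result.
            return parts[1].lower() == "no"
    # Directive absent: the competitor has not made the required change.
    return False


def grade_environment(
    state: str, check: Callable[[str], bool], token: str
) -> Optional[str]:
    """Release the success token only when the environment check passes."""
    return token if check(state) else None
```

Passing the check in as a function keeps the grading logic separate from the challenge-specific test, so the same grader could serve challenges that verify a patched service, a firewall rule, or any other measurable state.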

Challenge Variation

Developers should include some level of variation between different deployments of a challenge to allow for different correct answers to the same challenge. Doing so is important for two reasons. First, it helps promote a fair competition by discouraging competitors from sharing answers. Second, it allows competition organizers to reuse challenges without losing educational value. Challenges that can be completed numerous times without resulting in the same answer enable competitors to learn and hone their skills through repeated practice of the same challenge.

Developers can introduce variation into challenges in several ways, depending on the type of grading that they use:

- Token-based variation. Challenges using token-discovery or environment-verification grading can randomly generate unique tokens for each competitor when the challenge is deployed. Developers can insert dynamically generated submission tokens into the challenge environment (e.g., inserting guestinfo variables into VMs), and they can copy them to the locations where they expect competitors to receive the challenge answers.

- Question-and-answer variation. In question-and-answer challenges, developers can introduce variation by configuring different answers to the same questions or by asking different questions.
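Both kinds of variation can be sketched in a few lines. The example below is a minimal illustration, assuming each deployment is described by a small dictionary. The function names, the dictionary keys, and the variant labels are hypothetical, and the step that actually places the token inside the challenge environment is intentionally left out.

```python
import random
import secrets
from typing import Dict, Optional, Sequence


def generate_token(num_bytes: int = 8) -> str:
    """Return a random hexadecimal token for a single deployment."""
    return secrets.token_hex(num_bytes)


def pick_variant(
    variants: Sequence[str], rng: Optional[random.Random] = None
) -> str:
    """Choose one question-and-answer variant for this deployment.

    Passing a seeded `random.Random` makes the choice repeatable in tests;
    otherwise an operating-system randomness source is used.
    """
    chooser = rng if rng is not None else secrets.SystemRandom()
    return chooser.choice(variants)


def build_deployment(competitor_id: str, variants: Sequence[str]) -> Dict[str, str]:
    """Bundle the per-deployment values that the grader must remember."""
    return {
        "competitor": competitor_id,
        "token": generate_token(),
        "variant": pick_variant(variants),
    }
```

Calling `build_deployment("team-a", ["capture-1", "capture-2"])` twice yields different tokens, so an answer copied from another deployment would not be accepted. The grading system has to store these values at deployment time so it can check submissions against them later.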

Challenge Documentation

The two key documents developers must create in support of their challenge are the challenge guide and the solution guide.

The challenge guide, which is visible to the competitors, provides a short description of the challenge, the skills and tasks the challenge assesses, the scenario and any background information that is required to understand the environment, machine credentials, and the submission area or areas.

The challenge document should describe the scenario in a way that competitors can easily follow and understand. The challenge scenario and background information should avoid logical leaps, and the difficulty level should not hinge on information intentionally left out of the guide.

The solution guide provides a walk-through of one way to complete the challenge. During testing, developers use the solution guide to ensure the challenge can be solved. Developers can also release the solution guide to the public after the conclusion of the competition to serve as a community learning resource.

The intended audience for this guide is the general cybersecurity community. Consequently, developers should assume the reader is familiar with basic IT and cybersecurity skills, but is not an expert in the field. Screenshots and other images are helpful additions to these guides.

Challenge Testing and Review

After developers build a challenge, it should go through several rounds of testing and review. Developers test challenges to ensure quality, and they review them to estimate the challenge’s difficulty.

Developers should perform an initial round of testing to catch any errors that arise during the challenge deployment and initialization process. They should also ensure that competitors can fully solve the challenge in at least one way. A second round of testing should be conducted by qualified technical staff unfamiliar with the challenge. Testers should be encouraged to attempt solving the challenge on their own but may be provided the developer’s solution guide for help.

The testers should ensure each challenge meets the following quality assurance criteria:

- The challenge deploys as expected and without errors.

- The challenge VMs are accessible.

- The challenge is solvable.

- There are no unintentional shortcuts to solving the challenge.

- Challenge instructions and questions are properly formatted and give a clear indication of what competitors must do.

In their review of the challenge, testers should take notes about the content, including estimates of difficulty and length of time it would take competitors to solve. After testers complete their review, competition organizers can examine the difficulty assessments and compare each challenge with others. This comparison ensures that easier challenges remain in earlier rounds and are worth fewer points than challenges judged as more difficult.

When deciding challenge point allocations, organizers can use a base or standard score allotment as a starting point (e.g., all challenges are worth 1,000 points at the beginning of the process). Organizers can then increase or decrease point allocations based on the available difficulty data, keeping in mind that the main goal is for the number of points they allocate to a challenge to directly correspond with the effort required for solving it. Point allocations should consider both the difficulty and the time it takes to solve the challenge.
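One way to turn tester feedback into a point value is sketched below. This is only an illustration of the base-score idea. The 1-to-5 difficulty scale, the reference values, the equal weighting of difficulty and time, and the rounding step are all assumptions that organizers would tune for their own competition.

```python
from statistics import mean
from typing import Sequence

BASE_POINTS = 1000  # starting allotment, as in the example above


def allocate_points(
    difficulty_ratings: Sequence[float],
    minutes_estimates: Sequence[float],
    reference_difficulty: float = 3.0,
    reference_minutes: float = 60.0,
    step: int = 50,
) -> int:
    """Scale the base score by tester-reported difficulty and solve time.

    A challenge rated at the reference difficulty and reference solve time
    keeps the base score; harder or longer challenges earn more points.
    """
    difficulty_factor = mean(difficulty_ratings) / reference_difficulty
    time_factor = mean(minutes_estimates) / reference_minutes
    # Weight difficulty and time equally (an assumption of this sketch).
    raw = BASE_POINTS * (difficulty_factor + time_factor) / 2
    # Round to a multiple of `step` so scores stay easy to read.
    return int(round(raw / step) * step)
```

With these defaults, two testers who rate a challenge 4 and 5 and estimate 90 and 150 minutes produce `allocate_points([4, 5], [90, 150])`, which evaluates to 1750. Organizers would still review the result by hand, since point allocation remains partly subjective.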

SEI Open Source Applications for Cybersecurity Challenge Competitions

Developers can use several open source applications to develop challenges and to orchestrate cybersecurity competitions. The SEI has developed the following two applications for running cybersecurity competitions:

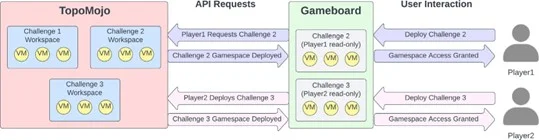

- TopoMojo is an open source lab builder and player application that developers can use to develop cybersecurity challenges. It provides virtual workspaces in which challenge development can take place. The workspaces allow developers to add VMs, virtual networks, and any other resources that are required for developing or solving a single challenge.

- Gameboard is an open source application that organizers can use for orchestrating cybersecurity competitions. It enables organizers to create competitions that can either be team or individual based and that consist of either single or multiple rounds. Challenges are organized into rounds and competitors attempt to solve as many challenges as they can to maximize their score. Gameboard uses the TopoMojo API to deploy the competitors’ game space for each challenge.

Gameboard also serves as the authoritative location for competitors to submit answers or tokens. Moreover, as part of handling answer and token submissions, Gameboard has logging, brute force protections, and other features to ensure the integrity of the competition.

Figure 1 shows how the TopoMojo and Gameboard applications interact. Developers use TopoMojo workspaces to develop challenges. Competitors then use Gameboard to deploy and interact with challenges. When a player deploys a challenge, Gameboard will interact with the TopoMojo API to request a new game space for the competitor. TopoMojo creates and returns the player’s challenge game space.

Best Practices Support Better Cybersecurity Competitions

The development practices we have highlighted in this post are the result of the SEI’s experience developing cybersecurity challenges for the President’s Cup Cybersecurity Competition. Cybersecurity competitions provide a fun and interesting way to exercise technical skills, identify and recognize cybersecurity talent, and engage students and professionals in the field. They can also serve as education and training opportunities. With the United States government, and the nation as a whole, facing a significant shortage in the cybersecurity workforce, cybersecurity competitions play an important role in developing and expanding the workforce pipeline.

There is no single way to run a competition, and there is no one way to develop cybersecurity challenges. However, these best practices can help developers ensure the challenges they create are effective and engaging. Challenge development is the single most important and time-consuming aspect of running a cybersecurity competition. It requires meticulous planning, technical development, and a rigorous quality-assurance process. In our experience, these practices ensure successfully executed competitions and enduring, hands-on cybersecurity assets that competition organizers and others can reuse many times over.

If you would like to learn more about the work we do to strengthen the cybersecurity workforce and the tools we have developed to support this mission, contact us at info@sei.cmu.edu.

Additional Resources

For a much more in-depth discussion of the best practices for creating great challenges for cybersecurity competitions described in this post, read the SEI technical report Challenge Development Guidelines for Cybersecurity Competitions.

TopoMojo, an SEI open source lab builder and player application

Gameboard, an SEI open source cybersecurity competition support application

Part of a Collection

Cyber Readiness Research
