Cyber-Informed Machine Learning

PUBLISHED IN

Cybersecurity Engineering

Consider a security operations center (SOC) that monitors network and endpoint data in real time to identify threats to its enterprise. Depending on the size of the organization, the SOC may receive about 200,000 alerts per day. Only a small portion of these alerts can receive human attention because each investigated alert may require 15 to 20 minutes of analyst attention to answer a critical question for the enterprise: Is this a benign event, or is my organization under attack? This is a challenge for nearly all organizations, since even small enterprises generate far more network, endpoint, and log events than humans can effectively monitor. SOCs therefore must employ security monitoring software to pre-screen and downsample the number of logged events requiring human investigation.

Machine learning (ML) for cybersecurity has been researched extensively because SOC activities are data rich, and ML is now increasingly deployed into security software. ML is not yet broadly trusted in SOCs, and a major barrier is that ML methods suffer from a lack of explainability. Without explanations, it is reasonable for SOC analysts not to trust the ML.

Outside of cybersecurity, there are broad general demands for ML explainability. The European General Data Protection Regulation (Article 22 and Recital 71) encodes into law the “right to an explanation” when ML is used in a way that significantly affects an individual. The SOC analyst also has a need for explanations because the decisions they must make, often under time pressure and with ambiguous information, can have significant impacts on their organization.

We propose cyber-informed machine learning as a conceptual framework for emphasizing three types of explainability when ML is used for cybersecurity:

- data-to-human

- model-to-human

- human-to-model

In this blog post, we provide an overview of each type of explainability, and we recommend research needed to achieve the level of explainability necessary to encourage use of ML-based systems intended to support cybersecurity operations.

Data-to-Human Explainability

Data-to-human explainability seeks to answer: What is my data telling me? It is the most mature form of explainability, and it is a primary motivation of statistics, data science, and related fields. In the SOC, a basic use case is to understand the normal network traffic profile, and a more specific use case might be to understand the history of a particular internal Internet Protocol (IP) address interacting with a particular external IP address.
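As a rough illustration of the more specific use case, the sketch below summarizes the day-by-day history of one internal/external IP pair. The field names (`src_ip`, `dst_ip`, `bytes`, `start`), the ISO-8601 timestamp format, and the sample flows are all hypothetical assumptions, not a real NetFlow export format.

```python
from collections import defaultdict


def pair_history(flows, internal_ip, external_ip):
    """Summarize per-day traffic between one internal and one external IP.

    Flows in either direction count, because the pair is matched as a set.
    Assumes hypothetical "src_ip", "dst_ip", "bytes", and an ISO-8601
    "start" timestamp such as "2024-12-11T08:30:00".
    """
    history = defaultdict(lambda: {"flows": 0, "bytes": 0})
    for flow in flows:
        if {flow["src_ip"], flow["dst_ip"]} == {internal_ip, external_ip}:
            day = flow["start"][:10]  # the YYYY-MM-DD prefix of the timestamp
            history[day]["flows"] += 1
            history[day]["bytes"] += flow["bytes"]
    return dict(sorted(history.items()))


# Hypothetical flows; the last one involves a different internal host.
flows = [
    {"src_ip": "10.0.0.5", "dst_ip": "198.51.100.23", "bytes": 1200, "start": "2024-12-10T09:15:00"},
    {"src_ip": "198.51.100.23", "dst_ip": "10.0.0.5", "bytes": 800, "start": "2024-12-10T09:15:02"},
    {"src_ip": "10.0.0.5", "dst_ip": "198.51.100.23", "bytes": 400, "start": "2024-12-11T22:40:00"},
    {"src_ip": "10.0.0.9", "dst_ip": "198.51.100.23", "bytes": 999, "start": "2024-12-11T22:41:00"},
]
print(pair_history(flows, "10.0.0.5", "198.51.100.23"))
# {'2024-12-10': {'flows': 2, 'bytes': 2000}, '2024-12-11': {'flows': 1, 'bytes': 400}}
```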

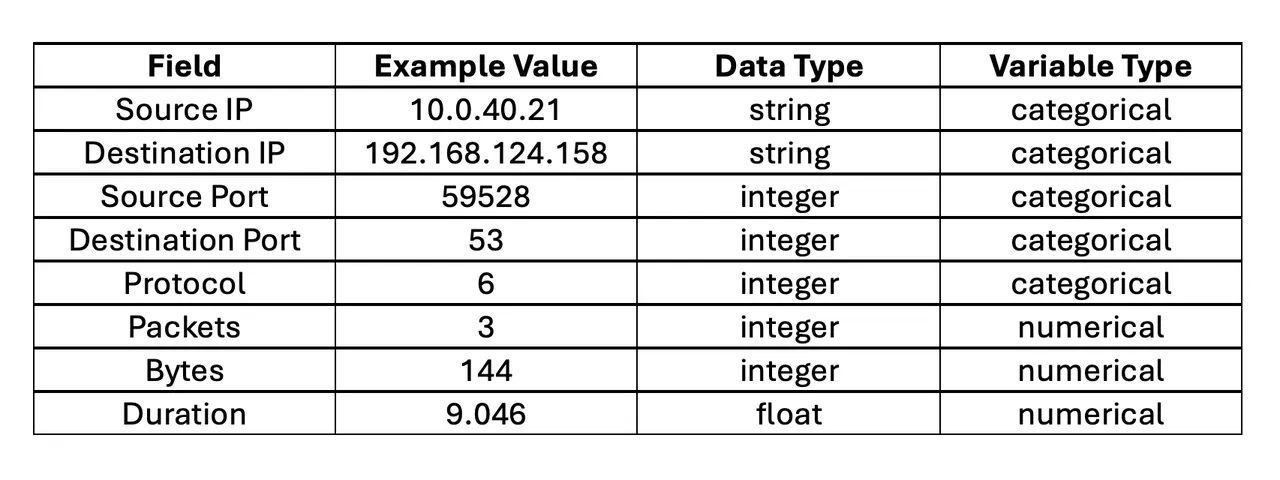

While this type of explainability may seem straightforward, there are several cybersecurity-specific challenges. For example, consider the NetFlow fields identified in Table 1.

ML methods can readily be applied to the numerical fields: packets, bytes, and duration. However, source IP and destination IP are strings, and in the context of ML they are categorical variables. A variable is categorical if its range of possible values is a set of levels (categories). While source port, destination port, protocol, and type are represented as integers, they are actually categorical variables. Furthermore, they are non-ordinal because their levels have no sense of order or scale (e.g., port 59528 is not somehow subsequent to or larger than port 53).
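One way to make the numerical/categorical distinction explicit in code is to tag each field by its variable type before any modeling. The minimal sketch below assumes hypothetical field names standing in for Table 1 and an invented sample record; it stores ports, protocol, and type as string labels so they cannot be used in arithmetic, and uses one-hot encoding, which represents a non-ordinal value without implying any order.

```python
# Hypothetical field names standing in for the Table 1 NetFlow fields.
NUMERICAL = {"packets", "bytes", "duration"}
CATEGORICAL = {"src_ip", "dst_ip", "src_port", "dst_port", "protocol", "type"}


def split_fields(record: dict) -> tuple[dict, dict]:
    """Separate a NetFlow record into numerical and categorical parts.

    Ports, protocol, and type arrive as integers but are categorical
    labels, so they are converted to strings to prevent arithmetic on them.
    """
    numerical = {k: float(v) for k, v in record.items() if k in NUMERICAL}
    categorical = {k: str(v) for k, v in record.items() if k in CATEGORICAL}
    return numerical, categorical


def one_hot(value: str, levels: list[str]) -> list[int]:
    """Encode a non-ordinal categorical value as a 0/1 indicator vector."""
    return [1 if value == level else 0 for level in levels]


# Invented example record (a UDP DNS query).
record = {"src_ip": "10.0.0.5", "dst_ip": "8.8.8.8", "src_port": 59528,
          "dst_port": 53, "protocol": 17, "type": 0,
          "packets": 2, "bytes": 142, "duration": 7.639}
num, cat = split_fields(record)
print(num)                                     # {'packets': 2.0, 'bytes': 142.0, 'duration': 7.639}
print(repr(cat["dst_port"]))                   # '53'
print(one_hot(cat["dst_port"], ["53", "80", "443"]))  # [1, 0, 0]
```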

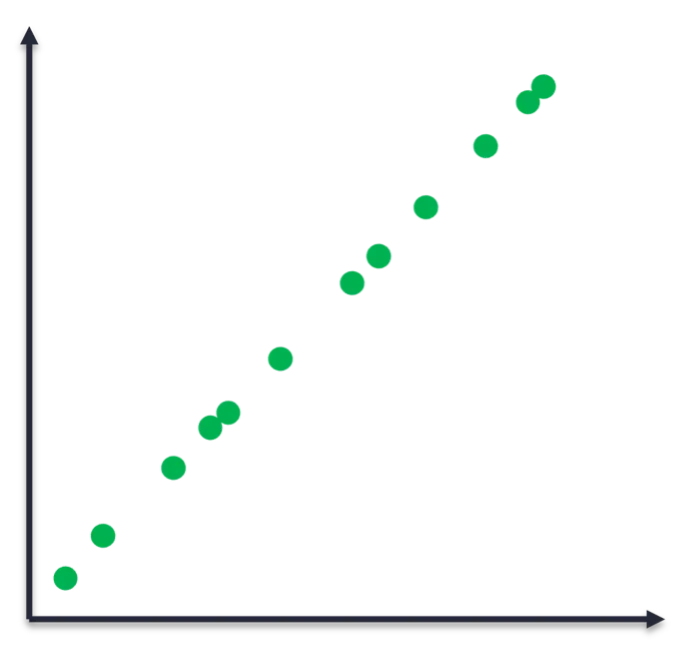

Consider the data points in Figure 1 to understand why the distinction between numerical and categorical variables is important. The underlying function that generated the data is clearly linear, and because the input variable is numerical, its values have an order and a scale that a model can exploit. We can therefore fit a linear model and use it to predict future points. Input variables that are non-ordinal categorical (e.g., IP addresses, ports, and protocols) challenge ML because there is no such order or scale to leverage. These challenges often limit us to basic statistics and threshold alerts in SOC applications.
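The contrast can be sketched with ordinary least squares. The points below are hypothetical stand-ins for Figure 1, and the protocol byte counts are invented; the point is that the fit on a numerical input yields a meaningful prediction, while two equally arbitrary integer codings of the same protocol labels produce slopes with opposite signs, so the "trend" is an artifact of the coding.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Numerical input: hypothetical points on the line y = 2x + 1.
slope, intercept = fit_line([1, 2, 3, 4, 5], [3, 5, 7, 9, 11])
print(slope, intercept)        # 2.0 1.0
print(slope * 6 + intercept)   # 13.0, a meaningful prediction at x = 6

# Categorical input: bytes per flow for TCP, UDP, ICMP (hypothetical values),
# under two equally arbitrary integer codings of the protocol labels.
bytes_per_flow = [1500, 90, 60]
slope_a, _ = fit_line([0, 1, 2], bytes_per_flow)  # TCP=0, UDP=1, ICMP=2
slope_b, _ = fit_line([2, 0, 1], bytes_per_flow)  # TCP=2, UDP=0, ICMP=1
print(slope_a, slope_b)        # -720.0 705.0
```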

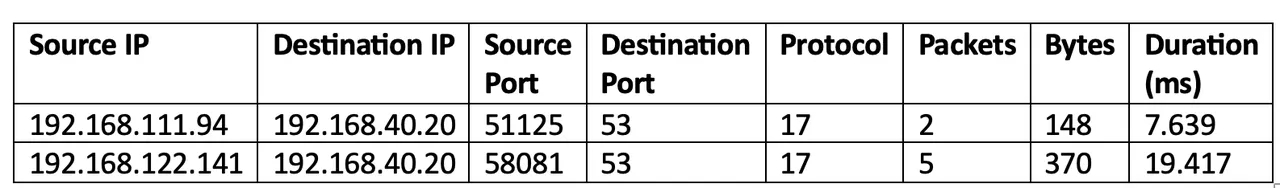

A related challenge is that cyber data often have a weak notion of distance. For example, how would we quantify the distance between the two NetFlow logs in Table 2? For the numerical variable flow duration, the distance between the two logs is 19.417 – 7.639, or 11.778 milliseconds. However, there is no similar notion of distance between the two ephemeral ports, nor between the other categorical variables.

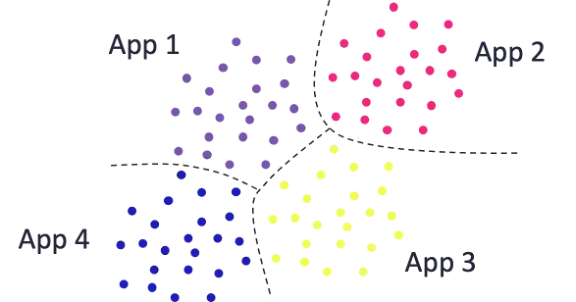

There are some techniques for quantifying similarity between logs with categorical variables. For example, we could count the number of identically valued fields between the two logs. Logs that share more field values in common are in some sense more similar. Now that we have some quantitative measure of distance, we can try unsupervised clustering to discover natural clusters of logs within the data. We might hope that these clusters would be cyber-meaningful, such as grouping by the application that generated each log, as Figure 2 depicts. However, such cyber-meaningful groupings do not occur in practice without some cajoling, and that cajoling is an example of cyber-informed machine learning: imparting our human cyber expertise into the ML pipeline.
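The field-counting idea corresponds to what is often called the simple matching coefficient. The sketch below computes it alongside the ordinary numerical distance for duration. Only the two durations come from the text; every other field value is invented for illustration, and the field list is a hypothetical assumption.

```python
CATEGORICAL_FIELDS = ["src_ip", "dst_ip", "src_port", "dst_port", "protocol"]


def matching_similarity(a: dict, b: dict, fields: list[str]) -> float:
    """Fraction of fields with identical values (simple matching coefficient).

    One minus this value can serve as a distance for clustering.
    """
    matches = sum(1 for f in fields if a[f] == b[f])
    return matches / len(fields)


# Hypothetical logs: only the durations come from the text.
log_a = {"src_ip": "10.0.0.5", "dst_ip": "10.0.0.1", "src_port": 59528,
         "dst_port": 53, "protocol": 17, "duration": 19.417}
log_b = {"src_ip": "10.0.0.5", "dst_ip": "10.0.0.1", "src_port": 61004,
         "dst_port": 53, "protocol": 17, "duration": 7.639}

# Numerical field: ordinary absolute difference.
print(round(abs(log_a["duration"] - log_b["duration"]), 3))  # 11.778

# Categorical fields: 4 of 5 match (only the ephemeral source ports differ).
similarity = matching_similarity(log_a, log_b, CATEGORICAL_FIELDS)
print(similarity)  # 0.8
```

Note that this measure treats every mismatch equally: two different ephemeral ports count as just as "far apart" as two unrelated IP addresses, which is one reason naive clustering on it rarely yields cyber-meaningful groups.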

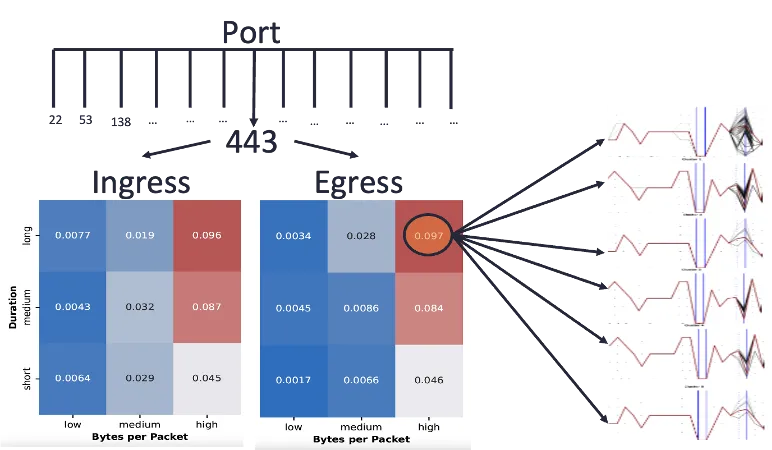

Figure 3 illustrates how we might impart human knowledge into an ML pipeline. Instead of naively clustering all the logs without any preprocessing, data scientists can elicit from cyber analysts the relationships they already know to exist in the data, as well as the types of clusters they would like to understand better. For example, port, flow direction, and packet volumetrics might be of interest. In that case we might pre-partition the logs by those fields, and then perform clustering on the resulting bins to understand their composition.
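The pre-partitioning step might be sketched as follows. The internal address range, the volume thresholds, and the field names are hypothetical placeholders for values an analyst would supply. The sketch stops at partitioning; a clustering method of the data scientist's choice would then run separately within each bin.

```python
import ipaddress
from collections import defaultdict

# Hypothetical internal address space; a real SOC would supply its own ranges.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_NETS)


def flow_direction(src: str, dst: str) -> str:
    src_in, dst_in = is_internal(src), is_internal(dst)
    if src_in and not dst_in:
        return "outbound"
    if dst_in and not src_in:
        return "inbound"
    return "internal" if src_in else "external"


def volume_bin(packets: int) -> str:
    # Hypothetical thresholds; in practice these would be chosen with analysts.
    if packets <= 10:
        return "small"
    if packets <= 1000:
        return "medium"
    return "large"


def partition(logs: list[dict]) -> dict:
    """Group logs into bins keyed by (destination port, direction, volume)."""
    bins = defaultdict(list)
    for log in logs:
        key = (log["dst_port"],
               flow_direction(log["src_ip"], log["dst_ip"]),
               volume_bin(log["packets"]))
        bins[key].append(log)
    return dict(bins)


logs = [
    {"src_ip": "10.0.0.5", "dst_ip": "8.8.8.8", "dst_port": 53, "packets": 2},
    {"src_ip": "10.0.0.7", "dst_ip": "8.8.4.4", "dst_port": 53, "packets": 2},
    {"src_ip": "10.0.0.5", "dst_ip": "93.184.216.34", "dst_port": 443, "packets": 350},
    {"src_ip": "203.0.113.9", "dst_ip": "10.0.0.20", "dst_port": 22, "packets": 4},
]
for key, members in sorted(partition(logs).items()):
    print(key, len(members))
# (22, 'inbound', 'small') 1
# (53, 'outbound', 'small') 2
# (443, 'outbound', 'medium') 1
```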

While data-to-human is the most mature type of explainability, we have discussed some of the challenges that cyber data present. Exacerbating these challenges is the large volume of data that cyber processes generate. It is therefore important for data scientists to engage cyber analysts and find ways to impart their expertise into the analysis pipelines.

Model-to-Human Explainability

Model-to-human explainability seeks to answer: What is my model telling me and why? A common SOC use case is understanding why an anomaly detector alerted to a particular event. To avoid worsening the alert burden already facing SOC analysts, it is critical that ML systems deployed in the SOC include model-to-human explainability.

Demand for model-to-human explainability is increasing as more organizations deploy ML into production environments. The European General Data Protection Regulation, the National Artificial Intelligence Engineering initiative, and a widely cited article in Nature Machine Intelligence all emphasize the importance of model-to-human explainability.

ML models can be classified as white box or black box, depending on how readily their parameters can be inspected and interpreted. White box models can be fully interpretable, and the basis for their predictions can be understood precisely. Note that even white box models can lack interpretability, especially when they become very large. White box models include linear regression, logistic regression, decision trees, and neighbor-based methods (e.g., k-nearest neighbors). Black box models are not interpretable, and the basis for their predictions must be inferred indirectly through methods like examining global and local feature importance. Black box models include neural networks, ensemble methods (e.g., random forest, isolation forest, XGBoost), and kernel-based methods (e.g., support vector machines).
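To make "the basis for their predictions can be understood precisely" concrete, here is a sketch for logistic regression, one of the white box models listed above. The coefficients and feature names are hypothetical, not taken from any real detector. Because the log-odds are a sum of weight-times-value terms, each feature's contribution to a specific alert can be read off directly.

```python
import math

# Hypothetical coefficients of a trained logistic-regression alert scorer.
WEIGHTS = {"bytes_out_mb": 0.8, "failed_logins": 1.5, "off_hours": 0.6}
BIAS = -4.0


def explain(features: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return the alert probability and each feature's additive contribution.

    For this model the log-odds are exactly BIAS + sum(weight * value),
    so the contributions fully account for the prediction.
    """
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, ranked = explain({"bytes_out_mb": 2.0, "failed_logins": 3, "off_hours": 1})
print(round(prob, 3))                # 0.937
print([name for name, _ in ranked])  # ['failed_logins', 'bytes_out_mb', 'off_hours']
```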

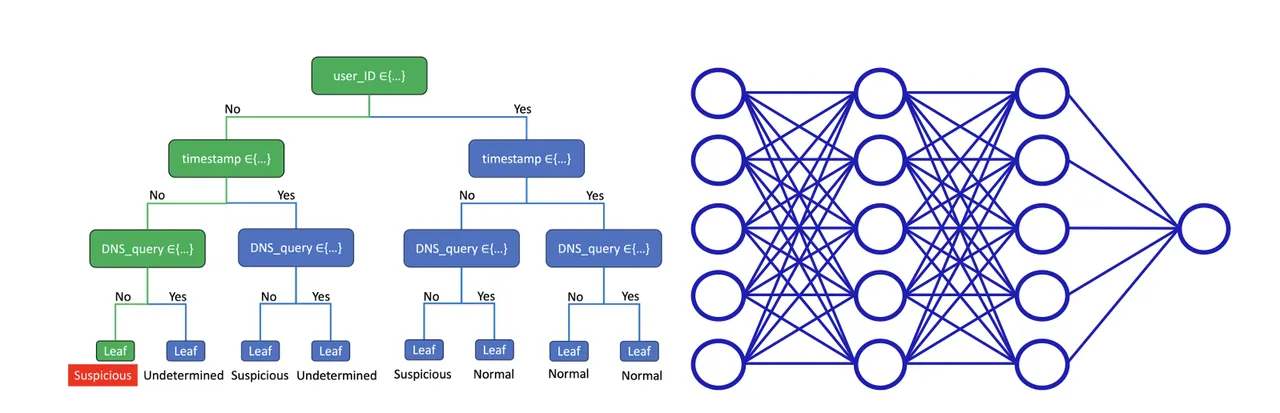

In our previous blog post, we discussed the decision tree as an example of a white box predictive model admitting a high degree of model-to-human explainability; every prediction is completely interpretable. After a decision tree is trained, its rules can be implemented directly in software without having to use the ML model object. These rules can be presented visually in the form of a tree (Figure 4, left panel), easing communication to non-technical stakeholders. Inspecting the tree provides quick and intuitive insights into what features the model estimates to be most predictive of the response.
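As a sketch of implementing a trained tree's rules directly in software, the function below hand-translates a small, entirely hypothetical tree (its features, thresholds, and labels are invented). Returning the path of conditions alongside the label is one way to surface the "completely interpretable" basis of each prediction to an analyst.

```python
def tree_predict(flow: dict) -> tuple[str, list[str]]:
    """Hand-translated rules from a hypothetical trained decision tree.

    Each branch mirrors one split, so the returned path lists exactly the
    conditions that produced the prediction.
    """
    path = []
    if flow["dst_port"] == 53:
        path.append("dst_port == 53")
        if flow["bytes"] > 10_000:
            path.append("bytes > 10000")
            return "alert", path
        path.append("bytes <= 10000")
        return "benign", path
    path.append("dst_port != 53")
    if flow["failed_logins"] > 5:
        path.append("failed_logins > 5")
        return "alert", path
    path.append("failed_logins <= 5")
    return "benign", path


print(tree_predict({"dst_port": 53, "bytes": 48_000, "failed_logins": 0}))
# ('alert', ['dst_port == 53', 'bytes > 10000'])
```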

Complex models like neural networks (Figure 4, right panel) can sometimes model complex systems more accurately, but not always. For example, a survey by Xin et al. compares the performance of various model forms, developed by many researchers, across many benchmark cybersecurity datasets. This survey shows that simple models like decision trees often perform similarly to complex models like neural networks. A tradeoff occurs when complex models outperform more interpretable models: improved performance comes at the expense of reduced explainability. However, the survey by Xin et al. also shows that the improved performance is often incremental, and in these cases we think that system architects should favor the interpretable model for the sake of model-to-human explainability.

Human-to-Model Explainability

Human-to-model explainability seeks to enable end users to influence an existing trained model. Consider an SOC analyst wanting to tell the anomaly detection model not to alert to a particular log type anymore because it’s benign. Because the end user is seldom a data scientist, a key part of human-to-model explainability is integrating adjustments into a predictive model based on judgments made by SOC analysts. This is the least mature form of explainability and requires new research.
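The simplest form of the analyst scenario above can be sketched as a wrapper that applies analyst judgments on top of an existing detector's scores. Everything here is hypothetical: the class, the `log_type` field, and the toy scoring function. Note that this only filters alerts after scoring; it does not change what the model has learned, which is part of why the post calls for new research on integrating such feedback into the model itself.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FeedbackDetector:
    """Hypothetical wrapper letting analysts suppress alerts on benign log types.

    The underlying model is untouched; analyst judgments are applied as
    rules on top of its scores.
    """
    score: Callable[[dict], float]  # any existing anomaly-scoring function
    threshold: float
    benign_types: set = field(default_factory=set)

    def mark_benign(self, log_type: str) -> None:
        """Record an analyst's judgment that a log type should no longer alert."""
        self.benign_types.add(log_type)

    def should_alert(self, log: dict) -> bool:
        if log["log_type"] in self.benign_types:
            return False
        return self.score(log) >= self.threshold


detector = FeedbackDetector(score=lambda log: log["bytes"] / 1000, threshold=5.0)
nightly_backup = {"log_type": "backup_sync", "bytes": 9000}
print(detector.should_alert(nightly_backup))  # True
detector.mark_benign("backup_sync")
print(detector.should_alert(nightly_backup))  # False
```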

A simple example is the encoding step of an ML pipeline. Recall that ML requires numerical features, but cyber data include many categorical features. Encoding is a technique that transforms categorical into numerical features, and there are many generic encoding techniques. For example, integer encoding might assign each IP address to an arbitrary integer. This would be naïve, and a better approach would be to work with the SOC analyst to develop cyber-meaningful encoding strategies. For example, we might group IP addresses into internal and external, by geographic region, or by using threat intelligence. By doing this, we impart cyber expertise into the data science pipeline, and this is an example of human-to-model explainability.
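The contrast between naive and cyber-meaningful encoding might look like the sketch below, which uses Python's standard `ipaddress` module. The internal network range and the threat-intelligence set are hypothetical placeholders for what analysts would provide; grouping by geographic region is omitted because it would require an external GeoIP database.

```python
import ipaddress

# Hypothetical organizational ranges and threat-intelligence indicators.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]
THREAT_INTEL = {"198.51.100.23"}


def integer_encode(values: list[str]) -> list[int]:
    """Naive encoding: each distinct value gets the next integer, in order seen."""
    codes: dict[str, int] = {}
    return [codes.setdefault(v, len(codes)) for v in values]


def cyber_encode(ip: str) -> dict[str, int]:
    """Analyst-informed features that carry cyber meaning for each IP."""
    addr = ipaddress.ip_address(ip)
    return {
        "is_internal": int(any(addr in net for net in INTERNAL_NETS)),
        "on_threat_list": int(ip in THREAT_INTEL),
    }


ips = ["10.0.0.5", "8.8.8.8", "10.0.0.5", "198.51.100.23"]
print(integer_encode(ips))   # [0, 1, 0, 2]
print(cyber_encode(ips[3]))  # {'is_internal': 0, 'on_threat_list': 1}
```

The integer codes depend only on the order in which addresses happened to appear, whereas each analyst-informed feature answers a question a SOC analyst would actually ask about the address.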

We take inspiration from a successful movement called physics-informed machine learning [Karniadakis et al. and Hao et al.], which is enabling ML to be used in some engineering design applications. In physics, we have governing equations that describe natural laws like the conservation of mass and the conservation of energy. Governing equations are encoded into models used for engineering analysis. If we were to exercise these models over a large design space, we could use the resulting data (inputs mapped to outputs) to train ML models. This is one example of how our human expertise in physics can be imparted into ML models.

In cybersecurity, we do not have stable mathematical models of system and user behavior, but we do have sources of cyber expertise. We have human cyber analysts with knowledge, reasoning, and intuition built on experience. We also have cyber analytics, which are encoded forms of our human expertise. Like the physics community, cybersecurity needs methods that enable our rich human expertise to influence ML models that we use.

Recommendations for Cybersecurity Organizations Using ML

We conclude with a few practical recommendations for cybersecurity organizations using ML. Data-to-human explainability methods are relatively mature. Organizations seeking to learn more from their data can transition methods from existing research and off-the-shelf tools into practice.

Model-to-human explainability can be greatly improved by assigning, at least in the early stages of adoption, a data scientist to support the ML end users as questions arise. Developing cybersecurity data citizens internally is also helpful, and there are abundant professional development opportunities to help cyber professionals acquire these skills. Finally, end users can inquire with their security software vendors as to whether their ML tools include various kinds of explainability. ML models should at least report feature importance—indicating which features of inputs are most influential to the model’s predictions.
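Permutation importance is one common, model-agnostic way to report global feature importance; it is one technique among several, not necessarily what any given vendor uses. The sketch below is a pure-Python version on toy data where, by construction, the label depends only on the first feature. scikit-learn users can instead use `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y) -> float:
    """Fraction of rows where the model's prediction equals the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is randomly shuffled.

    A large drop means the model relies on that feature; a drop of zero
    means shuffling it did not change accuracy on this data.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            permuted = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value
                permuted.append(new_row)
            drops.append(baseline - accuracy(model, permuted, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy data: the label equals feature 0; feature 1 is ignored by the model.
X = [[i % 2, i % 3] for i in range(20)]
y = [i % 2 for i in range(20)]


def model(row):
    return row[0]


print(permutation_importance(model, X, y))  # first value positive, second 0.0
```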

While research is required to further develop human-to-model explainability methods in cybersecurity, there are a few steps that can be taken now. End users can inquire with their security software vendors as to whether their ML tools can be calibrated with human feedback. SOCs might also consider collecting benign alerts dispositioned by manual investigation into a structured database for future model calibration. Finally, the act of retraining a model is a form of calibration, and evaluating when and how SOC models are retrained can be a step toward influencing their performance.
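A structured store for manually dispositioned alerts can start very small. The sketch below uses Python's built-in `sqlite3` module with a hypothetical schema; the table name, columns, and sample rows are illustrative assumptions, and a production SOC would more likely use its existing case-management or data platform.

```python
import sqlite3

# Hypothetical schema for analyst-dispositioned alerts.
SCHEMA = """
CREATE TABLE IF NOT EXISTS dispositioned_alerts (
    alert_id    TEXT PRIMARY KEY,
    detector    TEXT NOT NULL,
    log_type    TEXT NOT NULL,
    disposition TEXT NOT NULL CHECK (disposition IN ('benign', 'malicious')),
    analyst     TEXT NOT NULL,
    rationale   TEXT,
    decided_at  TEXT NOT NULL  -- ISO-8601 timestamp
)
"""


def record_disposition(conn, alert_id, detector, log_type, disposition,
                       analyst, rationale, decided_at):
    """Store one manually investigated alert and the analyst's conclusion."""
    conn.execute(
        "INSERT INTO dispositioned_alerts VALUES (?, ?, ?, ?, ?, ?, ?)",
        (alert_id, detector, log_type, disposition, analyst, rationale, decided_at),
    )
    conn.commit()


def benign_counts(conn):
    """Count benign dispositions per log type, a candidate input for calibration."""
    return conn.execute(
        "SELECT log_type, COUNT(*) FROM dispositioned_alerts "
        "WHERE disposition = 'benign' GROUP BY log_type ORDER BY log_type"
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
record_disposition(conn, "A-1001", "netflow-anomaly", "backup_sync", "benign",
                   "analyst1", "scheduled nightly backup", "2024-12-10T02:05:00")
record_disposition(conn, "A-1002", "netflow-anomaly", "backup_sync", "benign",
                   "analyst2", "scheduled nightly backup", "2024-12-11T02:04:00")
record_disposition(conn, "A-1003", "netflow-anomaly", "dns_lookup", "benign",
                   "analyst1", "internal resolver", "2024-12-11T09:30:00")
print(benign_counts(conn))  # [('backup_sync', 2), ('dns_lookup', 1)]
```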

Additional Resources

Read the blog post Explainability in Cybersecurity Data Science.

Read the blog post What is Explainable AI?

References cited in this post include the following:

- The Carnegie Mellon University Software Engineering Institute, AI Engineering: A National Initiative. https://www.sei.cmu.edu/our-work/projects/display.cfm?customel_datapageid_4050=311883. Accessed: 2023-08-29.

- European Parliament and Council of the European Union, Regulation (EU) 2016/679 of the European Parliament and of the Council. https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679. Accessed: 2024-12-11.

- Field, T. The Power of Cognitive Security: Denis Kennelly of IBM Security Describes a Cognitive Security Operations Center. Information Security Media Group (iSMG). February 15, 2017. https://www.bankinfosecurity.com/kennelly-ibm-a-9697. Accessed: 2024-12-11.

- Goodman, B., & Flaxman, S. EU regulations on algorithmic decision-making and a “right to explanation”, in ICML Workshop on Human Interpretability in Machine Learning (WHI 2016), New York, NY. http://arxiv.org/abs/1606.08813v1. Accessed: 2024-12-11.

- Hao, Z., Liu, S., Zhang, Y., Ying, C., Feng, Y., Su, H., & Zhu, J. (2022). Physics-informed machine learning: A survey on problems, methods and applications. arXiv preprint arXiv:2211.08064. https://arxiv.org/pdf/2211.08064. Accessed: 2024-12-11.

- Karniadakis, G. E., Kevrekidis, I. G., Lu, L., Perdikaris, P., Wang, S., & Yang, L. (2021). Physics-informed machine learning. Nature Reviews Physics, 3(6), 422-440. https://www.nature.com/articles/s42254-021-00314-5. Accessed: 2024-12-11.

- Mellon, J., & Worrell, C. (2023, November 20). Explainability in Cybersecurity Data Science. https://doi.org/10.58012/5jky-v459. Accessed: 2024-12-11.

- Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1, 206–215. https://www.nature.com/articles/s42256-019-0048-x. Accessed: 2024-12-11.

- Sarker, I. H., Kayes, A. S. M., Badsha, S., Alqahtani, H., Watters, P., & Ng, A. (2020). Cybersecurity data science: an overview from machine learning perspective. Journal of Big Data, 7, 1-29. https://link.springer.com/content/pdf/10.1186/s40537-020-00318-5.pdf. Accessed: 2024-12-11.

- Xin, Y., Kong, L., Liu, Z., Chen, Y., Li, Y., Zhu, H., Gao, M., Hou, H., & Wang, C. (2018). Machine learning and deep learning methods for cybersecurity. IEEE Access, 6, 35365–35381. https://ieeexplore.ieee.org/document/8359287. Accessed: 2024-12-11.
