Measuring Resilience in Artificial Intelligence and Machine Learning Systems

PUBLISHED IN

Artificial Intelligence Engineering

Artificial intelligence (AI) and machine learning (ML) systems are quickly becoming integrated into a wide array of business and military operational environments. Organizations should ensure the resilience of these new systems, just as they would any other mission-critical asset. However, the "black box" decision-making processes that can make AI and ML systems so useful may also require measures of resilience that differ from traditional ones. This blog post describes the challenges of measuring the resilience of AI and ML systems with the current set of resilience assessment tools. While the discussion in this post applies to all AI systems, whether driven by ML or not, we will primarily focus on ML-based systems, as they present the most difficult set of challenges. We believe that solving these problems requires expanding the existing set of tools, and that the underlying models may themselves need to change as the ramifications of employing AI and ML systems are better understood.

New Technology, New Challenges

Both the Executive Office of the President of the United States' National Artificial Intelligence Research and Development Strategic Plan and the Summary of the 2018 Department of Defense Artificial Intelligence Strategy identify specific roles that ML systems are expected to play in our national security, including supply chain management, safety assurance, and business operations. These systems are expected to make significant decisions within their operating environments. The United States is not the only nation to recognize the potential for this transformative technology; Russia and China are investing heavily in AI and ML for military and industrial purposes.

ML systems will be expected to operate in contested and adversarial environments. Their resilience, or ability to adapt to risks, will be critical to each mission that they support. There are plenty of examples of the resilience of ML systems being compromised by unintended failures in development and operational processes: serious accidents involving self-driving vehicles made by Tesla and Uber, IBM's Watson giving erroneous medical recommendations, and systems exhibiting inherent racial or gender bias. There is also a growing list of resilience failures caused by malicious interactions with ML systems: corrupting their training data, using a mask to fool facial recognition and gain unauthorized access, or creating and using "deepfakes."

We cannot afford to let the resilience of these systems be an afterthought. Ensuring resilience requires measuring resilience; measuring resilience requires the right set of tools. The SEI possesses unique capabilities and expertise to develop these tools.

Resilience and the CERT-RMM

The SEI has years of experience in developing, applying, and improving resilience tools, most notably the CERT Resilience Management Model (CERT-RMM) and its derivatives, such as the U.S. Department of Homeland Security's Cyber Resilience Review (CRR) and External Dependencies Management (EDM) assessments. These tools were designed with the understanding that a transparent, effective, human-driven dialogue about risk is required to drive the policies, procedures, and practices that lead to more resilient systems.

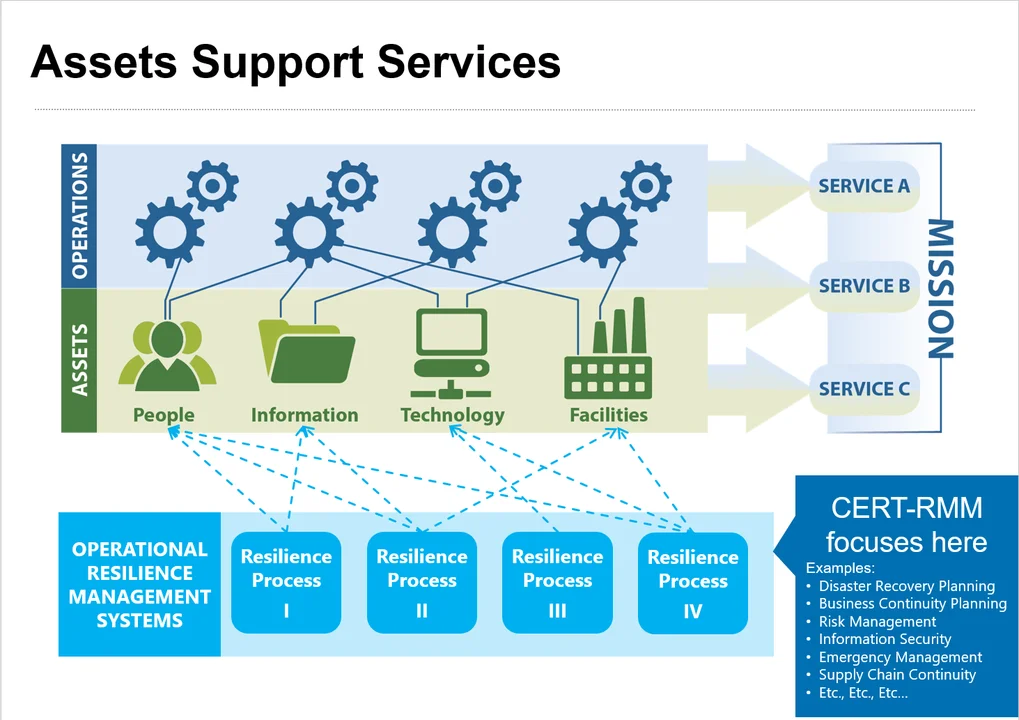

CERT-RMM assumes a general model of an organization, illustrated in the diagram above. Each organization, regardless of size, has a mission and services that exist to achieve that mission. The organization expends limited resources to execute those services through business operations, which are in turn supported by assets (People, Information, Technology, and Facilities).

The resilience processes developed by the business, shown at the bottom of the diagram, are intended to minimize disruption to those assets so they can consistently support the operations that support the service's contribution to the mission. CERT-RMM and its tools operate in the space of the organization's policies, processes, and activities that make an organization resilient. CERT-RMM does not focus on the specific missions, services, business operations, or assets that an organization has, which are left up to the organization to manage.

ML Systems and the CERT-RMM

The most prominent examples of ML systems are those that constitute a major service of an organization (service A, B, or C in the diagram above), such as IBM's Watson, which has become a product line for the technology company. However, ML systems can also be "Technology" assets that support a broader operation, such as threat monitoring, vulnerability identification, or asset management.

How do ML systems fit into the CERT-RMM? A crucial aspect of the CERT-RMM is that it is agnostic to the type of missions or services an organization has. The core goal of resilience, continued operation of core business functions despite disruption and stress factors, remains the same regardless of the nature of the underlying service, whether we are examining a traditional system or an ML system. The model is equally agnostic about the kinds of technology assets the organization has: they can be traditional computing systems, ML systems, or factory machinery. CERT-RMM treats an organization's engagement with, and support of, any technology asset in the same way.

However, while the CERT-RMM is capable of accommodating ML systems, we need to fill the gaps in the existing tools by adding an ML-centric assessment.

Resilience: Uniqueness of ML Systems

So why does the introduction of ML require us to revisit how we address and assess resilience? While many aspects of the SEI's resilience tools apply to ML systems, ML systems differ from traditional systems in important ways. We will discuss two of these differences.

First, an ML system is likely to use a "black box" decision-making process, usually in ways that are difficult or impossible for human operators to comprehend. Existing assessments presume that people are responsible for making any decisions that may affect resilience. During the course of an assessment, interviews are conducted with key personnel to elicit an understanding of the processes surrounding those decisions. Unfortunately, it is not possible to interview a black-box system. Instead, an assessor would need to dive into the details of how personnel interact with the black-box system and understand how it will behave in various situations.

Exploring these details might involve asking questions like the following:

- How do we know if the system is making resilience-affecting decisions consistent with the organization's wishes?

- As organizations update their resilience policies, processes, or procedures, how are these changes propagated to the ML systems?

- If the ML system has determined a more effective process or procedure that benefits resilience, how is that communicated to the organization? How does the organization track those changes?

Second, ML systems differ when it comes to issues like training. The CERT-RMM recognizes that training people is critical to the resilience of an organization because it helps ensure that individuals understand the policies, processes, and procedures of the organization that make them resilient. Training is addressed in the "organizational training and awareness" process area of the CERT-RMM, which serves as the reference for the "training and awareness" domain of the CRR. People performing activities on behalf of the organization should be trained to leverage different asset types, including other people, in a manner commensurate with the intent and expectations of the organization to support the critical service that, in turn, supports the organization's mission. Organizations are expected to ensure personnel are properly trained and qualified, meeting some baseline of expected behavior, and are expected to offer refresher training to ensure personnel remain qualified to conduct organizational activities. These core concepts are addressed in every domain of the CRR by questions such as, "Have qualified staff been assigned to perform activities as planned?"

But ML systems are trained in a vastly different manner. ML systems require gigabyte- or terabyte-sized datasets that can exceed the capability of human operators to review, even at a cursory level, and that can easily obscure why an activity is being performed. These problems are compounded by the vulnerability of ML training data to bias, corruption, or contamination, which may significantly affect the system's performance in poorly understood ways. Assessing an organization's understanding of how this affects its resilience may involve asking questions like the following:

- How is the ML system baselined to expected behaviors?

- How do we ensure that the ML system is trained properly?

- Do ML systems need training "refreshers"?
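To make the first and third questions more concrete, an assessor might ask whether the organization keeps a reviewed set of reference inputs with recorded expected outputs and replays it periodically against the deployed system. The Python sketch below shows one minimal form such a check could take. It is an illustration under stated assumptions, not part of CERT-RMM or the CRR: the `check_against_baseline` function, the `BaselineReport` type, the exact-match comparison, and the 0.95 agreement threshold are all hypothetical choices an organization would make for itself.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class BaselineReport:
    agreement: float          # fraction of reference cases whose output matched the baseline
    mismatches: List[object]  # inputs whose output diverged from the recorded expectation
    needs_refresher: bool     # True when agreement falls below the organization's threshold


def check_against_baseline(
    model: Callable[[object], object],
    reference: Sequence[Tuple[object, object]],
    min_agreement: float = 0.95,  # hypothetical threshold; each organization would set its own
) -> BaselineReport:
    """Replay a reviewed reference set and compare outputs to the recorded baseline.

    `reference` holds (input, expected_output) pairs that the organization has
    accepted as its behavioral baseline (a hypothetical artifact for this sketch).
    Outputs are compared by exact equality, which suits classification labels.
    """
    if not reference:
        raise ValueError("reference set must not be empty")
    mismatches = [x for x, expected in reference if model(x) != expected]
    agreement = 1 - len(mismatches) / len(reference)
    return BaselineReport(agreement, mismatches, agreement < min_agreement)


# Hypothetical stand-in for an ML system that flags transactions above a learned cutoff.
def toy_model(amount):
    return "flag" if amount > 1000 else "ok"


reference = [(50, "ok"), (5000, "flag"), (999, "ok"), (1200, "flag")]
report = check_against_baseline(toy_model, reference)
print(report.agreement, report.needs_refresher)  # 1.0 False

# After drift, the learned cutoff has moved, so the 1200 case now comes back "ok".
drifted = check_against_baseline(lambda amount: "flag" if amount > 2000 else "ok", reference)
print(drifted.agreement, drifted.mismatches, drifted.needs_refresher)  # 0.75 [1200] True
```

Here a falling agreement score stands in for the question of whether a training "refresher" is due. A system with continuous outputs would need a tolerance-based comparison rather than exact equality, and the reference set itself would need periodic review as the organization's expectations change.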

CERT-RMM and the CRR address training programs and the training elements they need to stay relevant to employees, their jobs, and the organization. These tools will need to be expanded to account for training of ML systems.

A New Assessment

The differences discussed above, along with others not discussed here, could yield many more questions than the ones we've suggested. We propose developing a new CERT-RMM assessment focused on issues surrounding ML and resilience and built around questions like those presented above. There is precedent for new CERT-RMM tools: the EDM assessment was developed in response to a need to better understand an organization's relationship with the external entities it relies on.

An ML resilience assessment could ask detailed questions to illuminate key issues when considering resilience of an ML system. Such an assessment would be a specialized, drill-down tool used only if an organization's resilience is strongly affected by ML systems.

Issues covered by the assessment would include those detailed above, such as the organization's interaction with an ML system and how systems are trained or re-trained. Other issues might include whether an organization has safeguards to prevent ML system deviation from safe operating conditions, how an organization provides feedback to ML systems when undesirable events occur, or how an organization tests ML system performance under rare or unforeseen circumstances.
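As an illustration of the first of these safeguards, the Python sketch below wraps a model so that any output outside an operator-defined safe envelope is replaced by a conservative fallback and recorded for human review; the recorded events also give the organization something concrete to feed back to the ML system. The `SafetyGuard` class, its bounds, and its fallback value are hypothetical, and a real safeguard would depend heavily on the system and its operating conditions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class SafetyGuard:
    """Hypothetical wrapper that enforces an operator-defined safe operating envelope."""

    model: Callable[[float], float]
    lower: float     # lowest output the organization considers safe
    upper: float     # highest output the organization considers safe
    fallback: float  # conservative value used when the model's output is out of bounds
    # (input, rejected output) pairs kept for human review and later feedback/retraining
    events: List[Tuple[float, float]] = field(default_factory=list)

    def decide(self, x: float) -> float:
        y = self.model(x)
        # NaN fails both comparisons, so it is also treated as out of bounds.
        if self.lower <= y <= self.upper:
            return y
        self.events.append((x, y))
        return self.fallback


# Hypothetical example: a model that recommends a setpoint from a load reading.
guard = SafetyGuard(model=lambda load: load * 1.5, lower=0.0, upper=100.0, fallback=50.0)
print(guard.decide(40))  # 60.0, within the envelope
print(guard.decide(80))  # 50.0, because 120.0 is out of bounds
print(guard.events)      # [(80, 120.0)]
```

The design choice worth probing in an assessment is less the guard itself than who sets the envelope, how it is updated when resilience policies change, and who reviews the recorded events.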

More Work to Be Done

An ML system is an intelligent, non-human decision maker in an organization, but it is fundamentally different from a human decision maker. Accurately measuring the resilience of such a system requires us to extend our tools to accommodate these differences.

Further research is required to develop an assessment of ML system resilience, and it's possible that this research will point toward changing the CERT-RMM itself. Even if that happens, the overarching goal of the CERT-RMM and its derivative tools remains the same as ever: to allow an organization to have a measurable and repeatable level of confidence in the resilience of a system by identifying, defining, and understanding the policies, procedures, and practices that affect its resilience. The fundamental principles and objectives remain unchanged when we consider an ML system instead of a traditional system. If we are to be confident that ML systems will operate securely, as intended, in support of the critical missions they serve, we must possess the right tools to assess their resilience.
