Artificial Intelligence in Practice: Securing Your Code Using Natural Language Processing

PUBLISHED IN

Artificial Intelligence Engineering

Many techniques are available to help developers find bugs in their code, but none are perfect, and an adversary needs only one undiscovered bug to cause problems. In this post, I'll discuss how a branch of artificial intelligence called natural language processing, or NLP, is being applied to computer code and cybersecurity. NLP is how machines extract information from naturally occurring language, such as written prose or transcribed speech. Using NLP, we can gain insight into the code we generate, and can find bugs that aren't visible to existing techniques. While this field is still young, advances are coming rapidly, and I will also discuss the current state of the art and what we expect to see in the near future.

What Is NLP?

Machines can use NLP to extract information from naturally occurring language. Applications of NLP that will be familiar to most readers include customer-service chatbots, auto-completion in text messages, and recommended replies to email. NLP is the technology underlying the Apple Siri, Google Voice Recognition, and Amazon Alexa tools that listen and respond to human speech. The Google search engine also uses NLP to understand content on webpages when it assembles search results for users. Beginning with raw text, NLP entails producing a machine-friendly representation of that text so that machines can search such representations for patterns and repetition.

The field of NLP is broad, and there are many different approaches to creating a computational representation of language. The general principle underlying NLP is that instances of natural language follow a structured set of rules. These rules may be complicated and may have a wide range of exceptions and edge cases, but they still exist. In some cases, we can state these rules ahead of time. Other instances require us to infer the rules based on sample usage of the language. In all cases, however, these rules allow us to generate natural sequences of language (e.g., chatbots), as well as to summarize, translate, and classify natural text.

How NLP Works

The first step in getting computers to understand and do something with natural language is to produce a representation of language that the computer can work with. The computer has to be taught how to recognize the same word in different usages (e.g., "said" vs "says"), the concept of tense, and how to interpret context-dependent exchanges. For example, depending on the context, the word "bank" could refer to a financial institution, the slopes bordering a river, or an airplane's change of direction toward the inside of a curve. Language ambiguity is also illustrated in this statement by Groucho Marx's character in the film Animal Crackers: "One morning I shot an elephant in my pajamas. How he got into my pajamas I don't know."

Although a full discussion of how NLP disambiguates homonyms or ambiguous sentence structures like those in these examples is beyond the scope of this blog post, interested readers can watch this SEI webinar to learn more about the mechanics of how NLP works.

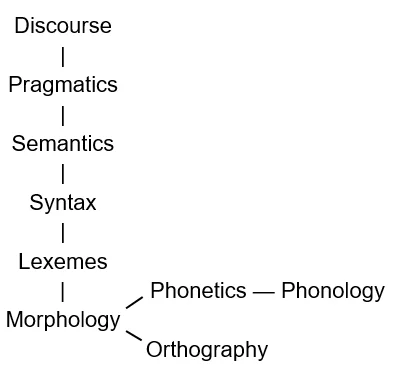

To help the computer understand language, linguists have teamed with computer scientists to break the problem into distinct tasks. Figure 1 shows the different levels at which human language can be understood, whether by a trained linguist or by a computer. To make sense of the text it is looking at, the computer has to handle every level of this hierarchy.

Morphology is the process of taking a single word and breaking down that word into its components. Some words, like "the" or "baseball," stand alone, but others, such as "mustn't," comprise two components, "must" and "not." Once you understand what the words themselves mean, you have to understand how they're being applied--which particular definition of that word should be used, as in our previous example of "bank." This process is referred to as lexical analysis. For a computer to understand a sentence using that word, it has to be taught how the word is being used in context. Syntax refers to the rules mentioned earlier; different languages follow their own grammar rules, and to understand a given chunk of text, the computer must apply the correct set of grammatical rules. Even after applying the rules, though, the sentence doesn't exist in a vacuum--it carries meaning within a context, which is the concern of semantics. Pragmatics and discourse refer to the nuances of understanding conversations between more than one party, or of referring to information not present in the immediate sentence.

Statistical Models in NLP

NLP enables us to find patterns and sources of repetition in data. Discovery of a pattern enables prediction of what comes next. Take for example the sentence, "For lunch today I had a peanut butter and jelly ________." Most of us intuitively fill in the blank with "sandwich." We have a vocabulary, a list of words that we could fill in the blank with, and we intuitively assign a probability or at least a feeling of how likely a given word is. "Sandwich" intuitively feels likely.

This concept is called an n-gram in NLP, with n referring to the number of words in the sequence, counting the word we are trying to predict. A bigram is just two words, such as "jelly ________." One example of a 5-gram would be "peanut butter and jelly _______." Note that it is easier to predict the word in the blank when n is a higher number. In the bigram, many equally plausible possibilities present themselves--donut, roll, jar, fish, and so on. "Sandwich" in the bigram does not seem especially likely. Because it provides more context, the 5-gram increases the likelihood of "sandwich" and decreases the likelihood of the other possibilities.
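The effect of extra context can be sketched in a few lines of Python. This is a minimal illustration rather than a production language model: the toy corpus is invented, and the helper names `train_counts` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_counts(tokens, context_size):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for i in range(len(tokens) - context_size):
        context = tuple(tokens[i:i + context_size])
        counts[context][tokens[i + context_size]] += 1
    return counts

def predict_next(counts, context):
    """Return (word, probability) pairs for a context, most likely first."""
    followers = counts.get(tuple(context), Counter())
    total = sum(followers.values())
    return [(word, count / total) for word, count in followers.most_common()]

# Toy corpus, invented for illustration.
corpus = (
    "i had a peanut butter and jelly sandwich . "
    "she bought a jelly donut . "
    "he opened a jelly jar . "
    "we ate a peanut butter and jelly sandwich ."
).split()

# One word of context: several continuations compete.
short_guess = predict_next(train_counts(corpus, 1), ["jelly"])

# Four words of context: only one continuation was ever observed.
long_guess = predict_next(
    train_counts(corpus, 4), ["peanut", "butter", "and", "jelly"]
)

print(short_guess)
print(long_guess)
```

With a single word of context, "sandwich" has to share probability with "donut" and "jar"; with four words of context, it is the only continuation the toy corpus supports.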

While n-grams can be useful in certain contexts, they have a number of limitations, the most significant being that they look only for exact matches of a specific phrase. Recent advances in NLP have yielded many techniques that can overcome this limitation. One that has gained particular prominence is word2vec. While this technique is technically complex, it can be understood through the words of the late English linguist John Rupert Firth: "You shall know a word by the company it keeps." Different words that are similar to each other are often used in similar contexts. Consider these two sentences (emphasis mine):

Usually the large-scale factory is portrayed as a product of capitalism.

At the magnetron workshop in the old biscuit factory, Fisk sometimes wore a striped...

It is the words around the word factory that provide the key to its meaning in these two contexts. We represent a word by its neighbors because that is a natural way to understand the meaning of a word, as opposed to trying to come up with an index that is very specific. Using word2vec, it is possible to make a kind of map of all of the different words that it has been trained on and their spatial relationships with each other as a way of discerning their meaning.
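The idea of representing a word by its neighbors can be sketched without word2vec itself. word2vec learns dense vectors with a shallow neural network; the example below is a simpler count-based stand-in that only records which words appear near each other and compares the resulting vectors with cosine similarity. The sentences and function names are assumptions made up for illustration.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Invented sentences: "factory" and "plant" keep the same company.
sentences = [
    "the factory produces steel parts",
    "the plant produces steel parts",
    "the workers left the factory at night",
    "the workers left the plant at night",
    "the river flooded the village at night",
]

vectors = cooccurrence_vectors(sentences)
sim_factory_plant = cosine(vectors["factory"], vectors["plant"])
sim_factory_river = cosine(vectors["factory"], vectors["river"])

print(sim_factory_plant, sim_factory_river)
```

Because "factory" and "plant" appear among the same neighbors in this toy data, their vectors point in the same direction, while "river" shares only the word "the" with them and scores lower.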

As I stated previously, NLP requires a representation of natural language in a form amenable to the computer's understanding--a natural language model, a probability distribution over sequences of words. A frequently used example is this sentence, originally stated by one of the most influential linguists in history, Dr. Noam Chomsky:

Colorless green ideas sleep furiously.

Although this sentence is syntactically correct, it has no meaning. As such, you would never see it used anywhere. Building a language model entails

- defining what words and phrases do and do not appear with each other, using a training data set to define our ground truth

- looking at a set of data and remembering how the words, phrases, concepts, and other linguistic properties relate to each other.

After a language model is constructed, it can answer the question: how likely is the text in front of me, given the text that precedes or follows it and everything the model saw in its training data?

The language model is entirely dependent on the training data. If you are going to train a language model on, for example, Shakespeare, you are unlikely to be able to replicate Beyoncé lyrics--those two data sets are completely different. The training data is critical. If there are certain things you are looking for, or a certain important aspect you want to find in your data that isn't present in the training set, you will not be able to find it using whatever model is built from that training set. You will need to get a better data set for that purpose.
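One rough way to see this dependence is to measure how much of a new text the training data has never encountered. The sketch below is an assumption-laden simplification (whitespace tokenization, exact word matches only), and `unseen_fraction` is a hypothetical helper, not part of any library.

```python
def unseen_fraction(training_text, new_text):
    """Fraction of tokens in new_text that never occur in training_text."""
    vocabulary = set(training_text.lower().split())
    tokens = new_text.lower().split()
    if not tokens:
        return 0.0
    unseen = [token for token in tokens if token not in vocabulary]
    return len(unseen) / len(tokens)

# Tiny stand-in for a Shakespeare training set.
training = "to be or not to be that is the question"

in_domain = unseen_fraction(training, "that is not the question")
out_of_domain = unseen_fraction(training, "malloc returned a null pointer")

print(in_domain, out_of_domain)
```

A model built from the training text has something to say about the first sentence, but every word of the second is new to it, so nothing it learned applies.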

For more on the basics of NLP, be sure to watch our webinar.

NLP Applications

We can think of the computer as an expensive filing cabinet. Computers store text, video, customer databases, and much more. Through machine learning, the computer can learn to answer questions about this data.

Researchers have created a range of complex algorithms to get computers to understand the things that they are holding. Computers are not intrinsically introspective--they don't know what they are storing. NLP can be used to enable systems to understand what they are made out of. Through NLP, a computer can be made to understand the words in the documentation for the items in storage, and can then do something else with the items that the documentation describes. A database of terms that are associated with known vulnerabilities, for example, can be assembled and searched, and then some action can be taken to confirm that the vulnerability does exist on the computer and if so, to fix it.
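The simplest version of searching documentation against a database of vulnerability-related terms is plain keyword matching, sketched below. It is far cruder than the NLP techniques discussed in this post, and the term list, reasons, and sample documentation are all invented for illustration.

```python
import re

# Hypothetical term list; a real one would be curated from vulnerability reports.
RISK_TERMS = {
    "strcpy": "unbounded string copy",
    "telnet": "unencrypted remote login",
    "default password": "shipped credentials",
}

def scan_documentation(text, terms=RISK_TERMS):
    """Return (line_number, term, reason) for every term found in the text."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        lowered = line.lower()
        for term, reason in terms.items():
            # Word boundaries keep short terms from matching inside longer words.
            if re.search(r"\b" + re.escape(term) + r"\b", lowered):
                findings.append((line_number, term, reason))
    return findings

doc = """The device exposes a Telnet service for maintenance.
Firmware updates are signed.
Log in with the default password printed on the label."""

findings = scan_documentation(doc)
for line_number, term, reason in findings:
    print(f"line {line_number}: '{term}' ({reason})")
```

Each finding is only a lead: as described above, a follow-up step still has to confirm whether the vulnerability actually exists on the system.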

To cite one example, at the SEI we work on many specification documents. A smart analyst can look at these and find clues pointing to vulnerabilities in whatever system is being described. This analysis takes time, however, and requires many other related documents for context. Our researchers are training the computer to do this itself, without the need for an analyst at every step.

Other sources of clues for identifying potential vulnerabilities can be found in documentation for the applications on a computer or in a system, or even the missions that are being conducted within projects. Documentation can focus an inquiry on what exists within a system as well as what is being done with it. We know that certain applications are prone to developing vulnerabilities, just as we know that certain tactics, techniques, and procedures (TTPs) within missions may be sources of risk for introducing vulnerabilities into systems or indications that a system has been compromised. NLP provides an alternative to manually scanning application and mission documentation, which is time consuming, prone to error, and limited in scale.

Documentation tells us what is there, but not which of the potential vulnerabilities have been patched. If you combine an NLP scan of documentation with other network-security practices such as scanning for all devices on the network for version numbers, or network mapping procedures, these techniques can provide a rich picture of what is on a network.

How NLP Can Be Used to Identify Software Vulnerabilities

The previous examples highlight the application of generic NLP techniques to the cybersecurity realm. Recently, though, researchers have been asking whether the techniques within NLP can be adapted to a more cybersecurity-focused application: finding bugs in written code. We've seen how NLP can be used to understand prose, but how do we know that these techniques will work with software code? In 2012, Dr. Abram Hindle devised what he termed the naturalness hypothesis:

Programming languages, in theory, are complex, flexible and powerful, but the programs that real people actually write are mostly simple and rather repetitive, and thus they have usefully predictable statistical properties that can be captured in statistical language models and leveraged for software engineering tasks.

This hypothesis holds that just as language is repetitive and predictable, software code is as well. One measure of repetition in language is the perplexity of the text. Simply speaking, perplexity is a measure of how surprised you are to see a word in a certain context. The word "donut" in "jelly donut" isn't very surprising, whereas "flashlight" in "jelly flashlight" would be. By analyzing software code as though it were prose, Dr. Hindle demonstrated that the perplexity of written language and of software code follow similar patterns. In fact, code is more repetitive and more predictable than written or spoken natural human language, due to the need for it to follow strict syntax rules for the computer to understand the instructions. This demonstration and other evidence led to the application of NLP to a wide range of computer instructions.
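Perplexity can be made concrete with a small bigram model. The sketch below is illustrative only: the corpus is invented, add-one smoothing is one assumption among many possible choices, and it is not the model used in Dr. Hindle's study. Perplexity here is the exponential of the average negative log-probability of each word given the word before it, so lower values mean less surprise.

```python
import math
from collections import Counter

def train_bigram_model(tokens):
    """Return a function giving P(word | prev) with add-one smoothing."""
    contexts = Counter(tokens[:-1])             # how often each word has a follower
    bigrams = Counter(zip(tokens, tokens[1:]))  # how often each pair occurs
    vocab_size = len(set(tokens)) + 1           # one extra slot for unseen words

    def probability(prev, word):
        return (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size)

    return probability

def perplexity(probability, tokens):
    """exp of the average negative log-probability over consecutive word pairs."""
    pairs = list(zip(tokens, tokens[1:]))
    log_sum = sum(math.log(probability(prev, word)) for prev, word in pairs)
    return math.exp(-log_sum / len(pairs))

# Toy corpus, invented for illustration.
corpus = "a jelly donut and a jelly sandwich and a jelly donut".split()
model = train_bigram_model(corpus)

expected = perplexity(model, ["jelly", "donut"])
surprising = perplexity(model, ["jelly", "flashlight"])

print(expected, surprising)
```

The unseen pairing scores a higher perplexity than the familiar one, which is the signal a model trained on code would use to single out unusual token sequences.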

Techniques for Finding Bugs in Software Code Using NLP-Derived Tools

Software engineers have come to recognize that clean, well-designed code is a prerequisite for software that doesn't have bugs. Code conventions in use within organizations make code easier to navigate and maintain within those organizations and improve the ability to write good, maintainable, and trustworthy software and prevent bugs. If code is written in a way that others can easily read it and others on a team or project can easily contribute to it, introduction of bugs during the development process is less likely. Clean code also makes it easier for other people who are checking it to read it and find errors.

Assuming that you have a set of NLP tools that can be trained and applied to software code, one of the first things you can do is just use the system to clean up your code. You can train the language model on source code written within the organization that adheres to its code conventions, then compare new code against the model to flag deviations and suggest alternative, possibly cleaner, versions.

Another possibility is to have the computer automatically write secure code. We understand what secure code looks like; we've seen a lot of it. Maybe we can just have the computer create it for us. Computers are good at automation. Another thing we can have the computer do is describe what it thinks the code is doing, based on code that was previously written. Such a description is useful as documentation in its own right, and it also serves as a check: if the generated description disagrees with the existing documentation, the code may not be doing what it is supposed to be doing.

But what if bugs do get introduced? Static analysis and dynamic analysis, along with manual code review and simple grep pattern matching, are the techniques in common use for identifying bugs. NLP can augment these techniques with statistical analysis. Based on the premise that most of your code is probably correct and that buggy code is relatively rare, rare code can be flagged by NLP tools.

We can bring this back to the previous example. If I have a sentence that includes the phrase "peanut butter and jelly _________," the missing word is most likely "sandwich." If someone wrote "peanut butter and jelly bathtub," that's probably wrong. If someone wrote "peanut butter and jelly donut," that's unexpected and worth a second look: it may or may not be wrong, but it doesn't look right because I'm used to seeing the word "sandwich." Researchers have used this concept to build a tool that does exactly this. It looks through the historical code you've already written and tries to find places where a piece of code looks almost exactly like other code. The tool does not know whether the code is correct or incorrect, but it is able to compare every piece of code to all the other code you have and say, "This one looks really similar, but it's a little different."

Bugram is a tool developed by Song Wang and colleagues that compares every piece of code to all other code from the same organization or project and flags outliers. The tool is also able to auto-correct the buggy code.
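The general idea of flagging outliers can be sketched with token trigrams. This is not Bugram's actual algorithm, whose details are not covered in this post; it is a minimal illustration in which each line of code is scored by its rarest trigram across the whole sample. The tokenizer, function names, and C-like snippets are assumptions invented for the example.

```python
import re
from collections import Counter

# Identifiers, numbers, two-character comparison operators, then any other symbol.
TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|[=!<>]=|\S")

def tokenize(line):
    return TOKEN.findall(line)

def trigrams(tokens):
    return list(zip(tokens, tokens[1:], tokens[2:]))

def rank_unusual_lines(lines):
    """Score each line by the count of its rarest trigram; lowest scores first."""
    counts = Counter(g for line in lines for g in trigrams(tokenize(line)))
    scored = []
    for number, line in enumerate(lines, start=1):
        grams = trigrams(tokenize(line))
        if grams:
            scored.append((min(counts[g] for g in grams), number, line))
    return sorted(scored)

# Invented sample: the same idiom gathered from several places in a project.
code = [
    "if (node != NULL) free(node);",
    "if (node != NULL) free(node);",
    "if (node != NULL) free(node);",
    "if (node == NULL) free(node);",
]

ranked = rank_unusual_lines(code)
score, line_number, text = ranked[0]
print(f"most unusual: line {line_number} (rarest trigram seen {score}x): {text}")
```

The sketch cannot tell whether line 4 is a bug; it only reports that the line looks almost like the others but differs in one place, which is exactly the kind of candidate worth a reviewer's second look.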

Other tools do similar things. Deep belief networks can be used to build another kind of predictor that can identify whether code is similar to other code and, if not, where it diverges and what it should look like to adhere to organizational conventions and typical design patterns.

Emerging Applications of NLP and Areas of Active Research

One emerging application for the use of NLP is the effort to automate the writing of documentation from source code by means of a technique called statistical machine translation (SMT). SMT finds relationships between tokens--basic units of meaning in language such as words and punctuation marks--in different language models, and proposes many sentences, using statistical models to identify those that are best.

The language model encodes software code into an intermediate representation, then translates that representation into another language model to eventually render the code into English. This application, while impressive, is still fairly limited. The language models we work with currently reach only the bottom rung of the semantic level in Figure 1 above. Running a piece of code through a language model and back down through another language model affords only a limited level of understanding. But the technique does hold promise for development of more sophisticated and robust applications in the future.

Additional Resources

Watch the SEI webinar, Secure Your Code with AI and NLP.

Read other SEI blog posts about machine learning.
