Multicore Processing

The first blog entry in this series introduced the basic concepts of multicore processing and virtualization, highlighted their benefits, and outlined the challenges these technologies present. This second post will concentrate on multicore processing, where I will define its various types, list its current trends, examine its pros and cons, and briefly address its safety and security ramifications.

Definitions

A multicore processor (also known as a chip multiprocessor, or CMP) is a single integrated circuit that contains multiple core processing units, more commonly known as cores. There are many different multicore processor architectures, which vary in terms of

- Number of cores. Different multicore processors often have different numbers of cores. For example, a quad-core processor has four cores. The number of cores is usually a power of two.

- Number of core types.

  - Homogeneous (symmetric) cores. All of the cores in a homogeneous multicore processor are of the same type; typically the core processing units are general-purpose central processing units that run a single multicore operating system.

  - Heterogeneous (asymmetric) cores. Heterogeneous multicore processors have a mix of core types that often run different operating systems and include graphics processing units.

- Number and level of caches. Multicore processors vary in terms of their instruction and data caches, which are relatively small and fast pools of local memory.

- How cores are interconnected. Multicore processors also vary in terms of their bus architectures.

- Isolation. The amount, typically minimal, of on-chip support for the spatial and temporal isolation of cores:

  - Spatial isolation ensures that different cores cannot access the same physical hardware (e.g., memory locations such as caches and RAM).

  - Temporal isolation ensures that the execution of software on one core does not impact the temporal behavior of software running on another core.

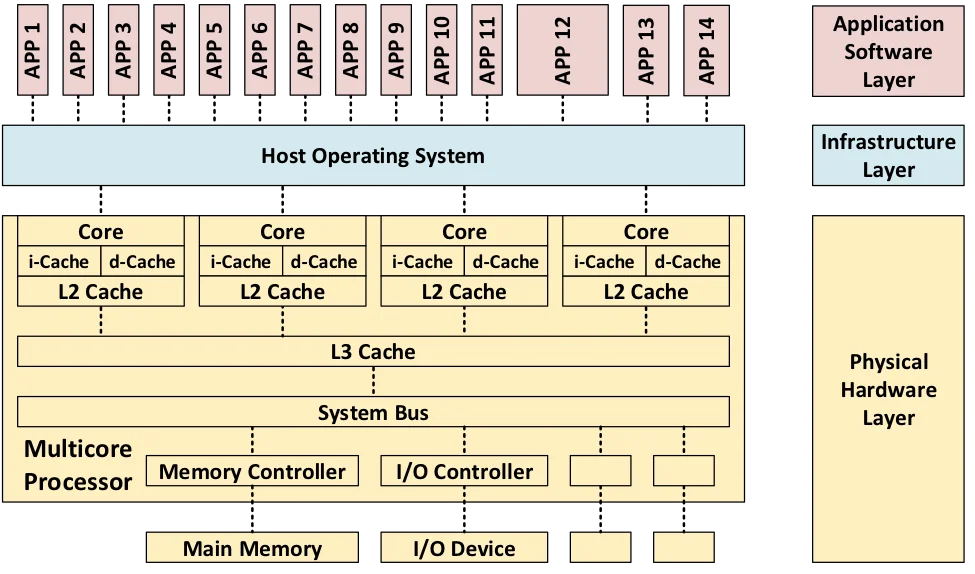

Homogeneous Multicore Processor

The following figure notionally shows the architecture of a system in which 14 software applications are allocated by a single host operating system to the cores in a homogeneous quad-core processor. In this architecture, there are three levels of cache, which are progressively larger but slower: L1 (consisting of an instruction cache and a data cache), L2, and L3. Note that the L1 and L2 caches are local to a single core, whereas L3 is shared among all four cores.

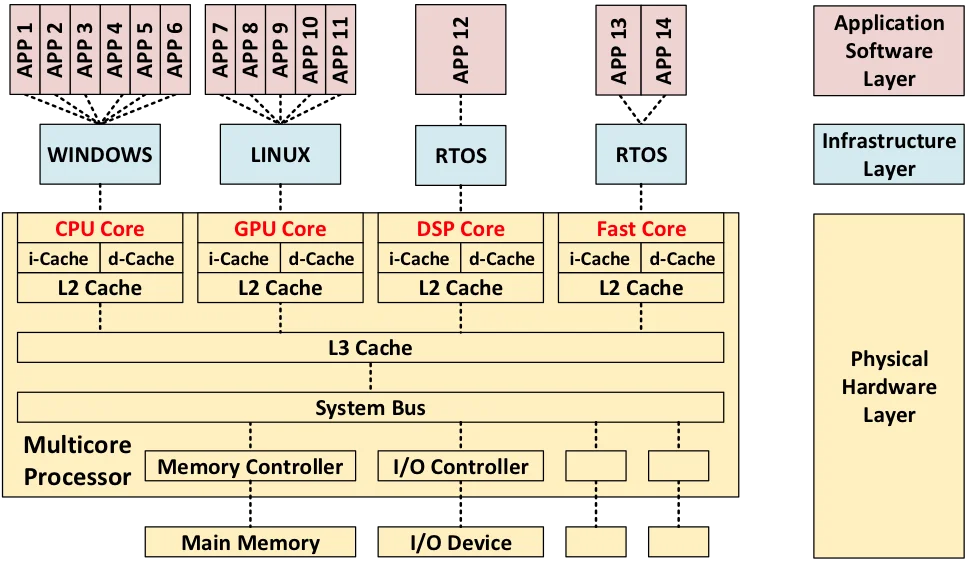
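The cache layout described above can be written down as a small data model, which makes it easy to ask which caches two cores have in common. This is only an illustrative sketch: the names `Cache`, `build_quad_core`, and `shared_caches` are hypothetical, and the model records only which cores can reach each cache, not sizes or latencies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cache:
    level: str        # "L1I", "L1D", "L2", or "L3"
    cores: frozenset  # ids of the cores that can use this cache


def build_quad_core():
    """Model the quad-core layout: private L1/L2 per core, one shared L3."""
    caches = []
    for core in range(4):
        # L1 is split into an instruction cache and a data cache.
        for level in ("L1I", "L1D", "L2"):
            caches.append(Cache(level, frozenset({core})))
    caches.append(Cache("L3", frozenset(range(4))))
    return caches


def shared_caches(caches, core_a, core_b):
    """Return the level of every cache that both cores can reach."""
    return [c.level for c in caches if {core_a, core_b} <= c.cores]


caches = build_quad_core()
print(shared_caches(caches, 0, 1))  # ['L3']
```

In this model, any two distinct cores meet only at L3, which is why the shared L3 cache matters when reasoning about how software on one core can affect software on another.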

Heterogeneous Multicore Processor

The following figure notionally shows how these 14 applications could be allocated to four different operating systems, which in turn are allocated to four different cores, in a heterogeneous quad-core processor. From left to right, the cores include a general-purpose central processing unit core running Windows; a graphics processing unit (GPU) core running graphics-intensive applications on Linux; a digital signal processing (DSP) core running a real-time operating system (RTOS); and a high-performance core also running an RTOS.

Current Trends in Multicore Processing

Multicore processors are replacing traditional, single-core processors so that fewer single-core processors are being produced and supported. Consequently, single-core processors are becoming technologically obsolete. Heterogeneous multicore processors, such as computer-on-a-chip processors, are becoming more common.

Although multicore processors have largely saturated some application domains (e.g., cloud computing, data warehousing, and on-line shopping), they are just starting to be used in real-time, safety- and security-critical, cyber-physical systems. Multicore processing is becoming popular in environments that are constrained by size, weight, power, and cooling (SWAP-C) and that require significantly increased performance.

Pros of Multicore Processing

Multicore processing is commonplace because it offers advantages in the following seven areas:

- Energy Efficiency. By using multicore processors, architects can decrease the number of embedded computers. Multicore processors help overcome the increased heat generation that accompanies Moore's Law scaling (i.e., smaller circuits increase electrical resistance, which creates more heat), which in turn decreases the need for cooling. The use of multicore processing reduces power consumption (less energy wasted as heat), which increases battery life.

- True Concurrency. By allocating applications to different cores, multicore processing increases the intrinsic support for actual (as opposed to virtual) parallel processing, both within individual software applications and across multiple applications.

- Performance. Multicore processing can increase performance by running multiple applications concurrently. The decreased distance between cores on an integrated chip enables shorter resource access latency and higher cache speeds when compared to using separate processors or computers. However, the size of the performance increase depends on the number of cores, the level of real concurrency in the actual software, and the use of shared resources.

- Isolation. Multicore processors may improve (but do not guarantee) spatial and temporal isolation (segregation) compared to single-core architectures. Software running on one core is less likely to affect software on another core than if both are executing on the same single core. This decoupling is due to both spatial isolation (of data in core-specific caches) and temporal isolation, because threads on one core are not delayed by threads on another core. Multicore processing may also improve robustness by localizing the impact of defects to a single core. This increased isolation is particularly important in the independent execution of mixed-criticality applications (mission-critical, safety-critical, and security-critical).

- Reliability and Robustness. Allocating software to multiple cores increases reliability and robustness (i.e., fault and failure tolerance) by limiting fault and/or failure propagation from software on one core to software on another. The allocation of software to multiple cores also supports failure tolerance by supporting failover from one core to another (and subsequent recovery).

- Obsolescence Avoidance. The use of multicore processors enables architects to avoid technological obsolescence and improve maintainability. Chip manufacturers are applying the latest technical advances to their multicore chips. As the number of cores continues to increase, it becomes increasingly hard to obtain single-core chips.

- Hardware Costs. By using multicore processors, architects can produce systems with fewer computers and processors.
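The Performance item above notes that the size of the gain depends on the number of cores and on how much of the software can really run concurrently. One common way to put rough numbers on that dependency is Amdahl's law, which this post does not name; it is brought in here as an assumption. The sketch below is a minimal illustration: the function name `amdahl_speedup` and the sample fractions are hypothetical, and the model ignores contention for shared resources, so real speedups are typically lower.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when only part of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time on any number of cores;
    # only the parallel part is divided among them.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Compare a half-parallel workload with a mostly parallel one.
for cores in (1, 2, 4, 8):
    half = amdahl_speedup(0.5, cores)
    mostly = amdahl_speedup(0.95, cores)
    print(f"{cores} cores: {half:.2f}x (50% parallel), {mostly:.2f}x (95% parallel)")
```

Under this model, software that is only half parallelizable gains at most 1.6x on four cores and can never exceed 2x, no matter how many cores are added.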

Cons of Multicore Processing

Although there are many advantages to moving to multicore processors, architects must address disadvantages and associated risks in the following six areas:

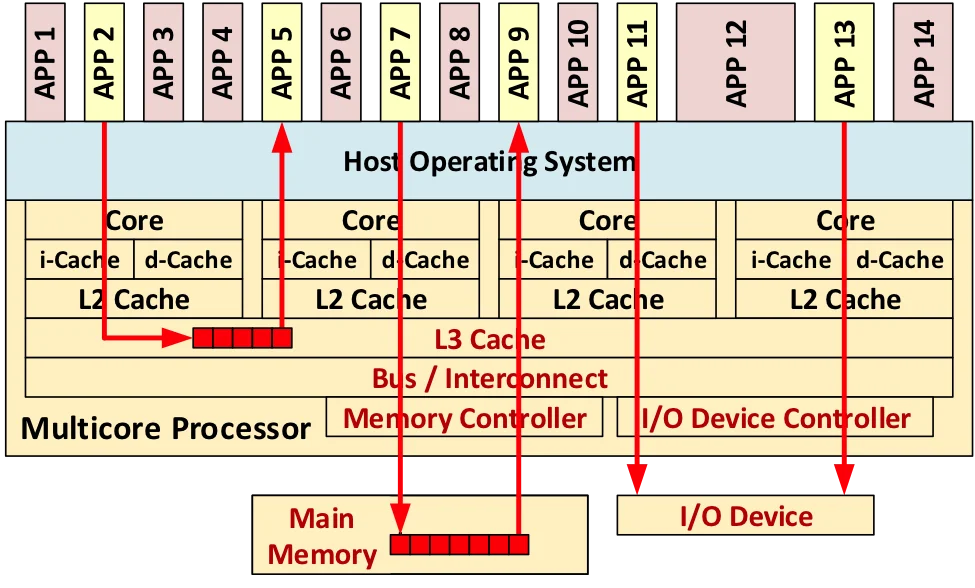

- Shared Resources. Cores on the same processor share both processor-internal resources (L3 cache, system bus, memory controller, I/O controllers, and interconnects) and processor-external resources (main memory, I/O devices, and networks). These shared resources imply that (1) single points of failure exist, (2) two applications running on the same core can interfere with each other, and (3) software running on one core can impact software running on another core (i.e., interference can violate spatial and temporal isolation because multicore support for isolation is limited). The accompanying diagram uses the color red to illustrate six shared resources.

- Interference. Interference occurs when software executing on one core impacts the behavior of software executing on other cores in the same processor. This interference includes failures of both spatial isolation (due to shared memory access) and temporal isolation (due to interference delays and/or penalties). Temporal isolation is a bigger problem than spatial isolation since multicore processors may have special hardware that can be used to enforce spatial isolation (to prevent software running on different cores from accessing the same processor-internal memory). The number of interference paths increases rapidly with the number of cores, and the exhaustive analysis of all interference paths is often impossible. The impracticality of exhaustive analysis necessitates the selection of representative interference paths when analyzing isolation. The following diagram uses the color red to illustrate three possible interference paths between pairs of applications involving six shared resources.

- Concurrency Defects. Cores execute concurrently, creating the potential for concurrency defects including deadlock, livelock, starvation, suspension, (data) race conditions, priority inversion, order violations, and atomicity violations. Note that these are essentially the same types of concurrency defects that can occur when software is allocated to multiple threads on a single core.

- Non-determinism. Multicore processing increases non-determinism. For example, I/O interrupts have top-level hardware priority (also a problem with single-core processors). Multicore processing is also subject to lock thrashing, which stems from excessive lock conflicts due to simultaneous access of kernel services by different cores (resulting in decreased concurrency and performance). The resulting non-deterministic behavior can be unpredictable, can cause related faults and failures, and can make testing more difficult (e.g., running the same test multiple times may not yield the same test result).

- Analysis Difficulty. The real concurrency due to multicore processing requires different memory consistency models than virtual interleaved concurrency. It also breaks traditional analysis approaches that work on single-core processors. The analysis of maximum time limits is harder and may be overly conservative. Although interference analysis becomes more complex as the number of cores per processor increases, overly restricting the number of cores may not provide adequate performance.

- Accreditation and Certification. Interference between cores can cause missed deadlines and excessive jitter, which in turn can cause faults (hazards) and failures (accidents). Verifying a multicore system requires proper real-time scheduling and timing analysis and/or specialized performance testing. Moving from a single-core to a multicore architecture may require recertification. Unfortunately, current safety policy guidelines are based on single-core architectures and must be updated based on the recommendations that will be listed in the final blog entry in this series.
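The Concurrency Defects item above lists race conditions and atomicity violations. The sketch below illustrates one such defect, a lost update on a shared counter, together with the usual lock-based fix. It is a minimal illustration, not a recommended design: the names `Counter` and `run_workers` are hypothetical, and it uses Python threads, which may or may not execute on separate cores depending on the interpreter. As noted above, the same kinds of defects arise with multiple threads on a single core.

```python
import threading


class Counter:
    """Shared counter whose increment is a read-modify-write sequence."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def unsafe_increment(self):
        current = self.value      # read
        self.value = current + 1  # write; an update made in between is lost

    def safe_increment(self):
        with self._lock:          # the read-modify-write is now one atomic step
            self.value += 1


def run_workers(increment, workers=4, iterations=10_000):
    """Call increment() from several threads and wait for all of them."""
    def work():
        for _ in range(iterations):
            increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


safe = Counter()
run_workers(safe.safe_increment)
print("with lock:", safe.value)       # always 40000

unsafe = Counter()
run_workers(unsafe.unsafe_increment)
print("without lock:", unsafe.value)  # may be lower, and may differ between runs
```

The unlocked result is also an instance of the non-determinism described above: the same program can print different totals on different runs, which is what makes such defects hard to find by testing alone.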

SEI Research on Multicore Processing

Real-time scheduling on multicore processing platforms is a Department of Defense (DoD) technical area of urgent concern for unmanned aerial vehicles (UAVs) and other systems that demand ever-increasing computational power. SEI researchers have provided a range of techniques and tools that improve scheduling on multicore processors. We developed a mode-change protocol for multicores with several operational modes, such as aircraft taxi, takeoff, flight, and landing modes. The SEI developed the first protocol to allow multicore software to switch modes while meeting all timing requirements, thereby allowing software designers to add or remove software functions while ensuring safety.

The SEI is transitioning this research through activities that include the following:

- a workshop with participants from Carnegie Mellon University, Nagoya University, the University of Illinois at Urbana-Champaign, and NEC Electronics

- collaboration with Lockheed Martin researchers to publish papers on the use of multicore architectures in cyber-physical systems

- invited talks at conferences such as the IEEE International Conference on Embedded and Real-Time Computing Systems and Applications

- authoring a chapter of the Multicore Programming Practices Guide published by The Multicore Association

Future Blog Entries

The next two blog entries in this series will define virtualization via virtual machines and containers, list their current trends, and document their pros and cons. These postings will be followed by a final blog entry providing general recommendations regarding the use of these three technologies.

Additional Resources

Read the introductory post in this series.
