What is Explainable AI?

PUBLISHED IN

Artificial Intelligence Engineering

Consider a production line in which workers run heavy, potentially dangerous equipment to manufacture steel tubing. Company executives hire a team of machine learning (ML) practitioners to develop an artificial intelligence (AI) model that can assist the frontline workers in making safe decisions, in the hope that this model will revolutionize their business by improving worker efficiency and safety. After an expensive development process, the company unveils its complex, high-accuracy model on the production line, expecting to see its investment pay off. Instead, it sees extremely limited adoption by the workers. What went wrong?

This hypothetical example, adapted from a real-world case study in McKinsey’s The State of AI in 2020, demonstrates the crucial role that explainability plays in the world of AI. While the model in the example may have been safe and accurate, the target users did not trust the AI system because they didn’t know how it made decisions. End-users deserve to understand the underlying decision-making processes of the systems they are expected to employ, especially in high-stakes situations. Perhaps unsurprisingly, McKinsey found that improving the explainability of systems led to increased technology adoption.

Explainable artificial intelligence (XAI) is a powerful tool for answering critical How? and Why? questions about AI systems and can be used to address rising ethical and legal concerns. As a result, AI researchers have identified XAI as a necessary feature of trustworthy AI, and explainability has experienced a recent surge in attention. However, despite the growing interest in XAI research and the demand for explainability across disparate domains, XAI still suffers from a number of limitations. This blog post presents an introduction to the current state of XAI, including the strengths and weaknesses of this practice.

The Basics of Explainable AI

Despite the prevalence of explainability research, exact definitions surrounding explainable AI are not yet consolidated. For the purposes of this blog post, explainable AI refers to the

set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning algorithms.

This definition captures a sense of the broad range of explanation types and audiences, and acknowledges that explainability techniques can be applied to an existing system rather than always being built into it.

Leaders in academia, industry, and the government have been studying the benefits of explainability and developing algorithms to address a wide range of contexts. In the healthcare domain, for instance, researchers have identified explainability as a requirement for AI clinical decision support systems because the ability to interpret system outputs facilitates shared decision-making between medical professionals and patients and provides much-needed system transparency. In finance, explanations of AI systems are used to meet regulatory requirements and equip analysts with the information needed to audit high-risk decisions.

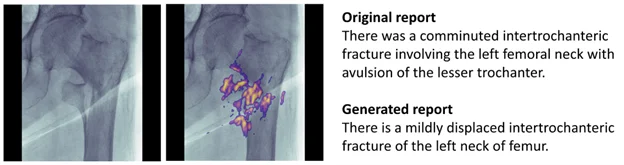

Explanations can vary greatly in form based on context and intent. Figure 1 shows both human-language and heat-map explanations of model actions. The ML model in this example can detect hip fractures using frontal pelvic x-rays and is designed for use by doctors. The Original report presents a “ground-truth” report from a doctor based on the x-ray on the far left. The Generated report consists of an explanation of the model’s diagnosis and a heat-map showing the regions of the x-ray that influenced the decision. Together, these give doctors an explanation of the diagnosis that they can easily understand and vet.
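The source does not say how the heat map in Figure 1 was produced. One widely used, model-agnostic way to build such a map is occlusion sensitivity: mask one region of the input at a time and record how much the model's score drops. The sketch below illustrates that technique in plain Python under simplifying assumptions: the image is a small grid of floats, `model` is any function returning a scalar score, and `occlusion_heatmap` and `toy_model` are hypothetical names, with `toy_model` standing in for a real classifier.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_heatmap(model: Callable[[Image], float], image: Image,
                      patch: int = 2, fill: float = 0.0) -> Image:
    """Return, for each pixel, the score drop when its patch is masked.

    Larger values mean the masked region mattered more to the model's score.
    """
    h, w = len(image), len(image[0])
    base = model(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            # Copy the image and blank out one patch with the fill value.
            masked = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    masked[r][c] = fill
            drop = base - model(masked)
            # Attribute the score drop to every pixel in the masked patch.
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


# Hypothetical stand-in for a classifier: it only "looks at" the top-left
# 2x2 quadrant, summing pixel intensities there.
def toy_model(img: Image) -> float:
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_heatmap(toy_model, image, patch=2):
    print(row)
# [4.0, 4.0, 0.0, 0.0]
# [4.0, 4.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```

Because the toy model ignores everything outside the top-left quadrant, only that region lights up. Real systems often use finer patches or gradient-based saliency instead; occlusion is shown here only because it needs nothing beyond the model's output.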

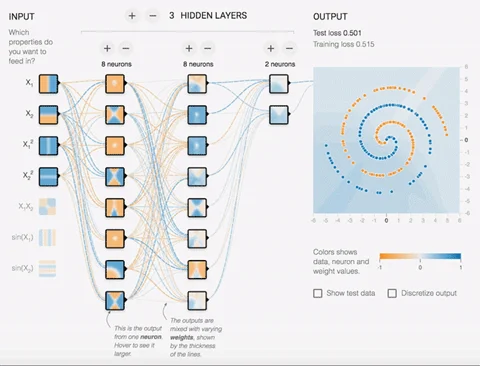

Figure 2 depicts a highly technical, interactive visualization of the layers of a neural network. This open-source tool allows users to tinker with the architecture of a neural network and watch how individual neurons change throughout training. Heat-map explanations of underlying ML model structures can give ML practitioners important information about the inner workings of opaque models.

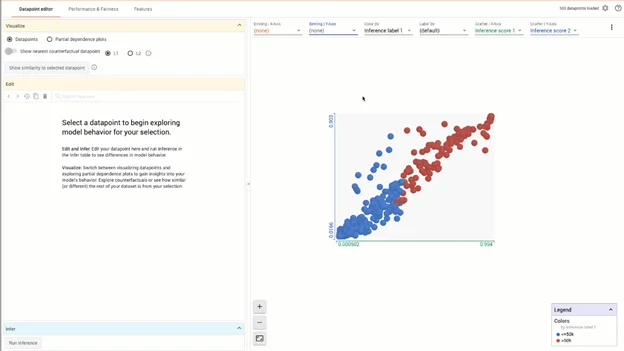

Figure 3 shows a graph produced by the What-If Tool depicting the relationship between two inference score types. Through this interactive visualization, users can leverage graphical explanations to analyze model performance across different “slices” of the data, determine which input attributes have the greatest impact on model decisions, and inspect their data for biases or outliers. These graphs, while most easily interpretable by ML experts, can lead to important insights related to performance and fairness that can then be communicated to non-technical stakeholders.
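The What-If Tool itself is interactive, but two of the analyses described above, comparing performance across data slices and estimating which input attributes most affect decisions, can be sketched in a few lines. This is a minimal illustration, not the What-If Tool's implementation: `slice_accuracy` and `permutation_importance` are hypothetical helper names, permutation importance is one common attribution technique chosen here for simplicity, and the features `pressure` and `night_shift` are invented for the steel-tubing scenario.

```python
import random
from typing import Callable, Dict, List

Row = Dict[str, float]
Model = Callable[[Row], int]


def accuracy(model: Model, rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for x, y in zip(rows, labels) if model(x) == y)
    return correct / len(rows)


def slice_accuracy(model: Model, rows: List[Row], labels: List[int],
                   key: str) -> Dict[float, float]:
    """Accuracy computed separately for each value of the attribute `key`."""
    groups: Dict[float, List[int]] = {}
    for i, x in enumerate(rows):
        groups.setdefault(x[key], []).append(i)
    return {value: accuracy(model, [rows[i] for i in idx], [labels[i] for i in idx])
            for value, idx in groups.items()}


def permutation_importance(model: Model, rows: List[Row], labels: List[int],
                           feature: str, seed: int = 0) -> float:
    """Accuracy lost when one feature's values are shuffled across rows.

    A feature the model ignores scores exactly 0.0; larger values suggest
    the model relies on that feature more heavily.
    """
    rng = random.Random(seed)
    values = [x[feature] for x in rows]
    rng.shuffle(values)
    permuted = [{**x, feature: v} for x, v in zip(rows, values)]
    return accuracy(model, rows, labels) - accuracy(model, permuted, labels)


# Hypothetical model: flags a safety risk (1) when pressure exceeds 0.5.
def toy_model(x: Row) -> int:
    return 1 if x["pressure"] > 0.5 else 0


rows = [{"pressure": 0.9, "night_shift": 0}, {"pressure": 0.2, "night_shift": 0},
        {"pressure": 0.8, "night_shift": 1}, {"pressure": 0.3, "night_shift": 1}]
labels = [1, 0, 1, 1]  # the model misses the last (night-shift) case

print(slice_accuracy(toy_model, rows, labels, "night_shift"))  # {0: 1.0, 1: 0.5}
print(permutation_importance(toy_model, rows, labels, "night_shift"))  # 0.0
print(permutation_importance(toy_model, rows, labels, "pressure"))
```

The slice view surfaces a weakness that overall accuracy (0.75) hides: every error falls on the night shift, even though the model never uses that attribute directly.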

Explainability aims to answer stakeholder questions about the decision-making processes of AI systems. Developers and ML practitioners can use explanations to ensure that project requirements for ML models and AI systems are met during building, debugging, and testing. Explanations can be used to help non-technical audiences, such as end-users, gain a better understanding of how AI systems work and clarify questions and concerns about their behavior. This increased transparency helps build trust and supports system monitoring and auditability.

Techniques for creating explainable AI have been developed and applied across all steps of the ML lifecycle. Methods exist for analyzing the data used to develop models (pre-modeling), incorporating interpretability into the architecture of a system (explainable modeling), and producing post-hoc explanations of system behavior (post-modeling).

Why Interest in XAI is Exploding

As the field of AI has matured, increasingly complex, opaque models have been developed and deployed to solve hard problems. Unlike many of their predecessors, these models are, by the nature of their architecture, harder to understand and oversee. When such models fail or do not behave as expected or hoped, it can be hard for developers and end-users to pinpoint why or to determine how to address the problem. XAI meets the emerging demands of AI engineering by providing insight into the inner workings of these opaque models. This oversight can also yield significant performance improvements. For example, a study by IBM suggests that users of its XAI platform achieved a 15 percent to 30 percent rise in model accuracy and a 4.1 to 15.6 million dollar increase in profits.

Transparency is also important given the rising ethical concerns surrounding AI. AI systems are becoming more prevalent in our lives, and their decisions can bear significant consequences. In theory, these systems could help eliminate human bias from decision-making processes that have historically been fraught with prejudice, such as determining bail or assessing home loan eligibility. Despite efforts to use AI to remove racial discrimination from these processes, some deployed systems have unintentionally upheld discriminatory practices because of biases in the data on which they were trained. As reliance on AI systems to make important real-world choices expands, it is paramount that these systems are thoroughly vetted and developed using responsible AI (RAI) principles.
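Because biased training data is one route by which such systems inherit discrimination, a pre-modeling check can compare how often each group receives the favorable label before any model is trained. The sketch below computes per-group positive rates and their ratio (sometimes called the disparate impact ratio). The group labels and counts are hypothetical, `positive_rates` and `disparate_impact_ratio` are illustrative names, and the 0.8 threshold reflects the commonly cited "four-fifths rule" heuristic rather than a universal standard.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, int]  # (group, label), where label 1 = favorable outcome


def positive_rates(records: List[Record]) -> Dict[str, float]:
    """Share of favorable labels per group in a labeled training set."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        positives[group] += label
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(records: List[Record]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means parity).

    Assumes at least one group has a favorable label, so the
    denominator is non-zero.
    """
    rates = positive_rates(records)
    return min(rates.values()) / max(rates.values())


# Hypothetical historical loan decisions for two groups, "A" and "B".
records = ([("A", 1)] * 8 + [("A", 0)] * 2 +
           [("B", 1)] * 4 + [("B", 0)] * 6)

print(positive_rates(records))  # {'A': 0.8, 'B': 0.4}
ratio = disparate_impact_ratio(records)
print(ratio)  # 0.5
# A common heuristic (the "four-fifths rule") flags ratios below 0.8.
if ratio < 0.8:
    print("Warning: favorable-label rates differ substantially across groups")
```

A low ratio does not prove the data is unfair, but it is a cheap signal that the labels deserve scrutiny before a model learns to reproduce them.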

The development of legal requirements to address ethical concerns and violations is ongoing. The European Union’s 2016 General Data Protection Regulation (GDPR), for instance, states that when individuals are impacted by decisions made through “automated processing,” they are entitled to “meaningful information about the logic involved.” Likewise, the 2020 California Consumer Privacy Act (CCPA) dictates that users have a right to know inferences made about them by AI systems and what data was used to make those inferences. As legal demand for transparency grows, researchers and practitioners are pushing XAI forward to meet new requirements.

Current Limitations of XAI

One obstacle that XAI research faces is a lack of consensus on the definitions of several key terms. Precise definitions of explainable AI vary across papers and contexts. Some researchers use the terms explainability and interpretability interchangeably to refer to the concept of making models and their outputs understandable. Others draw a variety of distinctions between the terms. For instance, one academic source asserts that explainability refers to a priori explanations, while interpretability refers to a posteriori explanations. Definitions within the domain of XAI must be strengthened and clarified to provide a common language for describing and researching XAI topics.

In a similar vein, while papers proposing new XAI techniques are abundant, real-world guidance on how to select, implement, and test these explanations to support project needs is scarce. Explanations have been shown to improve understanding of ML systems for many audiences, but their ability to build trust among non-AI experts has been debated. Research on how best to leverage explainability for this audience is ongoing; interactive explanations, including question-and-answer-based explanations, have shown promise.

Another subject of debate is the value of explainability compared to other methods for providing transparency. Although explainability for opaque models is in high demand, XAI practitioners run the risk of oversimplifying and/or misrepresenting complicated systems. As a result, the argument has been made that opaque models should be replaced altogether with inherently interpretable models, in which transparency is built in. Others argue that, particularly in the medical domain, opaque models should be evaluated through rigorous testing including clinical trials, rather than explainability. Human-centered XAI research contends that XAI needs to expand beyond technical transparency to include social transparency.
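To illustrate what "transparency built in" can mean, the sketch below shows a minimal inherently interpretable model: a linear score whose per-feature contributions add up exactly to its output, so the explanation is the computation itself rather than a post-hoc approximation. The feature names, weights, bias, and the `score_with_explanation` function are hypothetical, chosen for illustration; in practice such weights would typically be learned (for example, by logistic regression) and reviewed by domain experts.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for a transparent risk score. In practice these would
# typically be learned (e.g., by logistic regression) and reviewed by experts.
WEIGHTS: Dict[str, float] = {
    "temperature_over_limit": 2.0,   # 1 if the temperature limit is exceeded
    "hours_since_maintenance": 0.5,  # per hour since last maintenance
    "operator_certified": -1.5,      # 1 if the operator is certified
}
BIAS = -1.0


def score_with_explanation(x: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the risk score and each feature's exact contribution to it."""
    contributions = [(name, w * x[name]) for name, w in WEIGHTS.items()]
    total = BIAS + sum(c for _, c in contributions)
    # Order by magnitude. These contributions plus BIAS sum exactly to the
    # score, so the explanation is the model's own computation.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return total, contributions


score, why = score_with_explanation(
    {"temperature_over_limit": 1, "hours_since_maintenance": 4, "operator_certified": 1})
print(score)  # 1.5
for name, contribution in why:
    print(f"{name}: {contribution:+.1f}")
```

The trade-off at the heart of the debate is visible here: the explanation is faithful by construction, but a linear model may not capture the interactions that more complex, opaque models can learn.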

Why is the SEI Exploring XAI?

Explainability has been identified by the U.S. government as a key tool for developing trust and transparency in AI systems. During her opening talk at the Defense Department's Artificial Intelligence Symposium and Tech Exchange, Deputy Defense Secretary Kathleen H. Hicks stated, “Our operators must come to trust the outputs of AI systems; our commanders must come to trust the legal, ethical and moral foundations of explainable AI; and the American people must come to trust the values their DoD has integrated into every application.” The DoD’s efforts towards developing what Hicks described as a “robust responsible AI ecosystem,” including the adoption of ethical principles for AI, indicate a rising demand for XAI within the government. Similarly, the U.S. Department of Health and Human Services lists an effort to “promote ethical, trustworthy AI use and development,” including explainable AI, as one of the focus areas of their AI strategy.

To address stakeholder needs, the SEI is developing a growing body of XAI and responsible AI work. In a month-long, exploratory project titled “Survey of the State of the Art of Interactive XAI” from May 2021, I collected and labelled a corpus of 54 examples of open-source interactive AI tools from academia and industry. Interactive XAI has been identified within the XAI research community as an important emerging area of research because interactive explanations, unlike static, one-shot explanations, encourage user engagement and exploration. Findings from this survey will be published in a future blog post. Additional examples of the SEI’s recent work in explainable and responsible AI are available below.

Additional Resources

SEI researchers Rotem Guttman and Carol Smith explored how explainability can be used to answer end-users' questions in the context of game-play in their paper “Play for Real(ism) – Using Games to Predict Human-AI interactions in the Real World”, published alongside two CMU HCII researchers.

Researchers Alex Van Deusen and Carol Smith contributed to the DIU’s Responsible AI Guidelines to provide practical guidance for implementing RAI.
