Understanding How Network Security Professionals Perceive Risk

PUBLISHED IN

Enterprise Risk and Resilience Management

Risk inherent in any military, government, or industry network system cannot be completely eliminated, but it can be reduced by implementing network controls. These controls include administrative, management, technical, or legal methods. Decisions about which controls to implement often rely on computed-risk models that mathematically calculate the amount of risk inherent in a given network configuration. These computed-risk models, however, may not reflect the risk levels that human decision makers actually perceive.

These decision makers include the network team (i.e., the people who implement the controls that keep the network secure), the information assurance (IA) officer, and authorizing officials. Computed-risk models may, for example, omit risk factors that are difficult to measure (e.g., adversarial sophistication or the value to an adversary of data stored on the network) but that decision makers perceive as high risk. This blog post describes in more depth the problem of how network security professionals perceive risk, as well as the SEI's research into which risk factors influence perceptions of network risk and how those perceptions are formed.

Within the Department of Defense (DoD) and the .gov civilian government space, organizations must go through a rigorous process of control implementation, control testing and validation, and documentation of results before bringing an information system or network into production. In this process, the controls selected for a particular network are configured and tested by the organization's network team, led by the IA officer, to reduce the amount of inherent network risk. Guidelines for this process exist, such as the International Organization for Standardization's (ISO) 27000 series and the National Institute of Standards and Technology's (NIST) 800 series; however, these guidelines are generic and not tailored to any particular organization. After control configuration and testing are complete, an authorizing official signs off on the network on behalf of the organization, attesting that the level of residual risk is acceptable before the network goes into production.

Risk cannot be eliminated, but it must be managed at a level that allows the organization to carry out its mission. Implementing certain controls involves tradeoffs (e.g., degraded network performance or constraints on network usability). The balance of risks and benefits that results from a particular set of controls must be tolerable to the organization's users, management, IA officer, and authorizing official.

While the adverse consequences of a particular control configuration may be known during the process of assessing risks and benefits, the risk factors that the IA officer and authorizing official perceive are not known. Risk factors are pieces of information that are considered when formulating a perception of risk. For example, an IA officer may believe the network risk is minimal based on risk factors such as

- minimal adversarial activity

- a fully staffed network team

- a network architecture without wireless connections

Complications can arise, however, when the IA officer and authorizing official form different perceptions of network risk. Agreement can be equally dangerous. For example, an IA officer and authorizing official may agree that a particular network's risk level is minimal while in fact considering different risk factors. Consequently, communication between the two parties about risk-mitigation strategies can be hampered. To improve network security, we need to study risk perception and the risk factors that underlie it.
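The agreement problem can be made concrete by modeling each person's perception as a rating plus the set of factors behind it, and flagging a "hidden disagreement" when the ratings match but the factor sets barely overlap. This is a minimal sketch under stated assumptions, not part of the SEI study: the `RiskPerception` type, the use of Jaccard similarity, the 0.5 threshold, and the authorizing official's second factor are all hypothetical; the IA officer's factors are the three listed earlier.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RiskPerception:
    """Hypothetical record: one person's risk rating plus the factors behind it."""
    role: str
    rating: str  # "low", "medium", or "high"
    factors: frozenset[str]


def factor_overlap(a: RiskPerception, b: RiskPerception) -> float:
    """Jaccard similarity of the two factor sets: |A & B| / |A | B|."""
    union = a.factors | b.factors
    if not union:
        return 1.0  # neither person cited a factor; treat the sets as identical
    return len(a.factors & b.factors) / len(union)


def hidden_disagreement(a: RiskPerception, b: RiskPerception,
                        threshold: float = 0.5) -> bool:
    """True when the ratings match but the supporting factors mostly differ.

    The 0.5 threshold is an arbitrary assumption for illustration.
    """
    return a.rating == b.rating and factor_overlap(a, b) < threshold


# The IA officer's factors come from the example list in the text;
# the authorizing official's second factor is hypothetical.
ia_officer = RiskPerception("IA officer", "low", frozenset({
    "minimal adversarial activity",
    "fully staffed network team",
    "no wireless connections",
}))
authorizing_official = RiskPerception("authorizing official", "low", frozenset({
    "no wireless connections",
    "all selected controls implemented",
}))

print(factor_overlap(ia_officer, authorizing_official))       # 0.25
print(hidden_disagreement(ia_officer, authorizing_official))  # True
```

Both people rate the network "low," yet they share only one of four cited factors, which is the situation in which a conversation about mitigation strategies could go wrong without either side noticing.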

One challenge to the study of risk perception is determining who has the ground truth on network risk levels. For example, the network team and the IA officer may consider different risk factors than the authorizing official because they often have different experiences and expertise. These risk factors may depend on a person's job role (e.g., a firewall expert may perceive risk factors related to protecting a network from intrusions that other team members do not) or on the organization's context (e.g., the organization has no firewall expert). The authorizing official, in turn, may consider additional risk factors that apply to any organization. This potential for inconsistent perceptions of network risk led us to consider two questions:

- Who has the ground truth on the network risk level at any given moment?

- What risk factors are most important to perceptions of network risk?

Our research is not intended to identify the ground truth, but rather to document the variance in the risk factors considered when forming a risk perception. The SEI research team includes Jennifer Cowley, Sam Merrell, Andrew Boyd, David Mundie, Dewanne Phillips, Harry Levinson, James Smith, Jonathan Spring, Kevin Partridge, and myself. Through a mixed-methods research approach, we aim to assess

- the factors that individuals use when formulating a perception of network risk

- whether different perceptions of network risk occur

- whether those differences stem from different network contexts, personal experiences, or job-related expertise

This research seeks to extend the field of risk perception research into cybersecurity. There have been several studies examining computed risk in cybersecurity, but little research exists on perceived risk in cybersecurity-related disciplines.

The SEI has studied computed-risk modeling, which associates risk with the likelihood that an outcome will occur and the impact it will have on those affected. Computed-risk modeling is frequently recommended for network risk assessments because it is based on measurable factors, such as the degree to which controls are implemented. In contrast, perceived risk is what individuals identify, understand, and view as the current network risk, independent of computed risk.

Risk perceptions may be based on a much broader set of factors than those used in computed-risk models. These factors may include prior successes or failures with the controls currently implemented, knowledge of the adversary's level of sophistication, and organizational constraints. A computed risk level may be just one of many factors considered when forming a perception of network risk.
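To make the contrast concrete, the sketch below shows the kind of calculation a computed-risk model performs: it combines a qualitative likelihood and impact into a low, medium, or high rating. It is a minimal illustration, not the SEI's model or a NIST-prescribed procedure; the numeric weights and thresholds are assumptions chosen for illustration. Note that the function has no input for adversarial sophistication, staffing, or prior experience with a control, which are the kinds of factors that may shape perceived risk.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Assumed numeric weights for a 3x3 likelihood/impact matrix (illustrative only).
LIKELIHOOD_WEIGHT = {Level.LOW: 0.1, Level.MEDIUM: 0.5, Level.HIGH: 1.0}
IMPACT_WEIGHT = {Level.LOW: 10, Level.MEDIUM: 50, Level.HIGH: 100}


def computed_risk_score(likelihood: Level, impact: Level) -> float:
    """Computed risk as the product of likelihood and impact weights."""
    return LIKELIHOOD_WEIGHT[likelihood] * IMPACT_WEIGHT[impact]


def risk_level(score: float) -> Level:
    """Map a score onto low/medium/high using assumed thresholds."""
    if score > 50:
        return Level.HIGH
    if score > 10:
        return Level.MEDIUM
    return Level.LOW


# Print the full 3x3 matrix of resulting ratings, one row per likelihood level.
for likelihood in Level:
    row = [risk_level(computed_risk_score(likelihood, impact)).value for impact in Level]
    print(likelihood.value, row)
```

For example, `risk_level(computed_risk_score(Level.MEDIUM, Level.HIGH))` returns `Level.MEDIUM`, because 0.5 × 100 = 50 does not exceed the assumed high-risk threshold.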

Our Research Approach

Computer scientists and practitioners have recently developed an interest in studying the human element of network security. Our project is one of the first human-subjects studies undertaken at the SEI. Before beginning the studies, the SEI conducted a moderated focus group of subject matter experts (SMEs), such as former auditing officials and individuals with industry experience in the network accreditation process, to identify as many factors as possible that may affect perceptions of network risk. Examples of these factors include

- the presence or absence of a known adversary on the network

- the system of network controls that were or were not implemented

- workforce shortages

- current adversarial activity that is known by the operator

Note: Internal factors, such as personality and mood, may be equally important when researching network risk perceptions. To scope the work appropriately, however, we did not include them in this research.

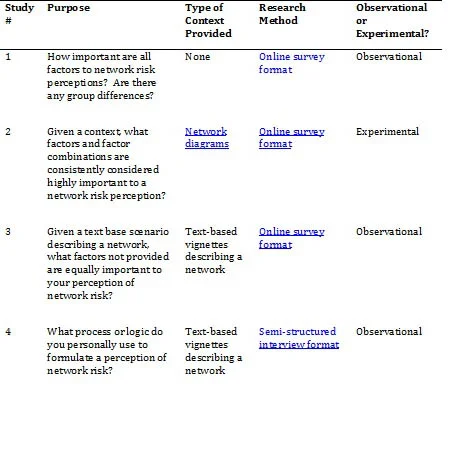

Our mixed-methods research approach involves four human-subjects studies, each with its own purpose and research method. Table 1 summarizes the purpose and methodology of each study. The completed results will be available later this year. The four small studies sampled a wide variety of network professionals, such as individuals who implement and test network controls, IA officers, and authorizing officials. We also collected job-related demographic data.

Table 1. Overview of the parameters of all four studies

The factors generated in the SME focus group were tested in all four studies to assess how important each factor is to perceptions of network risk. Because there is no unifying definition of risk, we asked participants to define risk in their own words so that we could analyze the data with respect to their definitions. In addition, we asked participants in studies 2 through 4 to rate the level of network risk as low, medium, or high using the NIST guidelines (NIST Special Publication 800-30).

In this research effort, context is operationally defined as a description of an organization's network at a particular point in time. We hope to identify factors that are consistently important to low-, medium-, and high-risk perceptions.
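The goal of finding factors that are consistently important at each rating level can be illustrated with a simple tabulation. The sketch below is a hypothetical analysis, not the study's actual method or data: the responses are invented for illustration, the factor labels are paraphrased from the SME focus group examples, and treating "cited by every participant with that rating" as "consistently important" is an assumed criterion that a real analysis would need to justify.

```python
from collections import Counter, defaultdict

# Hypothetical responses (invented for illustration, not study data):
# each pair is (low/medium/high rating, set of factors the participant cited).
responses = [
    ("low", {"no known adversary on the network", "controls implemented"}),
    ("low", {"controls implemented"}),
    ("high", {"known adversary on the network", "workforce shortages"}),
    ("high", {"known adversary on the network"}),
]


def factor_share_by_rating(responses):
    """For each rating, the fraction of participants who cited each factor."""
    cited = defaultdict(Counter)  # rating -> factor -> number of participants citing it
    totals = Counter()            # rating -> number of participants with that rating
    for rating, factors in responses:
        totals[rating] += 1
        cited[rating].update(factors)
    return {
        rating: {factor: n / totals[rating] for factor, n in counter.items()}
        for rating, counter in cited.items()
    }


def consistent_factors(shares, rating, min_share=1.0):
    """Factors cited by at least `min_share` of participants with this rating."""
    return {f for f, share in shares.get(rating, {}).items() if share >= min_share}


shares = factor_share_by_rating(responses)
print(sorted(consistent_factors(shares, "low")))   # ['controls implemented']
print(sorted(consistent_factors(shares, "high")))  # ['known adversary on the network']
```

Lowering `min_share` (say, to 0.8) would relax "consistently important" to "cited by most participants," which may be more realistic with heterogeneous respondents.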

Future Work

Earlier studies have found that risk perceptions help predict behavior. It is therefore essential that the IA officer, network team, and authorizing official have similar perceptions of network risk so that they are motivated toward effective risk-mitigating behavior.

Conversations about network risk factors provide a more comprehensive picture of network risk than the NIST rating of low, medium, or high mentioned above.

Our long-term goal is to develop a metric of perceived network risk, so that we can study the relationship between perceived risk and the performance of humans and teams. After we better understand how people perceive risk, we can enhance existing training curricula with strategies to mitigate the effects of perceived network risk on human and team performance.

Additional Resources

For more information about research in the SEI's CERT Division, please visit www.cert.org.

For more information about the SEI's research in risk, please visit www.sei.cmu.edu/risk.
