Three Risks in Building Machine Learning Systems

Machine learning (ML) systems promise disruptive capabilities in multiple industries. Building ML systems can be complicated and challenging, however, especially since best practices in the nascent field of AI engineering are still coalescing. Consequently, a surprising fraction of ML projects fail or underwhelm. Behind the hype, there are three essential risks to analyze when building an ML system: 1) poor problem-solution alignment, 2) excessive time or monetary cost, and 3) unexpected behavior once deployed. In this post I'll discuss each risk and provide a way of thinking about risk analysis in ML systems.

Risk #1: Poor Problem-Solution Alignment

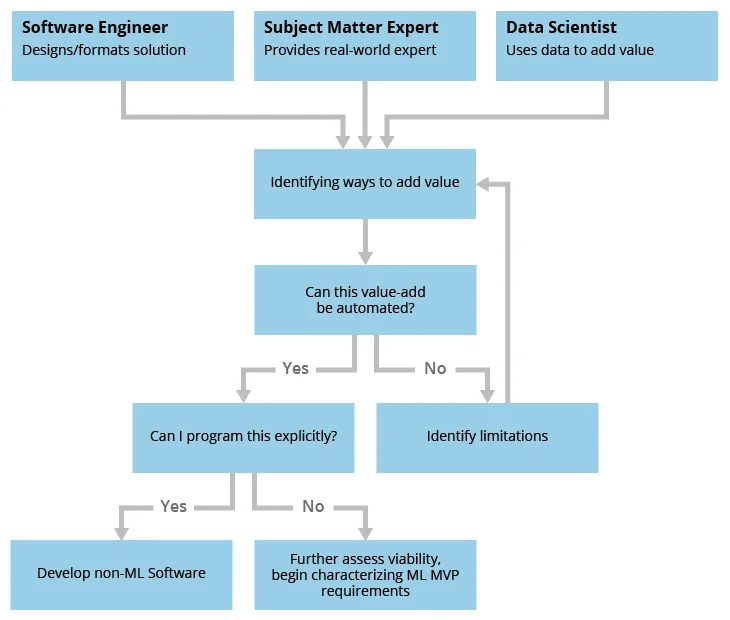

The very first risk ML practitioners face is applying ML to the wrong problem. The purpose of integrating ML into a product or service is to add value. Simply put, projects should center on ways to add value, not on machine learning. This point may seem obvious, but it's easy to get caught in the hype-storm and wind up with AI FOMO: the worry that you'll be left in the dust if you're not poised to deploy a deep neural net of some kind. But there's a reason that the very first of Google's Rules of Machine Learning is to be open to shipping a non-ML system, and that the first of the SEI's foundational principles for engineering AI systems is to ensure you have a problem that both can and should be solved by AI.

Poor problem-solution alignment can also be a symptom of overly segregated roles. If data scientists are expected to propose projects but aren't in touch with customer and business needs, they lack the information required to build a useful ML system. Conversely, when other team members bring fully formed projects or problems to data scientists to solve with machine learning, data scientists have no chance to help determine where machine learning is most suitable for adding value. Ideally, projects should emerge from a collaborative search for ways to improve a product or service, one that brings together three types of people:

- a software engineer who can help to determine the form of a solution and design the solution with respect to how the data, code, and output relate to the customer need and the organization's mission

- a subject matter expert who provides real-world context and validation of whether a machine learning capability adds value by streamlining production and addressing rate-limiting factors

- a data scientist who knows how to use data to add value and assesses problem-solution alignment

It is essential for these three types of people to exercise their creativity and unique perspectives to discover new possibilities and assess their feasibility.

Defining the Minimum Viable Product (MVP)

After settling on an area of the business to improve, the team scopes the problem and develops requirements by asking, "What's the minimum we need for the capability to actually be useful?" Part of this scoping process should consider whether an ML solution is even appropriate for the problem. These requirements characterize the minimum viable product (MVP). The data scientist's role in this process is then to convert business requirements into quantitative ML system requirements and metrics.
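As a sketch of what converting business requirements into quantitative ML requirements might look like, the snippet below encodes a hypothetical MVP as explicit thresholds and checks a candidate model's measured metrics against them. The class, the requirement names (`min_recall`, `max_latency_ms`, and so on), and every number are illustrative assumptions, not values from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MVPRequirements:
    """Hypothetical quantitative requirements derived from a business need."""
    min_recall: float          # e.g., "catch at least 90% of fraudulent claims"
    min_precision: float       # e.g., "don't flood analysts with false alarms"
    max_latency_ms: float      # e.g., "respond before the page times out"
    max_model_size_mb: float   # e.g., "must fit on the target device"


def unmet_requirements(req: MVPRequirements, measured: dict[str, float]) -> list[str]:
    """Describe every requirement that the measured metrics fail to meet."""
    failures = []
    if measured["recall"] < req.min_recall:
        failures.append(f"recall {measured['recall']:.2f} < {req.min_recall:.2f}")
    if measured["precision"] < req.min_precision:
        failures.append(f"precision {measured['precision']:.2f} < {req.min_precision:.2f}")
    if measured["latency_ms"] > req.max_latency_ms:
        failures.append(f"latency {measured['latency_ms']:.0f} ms > {req.max_latency_ms:.0f} ms")
    if measured["model_size_mb"] > req.max_model_size_mb:
        failures.append(
            f"model size {measured['model_size_mb']:.0f} MB > {req.max_model_size_mb:.0f} MB")
    return failures


# Placeholder requirements and measurements, for illustration only.
mvp = MVPRequirements(min_recall=0.90, min_precision=0.50,
                      max_latency_ms=100.0, max_model_size_mb=50.0)
candidate = {"recall": 0.93, "precision": 0.41, "latency_ms": 35, "model_size_mb": 120}
print(unmet_requirements(mvp, candidate))
# ['precision 0.41 < 0.50', 'model size 120 MB > 50 MB']
```

Writing the requirements down this way makes disagreements visible early: here the candidate clears the recall bar but would fail the MVP on precision and size.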

The metrics selection process should also enable the team to determine what kinds of ML solutions might be appropriate. This process requires the data scientist to straddle two roles: representing stakeholder interests and guiding engineering efforts. Doing both requires excellent communication skills, good collaboration with a subject matter expert representing the business case, or specialized knowledge in the domain. These demands are part of why highly skilled data scientists are in short supply and highly prized.

Metrics for assessing the performance of an ML component should go beyond an accuracy constraint to account for qualities such as the asymmetric costs of false positives vs. false negatives, model size and memory requirements, training and inference time, and model interpretability. Yet another opportunity for problem-solution misalignment arises when ML system metrics inadvertently steer the MVP away from the organization's mission.
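To make the point about asymmetric costs concrete, the sketch below scores a binary classifier by total misclassification cost rather than accuracy and picks the decision threshold that minimizes that cost. The scores, labels, and the 10:1 cost ratio are made-up assumptions for illustration.

```python
def confusion_counts(scores, labels, threshold):
    """Count false positives and false negatives when predicting positive for score >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn


def total_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Weight each kind of error by its (assumed) business cost."""
    fp, fn = confusion_counts(scores, labels, threshold)
    return fp * cost_fp + fn * cost_fn


def best_threshold(scores, labels, cost_fp, cost_fn):
    """Try every observed score, plus +inf (predict all negative); return the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates, key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))


# Hypothetical model scores and true labels (1 = positive).
scores = [0.05, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
labels = [0, 0, 1, 0, 0, 1, 1, 1]

print(best_threshold(scores, labels, cost_fp=1, cost_fn=1))   # 0.6
print(best_threshold(scores, labels, cost_fp=1, cost_fn=10))  # 0.35
```

With equal costs the cheapest threshold is 0.6 (a single error); once a missed positive is assumed to cost ten times a false alarm, the same model should run at 0.35, accepting two false positives to avoid any misses. The metric, not just the model, determines what "good" means.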

Getting the requirements wrong is a risk in any software engineering project, but the likelihood is elevated in ML engineering. A good data scientist is aware of these concerns and communicates them to the software engineer and subject matter expert. After settling on candidate requirements, the product team then asks, "What do I need to actually build this system?"

Risk #2: Incurring Excessive Costs

The next risk to consider before launching a project is the possibility that engineering an ML system consumes too much time and too many resources. A consequential difference between traditional software systems and ML systems is that ML systems are dependent on data. Acquiring data is expensive, and large tech companies that can stomach the cost will often employ sizeable teams to do nothing but expertly label data. After large data sets are acquired, they are technically challenging and expensive to maintain, and they demand intense computing resources. These factors raise staffing costs by imposing a need for data engineering, math, and statistics skills in addition to software engineering.

ML systems are also usually more expensive to debug and maintain than traditional software systems. One reason is that ML systems are prone not only to ordinary software bugs but also to flaws such as over- or under-fitting, difficulty training due to exploding or vanishing gradients, or learning something that satisfies an optimization metric but violates the engineer's intent. These flaws cannot always be found with a debugger, and diagnosing them requires data science know-how. Maintaining a functioning model entails the additional cost of updating training data and retraining so that the training data continue to closely reflect the real-world data the model encounters. The point here is to recognize that ML projects tend to rack up costs quickly and to exceed expectations for the time and resources they require.
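The gradient problems mentioned above can be seen without any ML framework. By the chain rule, the gradient flowing back through a stack of layers is a product of per-layer derivatives, so it tends to shrink or grow geometrically with depth. The toy below uses a hypothetical chain of scalar "layers" that share one weight `w`; it illustrates the mechanism and is not a diagnostic tool for real networks.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(depth: int, w: float, activation: str, x: float = 0.5) -> float:
    """d(output)/d(input) for the toy chain h_{k+1} = f(w * h_k), via the chain rule."""
    h, grad = x, 1.0
    for _ in range(depth):
        z = w * h
        if activation == "sigmoid":
            h = sigmoid(z)
            local = w * h * (1.0 - h)  # w * sigmoid'(z); sigmoid'(z) <= 0.25
        elif activation == "linear":
            h = z
            local = w                  # identity activation has derivative 1
        else:
            raise ValueError(f"unknown activation: {activation}")
        grad *= local                  # chain rule: multiply per-layer derivatives
    return grad


for depth in (5, 20):
    print(depth,
          f"sigmoid(w=1): {input_gradient(depth, 1.0, 'sigmoid'):.1e}",
          f"linear(w=1.5): {input_gradient(depth, 1.5, 'linear'):.1e}")
```

At depth 20 the sigmoid chain's gradient falls below 0.25^20 (about 9e-13), while the linear chain with w = 1.5 has grown to 1.5^20 (about 3.3e3). Neither failure raises an exception, which is why such problems slip past a debugger.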

The Difficulty of Planning to Build an ML System

We've seen that ML systems can be costly to build, but it's not obvious how to budget for an ML project and plan ahead to minimize costs. ML engineering is an example of research programming. Research programming has three challenges:

- Progress can be slow and expensive because of the experimentation iterations required to understand the capabilities of an ML model.

- Progress can be hard to predict, which makes budgeting hard.

- The next experiment depends on the results of the current experiment, which makes planning uncertain.

This last challenge requires that a team be able to adapt on the fly, which is easier said than done given the "changing anything changes everything" (CACE) phenomenon in ML engineering: because a model entangles its inputs, a change to any one feature, hyperparameter, or data source can shift the behavior of the whole system. Adapting on the fly can result in unforeseen engineering changes to both the ML model and the software system, which can have significant schedule and cost impacts.
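A toy illustration of CACE, assuming ordinary least squares as the model: adding one new feature that happens to be correlated with an existing one changes the weight learned for the existing feature, even though nothing about that feature changed. The data are synthetic, and `fit_one` and `fit_two` are hypothetical hand-rolled solvers, not a library API.

```python
def fit_one(x, y):
    """Least-squares slope for y ~ a*x (no intercept)."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


def fit_two(x1, x2, y):
    """Least-squares weights for y ~ a*x1 + b*x2, solving the 2x2 normal equations by Cramer's rule."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * t for a, t in zip(x1, y))
    s2y = sum(b * t for b, t in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return (s1y * s22 - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Synthetic data: the target truly equals x1 + 2*x2, and x2 is correlated with x1.
x1 = [1, 2, 3, 4]
x2 = [1, 2, 2, 4]
y = [a + 2 * b for a, b in zip(x1, x2)]

print("x1 only:", fit_one(x1, y))         # x1 only: 2.8
print("x1 and x2:", fit_two(x1, x2, y))   # x1 and x2: (1.0, 2.0)
```

The weight on `x1` drops from 2.8 to 1.0 once `x2` is added, so anything downstream that relied on the old weight (thresholds, explanations, monitoring baselines) would need revisiting. In real models with many entangled inputs the effect is much harder to see, which is what makes the CACE phenomenon expensive.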

To hammer home the point that we tend to underestimate the costs of building ML systems, here are some disheartening figures from a study by Alegion surveying hundreds of AI-adjacent industry employees:

- 81 percent admit training AI with data is harder than expected

- 96 percent encounter challenges related to training data quality and quantity

- 78 percent of AI or ML projects stall at some stage before deployment

ML engineering is an especially uncertain and expensive subset of software engineering. Established software engineering processes, such as Agile and Lean software development, have practices for managing uncertain requirements and task dependencies that may take longer than a predetermined sprint schedule. Because these processes not only allow for reorienting in response to change but also embrace such uncertainty, they are well suited to managing ML engineering projects.

CACE Study: ML for Code Analysis

Here's an example of the CACE principle in action, on our own home turf. One of our interests at the SEI is using ML to analyze software. A reasonable starting point for a project might involve parsing source code into a linear stream of tokens. Engineering better features might involve extracting a syntax tree or dependency graph, which entails selecting a different compiler tool, recompiling the code, storing the results in a database, likely changing the machine learning architecture, and training a new model from scratch. A simple feature engineering experiment practically requires building a whole new pipeline. The team needs to accept up front that a large amount of engineering may be required to produce the intermediate results needed just to understand the problem, and to build that engineering overhead into cost and time projections from the beginning.
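As a small, hypothetical illustration of how the choice of representation ripples through a pipeline, the sketch below extracts two kinds of features from the same Python snippet using the standard-library `tokenize` and `ast` modules. The SEI work described above is not limited to Python, and these two extractors are our own simplifications; the point is only that their outputs have different shapes, so storage, model input, and training all have to change when you switch from one to the other.

```python
import ast
import io
import tokenize
from collections import Counter

# Hypothetical input program to featurize.
SOURCE = """
def area(r):
    if r < 0:
        raise ValueError("negative radius")
    return 3.14159 * r * r
"""


def token_stream(source: str) -> list[str]:
    """Linear features: the sequence of token strings, skipping layout-only tokens."""
    skip = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT, tokenize.DEDENT,
            tokenize.ENDMARKER, tokenize.COMMENT}
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [tok.string for tok in tokens if tok.type not in skip]


def ast_node_counts(source: str) -> Counter:
    """Structural features: how often each syntax-tree node type occurs."""
    return Counter(type(node).__name__ for node in ast.walk(ast.parse(source)))


print(token_stream(SOURCE)[:8])
print(ast_node_counts(SOURCE).most_common(3))
```

The first function yields a variable-length sequence suited to, say, a sequence model; the second yields a bag of node-type counts suited to a fixed-width classifier. Moving from one to the other is exactly the kind of "simple feature engineering experiment" that forces a new pipeline.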

Increasing Cost Awareness

Appreciating up front the engineering challenges you may face with an ML project can help determine whether it still makes sense to launch the project, and it also helps set realistic expectations for the road ahead. Part of determining whether it makes sense to move forward with an ML project is assessing whether an alternative solution can address the business need. It can be easy to get swept up in the power of ML solutions, but they are expensive to develop and maintain because they are fragile to changes in the data, business case, or problem statement.
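One inexpensive way to check whether a non-ML alternative might already meet the need is to score a trivial baseline, or a simple hand-written rule, on the same held-out data before funding model development. The sketch below compares a majority-class baseline and a hypothetical keyword rule for flagging urgent support tickets; the rule, the data, and the 0.90 accuracy requirement are all illustrative assumptions.

```python
from collections import Counter


def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def majority_baseline(train_labels, n):
    """Predict the most common training label for every example."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return [most_common] * n


def keyword_rule(texts, keywords=("refund", "broken", "cancel")):
    """Hypothetical non-ML rule: flag a ticket as urgent (1) if it mentions a keyword."""
    return [int(any(k in t.lower() for k in keywords)) for t in texts]


# Toy data, for illustration only (1 = urgent).
train_labels = [0, 0, 0, 1, 0, 1, 0, 0]
test_texts = ["Where is my order?", "Item arrived broken", "Please cancel my plan",
              "Love the product", "How do I reset my password?", "I want a refund now",
              "My screen is cracked"]
test_labels = [0, 1, 1, 0, 0, 1, 1]

required_accuracy = 0.90  # hypothetical MVP requirement
for name, preds in [("majority", majority_baseline(train_labels, len(test_labels))),
                    ("keyword rule", keyword_rule(test_texts))]:
    acc = accuracy(preds, test_labels)
    print(f"{name}: accuracy {acc:.2f}, meets MVP: {acc >= required_accuracy}")
# majority: accuracy 0.43, meets MVP: False
# keyword rule: accuracy 0.86, meets MVP: False
```

Neither clears the hypothetical bar here, but the keyword rule gets close at a tiny fraction of the cost, which sets the level an ML model would have to beat to justify its expense.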

To get a sense of the possible costs and the ways a project can pan out, simply walk through the ML development cycle in advance and consider how things might go wrong. While this is just a thought experiment and not completely rigorous, there aren't many alternatives currently, and the tendency to underestimate costs is a real problem. Below is a list of possible costs you might face, based roughly on Amershi's model of the ML development cycle.

- Data
  - Acquisition
  - Storage and computing resources
  - Analyzing for bias, imbalance, and similarity to the real world
  - Cleaning
  - Labeling
  - Pre-processing and feature engineering
  - Updating data to meet data freshness requirements

- Building the ML system
  - Staffing diverse skill sets (engineering, statistics, etc.)
  - Experimentation: consider algorithms, hyperparameters, ways to measure success, and number of experiments
  - Model size: consider impact on training time, storage space, and iteration speed
  - Model interpretability: difficulty interpreting results can make debugging take longer, increase the number of experiments, and slow down iteration speed
  - Reproducibility: organizing experiments, tracking versions of data and models, and communicating with other team members. Failure to organize can increase the number of experiments, extend debugging time, or result in duplicated efforts.
  - Converting research code into production code
  - Engineering the ML system (not just the model): supporting infrastructure, development of the software elements, development of the production environment, etc.

- Testing and evaluation
  - Software testing: may include static analysis, dynamic analysis, mutation testing, fuzzing, formal verification, etc.
  - ML testing: interpreting dev set metrics, assessing robustness/security/privacy/fairness, error analysis
  - Metamorphic / simulation-based testing
  - System-level real-world testing

- Deploying and monitoring
  - Situational awareness, analysis of data from monitoring, interpreting behavior
  - Response to unexpected behaviors

The list above is not exhaustive, and not all elements may be relevant to a particular project.
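The walk-through can be made slightly more concrete by attaching a rough low/high estimate to each line item and summing the ranges, which at least exposes where the uncertainty is concentrated. The phases below follow the list above, but the `CostItem` structure, the person-week units, and every number are placeholders to be replaced with your own estimates; nothing here is data from a real project.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    phase: str
    item: str
    low: float   # optimistic estimate, in person-weeks (placeholder unit)
    high: float  # pessimistic estimate, in person-weeks


def summarize(items):
    """Total the low/high estimates per phase and overall."""
    phases = {}
    for c in items:
        lo, hi = phases.get(c.phase, (0.0, 0.0))
        phases[c.phase] = (lo + c.low, hi + c.high)
    total = (sum(c.low for c in items), sum(c.high for c in items))
    return phases, total


items = [  # placeholder numbers, not real estimates
    CostItem("Data", "Labeling", 4, 20),
    CostItem("Data", "Cleaning", 2, 8),
    CostItem("Building", "Experimentation", 6, 30),
    CostItem("Building", "Research code to production code", 3, 12),
    CostItem("Testing", "ML testing", 2, 10),
    CostItem("Deploying", "Monitoring and response", 1, 6),
]

phases, (low, high) = summarize(items)
for phase, (lo, hi) in phases.items():
    print(f"{phase:10s} {lo:5.0f} - {hi:5.0f} person-weeks")
print(f"{'Total':10s} {low:5.0f} - {high:5.0f} person-weeks (spread {high / low:.1f}x)")
```

Even with placeholder inputs, the exercise makes one thing visible: the pessimistic total is several times the optimistic one, and the widest ranges (here, experimentation and labeling) are where planning attention should go first.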

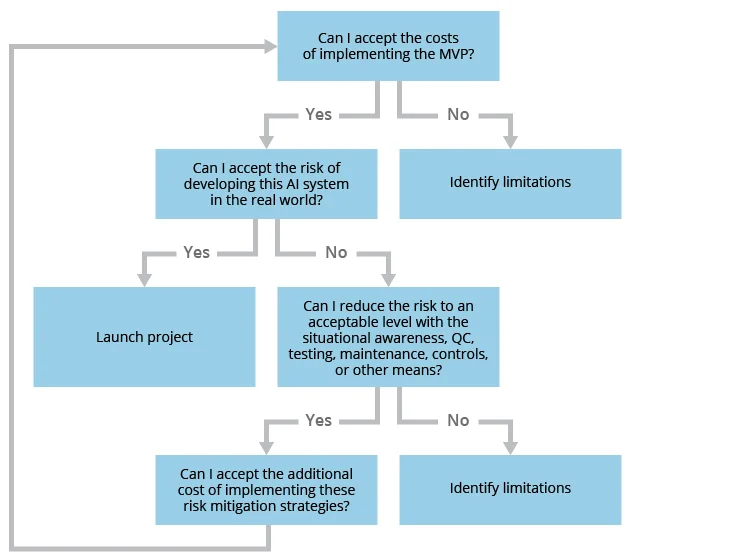

Risk #3: Unexpected Behavior and Unintended Consequences

If you're prepared to accept the costs of satisfying the MVP requirements, a final risk to consider is that ML systems can exhibit a range of unexpected behaviors in the real world. Examples include vulnerability to adversarial examples, interactions between ML models and software systems, feedback loops which can propagate biases in training data, and others. It may be possible to mitigate these risks to an extent by vetting the training data, adding features to the ML system that guide safe behavior, doing additional quality control and testing prior to release, and carefully maintaining a live model. But these additional costs should be factored in when re-evaluating risk #2.
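Metamorphic testing, listed among the testing costs above, is one way to probe for unexpected behavior before release without a labeled answer for every input: you assert a relation that should hold between the outputs for related inputs. The sketch below checks a hypothetical loan-scoring function against two assumed relations (raising income should never lower the score, and an applicant's name should not affect it). Both scoring functions and both relations are illustrative assumptions.

```python
import random


def score(applicant: dict) -> float:
    """Hypothetical stand-in for a trained model: higher means more creditworthy."""
    ratio = applicant["income"] / (1.0 + applicant["debt"])
    return min(1.0, 0.1 * ratio)


def leaky_score(applicant: dict) -> float:
    """A deliberately flawed variant that (wrongly) depends on the applicant's name."""
    return score(applicant) * (0.9 if applicant["name"].startswith("A") else 1.0)


def check_metamorphic_relations(model, applicants, seed=0):
    """Return (relation, applicant) pairs for every relation the model violates."""
    rng = random.Random(seed)
    failures = []
    for a in applicants:
        base = model(a)
        # Relation 1: increasing income should never decrease the score.
        richer = dict(a, income=a["income"] * (1 + rng.uniform(0.01, 0.5)))
        if model(richer) < base:
            failures.append(("monotone in income", a))
        # Relation 2: a field the model should ignore must not change the output.
        renamed = dict(a, name="applicant-" + str(rng.randrange(10**6)))
        if model(renamed) != base:
            failures.append(("invariant to name", a))
    return failures


applicants = [{"name": "Alice", "income": 50.0, "debt": 10.0},
              {"name": "Bob", "income": 80.0, "debt": 40.0}]
print(check_metamorphic_relations(score, applicants))  # []
print([rel for rel, _ in check_metamorphic_relations(leaky_score, applicants)])
# ['invariant to name']
```

The relations encode intent rather than exact outputs, so they can catch a model that looks accurate on a dev set yet behaves in ways the team would consider unacceptable in the field.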

Tradeoff Between Excessive Cost and Unintended Behavior

As shown in Figure 2, you may need to go back and forth between examining cost and unexpected behavior risks several times during planning, before striking a balance between your tolerance for production risks and your budget. Here are some examples to illustrate the tradeoff between engineering costs and preventive measures:

- To ensure that the data and model are continually attuned to an ever-changing real-world setting, you need to routinely update your data, assess the data for bias, imbalance, and representativeness, and retrain your model(s). The price paid is a reduced iteration speed.

- Discrepancies between development and production environments (not just the datasets), between two versions of the training data, and between your mission and your model's objective function can culminate in undesirable results and expensive debugging. Situational awareness efforts reduce the likelihood of being bitten by one of these bugs, but they require careful thinking and offer no guarantees.

- Testing an ML system can provide information about how it will behave in the real world, which helps to mitigate, or at least inform, risk #3. However, testing isn't cheap or easy. Some estimates suggest that testing autonomous systems accounts for 25 percent of all development costs.
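As one concrete form of the routine data assessment described in the first bullet, the sketch below computes the population stability index (PSI), a common heuristic for how far a feature's production distribution has drifted from its training distribution. The bin edges, the sample values, and the 0.1 / 0.25 alert levels are conventional rules of thumb assumed here, not requirements from this post.

```python
import math


def psi(expected, actual, edges, eps=1e-4):
    """Population stability index between two samples, binned by ascending edges."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # bin index = edges passed
        # Clip empty bins to eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e_p, a_p = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_p, a_p))


def drift_level(value):
    """Assumed rule-of-thumb interpretation of a PSI value."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate drift"
    return "significant drift"


# Hypothetical feature values (e.g., customer age) at training time and in production.
training = [20, 22, 25, 27, 30, 31, 33, 35, 38, 40, 42, 45, 48, 50, 55, 60]
production = [v + 15 for v in training]
edges = [30, 40, 50]

print(drift_level(psi(training, training, edges)))    # stable
print(drift_level(psi(training, production, edges)))  # significant drift
```

A check like this is cheap to run on every batch of production data, but choosing bins, thresholds, and what to do when an alert fires is exactly the careful thinking the bullets above describe.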

The takeaway is that assessing tolerance for unintended consequences at the start of a project helps to gauge how engineering effort should be distributed, because tolerance for failure or unreliable output shapes the types of preventive measures required. In turn, this assessment helps an organization estimate development costs, determine whether a project falls within its tolerance for cost, and judge whether those costs are excessive relative to the risk associated with failure.

The success stories of ML systems tend to come from applications where the risk associated with failure is low (e.g., recommending a movie or serving an ad that is not relevant to the recipient) and the capacity to incur costs is high, as at companies like Google and Amazon. A major disconnect for people outside the field is not understanding why the successes in these applications do not extend to higher-risk and more cost-constrained applications.

Irreducible Risk

Finally, some risks cannot be mitigated. Researchers know how to build ML systems, but assuring them remains an open challenge. Similarly, adversarial ML attacks and defenses are still being discovered and explored. At present, we must accept a certain level of risk in deploying AI systems while researchers converge on best practices in these fields. Even with all of these factors to keep in mind, I don't want to discourage taking risks. Instead, my point is to acknowledge the risks and, if they can't be mitigated, determine whether they fall within tolerance before launching.

Future Research: Adaptable AI

We've seen that ML projects can rack up technical debt during development and behave unexpectedly during production. Due to the CACE phenomenon, adapting to changing requirements on the fly is often expensive. We should therefore strive to build ML pipelines around the assumption that change is necessary but costly. Future research should seek to answer the question, "How do we design ML pipelines up front to be composable and adaptable?" We will continue to investigate this complex question as part of our ongoing efforts to characterize best practices for AI engineering.

In the meantime, we've identified three of the most foundational risks in ML systems, and provided a basic framework for managing them. For now, simply assessing tolerance for unexpected behaviors in the production environment, and developing a better sense of the costs involved in building ML systems can stop bad projects from getting off the ground, and cut costs in projects that launch.

Additional Resources

Read the SEI Blog post "AI Engineering: 11 Foundational Principles for Decision Makers" by Angela Horneman, Andrew Mellinger, and Ipek Ozkaya.

Matthew Churilla, "Toward Machine Learning Assurance," Cybersecurity Developmental Test (CyberDT) Cross-Service Working Group (XSWG).
