System Resilience Part 2: How System Resilience Relates to Other Quality Attributes

PUBLISHED IN

Cybersecurity Engineering

To most people, a system is resilient if it continues to perform its mission in the face of adversity. In other words, a system is resilient if it continues to operate appropriately and provide required capabilities despite excessive stresses that can or do cause disruptions.

System resilience is not an isolated quality attribute. As this post, the second in a series on system resilience, details, it is directly related to robustness, safety, cybersecurity, anti-tamper, survivability, capacity, longevity, and interoperability. It is less closely related to adaptability, availability, maintainability, performance, reliability, and reparability.

System Resilience - a Brief Recap

Clearly, system resilience is important, because no one wants a brittle system that cannot overcome the inevitable adversity. If adverse events or conditions cause a system to fail to operate appropriately, all manner of harm to valuable assets can result.

Unfortunately, the preceding definitions of system resilience contain significant ambiguities and do not address specific questions. What kinds of adversities and disruptions are included? What does "continue to perform its mission" mean, and what harm can occur when the system fails to operate appropriately? In the first post in this series on system resilience, I addressed these questions by providing the following more detailed and nuanced definition:

A system is resilient to the degree to which it rapidly and effectively protects its critical capabilities from harm caused by adverse events and conditions.

I then clarified this definition as follows:

- Protection consists of detecting adverse events and conditions, responding to these adversities by limiting the harm they are causing to critical assets, and recovering from that harm afterwards. Prevention of adversities is outside of the scope of system resilience, because resilience assumes that adversities will occur and is concerned with continuity of critical services in the face of these adversities.

- Assets are system capabilities, components, and data, as well as system-external assets that must be protected from harm caused by adverse events and conditions because they implement the system's critical capabilities.

- Each of these assets is subject to many different types of asset-specific harm, such as loss or degradation of service, damage to or destruction of critical system components, loss of confidentiality and integrity of critical data, and damage or destruction of an external system or object needed for continued operations.
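The three protection subfunctions in this definition (detection, response, and recovery) can be sketched as a small control loop. The following is a minimal illustration rather than a prescribed design: the `CriticalService` class, the load limit of 80, and the 50 percent degraded mode are hypothetical names and values chosen only for the example.

```python
from dataclasses import dataclass, field


@dataclass
class CriticalService:
    """Hypothetical critical capability (names and numbers are illustrative)."""
    service_level: int = 100              # percent of nominal service
    degraded: bool = False
    events: list = field(default_factory=list)

    def detect(self, load: int, limit: int = 80) -> bool:
        """Detection: recognize an adverse condition (load above an assumed limit)."""
        return load > limit

    def respond(self) -> None:
        """Response: limit harm by dropping to a reduced but safe service level."""
        self.service_level = 50
        self.degraded = True
        self.events.append("respond")

    def recover(self) -> None:
        """Recovery: restore full service once the adversity has passed."""
        self.service_level = 100
        self.degraded = False
        self.events.append("recover")


def protect(service: CriticalService, loads: list) -> list:
    """Run detect -> respond -> recover over a series of load readings.

    Returns the service level observed after each reading.
    """
    levels = []
    for load in loads:
        adverse = service.detect(load)
        if adverse and not service.degraded:
            service.respond()
        elif not adverse and service.degraded:
            service.recover()
        levels.append(service.service_level)
    return levels


levels = protect(CriticalService(), [40, 95, 90, 30])
print(levels)  # [100, 50, 50, 100]
```

Nothing in the loop tries to prevent the high load. Consistent with the definition, it only detects the adversity, limits the harm, and restores service afterwards.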

While my first post explored the various interpretations of system resilience, in the remainder of this post I explore how system resilience relates to other quality attributes.

Resilience in Terms of Subordinate Quality-Attributes

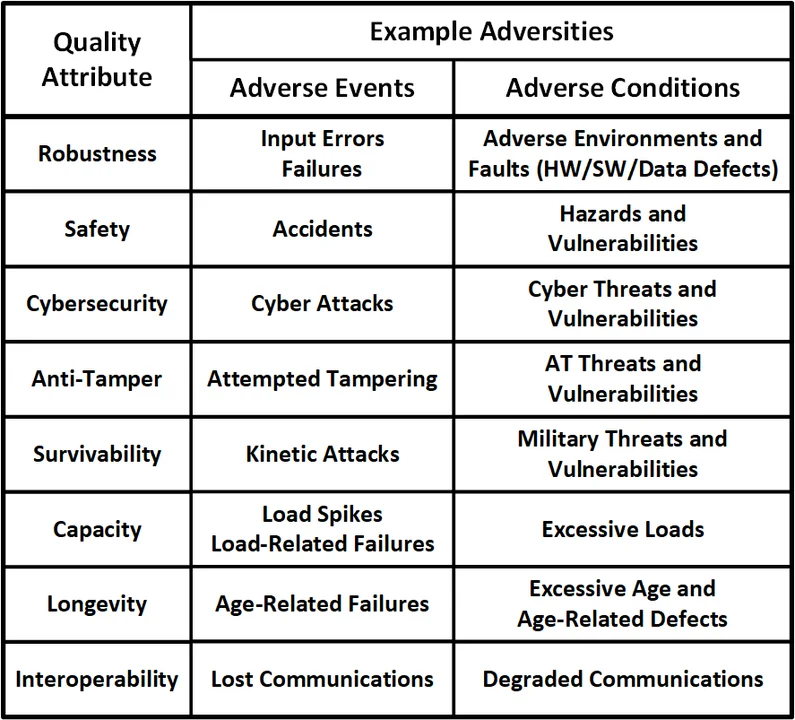

As shown in the following table, the adverse events and conditions to which a system must be resilient can be categorized in terms of their associated quality attributes: robustness, safety, cybersecurity, anti-tamper, survivability, capacity, longevity, and interoperability.

Although survivability is sometimes used as a synonym for resilience, this post uses the military definition of survivability: the ability of the system to remain mission capable after a single engagement. Survivability is typically characterized in terms of a system's ability to avoid detection by an enemy (i.e., low detectability), its ability to avoid being hit by a weapon (i.e., low susceptibility), its ability to withstand the hit (i.e., low vulnerability), and its ability to recover from being hit (i.e., high recoverability).

Resilience in Terms of Quality-Attribute-Based Features

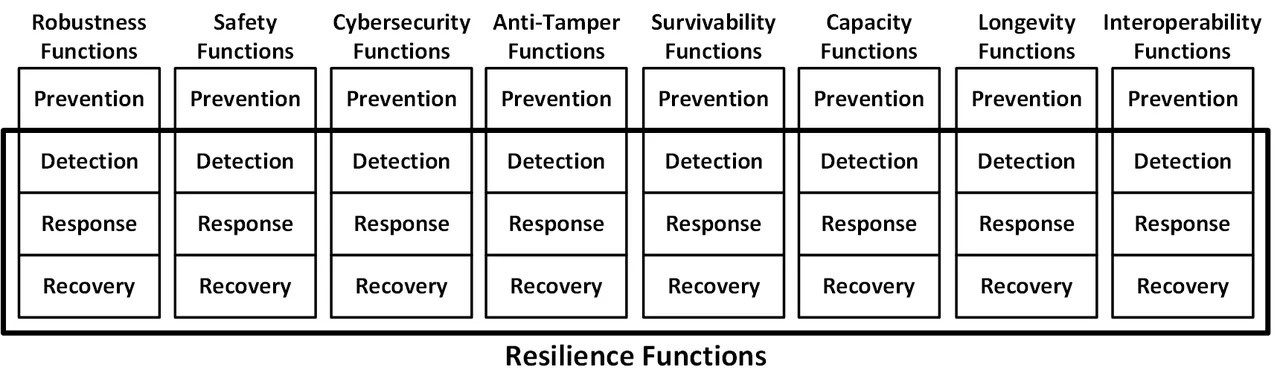

System resilience is achieved by incorporating an appropriate set of architectural, design, and implementation features (e.g., safeguards, security controls, architectural and design patterns, and implementation idioms). As shown in the following figure, resilience features can be categorized in two ways:

- Resilience features support one or more of the eight subordinate quality attributes (e.g., robustness, safety, and cybersecurity) of system resilience that are listed in the above table. The same resilience feature can often improve several of these quality attributes, so the attributes should not be viewed as unrelated goals to be achieved independently of each other. Rather, they should be viewed holistically, largely under the rubric of system resilience. These resilience features will be the subject of the fourth post in this series.

- Resilience features will support one of the three protection subfunctions of resilience: detection, reaction, and recovery. System resilience is concerned with ensuring continuity of operations after an adverse event occurs or an adverse condition exists, so prevention of adversity is outside of the scope of system resilience, even though it is within the scope of these subordinate quality attributes.
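The two-way categorization above can be pictured as a small catalog in which each feature is tagged with the quality attributes it supports and with a protection subfunction. The sketch below is only an illustration under stated assumptions: the `ResilienceFeature` type, the `select` helper, and the three catalog entries (including which attributes each entry is tagged with) are hypothetical examples, not taken from the table or figure.

```python
from dataclasses import dataclass

# The eight subordinate quality attributes and three subfunctions named in the text.
QUALITY_ATTRIBUTES = frozenset({
    "robustness", "safety", "cybersecurity", "anti-tamper",
    "survivability", "capacity", "longevity", "interoperability",
})
SUBFUNCTIONS = frozenset({"detection", "reaction", "recovery"})


@dataclass(frozen=True)
class ResilienceFeature:
    """Hypothetical record tagging a feature along both dimensions."""
    name: str
    attributes: frozenset
    subfunction: str

    def __post_init__(self):
        # Reject tags that are not in the two vocabularies above.
        unknown = self.attributes - QUALITY_ATTRIBUTES
        if unknown:
            raise ValueError(f"unknown quality attributes: {sorted(unknown)}")
        if self.subfunction not in SUBFUNCTIONS:
            raise ValueError(f"unknown subfunction: {self.subfunction}")


# Illustrative entries only; a real catalog would come from the system's design.
CATALOG = [
    ResilienceFeature("input validation",
                      frozenset({"robustness", "cybersecurity"}), "detection"),
    ResilienceFeature("failover to redundant component",
                      frozenset({"robustness", "survivability"}), "reaction"),
    ResilienceFeature("restore from known-good backup",
                      frozenset({"robustness", "cybersecurity"}), "recovery"),
]


def select(catalog, attribute, subfunction=None):
    """Return names of features supporting an attribute, optionally by subfunction."""
    return [
        f.name for f in catalog
        if attribute in f.attributes
        and (subfunction is None or f.subfunction == subfunction)
    ]


print(select(CATALOG, "cybersecurity"))
# ['input validation', 'restore from known-good backup']
print(select(CATALOG, "robustness", "reaction"))
# ['failover to redundant component']
```

Because one entry can carry several attribute tags, the same feature shows up under more than one quality attribute, which is the reason the text recommends treating the attributes holistically.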

Resilience in Terms of Tolerance

Another way of looking at system resilience is that a system is resilient to the degree to which it tolerates various types of adverse events and conditions. In other words, a system is resilient to the degree to which it exhibits the following types of tolerance (organized by subordinate quality attribute):

- Robustness.

- Environmental Tolerance is the system's ability to handle adverse environmental events or conditions. For example, one reason autonomous land vehicles incorporate redundant sensors of multiple types (e.g., camera, lidar, radar, and ultrasonic sensors) is so that sensor fusion and AI software can safely and accurately identify and classify road objects during adverse environmental conditions (e.g., night, fog, rain, and snow).

- Error Tolerance is "the ability of a system or component to continue normal operation despite the presence of erroneous inputs." [ISO/IEC/IEEE 24765:2017] Error tolerance includes the system's ability to detect, respond to, or recover from erroneous inputs from both humans and external systems.

- Fault Tolerance is the "degree to which a system, product, or component operates as intended despite the presence of... faults." Fault tolerance includes the system's ability to prevent, detect, respond to, or recover from faults. A fault is defined as (1) a "defect in a system or a representation of a system that if executed/activated could potentially result in an error" [ISO/IEC 15026-1:2013], (2) a "defect in a hardware device or component" [ISO/IEC/IEEE 24765:2017], and (3) an "incorrect step, process, or data definition in a computer program" [ISO/IEC 25040:2011].

- Failure Tolerance is the system's ability to detect, respond to, or recover from total or partial failures. A failure is the termination of the ability of a system to perform a required function or its inability to perform within previously specified limits; an externally visible deviation from the system's specification [ISO/IEC 15026-1:2013].

- Safety.

- Accident Tolerance is the system's ability to detect, respond to, and recover from accidents and any resulting accidental harm to assets.

- Hazard Tolerance is the system's ability to detect and respond to the existence of hazards (e.g., to avoid accidents or limit the harm they cause).

- Cybersecurity.

- Cyberattack Tolerance is the system's ability to protect its critical capabilities from harm due to cyberattack.

- Threat Tolerance is the system's ability to detect and respond to the existence of cybersecurity threats and vulnerabilities (e.g., by making a successful attack less likely).

- Anti-Tamper.

- Tamper Tolerance (Anti-Tamper) is the system's ability to protect its critical capabilities from harm due to tampering (i.e., reverse engineering) that is intended to disclose or modify critical program information (CPI), and from harm due to vulnerabilities to such tampering.

- Survivability.

- Physical Attack Tolerance is the system's ability to protect its critical capabilities from harm due to physical attacks.

- Threat Tolerance is the system's ability to detect and respond to threats (e.g., an aircraft detecting a missile lock and jettisoning flares).

- Capacity.

- Excessive Load Tolerance is the system's ability to protect its critical capabilities from harm due to excessive loads (e.g., transactions, commands, and messages).

- Longevity.

As the ages of a system's physical components reach or exceed their design limits, the probability of their failure increases. A system requiring a long operational lifespan without maintenance can avoid disruptions by incorporating longevity controls. For example, a spacecraft can include radiation-hardened hardware and implement subsystem hibernation by selectively turning off unused subsystems.

- Excessive Age Tolerance is the system's ability to detect, respond to, or recover from physical component ages exceeding their design limits and any resulting harm to assets.

- Interoperability.

- Faulty Communications Tolerance is the system's ability to protect its critical capabilities from harm due to lost or degraded communication with external systems.
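As one concrete illustration of the tolerances listed above, excessive load tolerance is often approached with load shedding: when demand exceeds capacity, non-critical work is dropped so that critical capabilities keep running. The sketch below assumes a simple bounded queue. The `Request` and `LoadShedder` names, the `critical` flag, and the eviction policy are hypothetical choices made for the example, not a design taken from this post.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    request_id: int
    critical: bool   # True if the request serves a critical capability


class LoadShedder:
    """Bounded queue that sheds non-critical requests under excessive load."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.queue = deque()
        self.shed = []           # requests dropped to protect critical work

    def submit(self, request: Request) -> bool:
        """Queue a request; return False if it had to be shed."""
        if len(self.queue) < self.capacity:
            self.queue.append(request)
            return True
        if request.critical:
            # Queue is full: evict the most recently queued non-critical request.
            for queued in reversed(self.queue):
                if not queued.critical:
                    self.queue.remove(queued)
                    self.shed.append(queued)
                    self.queue.append(request)
                    return True
        # Either the request is non-critical or nothing can be evicted.
        self.shed.append(request)
        return False


shedder = LoadShedder(capacity=2)
incoming = [
    Request(1, False), Request(2, False), Request(3, True),
    Request(4, False), Request(5, True), Request(6, True),
]
accepted = [shedder.submit(r) for r in incoming]
print(accepted)                                  # [True, True, True, False, True, False]
print([r.request_id for r in shedder.queue])     # [3, 5]
```

The last request shows the limit of the approach: once the queue holds only critical work, even a critical request is shed, so capacity for critical capabilities still has to be sized for the expected worst case.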

Resilience in Terms of Other Quality Attributes

As a quality attribute, system resilience is influenced by other quality attributes. In addition to its subordinate quality attributes discussed above, resilience is also influenced by:

- Adaptability. A system that can adapt itself (e.g., by restructuring, reconfiguring, load balancing, and spinning up additional virtual machines or containers) to changing conditions might be able to respond to and recover from adverse events and conditions as well as from any harm they might cause.

- Availability. A system with high availability is typically able to rapidly recover from adverse events. A resilient system needs to maintain the availability of its critical capabilities/services during adverse conditions and despite the occurrence of adverse events.

- Maintainability. A system is somewhat more resilient in terms of recoverability if it supports corrective maintenance. A system is considered more resilient if it is able to repair itself rather than relying on a human maintainer.

- Performance. High levels of resilience in terms of detection can decrease performance in terms of throughput and response time, which may be unacceptable for hard real-time cyber-physical systems.

- Reliability. Low levels of reliability lead to numerous faults and failures, which require higher levels of resilience (detection, response, and recovery) related to fault and failure tolerance. A resilient system needs to maintain the reliability of its critical capabilities/services during adverse conditions and despite the occurrence of adverse events.

- Reparability. A system that can repair itself (e.g., by automatically replacing certain subsystems or line replaceable units) can better recover after an adverse event.
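The interplay among availability, reliability, and recovery described in the list above can be made concrete with a commonly used steady-state approximation, availability = MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to repair (or recover). This formula and the numbers below are supplied for illustration and are not taken from the post; the function name is hypothetical.

```python
def steady_state_availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Approximate availability as MTBF / (MTBF + MTTR)."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR must be non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Same reliability (MTBF), different recovery speed (MTTR); values are assumed.
slow_recovery = steady_state_availability(mtbf_hours=1000.0, mttr_hours=10.0)
fast_recovery = steady_state_availability(mtbf_hours=1000.0, mttr_hours=1.0)

print(f"{slow_recovery:.4f}")  # 0.9901
print(f"{fast_recovery:.4f}")  # 0.9990
```

Under this approximation, a system that fails just as often but recovers ten times faster is noticeably more available, which is one way to see why rapid recovery links resilience to availability and reparability.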

Wrapping Up and Looking Ahead

This post has clarified how system resilience relates to other closely related quality attributes. The third post in this series will cover system resilience requirements, including their subtypes with examples. The fourth post will cover resilience features that support the detection of, reaction to, and recovery from adverse events and conditions.
