Selecting Measurement Data for Software Assurance Practices

Measuring the software assurance of a product as it is developed and delivered to function in a specific system context involves assembling carefully chosen metrics. These metrics should demonstrate a range of behaviors to confirm confidence that the product functions as intended and is free of vulnerabilities.

The Software Assurance Framework (SAF) is a collection of cybersecurity practices that programs can apply across the acquisition lifecycle and supply chain to promote the desired assurance behaviors. The SAF can be used to assess an acquisition program's current cybersecurity practices and chart a course for improvement, ultimately reducing the cybersecurity risk of deployed software-reliant systems. Metrics enable programs to determine how effectively they are performing the cybersecurity practices identified in the SAF to address software assurance. In this blog post, I demonstrate how a program can select measurement data for each SAF practice to monitor and manage the progress in establishing confidence for software assurance.

The Challenges of Measuring Software Assurance

Measuring software assurance is a hard problem that presents two main challenges. The first challenge is evaluating whether a product's assembled requirements define the appropriate behavior. The second challenge is to confirm that the completed product, as built, fully satisfies the specifications for use under realistic conditions.

Determining assurance for the second challenge is an incremental process applied across the lifecycle. There are many lifecycle approaches, but, in a broad sense, some form of requirements, design, construction, and test is performed to define what is wanted, enable its construction, and confirm its completion. Many metrics are used to evaluate parts of these activities in isolation, but establishing confidence for software assurance requires considering the fully integrated solution to establish overall sufficiency.

An additional complexity for software assurance is to recognize that software is never defect free, and that up to 5% of the unaddressed defects are vulnerabilities. According to Jones and Bonsignour, the average defect level in the U.S. is 0.75 defects per function point or 6,000 per million lines of code (MLOC) for a high-level language. Very good levels would be 600 to 1,000 defects per MLOC, and exceptional levels would be below 600 defects per MLOC.
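To make these figures concrete, the short sketch below applies the 5% upper bound to the defect densities quoted above. The 2 MLOC system size and the function name are hypothetical, and the sketch assumes that every defect counted in the density figure is still unaddressed, so the result is a rough ceiling rather than a prediction.

```python
# Defect densities (defects per MLOC) quoted above from Jones and Bonsignour.
DEFECTS_PER_MLOC = {
    "average": 6000,
    "very good (upper end)": 1000,
    "exceptional (upper end)": 600,
}

# "Up to 5%" of unaddressed defects are vulnerabilities, so this is an upper bound.
MAX_VULNERABILITY_SHARE = 0.05


def max_expected_vulnerabilities(size_mloc: float, defects_per_mloc: float) -> float:
    """Return an upper-bound estimate of vulnerabilities in a code base.

    Assumes (for illustration only) that all counted defects remain unaddressed.
    """
    return size_mloc * defects_per_mloc * MAX_VULNERABILITY_SHARE


# Hypothetical system size of 2 MLOC.
for level, density in DEFECTS_PER_MLOC.items():
    estimate = max_expected_vulnerabilities(2.0, density)
    print(f"{level}: up to {estimate:.0f} vulnerabilities in 2 MLOC")
```

Even at the exceptional level, the estimate is well above zero, which is why the question that follows matters.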

Since software cannot always function perfectly as intended, how can confidence for software assurance be established?

One option is to use measures that establish reasonable confidence that security is sufficient for the operational context. Assurance measures are not absolutes, but information can be collected that indicates whether key aspects of security have been sufficiently addressed throughout the lifecycle to establish confidence that assurance is sufficient for operational needs.

At the start of development, much about the operational context remains undefined, and there is only a general knowledge of the operational and security risks that might arise, as well as the security behavior that is desired when the system is deployed. This vision provides only a limited basis for establishing confidence in the behavior of the delivered system.

Over the development lifecycle, as the details of the software and operational context incrementally take shape, it is possible, with well-selected measurements, to incrementally increase confidence and eventually confirm that the delivered system will achieve the level of software assurance desired. When acquiring a product, if it is not possible to conduct measurement directly, the vendor should be contracted to provide data that shows product and process confidence. Independent verification and validation should also be performed to confirm the vendor's information.

Software assurance must be engineered into the design of a software-intensive system. Designing in software assurance entails more than just identifying defects and security vulnerabilities toward the end of the lifecycle (reacting); it also requires evaluating how system requirements and the engineering decisions made during design contribute to vulnerabilities. Many known attacks are the result of poor acquisition and development practices.

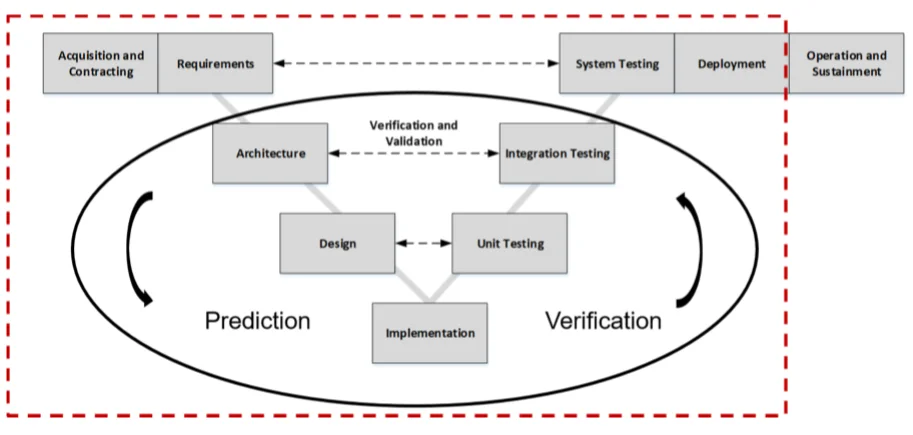

This approach to software assurance depends on establishing measures for managing software faults across the full acquisition lifecycle. It also requires increased attention to earlier lifecycle steps, which anticipate results and consider the verification side as shown in Figure 1. Many of these steps can be performed iteratively with opportunities in each cycle to identify assurance limitations and confirm results.

Figure 1: Lifecycle Measures

Selecting Measurement Data for Software Assurance Practices

The Goal/Question/Metric (GQM) paradigm can be used to establish a link between the software assurance target and the engineering practices that should support the target. The GQM approach was developed in the 1980s as a mechanism for structuring metrics and is a well-recognized and widely used metrics approach.

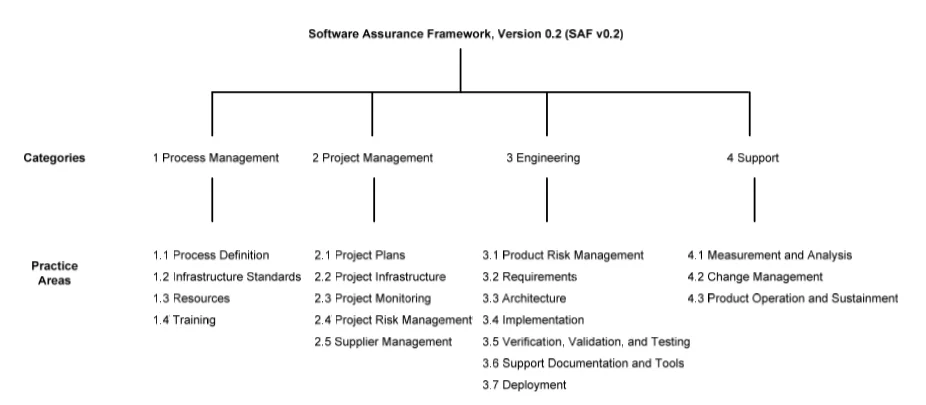

The SAF documents practices for process management, program management, engineering, and support. For any given software assurance target, there are GQM questions that can be linked to each practice area and individual practice to help identify potential evidence. The SAF provides practices as a starting point for a program, based on the SEI's expertise in software assurance, cybersecurity engineering, and risk management. Each organization must tailor the practices to support its specific software assurance target--possibly modifying the questions for each relevant software assurance practice--and select a starting set of metrics for evidence that is worth the time and effort needed to collect it.

The following example using this approach shows how practices in each area can be used to provide evidence in support of a software assurance target.

Example Software Assurance Target and Relevant SAF Practices

Consider the following software assurance target: Supply software to the warfighter with acceptable software risk. To meet this software assurance target, two sub-goals are needed (based on the definition of software assurance):

- Sub-Goal 1: Supply software to the warfighter that functions in the intended manner. (Since this is the primary focus of every program, and volumes of material are published about it, this sub-goal does not need to be further elaborated.)

- Sub-Goal 2: Supply software to the warfighter with a minimal number of exploitable vulnerabilities. (The remainder of this section provides a way to address this sub-goal.)

Figure 2: Software Assurance Framework

Figure 2 above shows the SAF's structure of practice areas. Using the SAF, the following high-level questions should be asked to address sub-goal 2: Supply software to the warfighter with a minimal number of exploitable vulnerabilities. We provide more detailed questions for each of these framework categories in our technical note, Exploring the Use of Metrics for Software Assurance.

- Process Management: Do process management activities help minimize the potential for exploitable software vulnerabilities?

- Program Management: Do program management activities help minimize the potential for exploitable software vulnerabilities?

- Engineering: Do engineering activities minimize the potential for exploitable software vulnerabilities?

- Support: Do support activities help minimize the potential for exploitable software vulnerabilities?

There are many possible metrics that could provide indicators of how well each practice in each practice area is addressing its assigned responsibility for meeting the goal. The tables in Appendices B-E of the SEI technical note provide metric options to consider when addressing the questions for the practice areas.
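One way to keep the link between goal, questions, and metrics visible is to record it as a small data structure. The sketch below is only an illustration of the GQM idea: the class and field names are hypothetical, and the two metrics attached to the Engineering question are examples taken from later in this post, not a recommended set.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    practice_area: str
    text: str
    metrics: list[str] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: list[Question] = field(default_factory=list)

    def questions_without_metrics(self) -> list[Question]:
        """Return questions that have no metric selected yet."""
        return [q for q in self.questions if not q.metrics]


sub_goal_2 = Goal(
    statement=(
        "Supply software to the warfighter with a minimal number "
        "of exploitable vulnerabilities."
    ),
    questions=[
        Question(
            "Process Management",
            "Do process management activities help minimize the potential "
            "for exploitable software vulnerabilities?",
        ),
        Question(
            "Program Management",
            "Do program management activities help minimize the potential "
            "for exploitable software vulnerabilities?",
        ),
        Question(
            "Engineering",
            "Do engineering activities minimize the potential "
            "for exploitable software vulnerabilities?",
            # Example metrics only; each program selects its own.
            metrics=[
                "percentage of security requirements tested",
                "percentage of software evaluated with tools and peer review",
            ],
        ),
        Question(
            "Support",
            "Do support activities help minimize the potential "
            "for exploitable software vulnerabilities?",
        ),
    ],
)

# Practice areas that still need evidence selected.
for question in sub_goal_2.questions_without_metrics():
    print(f"No metrics selected yet for: {question.practice_area}")
```

A structure like this makes gaps obvious: any question with an empty metric list is a practice area for which the program has not yet chosen evidence.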

Selecting Evidence for Software Assurance Practices

A reasonable starting point for software assurance measurement is with practices that the organization understands and is already addressing. Consider the following example.

The DoD requires a program protection plan, and evidence could be collected using metrics for engineering practices (see Figure 2, practice group 3) that show how a program is handling program protection.

In Engineering practice area 3.2 Requirements, data can be collected to provide a basis for completing the program protection plan. Relevant software assurance data can come from requirements that include

- the attack surface

- weaknesses resulting from the analysis of the attack surface, such as a threat model for the system

In Engineering practice area 3.3 Architecture, data is collected to show that requirements can be addressed. This data might include

- the results of an expert review by those with security expertise to determine the security effectiveness of the architecture

- attack paths identified and mapped to security controls

- security controls mapped to weaknesses identified in the threat modeling activities in practice 3.2

In Engineering practice area 3.4 Implementation, data can be provided from activities, such as code scanning, to show how weaknesses are identified and removed. This data might include

- results from static and dynamic tools and related code updates

- the percentage of software evaluated with tools and peer review

In Engineering practice area 3.5 Verification, Validation, and Testing, data can be collected to determine that requirements have been confirmed, and the following evidence would be useful:

- percentage of security requirements tested (total number of security requirements and MLOC)

- code exercised in testing (MLOC)

- code surface tested (% of code exercised)

Each selected metric must have a process that establishes how data is collected, analyzed, and evaluated.
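As a minimal sketch of what such a process might compute for the verification evidence listed above, the example below derives the three measures from raw counts. The record layout, field names, and sample values are hypothetical; a real program would pull these counts from its own requirements and test tooling.

```python
from dataclasses import dataclass


@dataclass
class VerificationData:
    security_requirements_total: int
    security_requirements_tested: int
    code_total_mloc: float
    code_exercised_mloc: float


def percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole, rejecting an empty baseline."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


def verification_metrics(data: VerificationData) -> dict[str, float]:
    """Compute the verification evidence measures from collected counts."""
    return {
        "security_requirements_tested_pct": percent(
            data.security_requirements_tested, data.security_requirements_total
        ),
        "code_exercised_mloc": data.code_exercised_mloc,
        "code_surface_tested_pct": percent(
            data.code_exercised_mloc, data.code_total_mloc
        ),
    }


# Hypothetical sample: 30 of 40 security requirements tested,
# 1.5 of 2.0 MLOC exercised by tests.
sample = VerificationData(40, 30, 2.0, 1.5)
for name, value in verification_metrics(sample).items():
    print(f"{name}: {value:.1f}")
```

Reporting the raw totals alongside each percentage, as the list above suggests, keeps a high percentage on a small baseline from being misread.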

Finding Metrics Data in Available Documentation

For each SAF practice, a range of outputs (e.g., documents, presentations, dashboards) is typically created. The form of an output may vary based on the lifecycle in use. An output may be provided at multiple points in a lifecycle with increased content specificity. Available outputs can be evaluated and tuned to include the desired measurement data.

In Engineering practice area 3.2 Requirements, the SAF includes the following practice:

A security risk assessment is an engineering-based security risk analysis that includes the attack surface (those aspects of the system that are exposed to an external agent) and abuse/misuse cases (potential weaknesses associated with the attack surface that could lead to a compromise). This activity may also be referred to as threat modeling.

A security risk assessment produces outputs whose specificity varies by lifecycle phase. Initial risk assessment results might include only that the planned use of a commercial database manager raises a specific vulnerability risk that should be addressed during detailed design. The risk assessment associated with that detailed design should recommend specific mitigations to the development team. Testing plans should cover high-priority weaknesses and proposed mitigations.

Examples of useful data related to measuring this practice and that support the software assurance target appear in the following list:

- recommended reductions in the attack surface to simplify development and reduce security risks

- prioritized list of software security risks

- prioritized list of design weaknesses

- prioritized list of controls/mitigations

- mapping of controls/mitigations to design weaknesses

- prioritized list of issues to be addressed in test, validation, and verification

The outputs of a security risk assessment depend on the experience of the participants as well as constraints imposed by cost and schedule. An analysis of this data should check for missed security weaknesses and poor mitigation analysis, both of which increase operational risks and future expenses.
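One simple check on this data is to compare the prioritized list of design weaknesses against the mapping of controls/mitigations and flag any weakness that no control addresses. The sketch below assumes both outputs are available as plain lists and dictionaries; the identifiers (W1, C1, and so on) and the function name are hypothetical.

```python
def unmitigated_weaknesses(
    prioritized_weaknesses: list[str],
    control_map: dict[str, list[str]],
) -> list[str]:
    """Return weaknesses that no control addresses, keeping priority order."""
    covered = {w for targets in control_map.values() for w in targets}
    return [w for w in prioritized_weaknesses if w not in covered]


# Hypothetical prioritized list of design weaknesses, highest priority first.
weaknesses = ["W1", "W2", "W3"]

# Hypothetical mapping of controls/mitigations to the weaknesses they address.
controls = {
    "C1": ["W1"],
    "C2": ["W1", "W2"],
}

for weakness in unmitigated_weaknesses(weaknesses, controls):
    print(f"No control mapped to weakness {weakness}")
```

A check like this finds only gaps in the recorded mapping. It cannot reveal weaknesses that the assessment never identified, which still depends on the experience of the participants.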

Looking Ahead

A key area of concern for software assurance is the supply chain for a product. When contracting for specific functions in a software system, acquirers must know how to communicate to the vendors for those functions what they want and how the vendors can meet the acquirers' needs for assurance. Furthermore, supply chains may involve more than a single tier of vendors--a contractor may buy software parts from another contractor, further complicating the assurance challenge. We are exploring measures relevant to software assurance for the supply chain.

Commercial components used in a final product may not have been intended for the functionality or activity for which they are being used in that product. In a product composed from commercial components, determining that the product does what it is supposed to do--and does not do what it is not supposed to do--can be complicated. Context is important, and care must be taken when components of a system are used in a context for which those components were not designed: The disparity between a generically built component and the context in which that component is implemented can introduce unintended risk. In such cases, which are becoming more common, acquirers must determine their level of concern about these risks, and metrics will be of great value.

Additional Resources

Read the SEI technical note, Exploring the Use of Metrics for Software Assurance.

Read the Prototype Software Assurance Framework (SAF): Introduction and Overview.

Learn about SEI software assurance curricula.

Learn about the book, Cyber Security Engineering: A Practical Approach for Systems and Software Assurance, by Nancy R. Mead and Carol Woody.
