Play it Again Sam! or How I Learned to Love Large Language Models

“AI will not replace you. A person using AI will. ”

-Santiago @svpino

In our work as advisors in software and AI engineering, we are often asked about the efficacy of large language model (LLM) tools like Copilot, GhostWriter, or Tabnine. Recent innovation in the building and curation of LLMs demonstrates powerful tools for the manipulation of text. By finding patterns in large bodies of text, these models can predict the next word to write sentences and paragraphs of coherent content. The concern surrounding these tools is strong – from New York schools banning the use of ChatGPT to Stack Overflow and Reddit banning answers and art generated from LLMs. While many applications are strictly limited to writing text, a few applications explore the patterns to work on code, as well. The hype surrounding these applications ranges from adoration (“I’ve rebuilt my workflow around these tools”) to fear, uncertainty, and doubt (“LLMs are going to take my job”). In the Communications of the ACM, Matt Welsh goes so far as to declare we’ve reached “The End of Programming.” While integrated development environments have had code generation and automation tools for years, in this post I will explore what new advancements in AI and LLMs mean for software development.

Overwhelming Need and the Rise of the Citizen Developer

First, a little context. The need for software expertise still outstrips the workforce available. Demand for high quality senior software engineers is increasing. The U.S. Bureau of Labor Statistics estimates growth to be 25 percent annually from 2021 to 2031. While the end of 2022 saw large layoffs and closures of tech companies, the demand for software is not slacking. As Marc Andreessen famously wrote in 2011, “Software is eating the world.” We are still seeing disruptions of many industries by innovations in software. There are new opportunities for innovation and disruption in every industry led by improvements in software. Gartner recently introduced the term citizen developer:

an employee who creates application capabilities for consumption by themselves or others, using tools that are not actively forbidden by IT or business units. A citizen developer is a persona, not a title or targeted role.

Citizen developers are non-engineers leveraging low/no code environments to develop new workflows or processes from components developed by more traditional, professional developers.

Enter Large Language Models

Large language models are neural networks trained on large datasets of text data, from terabytes to petabytes of information from the Internet. These data sets range from collections of online communities, such as Reddit, Wikipedia, and Github, to curated collections of well-understood reference materials. Using the Transformer architecture, the new models can build relationships between different pieces of data, learning connections between words and concepts. Using these relationships, LLMs are able to generate material based on different types of prompts. LLMs take inputs and can find related words or concepts and sentences that they return as output to the user. The following examples were generated with ChatGPT:

LLMs are not really generating new thoughts as much as recalling what they have seen before in the semantic space. Rather than thinking of LLMs as oracles that produce content from the ether, it may be helpful to think of LLMs as sophisticated search engines that can recall and synthesize solutions from those they have seen in their training data sets. One way to think of generative models is that they take as input the training data and produce results that are the “missing” members of the training set.

For example, imagine you found a deck of playing cards with suits of horseshoes, rainbows, unicorns, and moons. If a few of the cards were missing, you would most likely be able to fill in the blanks from your knowledge of card decks. The LLM handles this process with massive amounts of statistics based on massive amounts of related data, allowing some synthesis of new code based on things the model might not have been trained on but can infer from the training data.

How to Leverage LLMs

In many modern integrated development environments (IDEs), code completion allows programners to start typing out keywords or functions and complete the rest of the section with the function call or skeletons to customize for your needs. LLM tools like CoPilot allow users to start writing code and provide a smarter completion mechanism, taking natural language prompts written as comments and completing the snippet or function with what they predict to be relevant code. For example, ChatGPT can respond to the prompt “write me a UIList example in Swift” with a code example. Code generation like this can be more tailorable than many of the other no-code solutions being published. These tools can be powerful in workforce development, providing feedback for workers who are inexperienced or who lack programming skills. I think about this in the context of no-code tools—the solutions provided by LLMs aren’t perfect, but they are more expressive and more likely to provide reasonable inline explanations of intent.

ChatGPT lowers the entry point for trying a new language or comparing solutions on languages by filling in gaps in knowledge. A junior engineer or inexperienced programmer could use an LLM in the same way they might approach a busy experienced engineer mentor: asking for examples to get pointed in the right direction. As an experiment, I asked ChatGPT to explain a Python program I wrote for the Advent of Code two years ago. It gave me some prose. I asked for inline comments, and it gave me back a line-by-line explanation for what it was doing. Not all of the explanations were clear, but neither are all the explanations offered by engineers. Compared to Google or Stack Overflow, ChatGPT has more affordances for clarifying questions. By asking it to provide more details or to target different audiences (“Explain this concept to a 7-year-old, to a 17-year-old, and to a graduate student”), a user can get ChatGPT to present the material in a way that allows better understanding of the code generated. This approach can allow new programmers or citizen developers to work fast and, if interested, dig deeper into why the program works the way it does.

Trusting LLMs

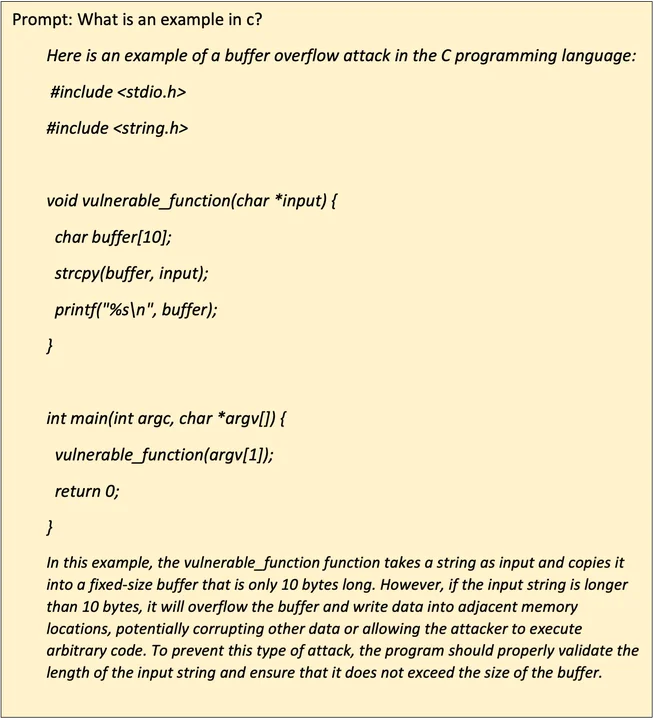

In recent news we’ve seen an explosion of interest in LLMs via the new Open AI beta for ChatGPT. ChatGPT is based off of the GPT 3.5 model that has been enhanced with reinforcement learning to provide better quality responses to prompts. People have demonstrated using ChatGPT for everything from product pitches to poetry. In experiments with a colleague, we asked ChatGPT to explain buffer overflow attacks and provide examples. ChatGPT provided a good description of buffer overflows and an example of C code that was vulnerable to that attack. We then asked it to rewrite the description for a 7-year-old. The description was still reasonably accurate and did a nice job of explaining the concept without too many advanced concepts. For fun we tried to push it further –

This result was interesting but gave us a little pause. A haiku is traditionally three lines in a five/seven/five pattern: five syllables in the first line, seven in the second, and five in the last. It turns out that while the output looked like a haiku it was subtly wrong. A closer look reveals the poem returned six syllables in the first line and eight in the second, easy to overlook for readers not well versed in haiku, but still wrong. Let’s return to how the LLMs are trained. An LLM is trained on a large dataset and builds relationships between what it is trained on. It hasn’t been instructed on how to build a haiku: It has plenty of data labeled as haiku, but very little in the way of labeling syllables on each line. Through observation, the LLM has learned that haikus use three lines and short sentences, but it doesn’t understand the formal definition.

Similar shortcomings highlight the fact that LLMs mostly recall information from their datasets: Recent articles from Stanford and New York University point out that LLM based solutions generate insecure code in many examples. This is not surprising; many examples and tutorials on the Internet are written in an insecure way to convey instruction to the reader, providing an understandable example if not a secure one. To train a model that generates secure code, we need to provide models with a large corpus of secure code. As experts will attest, a lot of code shipped today is insecure. Reaching human level productivity with secure code is a fairly low bar because humans are demonstrably poor at writing secure code. There are people who copy and paste directly from Stack Overflow without thinking about the implications.

Where We Go from Here: Calibrated Trust

It is important to remember that we are just getting started with LLMs. As we iterate through the first versions and learn their limitations, we can design systems that build on early strengths and mitigate or guard against early weaknesses. In “Examining Zero-Shot Vulnerability Repair with Large Language Models” the authors investigated vulnerability repair with LLMs. They were able to demonstrate that with a combination of models they were able to successfully repair vulnerable code in multiple scenarios. Examples are starting to appear where developers are using LLM tools to develop their unit tests.

In the last 40 years, the software industry and academia have created tools and practices that help experienced and inexperienced programmers today generate robust, secure, and maintainable code. We have code reviews, static analysis tools, secure coding practices, and guidelines. All of these tools can be used by a team that is looking to adopt an LLM into their practices. Software engineering practices that support effective programming—defining good requirements, sharing understanding across teams, and managing for the tradeoffs of “-ities” (quality, security, maintainability, etc.)—are still hard problems that require understanding of context, not just repetition of previously written code. LLMs should be treated with calibrated trust. Continuing to do code reviews, apply fuzz testing, or using good software engineering techniques will help adopters of these tools use them successfully and appropriately.

More By The Author

More In Artificial Intelligence Engineering

PUBLISHED IN

Get updates on our latest work.

Sign up to have the latest post sent to your inbox weekly.

Subscribe Get our RSS feedMore In Artificial Intelligence Engineering

Get updates on our latest work.

Each week, our researchers write about the latest in software engineering, cybersecurity and artificial intelligence. Sign up to get the latest post sent to your inbox the day it's published.

Subscribe Get our RSS feed