Machine Learning in Cybersecurity

We recently published a report outlining the questions that decision makers who want to use artificial intelligence (AI) or machine learning (ML) tools in cybersecurity should ask ML practitioners in order to prepare adequately for implementing those tools. My coauthors are Joshua Fallon, April Galyardt, Angela Horneman, Leigh Metcalf, and Edward Stoner. Our goal with the report is chiefly educational: we hope it can act as an ML-specific Heilmeier catechism and serve as a checklist that helps decision makers pursue and acquire good ML tools for cybersecurity.

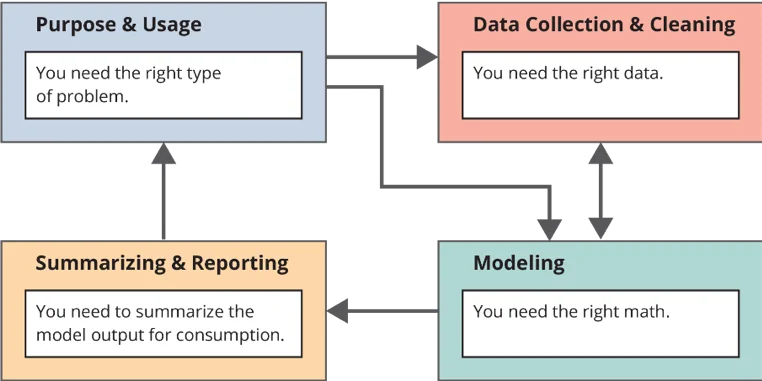

Our technical report provides an overview of the relevant parts of an ML lifecycle--selecting the right problem, the right data, and the right math and summarizing the model output for consumption--as well as questions that relate to those areas of focus.

As the federally funded research and development center (FFRDC) known for AI engineering, and with its long experience in cybersecurity, the SEI has the expertise to advise you--the decision makers adopting these tools--on evaluating the adequacy of ML tools applied to cybersecurity. To that end, we structured the report around the questions you should ask about ML tools. We chose this framing, rather than proposing a detailed guide of how to build an ML system in cybersecurity, because we want to enable you to learn what a good tool looks like.

When decision makers have difficulty telling good tools from bad ones, the market will usually stop providing good tools. This conclusion is not new; it follows from Akerlof's classic analysis of information asymmetry in the market for lemons.[1] One equally classic solution to this market asymmetry is to give buyers better information. The questions we suggest you ask of ML providers should help you collect that better information. Here's a preview of those questions and why they matter.

1. What is your topic of interest?

The aim of this question is to establish the goal of your investigation and define the problem you want to solve. A good goal should address specific cybersecurity topics--such as the impact or implementation of a specific security policy--that will guide how you apply the tool. An answer to this question helps establish the purpose of the tool so that you can evaluate the other questions.

2. What information will help you address the topic of interest?

The aim of this question is to establish what information you will use to drive your investigation. A good response should demonstrate that the input data includes or encodes features that allow meaningful assessment, such as prediction or classification. The intent is to make sure that the right kind of information is available in the first place, that the available data is sufficient in breadth and quality, and that you can access and use the information ethically.

3. How do you anticipate that an ML tool will address the topic of interest?

This question seeks to address three aspects of an ML tool: (1) a description of the tool's applicability based on your goals; (2) a description of what sort of results to expect; and (3) a description of the kind of explanation that the tool's output, or the suppliers and developers themselves, will provide to show how the tool works and to ensure it meets your needs. You must choose the ML tool carefully so that it outputs appropriate information at a standard high enough for you to evaluate it.

4. How will you protect the ML system against attacks in an adversarial, cybersecurity environment?

The purpose of this question is to evaluate the defensive disposition of the ML system. A response should describe what protections the tool itself has, as well as how the data it uses and produces is protected during both training and operation. Additionally, the response should address the measures in the environment surrounding the ML tool that keep that environment resilient if an adversary successfully attacks the tool.

5. How will you find and mitigate unintended outputs and effects?

This question addresses considerations for handling sensitive information carefully to avoid introducing both errors and bias into an ML system. Failing to answer this question adequately can lead to the perpetuation of unwanted biases, harm to stakeholders, or misleading results. A good response should consider the following five principles: representation, protection, stewardship, authenticity, and resiliency.

6. Can you evaluate the ML tool adequately, accounting for errors?

A good response to this question should plan an evaluation that assesses all of the following items in detail: data sources; design of the study; appropriate measures of success; understanding the target population; analysis to explore missing evidence; and the expected generalizability of results. Our discussion of this question in the report provides three important examples of common errors decision makers should avoid, and suggests further resources for comprehensive evaluation. But in broad strokes, we give advice for you to make sure evaluations measure what you actually care about improving. Otherwise what you care about is much less likely to improve.
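As a small illustration of why the choice of success measure matters, here is a minimal sketch in Python. It is not taken from the report: the two tools, the event counts, and the names `Counts` and `metrics` are hypothetical, chosen only to show how a single headline number such as accuracy can favor a tool that detects nothing when malicious events are rare.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion-matrix counts for one tool on one labeled data set."""
    tp: int  # malicious events correctly flagged
    fp: int  # benign events incorrectly flagged
    fn: int  # malicious events missed
    tn: int  # benign events correctly ignored


def metrics(c: Counts) -> dict:
    """Compute accuracy, precision, and recall, guarding against division by zero."""
    total = c.tp + c.fp + c.fn + c.tn
    flagged = c.tp + c.fp
    malicious = c.tp + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": c.tp / flagged if flagged else 0.0,
        "recall": c.tp / malicious if malicious else 0.0,
    }


# Hypothetical data set: 10,000 events, of which only 10 are malicious.
silent_tool = Counts(tp=0, fp=0, fn=10, tn=9990)   # never raises an alert
noisy_tool = Counts(tp=8, fp=50, fn=2, tn=9940)    # finds 8 of 10, with 50 false alarms

for name, counts in [("silent", silent_tool), ("noisy", noisy_tool)]:
    print(name, metrics(counts))
```

In this made-up scenario the silent tool scores higher on accuracy even though it finds no malicious events at all, while the noisy tool finds most of them at the cost of false alarms that analysts must triage. Which trade-off is acceptable depends on what you actually care about improving, so that is what the evaluation should measure.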

7. What alternative tools have you considered? What are the advantages and disadvantages of each one?

A fair answer to these questions should compare multiple types of tools. You should consider cost of development, maintenance, and operation. Since these are cybersecurity tools, you should consider how an adversary might respond to them.

Read the technical report for all the details, and you'll be better equipped to detect and avoid lemons when selecting ML tools for your cybersecurity needs. If you have comments about the report or suggestions for how to expand this work, post a comment and we'll happily start a conversation. For those of you who build ML tools in cybersecurity, we hope to write a future guide to building a system, support, and documentation that adequately answer these questions.

[1] Akerlof, George A. "The Market for 'Lemons': Quality Uncertainty and the Market Mechanism." Uncertainty in Economics. Academic Press, 1978. 235-251.

