Assuring Cyber-Physical Systems in an Age of Rising Autonomy

As developers continue to build greater autonomy into cyber-physical systems (CPSs), such as unmanned aerial vehicles (UAVs) and automobiles, these systems aggregate data from an increasing number of sensors. The systems use this data for control and for otherwise acting in their operational environments. However, more sensors not only create more data, and more precise data, but also require a complex architecture to correctly transfer and process multiple data streams. This increase in complexity brings additional challenges for functional verification and validation (V&V), a greater potential for faults (errors and failures), and a larger attack surface. What’s more, CPSs often cannot distinguish faults from attacks.

To address these challenges, researchers from the SEI and Georgia Tech collaborated on an effort to map the problem space and develop proposals for solving the challenges of increasing sensor data in CPSs. This SEI Blog post provides a summary of our work, which comprised research threads addressing four subcomponents of the problem:

- addressing error propagation induced by learning components

- mapping fault and attack scenarios to the corresponding detection mechanisms

- defining a security index of the ability to detect tampering based on the monitoring of specific physical parameters

- determining the impact of clock offset on the precision of reinforcement learning (RL)

Later I will describe these research threads, which are part of a larger body of research we call Safety Analysis and Fault Detection Isolation and Recovery (SAFIR) Synthesis for Time-Sensitive Cyber-Physical Systems. First, let’s take a closer look at the problem space and the challenges we are working to overcome.

More Data, More Problems

CPS developers want more and better data so their systems can make better decisions and more precise evaluations of their operational environments. To achieve those goals, developers add more sensors to their systems and improve the ability of these sensors to gather more data. However, feeding the system more data has several implications: more data means the system must execute more, and more complex, computations. Consequently, these data-enhanced systems need more powerful central processing units (CPUs).

More powerful CPUs introduce various concerns, such as energy management and system reliability. Larger CPUs also raise questions about electrical demand and electromagnetic compatibility (i.e., the ability of the system to withstand electromagnetic disturbances, such as storms or adversarial interference).

The addition of new sensors means systems need to aggregate more data streams. This need drives greater architectural complexity. Moreover, the data streams must be synchronized. For instance, the information obtained from the left side of an autonomous automobile must arrive at the same time as information coming from the right side.
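The synchronization requirement can be made concrete with a small check. The sketch below assumes that each sensor stamps its samples with a time in seconds on a shared clock and that the fusion stage accepts a left/right pair only if the two timestamps differ by at most a tolerance. The names `Sample`, `pair_samples`, and `max_skew` are hypothetical, and a real system would also need the sensor clocks themselves to be kept synchronized.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    timestamp: float  # seconds on a clock shared by both sensors (assumption)
    value: float

def pair_samples(left, right, max_skew):
    """Pair each left sample with the nearest-in-time right sample.

    Both lists must be sorted by timestamp. A pair is kept only if the two
    timestamps differ by at most max_skew seconds; otherwise the left sample
    is dropped instead of being fused with stale data from the other side.
    """
    pairs = []
    j = 0
    for sample in left:
        # Advance through `right` while the next sample is at least as close.
        while (j + 1 < len(right)
               and abs(right[j + 1].timestamp - sample.timestamp)
               <= abs(right[j].timestamp - sample.timestamp)):
            j += 1
        if right and abs(right[j].timestamp - sample.timestamp) <= max_skew:
            pairs.append((sample, right[j]))
    return pairs

left = [Sample(0.00, 1.0), Sample(0.10, 1.1), Sample(0.20, 1.2)]
right = [Sample(0.001, 2.0), Sample(0.13, 2.1), Sample(0.201, 2.2)]
pairs = pair_samples(left, right, max_skew=0.005)
```

In this example the middle left-side sample has no right-side partner within 5 ms, so only two fused pairs are produced.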

More sensors, more data, and a more complex architecture also raise challenges concerning the safety, security, and performance of these systems, whose interaction with the physical world raises the stakes. CPS developers face heightened pressure to ensure that the data on which their systems rely is accurate, that it arrives on schedule, and that an external actor has not tampered with it.

A Question of Trust

As developers strive to imbue CPSs with greater autonomy, one of the biggest hurdles is gaining the trust of users who depend on these systems to operate safely and securely. For example, consider something as simple as the air pressure sensor in your car’s tires. In the past, we had to check the tires physically with an air pressure gauge, often after we had already driven miles on dangerously underinflated tires. The sensors we have today let us know in real time when we need to add air. Over time, we have come to depend on these sensors. However, the moment we get a false alert telling us our front driver’s side tire is underinflated, we lose trust in the ability of the sensors to do their job.

Now, consider a similar system in which the sensors pass their information wirelessly, and a flat-tire warning triggers a safety operation that prevents the car from starting. A malicious actor learns how to generate a false alert from a spot across the parking lot or merely jams your system. Your tires are fine, your car is fine, but your car’s sensors, either detecting a simulated problem or entirely incapacitated, will not let you start the car. Extend this scenario to autonomous systems operating in airplanes, public transportation systems, or large manufacturing facilities, and trust in autonomous CPSs becomes even more critical.

As these examples demonstrate, CPSs are susceptible to both internal faults and external attacks from malicious adversaries. Examples of the latter include the Maroochy Shire incident involving sewage services in Australia in 2000, the Stuxnet attack on Iran’s uranium-enrichment facilities in 2010, and the keylogger virus that infected a U.S. drone fleet in 2011.

Trust is critical, and it lies at the heart of the work we have been doing with Georgia Tech. It is a multidisciplinary problem. Ultimately, what developers seek to deliver is not just a piece of hardware or software, but a cyber-physical system comprising both hardware and software. Developers need an assurance case, a convincing argument that can be understood by an external party. The assurance case must demonstrate that the way the system was engineered and tested is consistent with the underlying theories used to gather evidence supporting the safety and security of the system. Making such an assurance case possible was a key part of the work described in the following sections.

Addressing Error Propagation Induced by Learning Components

As I noted above, autonomous CPSs are complex platforms that operate in both the physical and cyber domains. They employ a mix of different learning components that inform specific artificial intelligence (AI) functions. Learning components gather data about the environment and the system to help the system make corrections and improve its performance.

To achieve the level of autonomy needed by CPSs when operating in uncertain or adversarial environments, CPSs make use of learning algorithms. These algorithms use data collected by the system—before or during runtime—to enable decision making without a human in the loop. The learning process itself, however, is not without problems, and errors can be introduced by stochastic faults, malicious activity, or human error.

Many groups are working on the problem of verifying learning components. In most cases, they are interested in the correctness of the learning component itself. This line of research aims to produce an integration-ready component that has been verified against stochastic properties, such as probabilistic guarantees on its behavior. By contrast, the work we conducted in this research thread examines the problem of integrating a learning-enabled component within a system.

For example, we ask, How can we define the architecture of the system so that we can fence off any learning-enabled component and assess that the data it receives is correct and arrives at the right time? Furthermore, Can we assess that the system outputs can be controlled for some notion of correctness? For instance, Does the acceleration commanded for my car keep it within the speed limit? This kind of fencing is necessary to determine whether we can trust that the system as a whole is correct (or, at least, not too far off), because verifying a learning component while it is running is not possible today.
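A minimal sketch of such a fence, assuming a learning component that proposes an acceleration command from the current speed: the wrapper refuses to act on stale inputs and clamps the proposed command so that the one-step predicted speed stays between zero and the speed limit. The class name `Fence`, the staleness bound, the zero-acceleration fallback, and the one-step speed prediction are all illustrative assumptions, not the architecture developed in this work.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Fence:
    """Hypothetical runtime guard around a learning-enabled controller."""
    policy: Callable[[float], float]  # learned map: speed (m/s) -> acceleration (m/s^2)
    speed_limit: float                # m/s
    max_input_age: float              # s; older sensor data counts as stale
    dt: float                         # s; control period

    def command(self, speed: float, sample_time: float, now: float) -> float:
        # Input check: do not act on data that did not arrive on time.
        if now - sample_time > self.max_input_age:
            return 0.0  # assumed safe fallback: hold the current speed
        accel = self.policy(speed)
        # Output check: keep the one-step predicted speed in [0, speed_limit].
        max_accel = (self.speed_limit - speed) / self.dt
        min_accel = -speed / self.dt
        return min(max(accel, min_accel), max_accel)

fence = Fence(policy=lambda v: 20.0, speed_limit=30.0, max_input_age=0.05, dt=0.1)
```

The clamp bounds what the learned policy can do without requiring the policy itself to be verified, which is the point of fencing it off.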

To address these questions, we described the various errors that can appear in CPS components and affect the learning process. We also provided theoretical tools that can be used to verify the presence of such errors. Our aim was to create a framework that operators of CPSs can use to assess their operation when using data-driven learning components. To do so, we adopted a divide-and-conquer approach that used the Architecture Analysis & Design Language (AADL) to represent the system’s components and their interconnections, yielding a modular environment into which different detection and learning mechanisms can be included. This approach supports full model-based development, including system specification, analysis, system tuning, integration, and upgrade over the lifecycle.

We used a UAV system to illustrate how errors propagate through system components when adversaries attack the learning processes, and to obtain safety tolerance thresholds. We focused only on specific learning algorithms and detection mechanisms. We then investigated their convergence properties, as well as the errors that can disrupt those properties.
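Tracing how an error introduced at one component reaches others can be sketched as reachability over the component graph. The component names and connections below describe a hypothetical UAV pipeline, and the rule that an error passes unchanged along every connection is a simplifying assumption. AADL’s error model annex instead lets designers specify how each component propagates, transforms, or contains errors. The `contain` parameter is a hypothetical stand-in for a fence that absorbs errors at a component.

```python
from collections import deque

# Hypothetical UAV data path: each component lists the components it feeds.
CONNECTIONS = {
    "gps": ["state_estimator"],
    "imu": ["state_estimator"],
    "state_estimator": ["rl_controller", "logger"],
    "rl_controller": ["motor_driver"],
    "motor_driver": [],
    "logger": [],
}

def propagate(source, connections, contain=frozenset()):
    """Map each component reached by an error from `source` to its hop count.

    Assumes an error passes unchanged along every outgoing connection, except
    that components in `contain` absorb it: they are reached but do not
    forward it, modeling a fence around them.
    """
    hops = {source: 0}
    queue = deque([source])
    while queue:
        comp = queue.popleft()
        if comp in contain:
            continue
        for nxt in connections.get(comp, []):
            if nxt not in hops:
                hops[nxt] = hops[comp] + 1
                queue.append(nxt)
    return hops

unfenced = propagate("gps", CONNECTIONS)
fenced = propagate("gps", CONNECTIONS, contain={"rl_controller"})
```

A GPS error reaches the motor driver in three hops in the unfenced case. When the learning-enabled controller is fenced, the error stops there.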

The results of this investigation provide the starting point for a CPS designer’s guide to utilizing AADL for system-level analysis, tuning, and upgrade in a modular fashion. This guide could comprehensively describe the different errors in the learning processes during system operation. With these descriptions, the designer can automatically verify the proper operation of the CPS by quantifying marginal errors and integrating the system into AADL to evaluate critical properties in all lifecycle phases. To learn more about our approach, I encourage you to read the paper A Modular Approach to Verification of Learning Components in Cyber-Physical Systems.

Mapping Fault and Attack Scenarios to Corresponding Detection Mechanisms

UAVs have become more susceptible to both stochastic faults (stemming from faults occurring on the different components comprising the system) and malicious attacks that compromise either the physical components (sensors, actuators, airframe, etc.) or the software coordinating their operation. Different research communities, using an assortment of tools that are often incompatible with each other, have been investigating the causes and effects of faults that occur in UAVs. In this research thread, we sought to identify the core properties and elements of these approaches to decompose them and thereby enable designers of UAV systems to consider all the different results on faults and the associated detection techniques via an integrated algorithmic approach. In other words, if your system is under attack, how do you select the best mechanism for detecting that attack?

The issue of faults and attacks on UAVs has been widely studied, and a number of taxonomies have been proposed to help engineers design mitigation strategies for various attacks. In our view, however, these taxonomies were insufficient. We proposed a decision process made of two elements: first, a mapping from fault or attack scenarios to abstract error types, and second, a survey of detection mechanisms based on the abstract error types they help detect. Using this approach, designers could use both elements to select a detection mechanism to protect the system.

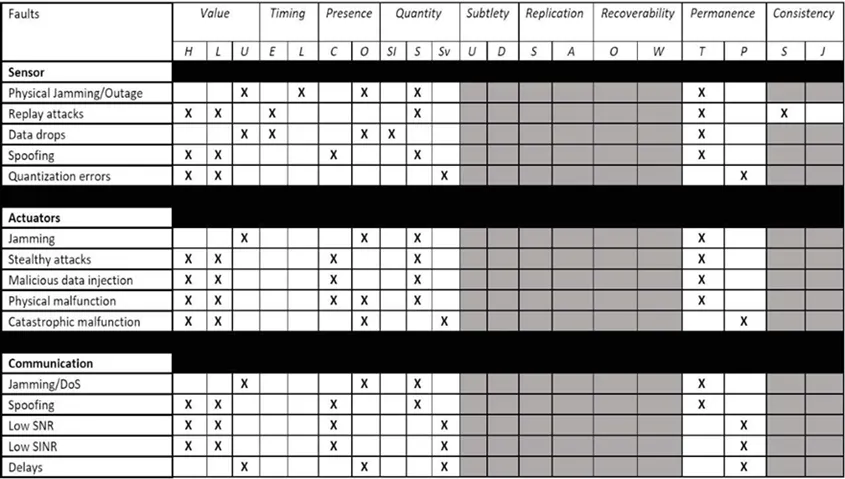

To classify the attacks on UAVs, we created a list of component compromises, focusing on those that reside at the intersection of the physical and the digital realms. The list is far from comprehensive, but it is adequate for representing the major qualities that describe the effects of those attacks on the system. We contextualized the list in terms of attacks and faults on sensing, actuating, and communication components, as well as more complex attacks that target multiple elements to cause system-wide errors:

| Sensor Attacks and Faults | Actuator Attacks and Faults | Communications Attacks and Faults |
|---|---|---|

Using this list of attacks and faults on UAVs and UAV platforms, we next identified their properties in terms of the taxonomy criteria introduced by the SEI’s Sam Procter and Peter Feiler in The AADL Error Library: An Operationalized Taxonomy of System Errors. Their taxonomy provides a set of guide words that describe errors by class: value, timing, quantity, and so on. Table 1 presents a subset of those classes as they apply to UAV faults and attacks.

We then created a taxonomy of detection mechanisms that included statistics-based, sample-based, and Bellman-based intrusion detection systems. We related these mechanisms to the attacks and faults taxonomy. Using these examples, we developed a decision-making process and illustrated it with a scenario involving a UAV system. In this scenario, the vehicle undertook a mission in which it faced a high probability of being subject to an acoustic injection attack.
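As an illustration of the statistics-based family, the sketch below implements a one-sided CUSUM test on sensor residuals (the difference between a measurement and the value a model predicts). CUSUM is a standard change-detection technique chosen here only as a generic example, not as one of the mechanisms from the paper. The `drift` and `threshold` values are hypothetical tuning parameters.

```python
def cusum(residuals, drift, threshold):
    """One-sided CUSUM on residual magnitudes.

    Returns the index of the first alarm, or None if no alarm is raised.
    `drift` is subtracted at every step so that noise-level residuals do not
    accumulate; `threshold` sets how much accumulated evidence raises an alarm.
    """
    s = 0.0
    for k, r in enumerate(residuals):
        # Accumulate evidence that residuals exceed what noise alone explains.
        s = max(0.0, s + abs(r) - drift)
        if s > threshold:
            return k
    return None

# Small residuals, then a sustained offset such as an injected sensor bias.
alarm_at = cusum([0.1, -0.2, 0.1, 0.0, 1.0, 1.2, 0.9, 1.1], drift=0.5, threshold=1.0)
```

Because evidence accumulates across samples, the detector tolerates isolated noisy readings but reacts within a couple of samples to a persistent offset.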

In this scenario, an analyst would consult the table containing the attack properties and choose the abstract attack class of the acoustic injection from the attack taxonomy. Given the nature of the attack, the appropriate choice would be the sensor spoofing attack. Based on the properties given in the attack taxonomy table, the analyst would be able to identify the key characteristics of the attack. Cross-referencing these properties with the characteristics that each intrusion detection mechanism can detect then yields the subset of mechanisms that will be effective in environments with this type of attack.
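The cross-referencing step can be sketched as set operations. Each attack class maps to a set of abstract error classes, and each detection mechanism covers its own set of error classes. The candidates are the mechanisms whose coverage includes every error class the attack induces. The labels follow the guide-word style of the AADL Error Library (value, timing, quantity). However, the specific attack-to-error assignments and the detectors `detector_a` through `detector_c` are hypothetical placeholders, not the entries of Table 1 or of the paper’s survey.

```python
# Hypothetical mapping from abstract attack classes to the error classes they
# induce, using guide-word-style labels (value, timing, quantity).
ATTACK_ERRORS = {
    "sensor_spoofing": {"value"},
    "sensor_jamming": {"quantity", "timing"},
    "replay": {"value", "timing"},
}

# Hypothetical detectors and the error classes each one can flag.
DETECTOR_COVERAGE = {
    "detector_a": {"value"},
    "detector_b": {"value", "timing"},
    "detector_c": {"quantity"},
}

def select_detectors(attack_class):
    """Return detectors whose coverage includes every error the attack induces."""
    needed = ATTACK_ERRORS[attack_class]
    return sorted(name for name, covered in DETECTOR_COVERAGE.items()
                  if needed <= covered)

# Acoustic injection is classified as a sensor spoofing attack (see text).
candidates = select_detectors("sensor_spoofing")
```

In this sketch, an empty result (as for the jamming class) would indicate that no single mechanism covers the attack. In that case, combinations of mechanisms would have to be considered.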

In this research thread, we created a tool that can help UAV operators select the appropriate detection mechanisms for their systems. Future work will focus on implementing the proposed taxonomy on a specific UAV platform, where the exact sources of the attacks and faults can be explicitly identified at a low architectural level. To learn more about our work on this research thread, I encourage you to read the paper Towards Intelligent Security for Unmanned Aerial Vehicles: A Taxonomy of Attacks, Faults, and Detection Mechanisms.

Defining a Security Index of the Ability to Detect Tampering by Monitoring Specific Physical Parameters

CPSs have gradually become large scale and decentralized in recent years, and they rely increasingly on communication networks. This high-dimensional, decentralized structure increases their exposure to malicious attacks that can cause faults, failures, and even significant damage. Prior research has addressed the cost-efficient placement or allocation of actuators and sensors. However, most of these methods consider mainly controllability or observability properties and do not take security into account.

Motivated by this gap, in this research thread we examined how CPS security depends on potentially compromised actuators and sensors, focusing in particular on deriving a security measure under both actuator and sensor attacks. The topic of CPS security has received increasing attention recently, and different security indices have been developed. The first kind of security measure is based on reachability analysis, which quantifies the size of reachable sets (i.e., the sets of all states reachable by dynamical systems with admissible inputs). To date, however, little work has quantified reachable sets under malicious attacks and used the resulting security metrics to guide actuator and sensor selection. The second kind of security index is defined as the minimal number of actuators and/or sensors that attackers need to compromise without being detected.

In this research thread, we developed a generic actuator security index. We also proposed graph-theoretic conditions for computing the index with the help of maximum linking and the generic normal rank of the corresponding structured transfer function matrix. Our contribution here was twofold. We provided conditions for the existence of dynamical and perfect undetectability. In terms of perfect undetectability, we proposed a security index for discrete-time linear time-invariant (LTI) systems under actuator and sensor attacks. Then, we developed a graph-theoretic approach for structured systems that is used to compute the security index by solving a min-cut/max-flow problem. For a detailed presentation of this work, I encourage you to read the paper A Graph-Theoretic Security Index Based on Undetectability for Cyber-Physical Systems.
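The link between graph structure and rank can be illustrated with a classical result for structured systems. The generic rank of the transfer function matrix equals the size of a maximum linking, that is, the largest number of vertex-disjoint paths from input vertices to output vertices in the system graph. The sketch below computes a maximum linking as a max-flow problem, using Edmonds-Karp with each vertex split into an in/out pair of capacity 1. It shows only this building block on a hypothetical graph. It does not compute the security index itself, and the function names are illustrative.

```python
from collections import defaultdict, deque

def max_flow(cap, source, sink):
    """Edmonds-Karp: augment along shortest paths in the residual graph."""
    residual = defaultdict(lambda: defaultdict(int))
    for u, nbrs in cap.items():
        for v, c in nbrs.items():
            residual[u][v] += c
    flow = 0
    while True:
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow
        # Recover the augmenting path, then push its bottleneck capacity.
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(residual[a][b] for a, b in path)
        for a, b in path:
            residual[a][b] -= push
            residual[b][a] += push
        flow += push

def max_linking(edges, inputs, outputs):
    """Size of a maximum set of vertex-disjoint input-to-output paths."""
    cap = defaultdict(dict)
    vertices = {v for e in edges for v in e} | set(inputs) | set(outputs)
    for v in vertices:
        cap[(v, "in")][(v, "out")] = 1  # split vertex: use it at most once
    for a, b in edges:
        cap[(a, "out")][(b, "in")] = 1
    for u in inputs:
        cap["source"][(u, "in")] = 1
    for y in outputs:
        cap[(y, "out")]["sink"] = 1
    return max_flow(cap, "source", "sink")

# Hypothetical structured system: inputs u1, u2; states x1..x3; outputs y1, y2.
edges = [("u1", "x1"), ("u2", "x2"), ("x1", "x3"),
         ("x2", "x3"), ("x3", "y1"), ("x1", "y2")]
rank_full = max_linking(edges, ["u1", "u2"], ["y1", "y2"])
rank_cut = max_linking(edges[:-1], ["u1", "u2"], ["y1", "y2"])
```

Removing the edge from x1 to y2 forces every input-to-output path through x3, so the maximum linking (and hence the generic rank) drops from 2 to 1.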

Determining the Impact of Clock Offset on the Precision of Reinforcement Learning

A major challenge in autonomous CPSs is integrating more sensors and data without degrading performance. CPSs such as cars, ships, and planes all have timing constraints, and missing them can be catastrophic. Complicating matters, timing acts in two directions: timing to react to external events and timing to engage with humans to ensure their protection. These conditions raise a number of challenges because timing, accuracy, and precision are key to ensuring trust in a system.

Methods for developing safe-by-design systems have mostly focused on the quality of the information in the network (i.e., on mitigating signals corrupted either by stochastic faults or by adversarial manipulation). However, the decentralized nature of a CPS requires methods that also address timing discrepancies among its components. Timing issues have been studied in control systems to assess their robustness against such faults, yet the effects of timing issues on learning mechanisms are rarely considered.

Motivated by this fact, our work on this research thread investigated the behavior of a system with reinforcement learning (RL) capabilities under clock offsets. We focused on the derivation of guarantees of convergence for the corresponding learning algorithm, given that the CPS suffers from discrepancies in the control and measurement timestamps. In particular, we investigated the effect of sensor-actuator clock offsets on RL-enabled CPSs. We considered an off-policy RL algorithm that receives data from the system’s sensors and actuators and uses them to approximate a desired optimal control policy.

However, owing to timing mismatches, the control-state data obtained from these system components were inconsistent, which raised questions about the robustness of RL. After an extensive analysis, we showed that RL does retain its robustness in an epsilon-delta sense. Provided that the sensor–actuator clock offsets are not arbitrarily large and that the behavioral control input satisfies a Lipschitz continuity condition, RL converges epsilon-close to the desired optimal control policy. We conducted a case study on a two-link manipulator, which clarified and verified our theoretical findings. For a complete discussion of this work, I encourage you to read the paper Impact of Sensor and Actuator Clock Offsets on Reinforcement Learning.
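A single inequality shows why the Lipschitz condition matters. As an illustrative assumption, let the behavioral input $u$ be $L$-Lipschitz in time. Suppose also that a clock offset $\tau$ causes the learner to pair a state measured at time $t$ with the input value $u(t+\tau)$ instead of the input $u(t)$ that was actually applied. The recorded input is then off by at most:

```latex
\| u(t+\tau) - u(t) \| \le L \, |\tau|
```

The corruption of every state-input pair the learner sees therefore shrinks linearly with the offset. This inequality is only the intuition behind the epsilon-delta result. A convergence guarantee must also account for how such per-sample mismatches accumulate through the learning iterations, which this inequality does not address.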

Building a Chain of Trust in CPS Architecture

In conducting this research, the SEI has made several contributions to the field of CPS architecture. First, we extended AADL with a formal semantics that we can use not only to simulate a model very precisely but also to verify properties of AADL models. That work enables us to reason about the architecture of CPSs. One outcome of this reasoning relevant to assuring autonomous CPSs was the idea of establishing a “fence” around vulnerable components. However, we still needed to perform fault detection to make sure that inputs are neither incorrect nor tampered with and that outputs are valid.

Fault detection is where our collaborators from Georgia Tech made key contributions. They have done great work on statistics-based techniques for detecting faults and developed strategies that use reinforcement learning to build fault detection mechanisms. These mechanisms look for specific patterns that represent either a cyber attack or a fault in the system. They have also addressed the question of recursion in situations in which a learning component learns from another learning component (which may itself be wrong). Kyriakos Vamvoudakis of Georgia Tech’s Daniel Guggenheim School of Aerospace Engineering worked out how to use architecture patterns to address those questions by expanding the fence around those components. This work helped us implement and test fault detection, isolation, and recovery mechanisms on use-case missions that we implemented on a UAV platform.

We have learned that if you do not have a good CPS architecture (one that is modular, meets the desired properties, and isolates faults), you must have a big fence. You have to do more processing to verify the system and gain trust. On the other hand, if you have an architecture that you can verify is amenable to these fault-tolerance techniques, then you can add fault-isolation mechanisms without degrading performance. It is a tradeoff.

One of the things we have been working on in this project is a collection of design patterns, known in the safety community, for detecting and mitigating faults, for example by using a simplex architecture to switch from one version of a component to another. We need to characterize the aforementioned tradeoff for each of those patterns. For instance, patterns differ in the number of redundant components, and more redundancy is more costly because it requires more CPU, more wiring, and more energy. Some patterns also take more time to make a decision or to switch from nominal mode to degraded mode. We are evaluating all those patterns, taking into consideration the cost to implement them in terms of resources (mostly hardware resources) and the timing aspect (the time from detecting an event to reconfiguring the system). These practical considerations are what we want to address: not just a formal semantics of AADL, which is nice for computer scientists, but also this tradeoff analysis, made possible by a careful evaluation of every pattern documented in the literature.
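The simplex pattern can be sketched as a decision module. The module forwards a high-performance controller’s command while the system stays inside a recoverable envelope and switches to a simpler, verified controller otherwise. In this sketch, the function `simplex_step`, the scalar plant model, both controllers, and the envelope bound are hypothetical. The one-step lookahead stands in for the recoverability analysis that a real simplex design would require.

```python
from typing import Callable, Tuple

Controller = Callable[[float], float]

def simplex_step(x: float,
                 advanced: Controller,
                 safe: Controller,
                 predict: Callable[[float, float], float],
                 envelope: float) -> Tuple[float, str]:
    """Return (command, mode) for state x under a simplex-style switch.

    advanced: unverified high-performance controller (e.g., learning-enabled)
    safe:     simple controller assumed verified to recover within the envelope
    predict:  plant model giving the next state for (state, command)
    envelope: bound on |x| within which the safe controller can recover
    """
    u = advanced(x)
    if abs(predict(x, u)) <= envelope:
        return u, "nominal"     # advanced command keeps the state recoverable
    return safe(x), "degraded"  # otherwise fall back to the safe controller

def predict(x: float, u: float) -> float:
    return x + 0.1 * u  # hypothetical scalar plant x[k+1] = x[k] + 0.1 u[k]

def aggressive(x: float) -> float:
    return 10.0  # stands in for a high-performance but unverified policy

def stabilizing(x: float) -> float:
    return -x  # simple controller that drives the state toward zero
```

This sketch checks only one step ahead and switches back as soon as the check passes. A practical design may add hysteresis, and the time spent on this check and on the switch is part of the timing cost discussed above.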

In future work, we want to address these larger questions:

- What can we do with models when we do model-based software engineering?

- How far can we go to build a toolbox so that designing a system can be supported by evidence during every phase?

- You want to build the architecture of a system, but can you make sense of a diagram?

- What can you say about the safety of the timing of the system?

The work is grounded in the vision of rigorous model-based systems engineering progressing from requirements to a model. Developers also need supporting evidence they can use to build a trust package for an external auditor, to demonstrate that the system they designed works. Ultimately, our goal is to build a chain of trust across all of a CPS’s engineering artifacts.

Additional Resources

- Read the paper A Modular Approach to Verification of Learning Components in Cyber-Physical Systems by L. Zhai, A. Kanellopoulos, F. Fotiadis, K.G. Vamvoudakis, and J. Hugues.

- Read the paper Towards Intelligent Security for Unmanned Aerial Vehicles: A Taxonomy of Attacks, Faults, and Detection Mechanisms by L. Zhai, A. Kanellopoulos, F. Fotiadis, K.G. Vamvoudakis, and J. Hugues.

- Read the paper A Graph-Theoretic Security Index Based on Undetectability for Cyber-Physical Systems by L. Zhai, K.G. Vamvoudakis, and J. Hugues.

- Read the paper Impact of Sensor and Actuator Clock Offsets on Reinforcement Learning by F. Fotiadis, A. Kanellopoulos, K.G. Vamvoudakis, and J. Hugues.
