Anti-Tamper for Software Components

PUBLISHED IN

Secure Development

The U.S. military uses anti-tamper (AT) technologies to keep data about critical military systems from being acquired by adversaries. AT practices are intended to prevent reverse engineering of software components for exploitation. With AT technology in place, critical military information remains secret.

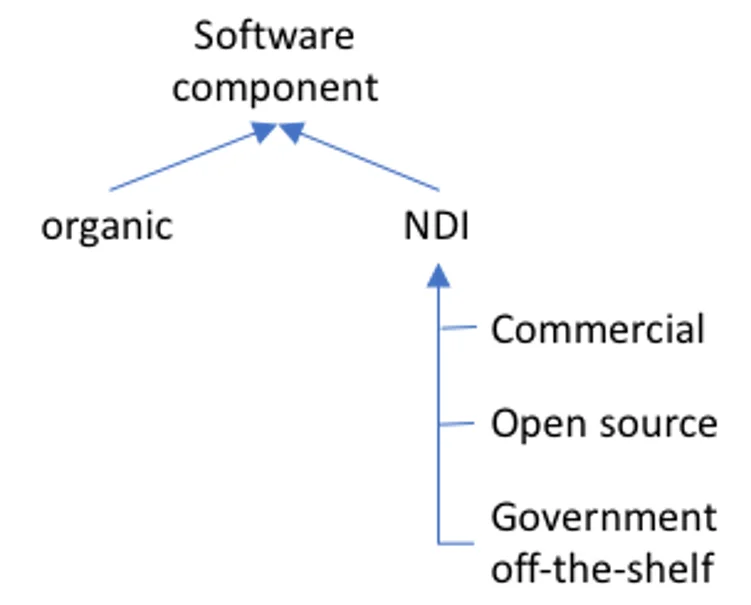

With the pervasiveness of software in systems today, it can be daunting for systems integrators to identify which software components are most in need of being protected by AT. Software can come from a variety of sources: It can be developed organically, bought commercially, downloaded from open-source repositories, and even obtained from government-controlled repositories (which can be a clearinghouse for some of these other sources). In this blog post, I discuss how to identify software components within systems that are in danger of being exploited and that should be protected by AT practices.

What Is Anti-Tamper?

AT is one aspect of a holistic approach to technology and program protection mandated by the U.S. government and outlined in DoDI 5000.83, released in July 2020. AT is specifically defined in DoDD 5200.47E, as follows:

Systems engineering activities intended to prevent or delay exploitation of critical program information (CPI) in U.S. defense systems in domestic and export configurations to impede counter-measure development, unintended technology transfer, or alteration of a system due to reverse engineering.

AT activities are intended to impede or stop a motivated adversary from gaining access to critical program information (CPI) in order to acquire knowledge, control, and the ability to exploit a system in a way that could harm U.S. interests. U.S. interests could be harmed by

- release of classified, sensitive, or proprietary data or information

- diminishing of capability

- change to the intended behavior of a U.S. defense system

There is a long history of adversaries in military conflict and in business seeking to take advantage of a competitor’s superiority or to find exploitable weaknesses. In the U.S., businesses are protected by intellectual-property laws. But such protection is of no value in a military conflict.

A prominent example of a release of classified information occurred in 1958, during the Second Taiwan Strait Crisis, when an AIM-9B Sidewinder air-to-air missile struck its target but did not explode as intended and unintentionally fell into the possession of the Soviet Union. The result, after reverse engineering of both the hardware and software components of the missile system, was the introduction by the Soviet Union of the AA-2 Atoll air-to-air missile (also known as the Vympel K-13). In 1999, Jacques Gansler, who was then under secretary of defense for acquisition and technology, issued a memorandum mandating use of AT techniques in military acquisition programs. Huber and Scott summarize the benefits of AT that were afforded by the Gansler memo:

- prevents or mitigates the unauthorized or inadvertent disclosure of U.S. technology, as well as its exploitation

- protects the U.S. warfighter from countermeasures development

- enables the consummation of foreign military sales with greater confidence that U.S. technologies will not be compromised

- reduces the burden on the taxpayer by helping to sustain U.S. technological advantages

That 1999 memo was superseded in 2015 by DoDD 5200.47E, which establishes specific AT policy to

- deter, impede, detect, and respond to the exploitation of CPI based on the consequence of CPI compromise and the anticipated system exposure through the application of cost-effective, risk-based protections, to include AT when warranted, in accordance with DoDI 5200.39

- support the sale or transfer of certain defense articles to foreign governments and their participating contractors while preserving U.S. and foreign investments in CPI through the implementation of AT, in accordance with DoDI 5000.02T and DoDI 5200.39.

Software Components and Anti-Tamper

AT, by policy, is focused on protecting CPI, such as documentation, requirements, plans, designs, and implementations (see Figure 1 below). Those implementations, driven by requirements and designs, are reflected in both hardware and software components. Such software components can come from a variety of sources. For simplicity in this discussion, those components can be characterized as being either organic or non-developmental items (NDI). An organic software component is one that is wholly created during development, then integrated, tested, and deployed into operation to meet mission requirements. NDI are also configured, integrated, tested, and deployed into operation alongside the organic software components.

This bifurcation illustrates a key difference when thinking about AT: Organic software components are unique and not readily available outside the system in which they operate. In other words, the system has sole possession of organic components. Conversely, NDI are obtained from outside sources (e.g., commercial and open-source software) and can be readily accessed by anyone and shared within the government domain (e.g., government off-the-shelf).

The case of the Sidewinder tamper, although dated, illustrates two important lessons that AT policy addresses: Software is a source of CPI for an adversary, and physical access to (or the ability to access) the software is a tamper threat vector to countermeasure development, unintended technology transfer, or alteration of a system due to reverse engineering. Since the Soviets had possession of the missile, it was possible for them to reverse engineer the software, thus exposing CPI. This CPI was subsequently reused, repurposed, and transferred to the AA-2 Atoll system, resulting in an unintentional transfer of technology.

The concept of access today is different. Donald Firesmith has noted that AT is a special case of physical possession. Access today differs in that it is no longer necessary to have physical access to the software by way of some physical storage medium (e.g., firmware, USB drive, weapon system’s hardware component), because possession can occur through remote tampering. Remote tampering can come from anywhere and from any motivated and capable adversarial agent.

This all-too-common occurrence illustrates that although a U.S. defense system is in sole possession of organic software components, some may contain CPI. For this reason, systems-engineering activities must identify not only what elements of the system are CPI, but where and how that CPI is implemented and deployed in software components. These activities can ensure that AT techniques are used for those software components to deter, impede, detect, and respond (as stated in DoDD 5200.47E) to the tamper adversary.

Illustrated Tamper Case, CPI and Software Components

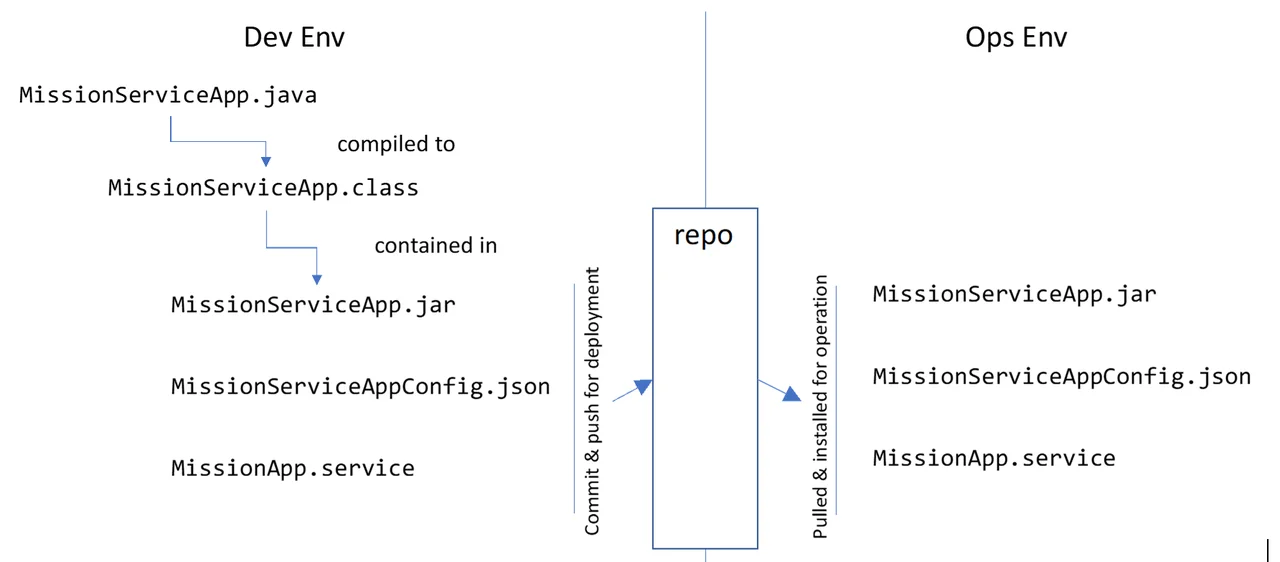

Consider a mission service, MissionServiceApp in Figure 2 below, that organically implements a business or mission-critical function for a connected, operational system. That service, once ready, is deployed into operation (in the Ops Env) as three pieces in one operational software component:

- MissionServiceApp.jar: the implementation of the algorithm(s) in the mission service

- MissionServiceAppConfig.json: tunable parameters that are used to control the mission service during runtime

- MissionApp.service: a system file used to automatically control the startup and shutdown of the mission service

For the sake of completeness, note that the mission service depends on two other software components to operate: the operating system itself (e.g., Red Hat SELinux) and the Java Runtime Environment (JRE), both of which are hardened and locked down in accordance with compliance mandates. Moreover, there is one component, repo, that is used to promote the tested and approved mission service from the Dev Env to the Ops Env. For the purpose of this illustration, any communication from the repo to the Ops Env is on a unidirectional network (i.e., one-way diode) and is beyond the scope of this discussion.

Note that there are, at a minimum, two other pieces of the mission-service software component:

- MissionServiceApp.java: This source file contains the actual, organically developed mission service written in the Java language. The mission service, in this form, is not deployed to the Ops Env and remains in the Dev Env.

- MissionServiceApp.class: This binary file contains the compiled form of the mission service that is deployed to the Ops Env, but only as it is contained within the aforementioned MissionServiceApp.jar.

The Tamper Attack Surfaces

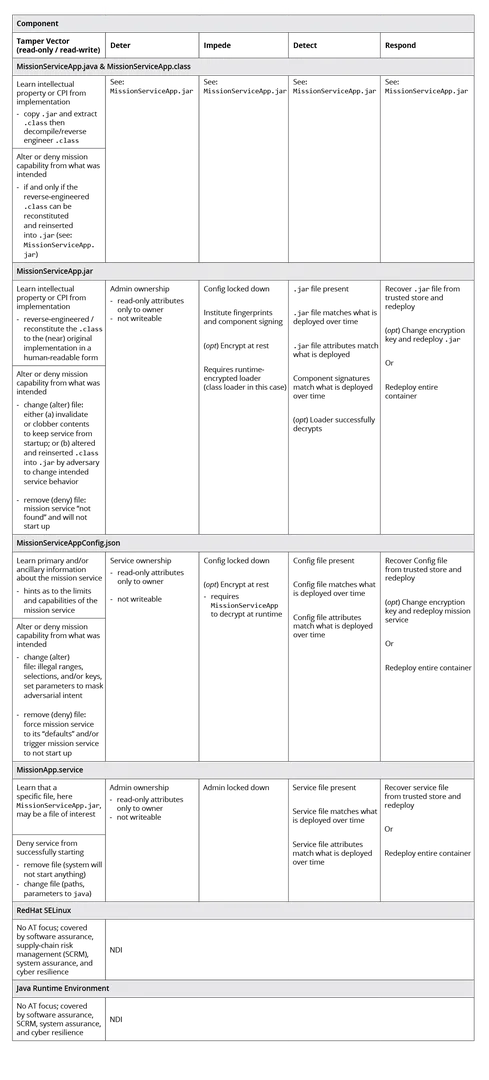

Table 1 describes the mission-service software components in an illustrated example, examining the various tamper attack vectors potentially available to an adversary either through physical access to those software components or through remote tampering on them. The example considers two specific vectors:

- read-only—The adversarial agent is able to “see” the bits at rest in the file or object associated with the mission service. Although not discussed here, further analysis could be applied to the bits in motion (e.g., being delivered across a network or being executed in memory/cache/processor).

- read-write—The adversarial agent is able to do everything along the read-only tamper vector, but is also able to change the bits at rest in the file or object associated with the mission service. (As with the read-only vector, I don’t discuss bits in motion here.)

Given each potential vector, read-only and read-write, potential AT activities are given as examples of what could be done to prevent or delay exploitation of the software component by an adversary using DoDD 5200.47E’s deter, impede, detect, and respond framework. It is important to recall that the goal of an AT activity is to deny the adversary the ability to “(develop) countermeasure development, (achieve) unintended technology transfer, or (effect) alteration of a system.”
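As a rough illustration of the two vectors, the sketch below maps POSIX-style file permissions onto them. It is not part of the original analysis: the class name `TamperExposure`, the use of the "other" permission bits as a stand-in for an adversary's access, and the permission strings assigned to the three deployed pieces are all assumptions made for the example.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical helper: maps file permissions onto the two tamper vectors. */
final class TamperExposure {

    enum Vector { NONE, READ_ONLY, READ_WRITE }

    /**
     * Classifies what an account outside the owner and group could do.
     * Assumption: the "other" permission bits stand in for the adversary's access.
     */
    static Vector classify(Set<PosixFilePermission> perms) {
        boolean canRead = perms.contains(PosixFilePermission.OTHERS_READ);
        boolean canWrite = perms.contains(PosixFilePermission.OTHERS_WRITE);
        if (canWrite) {
            // Conservative simplification: any write access counts as read-write.
            return Vector.READ_WRITE;
        }
        return canRead ? Vector.READ_ONLY : Vector.NONE;
    }

    public static void main(String[] args) {
        // Hypothetical permission strings for the three deployed pieces.
        Map<String, String> deployed = new LinkedHashMap<>();
        deployed.put("MissionServiceApp.jar", "rwxr-xr-x");
        deployed.put("MissionServiceAppConfig.json", "rw-rw-rw-");
        deployed.put("MissionApp.service", "rw-r-----");

        deployed.forEach((name, mode) ->
            System.out.println(name + " -> "
                + classify(PosixFilePermissions.fromString(mode))));
    }
}
```

A real assessment would also have to account for ownership, group membership, access-control lists, and remote access paths, none of which this sketch models.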

Note that this table is not intended as an exhaustive list of all possible mitigations or AT techniques, which I will cover in a future blog post, but rather as an illustration of the kind of engineering reasoning that is possible when considering an AT plan.

Two dependent mission-service software components are not a focus of AT activities in Table 1 because these two components, Red Hat SELinux and the Java Runtime Environment, are NDI (i.e., commercially available to anyone). As such, the U.S. defense systems that deploy the MissionServiceApp are not in sole possession of these NDI software components. An adversarial agent could therefore simply acquire these NDI components through alternative commercial channels (i.e., purchase or download from an open-source marketplace). After adversaries have these components, they are free to explore reverse engineering and learn of the software components’ workings and weaknesses by means of visible and invisible penetration testing, commonly referred to as “white-box and black-box” style activities. Such activities are common for threat actors seeking zero-day vulnerabilities.

There are important considerations regarding NDIs. While the NDIs themselves are available to the general public from publicly available sources (e.g., commercial marketplace, open-source communities), any configuration files developed by a U.S. defense system, in protection of that system and in support of its mission, could be a target of an adversary. In the illustration in Table 1, one configuration file, MissionServiceAppConfig.json, was discussed for the organically developed MissionServiceApp software component. It is reasonable that configuration files for NDIs could also be developed by the defense system that are to remain as a sole possession of the U.S. defense system. If so, such configuration artifacts may fall under AT activities. For the MissionServiceApp and the two NDIs in the system in Table 1, two potential NDI configuration examples could be

- /etc/shadow: File within the operating system containing credentials for user account information, often the target of hackers to conduct offline password cracking

- Java keystore: Technology for storing public and private asymmetric key material, which is also a target of hackers for offline analysis

Threats and compromises of these configuration files (like MissionServiceAppConfig.json) could cause harm to the U.S. defense system employing the MissionServiceApp, resulting in unintended system behavior.

What Is CPI for the Mission Service?

Table 1 explores only the tamper attack surface of the mission system. It is still necessary to reason about what CPI is being employed or implemented within those organically developed software components that the mission-service system solely possesses. The engineering team performing this analysis can make a CPI determination using a variety of sources; primarily, these would be discussions with subject matter experts (SMEs) and the user community, as well as a review of the Program Protection Plan (PPP) and possibly an ancillary review of the Security Classification Guide (SCG).

Discussions among SMEs, user communities, and the technical engineers on a system are important. Technical engineers need to ensure that technical adversarial capabilities are communicated to non-technical communities so that risks can be managed properly.

For the purpose of this illustration, SMEs for the MissionServiceApp confirmed that the mission’s algorithm is CPI. Moreover, the engineering team informed the SMEs of the potential to reverse engineer that algorithm from the .jar file, to the .class file, and ultimately to a near human-readable form of the original .java implementation of the algorithm. The SMEs concurred that those forms of the implementation are CPI and must be protected through AT activities. It was clear that an adversary could not only copy the CPI and put it to use, but could also implement countermeasures.
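To show how little stands between read access and the first step of that reverse-engineering path, here is a minimal sketch that enumerates the contents of a jar using only the standard `java.util.jar` classes. The class name `JarInventory` and the in-memory stand-in jar are assumptions for the example; the entry body is a placeholder, not real bytecode.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarInputStream;
import java.util.jar.JarOutputStream;

/** Hypothetical helper: shows that read access is enough to inventory a jar. */
final class JarInventory {

    /** Lists the entry names of a jar supplied as raw bytes. */
    static List<String> listEntryNames(byte[] jarBytes) {
        List<String> names = new ArrayList<>();
        try (JarInputStream in = new JarInputStream(new ByteArrayInputStream(jarBytes))) {
            JarEntry entry;
            while ((entry = in.getNextJarEntry()) != null) {
                names.add(entry.getName());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return names;
    }

    /** Builds a stand-in jar in memory; the entry body is a placeholder. */
    static byte[] sampleJar() {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (JarOutputStream out = new JarOutputStream(buffer)) {
            out.putNextEntry(new JarEntry("MissionServiceApp.class"));
            // Only the class-file magic number, not a loadable class.
            out.write(new byte[] {(byte) 0xCA, (byte) 0xFE, (byte) 0xBA, (byte) 0xBE});
            out.closeEntry();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) {
        listEntryNames(sampleJar()).forEach(System.out::println);
    }
}
```

Once the .class entries are extracted, the JDK's own `javap -c` disassembler is one readily available way to inspect the bytecode, which is why the SMEs in this illustration treated every form of the implementation as CPI.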

The same discussion was held regarding the contents of the mission-app configuration file, MissionServiceAppConfig.json, which for this example (not shown) elaborates on an algorithm name, a range for thresholds, and a service key. The SMEs deemed that too many hints and too many pieces of a puzzle—a form of derivative classification—are evident and that this .json file should also be protected through AT activities. The SMEs concluded that an adversary, given the thresholds configured for the algorithm, could change the app’s behavior as a counter-measure. However, those contents could not cause unintended technology transfer.

Finally, for the remaining components (the MissionApp.service file and the two NDI), the discussion concluded that they are not to be protected through AT activities. The engineering team also confirmed that no configuration files for the NDIs were modified (beyond publicly available Security Technical Implementation Guide [STIG] requirements) for the MissionServiceApp, which supported that conclusion.

Anti-Tamper, Software Assurance, Supply-Chain Risk Management, System Assurance, and Cyber Resilience

For the MissionServiceApp in this illustration, some components were deemed to require AT activities and some were not. In either case, that does not mean there is nothing left to do. DoDI 5000.83 rightfully acknowledges that AT is only one of five engineering activities in a holistic approach to technology and program protection. Included in that list alongside AT are

- hardware and software assurance

- supply-chain risk management

- system assurance

- engineering of secure, cyber-resilient systems

There is overlap among these activities. For example, in Table 1, the Detect and Respond columns share techniques with software assurance that operationally ensure that what is running in operations is the same as what was deployed; in other words, that it has not been unintentionally or inexplicably changed outside of operational and maintenance activities. Similarly, if a detection of truly abnormal change is triggered, the response often is the same, such as to restore to a previously known configuration.
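The detect-and-respond overlap described above can be sketched with a digest comparison followed by a restore. This is a minimal sketch under stated assumptions, not a technique taken from Table 1: the class name `DeploymentCheck`, the choice of SHA-256, the in-memory byte arrays standing in for deployed files, and the sample configuration contents are all hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper: detect a changed artifact, then restore a known-good copy. */
final class DeploymentCheck {

    /** Returns the SHA-256 digest of the given bytes as lowercase hex. */
    static String sha256Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is unavailable", e);
        }
    }

    /** Detect: true when the deployed bytes no longer match the approved digest. */
    static boolean isChanged(byte[] deployed, String approvedHex) {
        return !sha256Hex(deployed).equals(approvedHex);
    }

    /** Respond: return the known-good copy when a change is detected. */
    static byte[] restoreIfChanged(byte[] deployed, byte[] knownGood, String approvedHex) {
        return isChanged(deployed, approvedHex) ? knownGood.clone() : deployed;
    }

    public static void main(String[] args) {
        // Hypothetical config contents; real artifacts would be read from disk.
        byte[] approved = "{\"threshold\": 5}".getBytes(StandardCharsets.UTF_8);
        byte[] tampered = "{\"threshold\": 9}".getBytes(StandardCharsets.UTF_8);
        String approvedDigest = sha256Hex(approved);

        System.out.println("changed? " + isChanged(tampered, approvedDigest));
        byte[] restored = restoreIfChanged(tampered, approved, approvedDigest);
        System.out.println("restored matches approved? "
            + !isChanged(restored, approvedDigest));
    }
}
```

The check is only as trustworthy as the stored digest and the known-good copy, so in practice both would need to be kept where an adversary with read-write access to the deployed artifact cannot also change them.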

AT is an additional layer of defense for U.S. systems that is intended to impede countermeasure development, unintended technology transfer, or alteration of a system due to reverse engineering whether those systems are in a domestic or export configuration. Even if a component of such systems does not fall under AT activities, it is a good practice to apply the other four engineering activities of technology and program protection.

Software is pervasive in all the systems in operation today. With so much software coming from so many sources, system developers and acquirers need to know how to identify which software components in any given system require AT techniques. In this post, I focused on one key attribute, sole possession of organically developed components. For those components, engineers, SMEs, and users need to understand the advantage an adversary might gain from acquiring them. They also need to understand the adversary’s capabilities to reverse engineer those components to change their behavior or to acquire a technical capability that the adversary previously did not have.

In a future blog post, I will go into greater detail about the role of AT techniques and an AT plan in the context of an overall program-protection plan.

Additional Resources

Read the SEI blog post, System Resilience: What Exactly Is It?

Read other SEI blog posts about system resilience.

Read SEI blog posts about software assurance.
