Aircraft Systems: Three Principles for Mitigating Complexity

This post is the first in a series introducing our research into software and system complexity and its impact on avionics.

On July 6, 2013, an Asiana Airlines Boeing 777 airplane flying from Seoul, South Korea, crashed on final approach into San Francisco International Airport. While 304 of the 307 passengers and crew members on board survived, almost 200 were injured (10 critically) and three young women died. The National Transportation Safety Board (NTSB) blamed the crash on the pilots, but also said "the complexity of the Boeing 777's auto throttle and auto flight director--two of the plane's key systems for controlling flight--contributed to the accident."

In a news report, acting NTSB chairman Christopher Hart stated that "The flight crew over-relied on automated systems that they did not fully understand." The NTSB report on the crash called for "reduced design complexity" and enhanced training on the airplane's autoflight system, among other remediations. Since complexity is a vague concept, it is important to determine exactly what it means in a particular setting. This blog post describes research that the Carnegie Mellon University Software Engineering Institute (SEI) is undertaking to address the complexity of aircraft systems and software.

The Growing Complexity of Aircraft Systems and Software

The growing complexity of aircraft systems and software may make it difficult to assess compliance with airworthiness standards and regulations. Systems are increasingly software-reliant and interconnected, making design, analysis, and evaluation harder than in the past. While new capabilities are welcome, they require more thorough validation and verification. Complexity also raises the risk that design flaws or defects leading to unsafe conditions go undiscovered and unresolved.

In 2014, the Federal Aviation Administration (FAA) awarded the SEI a two-year assignment to investigate the nature of complexity, how it manifests in software-reliant systems such as avionics, how to measure it, and how to tell when too much complexity might lead to safety problems and assurance complications.

System Complexity Effects on Aircraft Safety

Our examination of the effects of system complexity on aircraft safety began in October 2014 and involved several phases, including an initial literature review of complexity in the context of aircraft safety. Our research is addressing several questions, including:

- What definition of complexity is most appropriate for software-reliant systems?

- How can that kind of complexity be measured? What metrics might apply?

- How does complexity affect the certifiability of aircraft, the validation and verification of aircraft and their systems, and flight safety margins?

Given answers to the metrics questions above, we will then identify which of our candidate metrics would best measure complexity in a way that predicts problems and provides insight into needed validation and certification steps. Other questions our research will address include:

- Given available sources of data on an avionics system, can measurement using these metrics be performed in a way that provides useful insight?

- Within what measurement boundaries can a line be drawn between systems that can be assured with confidence and those that are too complex to assure?

The remainder of this post focuses on the findings of our literature review, which offered insights into the causes of complexity, the impacts of complexity, and three principles for mitigating complexity.

Causes of Complexity

While complexity is often blamed for problems, the term is usually not defined, and our systematic literature search confirmed this. As a result, our literature search broadened from simply collecting definitions to describing a taxonomy of issues and general observations associated with complexity. (This work was primarily performed by my colleague, Mike Konrad.)

Our literature review revealed that complexity is a state associated with causes that produce effects. We developed a large taxonomy of different kinds of causes and another taxonomy of different kinds of effects. To prevent the impacts of complexity, one must reduce its causes, which typically include:

- Causes related to system design (the largest group of causes). Components that are internally complex add complexity to the system as a whole. The interaction of the components (whether functional, data, or another kind of interaction) also adds complexity. Dynamic behaviors add complexity as well, including the number of configurations and the transitions among them. Finally, the way the system is modeled can add complexity.

- Causes that make a system seem complex (i.e., reasons for cognitive complexity). These causes include the level of abstraction required, the familiarity a user or operator (such as the pilot) has with the system, and the amount of information required to understand the system.

- Causes related to external stakeholders. The number or breadth of stakeholders, their political culture, and the range of user capabilities also affect complexity.

- Causes related to system requirements. The inputs the system must handle, the outputs it must produce, and the quality attributes it must satisfy (such as adaptability or various kinds of security) all contribute to system complexity. In addition, rapid change in any of these is itself a source of complexity.

- Causes related to the speed of technological change. As software-reliant systems become more capable, they place added pressure on their technologies to accomplish even more, which also increases complexity.

- Causes related to teams. The necessity and difficulty of working across discipline boundaries, and of achieving process maturity in a rapidly changing environment, also contribute to complexity.
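
The first group of causes notes that dynamic behaviors add complexity through the number of configurations and the transitions among them. The minimal Python sketch below shows how quickly those counts grow. It assumes a hypothetical system whose components have independent operating modes, treats every combination of modes as a reachable configuration, and treats any single-component mode change as a transition. The component and mode names are illustrative only. Real avionics mode logic restricts which configurations and transitions are allowed.

```python
from itertools import product


def enumerate_configurations(component_modes):
    """Return every configuration as a tuple holding one mode per component.

    Assumption: all mode combinations are reachable (no interlocks).
    """
    return list(product(*component_modes.values()))


def count_single_step_transitions(configs):
    """Count ordered pairs of configurations that differ in exactly one component."""
    count = 0
    for a in configs:
        for b in configs:
            if sum(x != y for x, y in zip(a, b)) == 1:
                count += 1
    return count


# Hypothetical components and modes, for illustration only.
modes = {
    "component_a": ["off", "mode_1", "mode_2", "mode_3"],
    "component_b": ["off", "on"],
    "component_c": ["off", "on"],
}

configs = enumerate_configurations(modes)
print(len(configs))                            # 16
print(count_single_step_transitions(configs))  # 80
```

Adding a fourth two-mode component doubles the configuration count to 32 and raises the transition count to 192. The state space grows multiplicatively even though the system gained only one small component.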

Impacts of Complexity

Once a system is deemed complex, for whatever reason, it is important to examine the problems or benefits of that complexity. Many known consequences of complexity are negative, including higher project cost, longer schedule, and lower system performance, as well as decreased productivity and adaptability.

Also, addressing critical quality attributes (e.g., safety versus performance and usability) in a system, or achieving a desired tradeoff between conflicting quality attributes, often results in additional design complexity. For example, to reduce the probability of a hardware failure causing an unsafe condition, redundant units are frequently designed into a system. The system then not only has two units instead of one, but it also has a switching mechanism between the two units and a way to tell whether each one is working. This functionality is often supported by software, which is now considerably more complex than when there was just one unit.
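
To make the redundancy example concrete, the minimal Python sketch below contrasts a single-unit channel with a dual-redundant one. The class and method names (`Unit`, `SimplexChannel`, `DuplexChannel`, `is_working`, `switch_over`) are hypothetical. The health check is reduced to a boolean flag, and the unit output is a fixed placeholder value. The sketch shows only how much logic the second unit brings with it.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    """A hypothetical hardware unit; `healthy` stands in for real fault detection."""
    name: str
    healthy: bool = True

    def read(self) -> float:
        # Placeholder output for illustration; a failed unit cannot produce one.
        if not self.healthy:
            raise RuntimeError(f"{self.name} has failed")
        return 1.0


class SimplexChannel:
    """One unit: no monitoring and no switching logic."""

    def __init__(self, unit: Unit):
        self.unit = unit

    def output(self) -> float:
        return self.unit.read()


class DuplexChannel:
    """Two units plus the extra logic redundancy requires:
    a way to tell whether each unit is working and a switch between them."""

    def __init__(self, primary: Unit, backup: Unit):
        self.units = [primary, backup]
        self.active = 0  # index of the unit currently in control

    def is_working(self, unit: Unit) -> bool:
        # Health monitor; a real system might use built-in test,
        # heartbeats, or cross-comparison instead of a flag.
        return unit.healthy

    def switch_over(self) -> None:
        other = 1 - self.active
        if not self.is_working(self.units[other]):
            raise RuntimeError("both units failed")
        self.active = other

    def output(self) -> float:
        if not self.is_working(self.units[self.active]):
            self.switch_over()
        return self.units[self.active].read()
```

Even in this toy form, the duplex channel needs state (which unit is active), a monitor, and a failover path. Each of these must itself be verified, including the case where both units fail.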

Complexity also impacts human planning, design, and troubleshooting activities in the following ways:

- Complexity makes software planning harder (including software lifecycle definition and selection).

- Complexity makes the design process harder. For example, existing design and analysis techniques may fail to provide adequate safety coverage of high-performance, real-time systems as they become more complex. Also, it may be hard to make a safety case from design and test data alone, making it necessary to wait for operational data to strengthen the safety case.

- Complexity may make people less able to predict system properties from the properties of the components.

- Complexity makes it harder to define, report, and diagnose problems.

- Complexity makes it harder for people to follow required processes.

- Complexity drives up verification and assurance efforts and reduces confidence in the results of verification and assurance.

- Complexity makes changes harder (e.g., in software maintenance and sustainment).

- Complexity makes it harder to service the system in the field.

Three Principles for Mitigating Complexity

Our literature review also identified three general principles for mitigating complexity:

- Assess and mitigate complexity as early as possible.

- Focus on the most problematic aspects of the system being studied, abstract a model of them, and solve the problem in the model.

- Begin measuring complexity early, and when sufficient quantitative understanding of cause-effect relationships has been reached (e.g., what types of requirements or design decisions introduce complexity later in system development), establish thresholds that, when exceeded, trigger some predefined preventive or corrective action.

Of course, these principles overlap and are expressed and organized differently by different authors.
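
The third principle pairs measurements with thresholds that trigger predefined actions. The minimal Python sketch below illustrates that pairing. It assumes that some complexity metrics have already been chosen and calibrated. The metric names (`interface_count`, `mode_transitions`), the threshold values, and the action functions are all hypothetical placeholders. The literature review does not prescribe specific metrics or limits, and real thresholds would come only from the quantitative cause-effect understanding the principle describes.

```python
from typing import Callable, Dict, List, Tuple

# An action receives the metric name and its measured value and reports what it did.
Action = Callable[[str, float], str]


def request_design_review(metric: str, value: float) -> str:
    # Hypothetical preventive action.
    return f"design review requested: {metric}={value}"


def flag_for_refactoring(metric: str, value: float) -> str:
    # Hypothetical corrective action.
    return f"refactoring flagged: {metric}={value}"


# Hypothetical metrics and limits, for illustration only.
THRESHOLDS: Dict[str, Tuple[float, Action]] = {
    "interface_count": (40, request_design_review),
    "mode_transitions": (200, flag_for_refactoring),
}


def check_thresholds(measurements: Dict[str, float]) -> List[str]:
    """Run the predefined action for each metric whose value exceeds its threshold."""
    triggered = []
    for metric, value in measurements.items():
        if metric in THRESHOLDS:
            limit, action = THRESHOLDS[metric]
            if value > limit:
                triggered.append(action(metric, value))
    return triggered
```

For example, `check_thresholds({"interface_count": 35, "mode_transitions": 240})` triggers only the refactoring action. Keeping thresholds and actions in one table makes them easy to review and to revise as measurement data accumulates.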

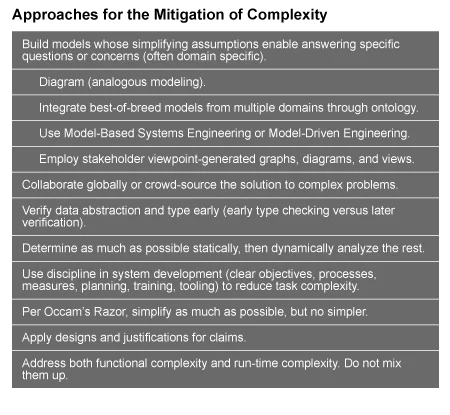

One could make the case that, in general, all practices of systems engineering started from a principle of managing complexity. The table below highlights some of the principles for managing complexity that we encountered during our literature review.

Looking Ahead

Our literature review also covers search results related to the measurement and mitigation of complexity. Measurement and mitigation of complexity are the basis for good systems engineering practice, whether addressing collaboration among organizations and disciplines, requirements and system abstractions and models, disciplined management and engineering, or even modular design, patterns, and refactoring.

The next blog post in this series will detail the second phase of our work on this project. That phase focuses on determining how the many aspects of complexity can be measured in ways that make sense to system and software development projects, and specifically to aircraft safety assurance and certification.

We welcome your feedback on our research.

Additional Resources

For more information, see the SEI report Reliability Improvement and Validation Framework by Peter H. Feiler, John B. Goodenough, Arie Gurfinkel, Charles B. Weinstock, and Lutz Wrage.

The blog post is disseminated by the Carnegie Mellon University Software Engineering Institute in the interest of information exchange. While the research task is funded by the Federal Aviation Administration, the United States Government assumes no liability for the contents of this blog post or use thereof. The United States Government does not endorse products or manufacturers. Trade or manufacturer's names appear herein solely because they are considered essential to the objective of this blog post. The interpretations, findings, and conclusions in this report are those of the author(s) and do not necessarily represent the views of the funding agency. This document does not constitute FAA certification policy.