Adversarial ML Threat Matrix: Adversarial Tactics, Techniques, and Common Knowledge of Machine Learning

PUBLISHED IN

CERT/CC Vulnerabilities

My colleagues Nathan VanHoudnos, April Galyardt, and Allen Householder and I would like to let you know that today Microsoft and MITRE are releasing their Adversarial Machine Learning Threat Matrix. This collaborative effort applies MITRE's ATT&CK framework to securing production machine learning systems. You can read more on Microsoft's blog and MITRE's blog, and you can find a complete copy of the matrix on GitHub. We hope that you will join us in providing feedback and contributing to the framework via pull requests.

We are interested in this project because it encourages communication between two fields we want to bring into closer dialogue, machine learning and security (see our forthcoming NSPW 2020 paper). As the SEI begins leading a national AI Engineering initiative, the robustness and security of AI, and of machine learning in particular, are vital components of that work. We introduce the threat matrix here so that our readers can try it out and help improve it. MITRE's ATT&CK is a knowledge base of attacker techniques. Such a knowledge base supports tasks ranging from adversary emulation to red teaming to maturity assessment of a security operations center, among other uses. ATT&CK is, however, tied to traditional cybersecurity concerns because of its history as a tool for Microsoft Windows environments. Among other gaps, ATT&CK does not consider the unique threats to machine learning systems. The Adversarial ML Threat Matrix is modeled on the ATT&CK framework so that the two fields can build a shared discourse; perhaps a future version of ATT&CK will even incorporate these considerations.

Yet, as we have discussed on this blog, machine learning (ML) is vulnerable to adversarial attacks. These range from an attacker trying to make the ML system learn the wrong thing (data poisoning), do the wrong thing (evasion attacks), or reveal the wrong thing (model inversion). Although several efforts provide detailed taxonomies of the attacks that can be launched against an ML system, none is organized around operational concerns. Operational concerns, however, are exactly ATT&CK's focus.

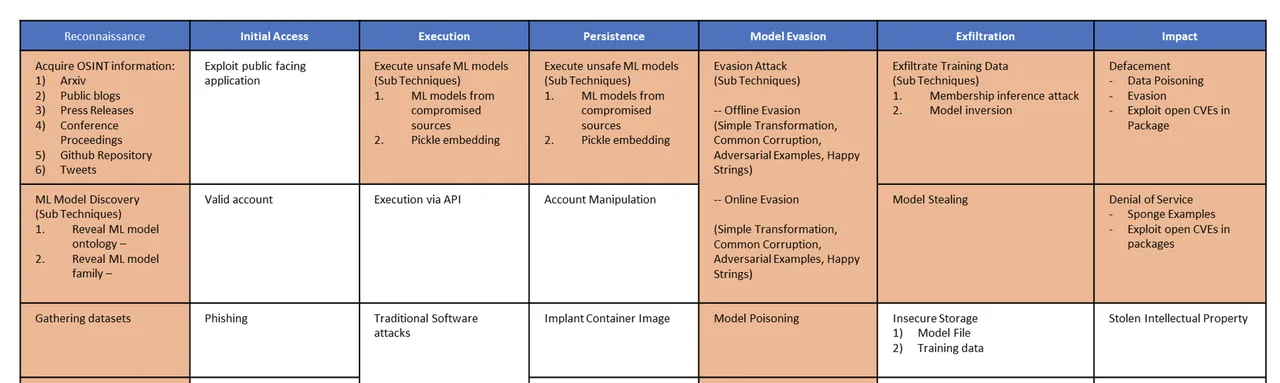

The Adversarial ML Threat Matrix aims to close this gap between academic taxonomies and operational concerns. Figure 1, for example, displays a subset of the Adversarial ML Threat Matrix. Across the top are the "Tactics," which are modeled after ATT&CK and correspond to broad categories of adversary action. Each cell is a "Technique," which falls within one tactic. Techniques unique to adversarial ML are colored orange; the remainder are shared with the original ATT&CK, although they may carry a slightly different meaning in an ML context. Note that although the matrix is displayed as a table, cells within the same row have no a priori relationship: a given attack follows its own pattern, drawing techniques from tactics without regard to their position in the table.
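To make the structure described above concrete, here is a minimal Python sketch of the matrix as a data model. It assumes only what the paragraph states: each technique falls within one tactic, some techniques are unique to adversarial ML, and an attack is a selection of techniques whose row positions carry no meaning. The class and function names (`Technique`, `ThreatMatrix`, `describe_attack`) and all tactic and technique names below are hypothetical placeholders, not the official identifiers or any tooling published with the matrix.

```python
from dataclasses import dataclass, field


# Hypothetical data model for illustration only; not an official schema or API
# published with the Adversarial ML Threat Matrix.
@dataclass(frozen=True)
class Technique:
    name: str
    tactic: str        # each technique falls within exactly one tactic
    ml_specific: bool  # True for techniques unique to adversarial ML (orange cells)


@dataclass
class ThreatMatrix:
    tactics: list[str]  # column headers: broad categories of adversary action
    techniques: dict[str, Technique] = field(default_factory=dict)

    def add(self, technique: Technique) -> None:
        # Reject techniques whose tactic is not a column of this matrix.
        if technique.tactic not in self.tactics:
            raise ValueError(f"unknown tactic: {technique.tactic!r}")
        self.techniques[technique.name] = technique

    def column(self, tactic: str) -> list[str]:
        # Techniques under one tactic; no row index is stored, so rows carry no meaning.
        return [t.name for t in self.techniques.values() if t.tactic == tactic]

    def ml_specific(self) -> list[str]:
        # Techniques that are unique to adversarial ML.
        return [t.name for t in self.techniques.values() if t.ml_specific]


def describe_attack(matrix: ThreatMatrix, steps: list[str]) -> list[tuple[str, str]]:
    """Map an attack, given as technique names, to (tactic, technique) pairs.

    Raises KeyError if a step names a technique the matrix does not contain.
    """
    return [(matrix.techniques[s].tactic, s) for s in steps]


# Illustrative placeholder content only, not taken from the published matrix.
matrix = ThreatMatrix(tactics=["Reconnaissance", "Initial Access", "Evasion"])
matrix.add(Technique("Gather public model details", "Reconnaissance", ml_specific=False))
matrix.add(Technique("Valid accounts", "Initial Access", ml_specific=False))
matrix.add(Technique("Craft adversarial input", "Evasion", ml_specific=True))

attack = describe_attack(matrix, ["Gather public model details", "Craft adversarial input"])
print(attack)
# [('Reconnaissance', 'Gather public model details'), ('Evasion', 'Craft adversarial input')]
```

Because the sketch records each technique's tactic but never a row index, it cannot express a relationship between cells in the same row, which mirrors the caveat about reading the table.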

As part of the initial release of the Adversarial ML Threat Matrix, Microsoft and MITRE assembled a series of case studies. These show how well-known attacks, such as the Microsoft Tay poisoning and the Proofpoint evasion attack, can be analyzed within the Threat Matrix. Indeed, this is how the Threat Matrix was created: by applying ATT&CK to machine learning attacks and identifying which new tactics and techniques were needed to capture the observed behavior.

So, now, the ask: What case studies might you or your organization be able to contribute? Threat management, like other areas of security management, works best when it draws input from, and is tailored to, diverse stakeholders. Perhaps this first version of the Adversarial ML Threat Matrix captures the adversary behavior you have observed. If not, please contribute what you can to MITRE and Microsoft so that your experience can be captured. If the matrix does reflect your observations, does it help you communicate and understand this adversary behavior and explain threats to your constituents? Share those experiences with the authors as well, so the matrix can improve!

If you want to join us in contributing, please head to the GitHub page and submit an issue or pull request. If you would like help crafting case studies that are generic enough to be shared openly, please contact either the Threat Matrix team or the SEI. Your case studies and contributions are vitally important to advancing training and understanding in information security and incident management.

More By The Author

CERT/CC Comments on Standards and Guidelines to Enhance Software Supply Chain Security

• By Jonathan Spring
