Building Next-generation Autonomous Systems

PUBLISHED IN

Artificial Intelligence Engineering

An autonomous system is a computational system that performs a desired task, often without human guidance. We use varying degrees of autonomy in robotic systems for manufacturing, exploration of planets and space debris, water treatment, ambient sensing, and even cleaning floors. This blog post discusses practical autonomous systems that we are actively developing at the SEI. Specifically, it focuses on a new SEI research effort called Self-governing Mobile Adhocs with Sensors and Handhelds (SMASH), which is forging collaborations with researchers, professors, and students with the goal of enabling more effective search-and-rescue crews.

Motivating Scenario: An Earthquake Hits a Populated Area

Recent earthquakes and earthquake-induced events like tsunamis in Haiti, Japan, and Indonesia have shown both the destructive power of nature and the compassion of communities to lend aid to people in need. Compassion alone, however, won't help emergency responders locate and help all of the potential survivors. Search-and-rescue crews thus need technology to help them make the best possible use of their often meager resources.

Figure 1. Ground crews search for survivors after an earthquake hits a metropolitan area. Automated aerial robots aid crews with thermal cameras that can penetrate rubble to find the trapped and injured.

Tackling a Massive Problem

Search-and-rescue is a massive undertaking in every conceivable way. There are issues of scale in the geographic area to be covered and in the number of people, devices, and sensors involved. On top of the sheer number of people in need, emergency responders must cope with the limited mobility and availability of ground crews and with the loss of most telecommunications infrastructure, which is common after an earthquake. There are only so many people available to help, and it can take days to completely search a single building. Even in a small town, a crew of thousands of emergency responders could take weeks to find survivors, and by then it may be too late.

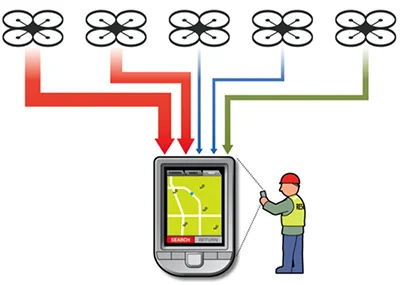

To assist in this type of important mission, the SMASH project is exploring a combination of effective software design practices, artificial intelligence, and inexpensive hardware. These capabilities will allow one responder to control a fleet of quadcopters or other robotic vehicles that search the rubble (for example, using thermal sensors, ground-penetrating radar, and other types of sensor payloads) and present the results on a smartphone or other human-computer interface device in a manner that is useful and efficient for human operators, as shown in Figure 2.

Figure 2. Automated aerial robots send information back to a smartphone, tablet, or other visual interface to provide ground crews with the thermal context of the area around them.

We want to extend the capabilities of emergency responders by equipping them with dozens of robotic vehicles per operator, which would allow them to infer context from thermal images and locate survivors in the rubble.

A Focus on Human-in-the-loop Autonomy

Our central idea is to extend, not replace, the capabilities of human operators, most obviously by providing them with additional coverage. We also give ground operators the ability to penetrate the rubble, look into dangerous or out-of-reach areas, and locate survivors.

Enhanced vision is useless, however, if robotic vehicles are not intelligent enough to search locations collaboratively with other agents. Likewise, the vehicles need to use maps of building locations to search likely survivor zones proactively and quickly, and to take feedback and guidance from ground operators on the likely locations of survivors. These capabilities are central components of the SMASH autonomous design philosophy.
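To make map-driven, collaborative search a little more concrete, here is a minimal Python sketch of one possible approach, not SMASH's actual planner: a greedy heuristic that visits search cells in order of estimated survivor likelihood (plus any boost from operator feedback) and gives each cell to the drone with the lowest accumulated travel cost. The `Cell` and `Drone` types, the likelihood values, and the cost model are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Cell:
    """Hypothetical search cell derived from a building map."""
    name: str
    x: float
    y: float
    survivor_likelihood: float   # assumed prior from map data, 0..1
    operator_boost: float = 0.0  # assumed extra weight from ground-operator feedback

    @property
    def priority(self) -> float:
        return self.survivor_likelihood + self.operator_boost


@dataclass
class Drone:
    """Hypothetical drone state: position, distance flown, planned route."""
    name: str
    x: float
    y: float
    travelled: float = 0.0
    route: list = field(default_factory=list)


def assign_cells(drones, cells):
    """Greedy assignment: highest-priority cells first, each given to the drone
    whose (distance already flown + distance to the cell) is smallest."""
    for cell in sorted(cells, key=lambda c: c.priority, reverse=True):
        def cost(d):
            return d.travelled + math.hypot(cell.x - d.x, cell.y - d.y)
        best = min(drones, key=cost)
        best.travelled = cost(best)       # computed before the position update
        best.x, best.y = cell.x, cell.y   # the drone will be at this cell next
        best.route.append(cell.name)
    return {d.name: d.route for d in drones}


drones = [Drone("uav1", 0, 0), Drone("uav2", 10, 0)]
cells = [
    Cell("lobby", 1, 0, 0.9),
    Cell("garage", 9, 0, 0.4),
    Cell("stairwell", 2, 1, 0.3, operator_boost=0.5),  # operator flagged this area
]
plan = assign_cells(drones, cells)
# plan == {"uav1": ["lobby", "stairwell"], "uav2": ["garage"]}
```

Because the heuristic is greedy, it can be far from optimal; a fielded system would more likely re-plan continuously as new operator feedback and sensor detections arrive.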

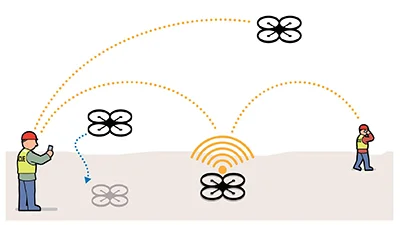

Figure 3. Aerial robots can land to form sensor networks for routing information from other personnel or sensors.

Robust telecommunications infrastructure is another capability SMASH aims to provide. The robotic vehicles we are working on form their own wireless access points and also carry a long-range radio (2 km range) that may be useful for communicating with other ground crews, or even for survivors with cellphones who need to send important messages, e.g., that they are trapped in a car in a parking garage. Part of the autonomy we are developing focuses on landing the robotic vehicles or using throwable wireless access points to form sensor networks that route information according to its priority.
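One simple way to route by priority at a single relay is sketched below under stated assumptions: each landed quadcopter or throwable access point keeps a priority queue and, in each transmission interval, forwards the highest-priority messages that fit in its byte budget. The `RelayNode` class, the integer priority scale, and the per-tick budget are hypothetical illustrations rather than SMASH's routing code.

```python
import heapq
import itertools


class RelayNode:
    """Hypothetical relay (e.g., a landed quadcopter or a throwable access
    point) that forwards the most important messages first."""

    def __init__(self, bytes_per_tick: int):
        self.bytes_per_tick = bytes_per_tick  # assumed link budget per interval
        self._queue = []                      # heap of (-priority, seq, label, payload)
        self._seq = itertools.count()         # FIFO tie-break for equal priorities

    def enqueue(self, priority: int, label: str, payload: bytes) -> None:
        # heapq is a min-heap, so negate the priority to pop the largest first.
        heapq.heappush(self._queue, (-priority, next(self._seq), label, payload))

    def forward_tick(self) -> list:
        """Send queued messages in strict priority order until the next one
        would exceed this tick's budget; the rest wait for a later tick.
        Assumes no single message is larger than bytes_per_tick."""
        sent, budget = [], self.bytes_per_tick
        while self._queue and len(self._queue[0][3]) <= budget:
            _, _, label, payload = heapq.heappop(self._queue)
            budget -= len(payload)
            sent.append(label)
        return sent


relay = RelayNode(bytes_per_tick=100)
relay.enqueue(1, "status from uav3", b"x" * 60)
relay.enqueue(5, "thermal hit from uav1", b"x" * 40)
relay.enqueue(9, "survivor text message", b"x" * 30)
first = relay.forward_tick()   # ["survivor text message", "thermal hit from uav1"]
second = relay.forward_tick()  # ["status from uav3"]
```

Strict priority keeps survivor traffic moving, at the cost of possibly starving low-priority status traffic under sustained load; weighted fair queuing is a common alternative when that matters.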

Figure 4. Routing and bandwidth allocation are dictated by contextual priority. In this image, two aerial robots detect thermal targets while the other three detect nothing. The two aerial robots have higher priority information and are given preferential bandwidth treatment.

Our research on SMASH will also address issues of timing and scale. In a search-and-rescue operation, timing is critical, and the data volumes generated by thousands of ground crews and new sensors can cause an ad hoc telecommunications infrastructure to fail. Consequently, autonomy is only useful if it is designed to work within the bandwidth constraints of the available networking infrastructure. Solving this problem requires a focus on prioritization, quality of service, and effective bandwidth utilization.

For example, in Figure 4, only two of the aerial robot quadcopters are detecting thermal targets. The other quadcopters are not detecting anything of use to the ground crews. Our approach focuses on sending only relevant information (such as thermal targets) rather than constantly broadcasting full thermal or video images, which are sent only when human operators specifically request them. Moreover, the quadcopters that are perceiving potential human beings receive higher priority and can send more data to the operator within the available networking bandwidth. This type of autonomous self-interest is important for providing a scalable distributed infrastructure.
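The Figure 4 scenario can be approximated with two small pieces, sketched here as an illustration rather than SMASH's implementation: a per-drone relevance rule that decides what to send (full imagery only on request, compact detections when a thermal target is seen, otherwise a heartbeat), and a proportional split of the available link capacity by traffic weight. The `Report` type, the weights, and the 1,100 kbps capacity in the example are assumptions, not measured SMASH parameters.

```python
from dataclasses import dataclass


@dataclass
class Report:
    """Hypothetical per-cycle summary produced on board each drone."""
    drone: str
    hotspots: int                       # thermal targets detected this cycle
    full_frame_requested: bool = False  # operator asked for full imagery


# Assumed relative bandwidth weights for each kind of outgoing traffic.
WEIGHT = {"full_frame": 8, "detections": 4, "heartbeat": 1}


def outgoing_kind(r: Report) -> str:
    """Relevance filter: never stream full frames unless requested."""
    if r.full_frame_requested:
        return "full_frame"
    if r.hotspots > 0:
        return "detections"
    return "heartbeat"


def allocate_bandwidth(reports, total_kbps: float) -> dict:
    """Split link capacity in proportion to each drone's traffic weight."""
    if not reports:
        return {}
    weights = {r.drone: WEIGHT[outgoing_kind(r)] for r in reports}
    total_weight = sum(weights.values())
    return {d: total_kbps * w / total_weight for d, w in weights.items()}


# Figure 4 scenario: two of five drones see thermal targets.
reports = [Report("uav1", 2), Report("uav2", 1)] + [
    Report(f"uav{i}", 0) for i in range(3, 6)
]
shares = allocate_bandwidth(reports, total_kbps=1100)
# shares == {"uav1": 400.0, "uav2": 400.0, "uav3": 100.0, "uav4": 100.0, "uav5": 100.0}
```

Proportional sharing is only one option; a hard per-class reservation would guarantee detection traffic a floor even when many drones request full frames at once.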

Open-Source Dissemination

Open architectures, i.e., open standards and open-source initiatives that allow end users to extend products and services, are also important for critical infrastructures, not only because transparency helps in creating a better product, but also because they reduce the cost of providing solutions to government agencies and non-profits. Consequently, SMASH is built on top of open-source initiatives including the Multi-Agent Distributed Adaptive Resource Allocation (MADARA) project, which provides real-time knowledge and reasoning services for distributed systems, and the Drone-RK project, which provides a programming interface for controlling and processing data from the Parrot AR.Drone 2. We are augmenting these open-source software projects with additional tools that the software community can use to enable human-in-the-loop autonomous system development and deployment.

Collaborations

To create these new autonomous techniques and tools, we are partnering with researchers and students from around the world. Within the Department of Electrical and Computer Engineering (ECE) in Carnegie Mellon University's College of Engineering, we have formed a core group of professors and students, including Anthony Rowe, Larry Pileggi, and Kenneth Mai of ECE, who will work on extending the Parrot AR.Drone 2 and developing a new type of thermal, wireless-enabled throwable sensor. In the fall of 2013, Tony Lattanze of CMU's Master of Software Engineering Program will work with students to investigate area coverage problems with collaborative robots and group artificial intelligence.

Students from CMU will be joined by graduate students from research universities around the world for summer programs that investigate the search-and-rescue problem space, in hopes of extending the state of the art and effecting real-world solutions to hard research problems.

Contacts and Conclusion

The SMASH project is driving the state of the art in human-aided autonomy, with an expected software payload that will be deployed to real-world and often mission-critical scenarios. If you have questions about the effort or would like to discuss potential collaboration possibilities, please contact info@sei.cmu.edu or leave a comment below.

Additional Resources

For more information about the open-source knowledge and reasoning engine that we are using for distributed artificial intelligence in real-time systems, please visit the MADARA project site at https://code.google.com/archive/p/smash-cmu/

For more information on the software development kit used for manipulating and reading images and data from the Parrot AR.Drone 2, please visit the Drone-RK project site at http://www.drone-rk.org/

For a cool robotics simulator that includes models of the Parrot AR.Drone, please try out V-REP (the trial version lasts for 1 to 2 months). For more information, please visit http://www.v-rep.eu/
