Security Analytics: Using SiLK and Mothra to Identify Data Exfiltration via the Domain Name Service

A variety of modern network threats involve data theft via abuse of network services, which is termed data exfiltration. To track such threats, analysts monitor data transfers out of the organization’s network, particularly transfers occurring via network services not primarily intended for bulk data transfer. One such service is the Domain Name System (DNS), which is essential for many other Internet services. Unfortunately, attackers can manipulate DNS to exfiltrate data in a covert manner.

This SEI blog post focuses on how the DNS protocol can be abused to exfiltrate data by adding bytes of data onto DNS queries or making repeated queries that contain data encoded into the fields of the query. The post also examines the general traffic analytic we can use to identify this abuse and applies several tools available to implement the analytic. The aggregate size of DNS packets can provide a ready indicator of DNS abuse. However, because the DNS protocol has grown from a simple address resolution mechanism to distributed database support for network connectivity, interpreting the aggregate size requires understanding of the context of queries and responses. By understanding the volume of DNS traffic, both in isolation and in aggregate, analysts may better match outgoing queries and incoming responses.

The data used in this blog post is the CIC-BELL-DNS-EXF 2021 data set, as published in conjunction with the paper Lightweight Hybrid Detection of Data Exfiltration using DNS based on Machine Learning by Samaneh Mahdavifar et al.

The Role of DNS

DNS supports multiple types of queries. These queries are described in a variety of Internet Engineering Task Force (IETF) Request for Comments (RFC) documents. The query types include the following:

- A and AAAA queries for the IP address corresponding to a domain name (e.g., “which address corresponds to www.example.com?” with a response like “192.0.2.27”)

- pointer record (PTR) queries for the name corresponding to an IP address (e.g., “which name corresponds to 192.0.2.27?” with a response of “www.example.com”)

- name server (NS), mail exchange (MX), and service locator (SRV) queries for the identity of key servers in a given domain

- start of authority (SOA) queries for information about addresses on which the queried server may speak authoritatively

- certificate (CERT) queries for encryption certificates pertaining to the server’s covered domains

- text record (TXT) queries for additional information (as configured by the network administrator) in a text format

A given DNS query packet will request information on a given domain from a particular server, but the response from that server may include multiple resource records. The size of the response will depend on how many resource records are returned and the type of each record.
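To make the dependence of message size on record count and type concrete, the following Scala sketch estimates DNS message sizes from the wire format described in RFC 1035. It is a rough illustration only: it assumes UDP over IPv4 with no IP options and no EDNS options, and it assumes the owner name of every answer record is a 2-byte compression pointer. The object and function names are hypothetical and are not part of SiLK or Mothra.

```scala
object DnsSizeSketch {
  // Fixed DNS header size in bytes (RFC 1035, section 4.1.1).
  val HeaderBytes = 12

  // Assumption: IPv4 header without options (20) plus UDP header (8).
  val Ipv4UdpOverheadBytes = 28

  // Wire length of a domain name: one length byte per label plus the
  // label bytes, followed by a single zero byte for the root.
  def encodedNameBytes(name: String): Int = {
    val labels = name.stripSuffix(".").split('.').filter(_.nonEmpty)
    labels.map(_.length + 1).sum + 1
  }

  // Header plus one question: encoded name, 2-byte QTYPE, 2-byte QCLASS.
  def queryBytes(name: String): Int =
    HeaderBytes + encodedNameBytes(name) + 4

  // One answer record, assuming a 2-byte compressed owner name:
  // 2 (name) + 2 (type) + 2 (class) + 4 (TTL) + 2 (RDLENGTH) + RDATA.
  def answerRecordBytes(rdataBytes: Int): Int = 2 + 10 + rdataBytes

  // Response size: the echoed question plus one answer per RDATA size given.
  def responseBytes(name: String, rdataSizes: Seq[Int]): Int =
    queryBytes(name) + rdataSizes.map(answerRecordBytes).sum
}
```

Under these assumptions, DnsSizeSketch.queryBytes("www.example.com") is 33 bytes of DNS payload, or 61 bytes once the IPv4 and UDP headers are added, and a response carrying a single A record (4 bytes of RDATA) is 49 bytes of payload. Each additional record, or a longer query name, increases the total accordingly.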

Once analysts understand the reasons for tracking DNS traffic and the context needed for interpreting the tracking results, they can then determine what information is desired from the tracking. This blog post assumes the analyst wants to track external hosts that may be receiving exfiltrated information.

Overview of the Analytic for Identifying Data Exfiltration

The analytic covered in this blog post assumes that the networks of interest are covered by traffic sensors that produce network flow records or at least packet captures that can be aggregated into network flow records. There are a variety of tools available to generate these flow records. Once produced, the flow records are archived in a flow repository or appropriate database tables, depending on the analysis tool suite.

The approach taken in this analytic is, first, to aggregate DNS traffic associated with external destinations acting like servers and, second, to profile the traffic for these destinations. The first step (association) involves identifying DNS traffic (either by service port or by actual examination of the application protocol), then identifying the external destinations involved. The second step (profiling) examines how many sources are communicating with each of the destinations, the aggregate byte count, packet count, and other revealing information as described in the following sections.
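The two steps can be sketched in plain Scala over an in-memory list of flow records. This is only an illustration of the logic, not the SiLK or Mothra implementation: the Flow and Profile types, the sample records, and the rule that treats any address starting with "192.168." as internal are all assumptions made for the example.

```scala
object DnsProfileSketch {
  // Hypothetical minimal flow record; real flow records carry more fields.
  final case class Flow(sip: String, dip: String, dport: Int, protocol: Int,
                        bytes: Long, packets: Long)

  // Per-destination summary produced by the profiling step.
  final case class Profile(dip: String, sources: Int, flows: Int,
                           bytes: Long, packets: Long)

  // Assumption for illustration: internal hosts live in 192.168.0.0/16.
  def isInternal(ip: String): Boolean = ip.startsWith("192.168.")

  // Step 1 (association): DNS traffic, identified here by service port,
  // going from internal sources to external destinations.
  def dnsToExternal(flows: Seq[Flow]): Seq[Flow] =
    flows.filter(f => f.dport == 53 && isInternal(f.sip) && !isInternal(f.dip))

  // Step 2 (profiling): per destination, count distinct sources and flows
  // and total the bytes and packets; largest byte totals first.
  def profile(flows: Seq[Flow]): Seq[Profile] =
    dnsToExternal(flows)
      .groupBy(_.dip)
      .map { case (dip, fs) =>
        Profile(dip, fs.map(_.sip).distinct.size, fs.size,
                fs.map(_.bytes).sum, fs.map(_.packets).sum)
      }
      .toSeq
      .sortBy(p => -p.bytes)

  // Made-up sample records using documentation address ranges.
  val sample: Seq[Flow] = Seq(
    Flow("192.168.20.15", "198.51.100.53", 53, 17, 300L, 3L),
    Flow("192.168.20.16", "198.51.100.53", 53, 17, 200L, 2L),
    Flow("192.168.20.15", "203.0.113.9", 53, 17, 90L, 1L),
    Flow("192.168.20.15", "203.0.113.9", 443, 6, 5000L, 10L), // not DNS
    Flow("192.168.20.15", "192.168.20.2", 53, 17, 80L, 1L)    // internal server
  )
}
```

Calling DnsProfileSketch.profile(DnsProfileSketch.sample) yields two profiles: one for 198.51.100.53 (two sources, two flows, 500 bytes, five packets) and one for 203.0.113.9 (one source, one flow, 90 bytes, one packet). The non-DNS flow and the flow to an internal server are excluded by the association step.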

Several different tools can be used for this analysis. This blog post will discuss two sets of SEI-developed tools:

- The System for Internet-Level Knowledge (SiLK) is a collection of traffic analysis tools developed to facilitate security analysis of large networks. The SiLK tool suite supports the efficient collection, storage, and analysis of network flow data, enabling network security analysts to rapidly query large historical traffic data sets. SiLK is ideally suited for analyzing traffic on the backbone or border of a large, distributed enterprise or mid-sized ISP.

- Mothra is a collection of Apache Spark libraries that support analysis of network flow records in Internet Protocol Flow Information Export (IPFIX) format with deep packet inspection fields.

Each of the following sections will present an analytic for detecting exfiltration via DNS queries in the corresponding tool set.

Implementing the Analytic via SiLK

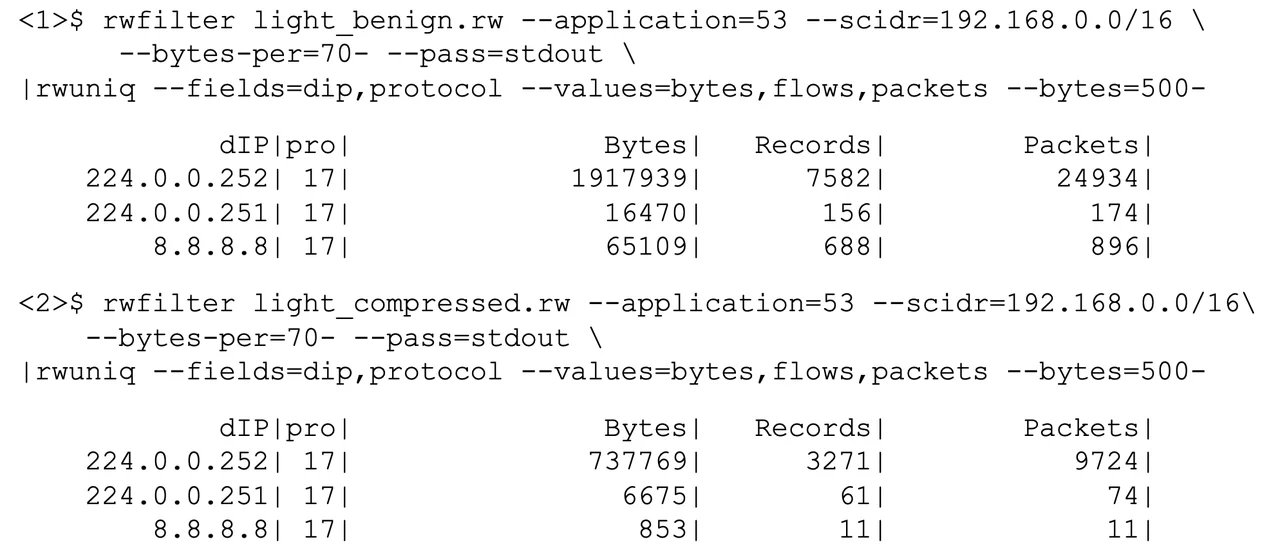

Figure 1 presents a series of SiLK commands that implement an analytic to detect exfiltration. The first sequence applies a filter to normal, benign DNS traffic. The filter isolates DNS traffic (identified by protocol recognition, as indicated by the application label of 53) that comes from the internal network (classless inter-domain routing [CIDR] block 192.168.0.0/16) and consists of relatively long packets (70 bytes or more). The output of the filter is then summarized by destination address and transport protocol, counting bytes, flow records, and packets for each combination of address and protocol. The resulting counts are shown only if the accumulated bytes are 500 or more. The second sequence applies the same analytic to DNS data that carries compressed data for exfiltration.

The results in Figure 1 show that the network talks to a primary DNS server, a secondary DNS server, and a public server. In the benign case, the data is mainly directed to the primary DNS server and the public server. In the exfiltration case, the data is mainly directed to the primary DNS server and the secondary DNS server. This shift of destination, in isolation, is not enough to make the exfiltration traffic suspicious or to provide a basis for moving beyond suspicion into investigation. In the benign case, a notable fraction of the traffic is directed to the public DNS server at 8.8.8.8. In the traffic labeled as abusive, this fraction is smaller, and the fraction directed to a private DNS server (the exfiltration target) at 224.0.0.252 is larger. Unfortunately, given the limited nature of SiLK flow records, security analysts have a hard time extracting additional detail about this traffic. To go further, more DNS-specific fields are required. These fields are provided by deep packet inspection (DPI) data in expanded flow records in IPFIX format. While SiLK cannot process these expanded IPFIX flow records, other tools such as Mothra and databases can.

Implementing the Analytic via Mothra

The code sample below shows the analytic implemented in Spark using the Mothra libraries. These libraries allow definition and loading of data frames with network flow record data in either SiLK or IPFIX format. A data frame is a collection of data organized into named columns. Data frames can be manipulated by Spark functions to isolate flows of interest and to summarize those flows. Defining a data frame involves identifying the columns and the data to populate the columns. In the code sample, the columns are defined by the spark.read.fields function, and the data frame is populated from either the captured benign traffic or the captured exfiltration traffic via Mothra’s ipfix function. Together, these functions establish the data frame named data.

The data frame named result is constructed from data via a series of filtering and summarization functions. The initial filter restricts the records to traffic labeled as DNS traffic and to records that contain DNS resource record queries or responses. The select function that follows isolates specific record features for summarization: time, traffic source and destination, byte and packet volumes, DNS names, DNS flags, and DNS resource record types. The groupBy function generates the summarization for each unique combination of DNS name and resource record type. The agg function specifies that the summarization contain the count of flow records, the counts of distinct source and destination IP addresses, and the totals for bytes and packets. The filter functions after the summarization restrict output to just those groups showing a bytes-per-packet ratio of more than 70 with fewer than three entries in the DNS name list. This last filter excludes summarizations of traffic that is large only due to the length of the response list rather than to the length of individual queries.

This filtering and summarization process creates a profile of large DNS requests and responses (separated by DNS flag values). The use of DNS names as a grouping value allows the analytic to distinguish repeated queries to similar domains. The counts of source and destination IP addresses allow the analyst to distinguish repeated traffic to a few locations instead of rare traffic to multiple locations or from multiple sources.

    // Assumes a spark-shell session, where spark and spark.implicits._
    // are already in scope.
    val data_dir = ".../path/to/data"

    import org.cert.netsa.mothra.datasources._
    import org.cert.netsa.mothra.datasources.ipfix.IPFIXFields
    import org.apache.spark.sql.functions._

    // In dnsIDBenign.sc:
    val data_file = s"$data_dir/light_benign.ipfix"
    // In dnsIDAbuse.sc:
    // val data_file =
    //   s"$data_dir/light_compressed.ipfix"

    // Load the default flow fields plus the DNS deep packet inspection fields.
    val data = {
      spark.read.fields(
        IPFIXFields.default, IPFIXFields.dpi.dns
      ).ipfix(data_file)
    }

    val result = {
      data
        // Keep flows labeled as DNS that carry at least one DNS record.
        .filter(($"silkAppLabel" === 53) &&
                (size($"dnsRecordList") > 0))
        .select(
          $"startTime",
          $"sourceIPAddress",
          $"destinationIPAddress",
          $"octetCount",
          $"packetCount",
          $"dnsRecordList.dnsRRType" as "dnsRRType",
          $"dnsRecordList.dnsQueryResponse" as "dnsQR",
          $"dnsRecordList.dnsResponseCode" as "dnsResponse",
          $"dnsRecordList.dnsName" as "dnsName")
        // Summarize per combination of DNS name list and record type list.
        .groupBy($"dnsName", $"dnsRRType")
        .agg(count($"*") as "flows",
             countDistinct($"sourceIPAddress") as "#sIP",
             countDistinct($"destinationIPAddress") as "#dIP",
             sum($"octetCount") as "bytes",
             sum($"packetCount") as "packets")
        // .filter($"packets" > 20)
        // Keep groups averaging more than 70 bytes per packet ...
        .filter($"bytes" / $"packets" > 70)
        // ... with fewer than three entries in the DNS name list.
        .filter(size($"dnsName") < 3)
        .orderBy($"bytes".desc)
    }

    result.show(20, false)

The output below shows the results of running the analytic on the benign data (dnsIDBenign.sc) and on the compressed data (dnsIDAbuse.sc), the same data sets used in the preceding SiLK discussion. The presence of multicast addresses (224/8 and 239/8 CIDR blocks) and RFC 1918 private addresses (192.168/16 CIDR block) is due to this data coming from an artificial collection environment instead of live Internet traffic capture.

Contrasting the benign output against the abuse output, we see a smaller number of lookup addresses being queried in the abuse results and a much quicker drop-off in the number of queries per host. In the benign results, there are six DNS names that are queried repeatedly; in the abuse results, there are two. All of the queries shown are PTR (reverse, RRType=12) queries, and all are going to the same server. Among the high-volume DNS names, the maximum average packet length is slightly larger for the abuse data than for the benign data (81 vs. 78). Taken together, these differences show a slow-and-steady release of additional data as part of the DNS data transfer, which reflects the file transfer taking place.
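The average packet lengths quoted above can be reproduced from the bytes and packets columns of the two output tables in this post. The Scala sketch below copies those columns as they appear in the tables. The 500-packet cutoff used to decide which DNS names count as high volume is an assumption made for illustration, as are the object and function names.

```scala
object PacketLengthSketch {
  // (dnsName, bytes, packets) rows copied from the output tables.
  type Row = (String, Long, Long)

  val benign: Seq[Row] = Seq(
    ("252.0.0.224.in-addr.arpa.", 416539L, 5901L),
    ("150.20.168.192.in-addr.arpa.", 242585L, 3125L),
    ("200.20.168.192.in-addr.arpa.", 134756L, 1836L),
    ("15.20.168.192.in-addr.arpa.", 133490L, 1844L),
    ("100.20.168.192.in-addr.arpa.", 112173L, 1533L),
    ("2.20.168.192.in-addr.arpa.", 91734L, 1288L),
    ("3.20.168.192.in-addr.arpa.", 45438L, 640L),
    ("_ipps._tcp.local., _ipp._tcp.local.", 13161L, 136L),
    ("250.255.255.239.in-addr.arpa.", 11328L, 152L),
    ("101.20.168.192.in-addr.arpa.", 4666L, 64L)
  )

  val abuse: Seq[Row] = Seq(
    ("252.0.0.224.in-addr.arpa.", 191398L, 2696L),
    ("2.20.168.192.in-addr.arpa.", 130725L, 1615L),
    ("150.20.168.192.in-addr.arpa.", 63606L, 866L),
    ("200.20.168.192.in-addr.arpa.", 57686L, 788L),
    ("15.20.168.192.in-addr.arpa.", 56492L, 781L),
    ("100.20.168.192.in-addr.arpa.", 50738L, 694L),
    ("3.20.168.192.in-addr.arpa.", 17750L, 250L),
    ("250.255.255.239.in-addr.arpa.", 4736L, 64L),
    ("_ipps._tcp.local., _ipp._tcp.local.", 4467L, 51L),
    ("_ipp._tcp.local., _ipps._tcp.local.", 1782L, 19L)
  )

  // Average bytes per packet for one summarized row.
  def avgLength(row: Row): Double = row._2.toDouble / row._3

  // Largest average packet length among rows with at least minPackets
  // packets. Assumes at least one row meets the cutoff.
  def maxAvgLength(rows: Seq[Row], minPackets: Long): Double =
    rows.filter(_._3 >= minPackets).map(avgLength).max
}
```

With the assumed cutoff of 500 packets, math.round(PacketLengthSketch.maxAvgLength(PacketLengthSketch.benign, 500L)) gives 78 and the same call on PacketLengthSketch.abuse gives 81. The cutoff matters because some low-volume multicast DNS rows have larger averages than any high-volume row.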

dnsIDBenign.sc output:

    +-------------------------------------+---------+-----+----+----+------+-------+
    |dnsName                              |dnsRRType|flows|#sIP|#dIP|bytes |packets|
    +-------------------------------------+---------+-----+----+----+------+-------+
    |[252.0.0.224.in-addr.arpa.]          |[12]     |2835 |1   |1   |416539|5901   |
    |[150.20.168.192.in-addr.arpa.]       |[12]     |982  |1   |1   |242585|3125   |
    |[200.20.168.192.in-addr.arpa.]       |[12]     |895  |1   |1   |134756|1836   |
    |[15.20.168.192.in-addr.arpa.]        |[12]     |901  |1   |1   |133490|1844   |
    |[100.20.168.192.in-addr.arpa.]       |[12]     |757  |1   |1   |112173|1533   |
    |[2.20.168.192.in-addr.arpa.]         |[12]     |635  |1   |1   |91734 |1288   |
    |[3.20.168.192.in-addr.arpa.]         |[12]     |315  |1   |1   |45438 |640    |
    |[_ipps._tcp.local., _ipp._tcp.local.]|[12, 12] |122  |32  |1   |13161 |136    |
    |[250.255.255.239.in-addr.arpa.]      |[12]     |74   |1   |1   |11328 |152    |
    |[101.20.168.192.in-addr.arpa.]       |[12]     |31   |1   |1   |4666  |64     |
    +-------------------------------------+---------+-----+----+----+------+-------+
    only showing top 10 rows

dnsIDAbuse.sc output:

    +-------------------------------------+---------+-----+----+----+------+-------+
    |dnsName                              |dnsRRType|flows|#sIP|#dIP|bytes |packets|
    +-------------------------------------+---------+-----+----+----+------+-------+
    |[252.0.0.224.in-addr.arpa.]          |[12]     |1260 |1   |1   |191398|2696   |
    |[2.20.168.192.in-addr.arpa.]         |[12]     |255  |1   |1   |130725|1615   |
    |[150.20.168.192.in-addr.arpa.]       |[12]     |416  |1   |1   |63606 |866    |
    |[200.20.168.192.in-addr.arpa.]       |[12]     |388  |1   |1   |57686 |788    |
    |[15.20.168.192.in-addr.arpa.]        |[12]     |379  |1   |1   |56492 |781    |
    |[100.20.168.192.in-addr.arpa.]       |[12]     |340  |1   |1   |50738 |694    |
    |[3.20.168.192.in-addr.arpa.]         |[12]     |125  |1   |1   |17750 |250    |
    |[250.255.255.239.in-addr.arpa.]      |[12]     |32   |1   |1   |4736  |64     |
    |[_ipps._tcp.local., _ipp._tcp.local.]|[12, 12] |46   |30  |1   |4467  |51     |
    |[_ipp._tcp.local., _ipps._tcp.local.]|[12, 12] |13   |9   |1   |1782  |19     |
    +-------------------------------------+---------+-----+----+----+------+-------+
    only showing top 10 rows

Understanding Data Exfiltration

Whichever form of tooling is used, analysts often need an understanding of the data transfers from their network. Repetitive queries for DNS resolution should be rather rare—caching should eliminate many of these repetitions. As repetitive queries for resolution are identified, several groups of hosts may be found:

- Hosts that generate repetitive queries not indicative of exfiltration of data are likely to exist, characterized by very consistent query size, periodic timing, and the use of expected name servers.

- Hosts that generate repetitive queries with unusual name servers or timing may require further investigation.

- Hosts that generate repetitive queries with unusual name servers or query sizes should be examined carefully to identify potential exfiltration.
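The three groups above can be expressed as a small triage function. The Scala sketch below is one possible reading of the list, not a prescribed procedure: the HostProfile fields are hypothetical yes/no judgments that an analyst or an upstream analytic would have to supply. Because unusual name servers appear in both the second and third groups, the sketch assumes the more cautious group wins.

```scala
object RepetitiveQueryTriage {
  sealed trait Verdict
  case object LikelyBenign extends Verdict
  case object Investigate extends Verdict
  case object ExamineForExfiltration extends Verdict

  // Hypothetical per-host summary of its repetitive DNS queries.
  final case class HostProfile(
    host: String,
    expectedNameServer: Boolean,  // queries go to expected name servers
    periodicTiming: Boolean,      // queries recur on a regular schedule
    consistentQuerySize: Boolean  // query sizes are very consistent
  )

  def triage(p: HostProfile): Verdict =
    // Third group: unusual name servers or unusual query sizes.
    if (!p.expectedNameServer || !p.consistentQuerySize) ExamineForExfiltration
    // Second group: here, only unusual timing remains as a trigger.
    else if (!p.periodicTiming) Investigate
    // First group: consistent size, periodic timing, expected servers.
    else LikelyBenign
}
```

For example, a host with expected name servers and consistent query sizes but irregular timing is marked Investigate, while a host that sends queries to an unexpected name server is marked ExamineForExfiltration regardless of the other two properties.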

The impact of these hosts on network security will vary depending on the range and criticality of assets those hosts access, but some of the traffic may demand immediate response.

What Might a Security Analyst Want to Know

This post is part of a series addressing a simple question: What might a security analyst want to know at the start of each shift regarding the network? In each post we will discuss one answer to this question and application of a variety of tools that may implement that answer. Our goal is to provide some key observations that help analysts monitor and defend their networks, focusing on useful ongoing measures, rather than those specific to one event, incident, or issue.

We will not focus on signature-based detection, since a variety of resources already address it, including intrusion detection systems (IDS), intrusion prevention systems (IPS), and antivirus products. The tools used in these articles will primarily be part of the CERT/NetSA Analysis Suite, but we will include other tools if helpful. Earlier posts examined tools for tracking software updates and proxy bypass.

Our approach will be to highlight a given analytic, discuss the motivation behind the analytic, and provide the application as a worked example. The worked example, by intention, is illustrative rather than exhaustive. The decision of what analytics to deploy, and how, is left to the reader.

If there are specific behaviors that you would like to suggest, please send them by email to netsa-help@cert.org with “SOC Analytics Idea” in the subject line.

Additional Resources

Read the SEI Blog post Security Analytics: Tracking Proxy Bypass, which explores how to track the amount of network traffic that is evading security proxies.

Read the SEI Blog post Security Analytics: Tracking Software Updates, which presents an analytic for tracking software updates from official vendor locations.

Read the analyst’s handbook for SiLK version 3.15.0 and later, Network Traffic Analysis with SiLK, which provides more than 100 worked examples, mostly derived from analytics.

Read the CERT NetSA Security Suite Analysis Pipeline webpage, which provides several worked examples of analytics.
