Traffic Analysis for Network Security: Two Approaches for Going Beyond Network Flow Data

By the close of 2016, "Annual global IP traffic will pass the zettabyte ([ZB]; 1000 exabytes [EB]) threshold and will reach 2.3 ZBs per year by 2020" according to Cisco's Visual Networking Index. The report further states that in the same time frame smartphone traffic will exceed PC traffic. While capturing and evaluating network traffic enables defenders of large-scale organizational networks to generate security alerts and identify intrusions, operators of even comparatively modest networks struggle to build a full, comprehensive view of network activity. To make wise security decisions, operators need to understand the mission activity on their network and the threats to that activity, an understanding referred to as network situational awareness. This blog post examines two approaches to network security analysis that use network flow data and go beyond it to gain situational awareness and improve security.

Benefits of Network Flow Analysis

Network flow data is aggregated packet header data (but no content capture) for a communication between a source and a destination. Communications are distinguished by the protocol-level information in the header and the proximity in time (i.e., a flow contains aggregated header information for all packets that use the same protocol settings within a designated time window). There are several reasons that network flow data is a useful format for analyzing network traffic:
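The aggregation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular flow collector works: the `Packet` fields, the `aggregate_flows` name, and the 30-second idle timeout standing in for the "designated time window" are all assumptions made for the example.

```python
from collections import namedtuple

# Hypothetical packet header record; only header fields, no payload.
Packet = namedtuple("Packet", "ts src dst sport dport proto size")

def aggregate_flows(packets, idle_timeout=30.0):
    """Group packet headers into flows keyed by protocol-level settings.

    A new flow starts when a packet arrives more than idle_timeout
    seconds after the previous packet with the same key (assumed rule).
    """
    active = {}    # key -> flow record that is still open
    finished = []  # flows closed by the idle timeout
    for p in sorted(packets, key=lambda p: p.ts):
        key = (p.src, p.dst, p.sport, p.dport, p.proto)
        flow = active.get(key)
        if flow is not None and p.ts - flow["end"] > idle_timeout:
            finished.append(flow)
            flow = None
        if flow is None:
            flow = {"key": key, "start": p.ts, "end": p.ts,
                    "packets": 0, "bytes": 0}
            active[key] = flow
        flow["end"] = p.ts
        flow["packets"] += 1
        flow["bytes"] += p.size
    finished.extend(active.values())
    return finished

# Three packets with identical header settings; the third arrives late.
packets = [
    Packet(0.0, "10.0.0.1", "198.51.100.3", 40000, 80, 6, 100),
    Packet(1.0, "10.0.0.1", "198.51.100.3", 40000, 80, 6, 1400),
    Packet(100.0, "10.0.0.1", "198.51.100.3", 40000, 80, 6, 60),
]
flows = aggregate_flows(packets)
```

Here the first two packets collapse into one flow record and the late packet opens a second one, which is why a flow record stays small no matter how much content the packets carried.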

- Because network flow omits the content of each piece of traffic, its collection is highly concise. Network flow enables analysts to record the presence of a communication in a very small footprint, which means the data can be collected economically across a large network and stored for months to years. The absence of content also limits or eliminates personally identifying information (PII).

- Network flow contains sufficient indicative information to allow network defenders to perform a variety of analyses to search for threats or context information that can help defenders understand what is going on. For example, when examining web traffic, network flow data would contain the source and destination IP addresses involved, the amount of data sent, the number of packets, and the time duration of the communication.

Most web traffic from server to client is quick, with high byte volume and relatively modest numbers of packets (since the server is sending relatively full packets to the client). If traffic from server to client involves more modest byte volumes and higher numbers of packets over a longer timeframe, then analysts can reasonably question whether it is normal web traffic. If such abnormal flows occur outside of normal workday patterns, suspicion rises further. On the other hand, network defenders and analysts must have enough context to identify key websites for users and make sure that they are not blocked.
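As a rough sketch of this reasoning, the check below flags a server-to-client web flow that has small packets, a long duration, or a start time outside the workday. The function name, the flow fields, and every threshold (500 bytes per packet, 60 seconds, an 8-to-18 workday) are illustrative assumptions; a real deployment would derive them from its own traffic.

```python
from datetime import datetime

def unusual_web_flow_reasons(flow, min_avg_packet_bytes=500,
                             max_duration_s=60.0, workday=(8, 18)):
    """Return the reasons a server-to-client web flow looks atypical."""
    reasons = []
    avg = flow["bytes"] / flow["packets"] if flow["packets"] else 0.0
    if avg < min_avg_packet_bytes:
        reasons.append("small packets")      # server is not sending full packets
    if flow["duration_s"] > max_duration_s:
        reasons.append("long duration")      # typical web responses are quick
    if not (workday[0] <= flow["start"].hour < workday[1]):
        reasons.append("outside workday")
    return reasons

typical = {"bytes": 140_000, "packets": 100, "duration_s": 2.0,
           "start": datetime(2016, 10, 3, 10, 0)}
odd = {"bytes": 20_000, "packets": 400, "duration_s": 900.0,
       "start": datetime(2016, 10, 3, 2, 0)}

typical_reasons = unusual_web_flow_reasons(typical)
odd_reasons = unusual_web_flow_reasons(odd)
```

A flagged flow is a prompt for further analysis rather than a verdict, since key websites used legitimately may also trip one of these checks.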

Network flow can also be used to identify a likely source of a spam email within a five-minute window of its arrival on a network and implement remediation. For example, a rolling block can reduce spam traffic by as much as 75 percent by rapidly blocking out the source IP address, even for short periods of time.
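The post does not define the mechanics of a rolling block, so the sketch below takes one plausible reading: each identified source IP address is blocked for a short, fixed period, after which the block lapses on its own. The `RollingBlock` class, its method names, and the 300-second duration are assumptions made for illustration.

```python
class RollingBlock:
    """Time-limited block list keyed by source IP address (illustrative)."""

    def __init__(self, block_seconds=300):
        self.block_seconds = block_seconds
        self._expires = {}  # source IP -> time at which the block lapses

    def block(self, ip, now):
        """Start (or restart) a block on ip at time `now` (in seconds)."""
        self._expires[ip] = now + self.block_seconds

    def is_blocked(self, ip, now):
        """Report whether ip is blocked at `now`, dropping lapsed entries."""
        expiry = self._expires.get(ip)
        if expiry is None:
            return False
        if now >= expiry:
            del self._expires[ip]
            return False
        return True

blocks = RollingBlock(block_seconds=300)
blocks.block("203.0.113.7", now=1000)
during = blocks.is_blocked("203.0.113.7", now=1100)
after = blocks.is_blocked("203.0.113.7", now=1300)
other = blocks.is_blocked("203.0.113.8", now=1100)
```

Letting entries expire keeps the block list small and limits the harm if an address is blocked by mistake.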

Combining Network Flow with Other Data Sources

Although network flow is a powerful data source, it is not the only source of data that analysts and security staff should use to analyze network traffic. Content-based attacks, such as SQL injections, strike through the data (dynamic database queries that include user-supplied input) and allow attackers to execute malicious SQL statements on a web application's database server. If analysts limit their examination to network flow data after a web application attack, the lack of content in that data means that they would not be able to determine that the event was an SQL injection.

In large organizations, analysts contend with so much data traffic that they need to employ a mix of methods to secure a network. Starting from an event, analysts must be able to generalize their analysis and expand its focus so that they capture all the aspects relevant to understanding an unexpected change in network traffic (bottom up). These changes can be benign: for example, a new service comes out, users begin to use it, and security measures need to protect this new service. Analysts also need to start with a model of network behavior and then narrow the focus to specifically investigate deviations from this model that may reflect intrusions on the network (top down). Again, such deviations may be benign, for example, traffic involving a group of developers working unusually late hours and accessing sites not normally found in the network traffic.

Defenders of information networks in large-scale organizations do not rely on network flow data alone. Both top-down and bottom-up analyses combine network flow data with other information from the network, including

- intrusion detection system (IDS) alerts, generated by rules that recognize known intrusion traffic or that indicate significant anomalies from expected network traffic

- network management data (i.e., vulnerability scans, configuration checks, and population checks with respect to software versions, which can be done with network management software)

- full packet capture of traffic relating to servers or services of specific concern

- firewall records - blocked traffic or unexpected termination of connections, also proxy logs to identify service requests that were permitted or blocked

- server logs - host and application level events recorded with respect to mission related services

- network reputation data - where the addresses are registered to and the degree of previously-seen undesirable traffic related to those addresses

- active or passive domain name resolutions - where domain names are mapped to IP addresses, either by direct query or by passively recording the results of previous queries

Building a Common Understanding of Network Security

There are two basic approaches to building a common understanding of network security:

- Bottom up, where analysts start with events of interest (possibly identified from network flow data) and then pivot to other pieces of network information (possibly firewall records or IDS alerts) to add context for the analysis. For example, given a web service outage (identified from network flow data by failed attempts to contact the server), the analyst may examine IDS alerts (to find attempted or successful attacks against that service) or server logs (to find other indications as to why the outage occurred).

In another example, given an IDS alert indicating that the machine with IP address 198.51.100.3 is receiving an attempted SQL injection, a key issue would be whether past traffic patterns indicate that 198.51.100.3 normally supports database services. If the host does support database services, analysts then examine host configuration and vulnerability logs to drill down and further understand the event. If it does not, the analysts may recommend auditing the host to determine whether an unauthorized service has been started.

This type of specialized, event-specific approach works in two directions. Analysts can start from an event and use network flow data to place that event into context and potentially identify other related events that are part of the same pattern. Alternatively, they can start from network flow data and pivot to other data sources to understand the event more completely.
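The SQL injection example can be sketched as a simple pivot from an alert to flow history. The flow fields, the function names, and the `min_flows` threshold are hypothetical; the port set lists the default ports for Microsoft SQL Server, Oracle, MySQL, and PostgreSQL, and a real network may well use others.

```python
# Default database ports (MS SQL Server, Oracle, MySQL, PostgreSQL);
# treating these as "database services" is an assumption of this sketch.
DB_PORTS = {1433, 1521, 3306, 5432}

def serves_database_traffic(flows, host, min_flows=10):
    """True if past flows show `host` regularly contacted on a database port."""
    count = sum(1 for f in flows
                if f["dst"] == host and f["dport"] in DB_PORTS)
    return count >= min_flows

def triage_sql_injection_alert(flows, host):
    """Pick the next step for an IDS alert about SQL injection against host."""
    if serves_database_traffic(flows, host):
        return "review host configuration and vulnerability logs"
    return "audit host for unauthorized services"

history = [{"dst": "198.51.100.3", "dport": 3306} for _ in range(12)]
history.append({"dst": "198.51.100.9", "dport": 80})

known_db_host = triage_sql_injection_alert(history, "198.51.100.3")
unexpected_host = triage_sql_injection_alert(history, "198.51.100.9")
```

The value of the flow history here is context: the same alert leads to different follow-up work depending on what the host normally does.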

- Top-down, where analysts examine broad general patterns of usage within network traffic. For example, there is a common pattern of network traffic behavior known as the diurnal curve, where traffic usage in a network ramps up at the beginning of the workday at about 8:30 a.m. and continues throughout the day until about 4 p.m. followed by a natural drop off at the end of the workday. This curve is offset depending on the local time.

When examining this curve, analysts should look for departures from the diurnal curve, sudden interruptions, or a sudden high spike. In the event of a pattern anomaly, an analyst might then turn to other data sources to drill down and better understand the divergence. For example, an examination of firewall records might help analysts identify interruptions as blocked traffic or network connections.
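One simple way to look for departures from the diurnal curve is to compare each hour of the current day with the same hour on previous days. The sketch below does this with a mean and standard deviation per hour; the function name, the hourly byte-count input format, and the factor `k=3.0` are assumptions, and other baselining methods would serve equally well.

```python
from statistics import mean, pstdev

def diurnal_anomalies(history, today, k=3.0):
    """Return the hours (0-23) where today's volume departs from the baseline.

    history: list of past days, each a list of 24 hourly byte counts.
    today:   list of 24 hourly byte counts for the day under review.
    """
    flagged = []
    for hour in range(24):
        past = [day[hour] for day in history]
        mu = mean(past)
        sigma = pstdev(past)
        if abs(today[hour] - mu) > k * sigma:
            flagged.append(hour)
    return flagged

# Toy baseline: quiet overnight, busy from 08:00 through 16:59.
base = [10] * 8 + [100] * 9 + [10] * 7
history = [[v + d for v in base] for d in (-1, 0, 1)]

today = list(base)
today[3] = 500   # sudden overnight spike
today[12] = 0    # sudden midday interruption

flagged_hours = diurnal_anomalies(history, today)
```

The flagged hours are the starting points from which an analyst would pivot to firewall records or other sources to explain the divergence.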

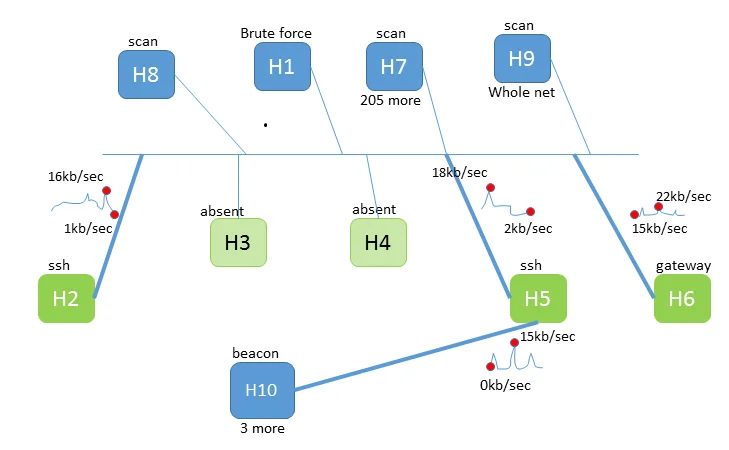

No matter which approach a defender uses, network attackers are often good at hiding behaviors with respect to any single data source. For example, if network attackers have styled their attacks so that they can't be detected through antivirus software or common IDS rules, analysts must then rely on network flow analysis, changes in service behaviors, or log file entries. Figure 1 shows a visualization of a fictitious attack, merging IDS alerts (which produced the attack labels on the hosts), network population information (which produced the presence or absence of hosts at an IP address), and network flow data (which produced the traffic volume timelines shown on network links). Such a visualization can aid in both understanding and responding to attacks.

Figure 1: A Situational Awareness Graphic for a Small Network Merging IDS Data, Host Inventory Data, and Network Flow Data

On a separate front, many organizations with large networks have moved to managing systems automatically. Automatic analysis of network flow can provide confirmation of the services provided by systems and of the operating system in use (through revealing network behaviors), as well as of which known vulnerabilities are present, as determined through responses to network scans.

If network defenders compare the snapshot view of the network from network management software with a behavioral view from network flow data, they can gain a more comprehensive view of network traffic. For example, by focusing on behavior, analysts can determine which devices respond to web connection requests; comparing this with those authorized through network management software might reveal gaps in the authorization lists.

Without both views, it can be hard for network analysts to determine whether a given server is authorized or unauthorized. Sometimes the unauthorized server can be a printer or a wireless router that uses a web-based interface for configuration. While you might have that interface available to network personnel, you really don't want to have it available to the internet.
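The comparison between the behavioral view and the authorized list amounts to a set difference. In the sketch below, a device counts as a web responder if flow data shows it sending packets from a web port; the flow fields, the function name, and the choice of ports 80 and 443 are assumptions for illustration.

```python
def unauthorized_web_servers(flows, authorized, web_ports=(80, 443)):
    """Hosts seen answering web requests that are not on the authorized list."""
    responders = {
        f["src"] for f in flows
        if f["sport"] in web_ports and f["packets"] > 0
    }
    return sorted(responders - set(authorized))

flows = [
    {"src": "192.0.2.10", "sport": 443, "packets": 12},   # known web server
    {"src": "192.0.2.55", "sport": 80, "packets": 4},     # e.g., a printer's admin page
    {"src": "192.0.2.77", "sport": 51515, "packets": 9},  # client traffic, not a server
]
authorized = {"192.0.2.10"}

gaps = unauthorized_web_servers(flows, authorized)
```

Each address in the result is either a gap in the authorization list or a device, such as a printer or wireless router, whose web interface should not be reachable.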

Wrapping Up and Looking Ahead

While network flow data has proven quite useful as a record of network traffic, there are issues that still remain when using it with other data sources. The time (and possibly the order) of events may differ between data sources (depending on the interleaving of events as observed by each data source). External-facing and internal-facing IP addresses may be different, and in the case of Network Address Translation (NAT), a single external-facing IP address (seen by the firewall or network flow data) may take the place of many internal-facing IP addresses (seen in host logs, IDS alerts, and network management data). Currently, this inconsistency of characteristics is dealt with on a case-by-case basis. Establishing a robust correspondence and using this correspondence in analysis remains an active area of research.

Achieving network situational awareness depends on an organization's ability to effectively monitor its networks and, ultimately, to analyze that data to detect malicious activity. There are several resources available to network analysts and security defenders as they contend with a rapid-fire increase in global internet protocol traffic:

- The CERT Division's 2017 FloCon conference will explore advances in network traffic analytics that leverage one or more data types using the automation of well-known and novel techniques. This exploration will be reflected in conference presentations, discussion sessions, and in training offerings.

- The Network Situational Awareness (NetSA) group at CERT has developed and maintains a suite of open source tools for monitoring large-scale networks using flow data. These tools have grown out of the work of the SiLK project and ongoing efforts to integrate this work into a unified, standards-compliant flow collection and analysis platform.

- CERT researchers have also published a series of case studies that are available as technical reports. In particular, the report Network Profiling Using Flow provides a step-by-step guide for profiling and discovering public-facing assets on a network using network flow data.

We welcome your feedback about this work in the comments section below.

Additional Resources

Learn more about FloCon
