Improving Safety-critical Systems with a Reliability Validation & Improvement Framework

Aircraft and other safety-critical systems increasingly rely on software to provide their functionality. The exponential growth of software in safety-critical systems has pushed the cost of building aircraft to the limit of affordability. Given this increase, the current practice of build-then-test is no longer feasible. This blog posting describes recent work at the SEI to improve the quality of software-reliant systems through an approach known as the Reliability Validation and Improvement Framework, which is intended to lead to early defect discovery and incremental end-to-end validation.

A Fresh Look at Engineering Reliable Systems

Studies by the National Institute of Standards and Technology and the National Aeronautics and Space Administration show that 70 percent of software defects are introduced during the requirements and architecture design phases. Moreover, 80 percent of those defects are not discovered until system integration test or later in the development cycle. In their paper "Software Defect Reduction Top 10 List," researchers Barry Boehm and Victor Basili wrote that "finding and fixing a software problem after delivery is often 100 times more expensive than finding and fixing it during the requirements and design phase." Aircraft industry and International Council on Systems Engineering data show a rework cost multiplier of 300 to 1,000.

Much of this defect leakage and the resulting cost penalty are due to incomplete and ambiguous requirements and mismatched assumptions in the interaction between the components of embedded software system architectures. To address these problems, I have developed--together with fellow SEI researchers John Goodenough, Arie Gurfinkel, Charles Weinstock, and Lutz Wrage--the Reliability Validation and Improvement Framework, which takes a fresh look at engineering reliable systems.

Reliability engineering has its roots in engineering physical systems. The focus of reliability engineering has been on using historical data to predict mechanical failures in physical parts, assuming that design errors have little impact due to slowly evolving designs. Defects in software systems, however, are design errors for which reliability predictions based on historical data have been a challenge. At the same time, embedded software has become the key to system integration and thus the driver for system reliability. We needed a new perspective on reliability because it is unrealistic to assume that software has zero defects.

Our work on developing a framework began when we were approached by the U.S. Army Aviation and Missile Research Development and Engineering Center (AMRDEC) Aviation Engineering Directorate (AED), the agency responsible for signing off on the flight worthiness of Army rotorcraft. AMRDEC wanted a more reliable approach than "testing until time and budgets are exhausted" to qualify increasingly software-reliant, safety-critical systems.

Four Pillars for Improving the Quality of Safety-Critical Software-Reliant Systems

We needed a new approach for making software-reliant systems more reliable, one that allows us to discover problems as early as possible in the development life cycle. For safety-critical systems, these problems include not only defects in functional design but also failures to meet operational quality attributes, such as performance, timing, safety, reliability, and security. Our approach needed not only to identify defects before a system is built, but also to expose issues that are hard to test for. In addition, the approach needed to ensure that unavoidable failures are addressed through fault management and that unhandled faults are identified, providing resilience to counter unplanned usage and unexpected conditions. The Reliability Validation and Improvement Framework that we developed incorporates four engineering technologies to address the challenges outlined above:

- formalization of mission and safety-critical requirements at the system and software level. Requirements on a system--the first pillar of our framework--are typically determined by business needs and operational use scenarios. They are often developed by system engineers and may evolve over time. As Boehm points out in his 2006 paper, "Some Future Trends and Implications for Systems and Software Engineering Processes," there is a gap in translating system requirements, especially non-functional ones, into requirements for embedded software. Individual software units are then developed without this contextual knowledge, making assumptions about the physical system, computer hardware platform, and the interaction with other software tasks, each of which can affect functional and non-functional system properties.

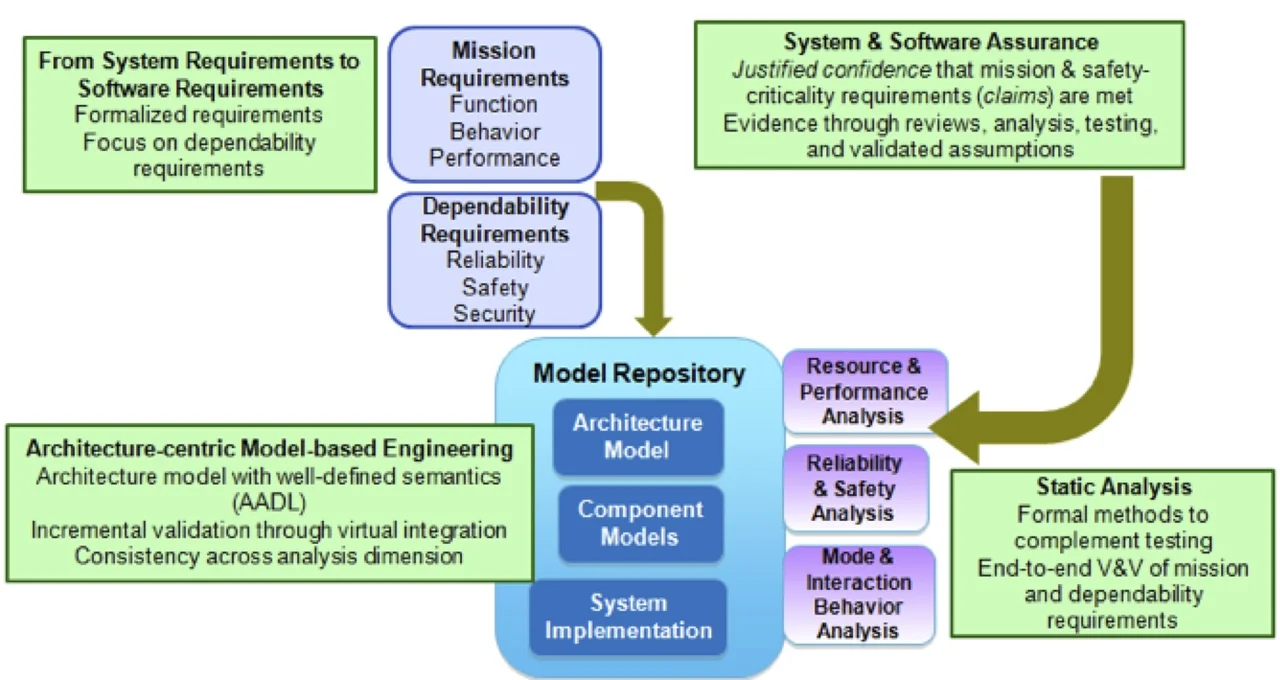

Our framework focuses on capturing the "shalls" of a system by specifying mission capabilities under normal conditions (mission requirements such as functionality, behavior, and performance), as well as the "shall nots," by specifying how the system is expected to perform when things go wrong (dependability requirements, such as safety, reliability, and security). Requirements are associated with the system in the context of assumptions about the operational environment and decomposed into requirements of different components of the system.

This approach allows the validation and verification of requirements and assumptions in the correct context. We have identified an excellent resource, the Requirements Engineering Management Handbook developed by a formal methods group at Rockwell Collins for the Federal Aviation Administration (FAA). The handbook defines an 11-step process for capturing requirements in a more systematic and formal way using an explicit architecture model as context. This approach allows for completeness and consistency checking of requirement specifications.
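As a rough illustration of what completeness and consistency checking of a decomposed requirement can look like, here is a minimal Python sketch. It is not the handbook's process or any AADL tooling: the `Requirement` class, the `check_decomposition` function, the component names, and the latency figures are all hypothetical. It assumes a single end-to-end latency requirement that is split into per-component budgets along a known data flow.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Requirement:
    req_id: str
    component: str             # component the requirement is allocated to
    latency_budget_ms: float   # hypothetical quantitative property
    derived: List["Requirement"] = field(default_factory=list)


def check_decomposition(req: Requirement, flow_components: List[str]) -> List[str]:
    """Check one system requirement against its derived component requirements."""
    findings = []
    # Completeness: every component on the flow must carry a derived requirement.
    allocated = {d.component for d in req.derived}
    for component in flow_components:
        if component not in allocated:
            findings.append(
                f"incomplete: {component} has no requirement derived from {req.req_id}"
            )
    # Consistency: the component budgets must fit within the system budget.
    total = sum(d.latency_budget_ms for d in req.derived)
    if total > req.latency_budget_ms:
        findings.append(
            f"inconsistent: budgets sum to {total} ms, "
            f"exceeding {req.latency_budget_ms} ms for {req.req_id}"
        )
    return findings


# Hypothetical flow and allocation; the actuator has been overlooked.
flow = ["sensor", "filter", "controller", "actuator"]
system_req = Requirement("SYS-1", "system", 100.0, derived=[
    Requirement("SW-1", "sensor", 20.0),
    Requirement("SW-2", "filter", 30.0),
    Requirement("SW-3", "controller", 40.0),
])

for finding in check_decomposition(system_req, flow):
    print(finding)
```

The point of the sketch is that once requirements are tied to an explicit architecture, gaps such as the missing actuator allocation can be found mechanically rather than during integration testing.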

- architecture-centric, model-based engineering. The aircraft industry has recognized that software-reliant system development must take an architecture-centric, model-based, analytical approach to address the limitations of conventional build-then-test practices. The industry has embraced virtual system integration to achieve validation through static analysis of integrated architecture and detailed design models. This approach leads to early discovery of software-induced problems, such as timing-related loss of data, control instability due to workload-sensitive latency jitter, and loss of physical redundancy due to load balancing of partitions in a networked system.

This second pillar in our framework uses analyzable architecture models combined with detailed design models and implementations to evolve and validate a system incrementally. The OMG SysML architecture modeling notation is gaining popularity in the system engineering community. For embedded software systems, SysML is complemented by SAE International's Architectural Analysis and Design Language (AADL), a notation I authored that provides a set of concepts with well-defined semantics for representing embedded software system architectures, which include the software task and communication architecture, its deployment on a networked computer platform architecture, and its interaction with the physical system. These semantics lead to precise specification of execution and interaction behavior and timing. A standardized error model extension to AADL supports identification of safety hazards, fault impact analysis, and specification of fault management strategies to help meet reliability and availability requirements.
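To give a feel for fault impact analysis on an architecture model, here is a minimal Python sketch. It is not AADL and does not implement the semantics of the error model extension: the `fault_impact` function and the component names are hypothetical, and the sketch assumes, pessimistically, that a failure propagates along every outgoing connection with no fault management in between.

```python
from collections import deque
from typing import Dict, List


def fault_impact(connections: Dict[str, List[str]], source: str) -> List[str]:
    """Return the components reachable from a failed source, in breadth-first order."""
    affected = []
    seen = {source}
    queue = deque([source])
    while queue:
        component = queue.popleft()
        for downstream in connections.get(component, []):
            if downstream not in seen:
                seen.add(downstream)
                affected.append(downstream)
                queue.append(downstream)
    return affected


# Hypothetical directed connections between components of an embedded system.
connections = {
    "sensor": ["filter"],
    "filter": ["controller"],
    "controller": ["actuator", "display"],
}

print(fault_impact(connections, "filter"))
```

In a real model, each connection would also carry information about which error types propagate and which components mask or handle them, which is what lets the analysis separate handled faults from unhandled ones.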

In this architecture-centric virtual integration approach, the annotated architecture model drives analysis of different functional and non-functional properties by generating analytical models and thereby consistently propagating changes into each analysis, as well as to implementations generated from validated models. The virtual integration approach uses a multi-notation model repository that utilizes standardized model interchange formats and maintains consistency across models, while allowing suppliers and system integrators to utilize their own tool chains.

- static analysis of functional and non-functional system properties. Static analysis is any technique that formally proves system properties from system models prior to deployment and execution. Static analysis complements simulation and traditional testing to validate and verify a system.

This third pillar of our framework focuses on incrementally validating and verifying the virtually integrated system before the actual production software is written. Formal analysis frameworks, such as model checking for verifying functional behavior, and rate monotonic schedulability analysis for assuring timing requirements, are scalable solutions that have been successfully applied to avionics and space systems. Properties defined in the SAE AADL standard and annotations using the standardized Behavior, Error Model, and ARINC653 Partitioned Architecture extensions to AADL support different functional and non-functional analyses from the same model.
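As one concrete instance of such an analysis, the following Python sketch applies rate monotonic schedulability analysis to a small task set. It shows the classic utilization bound test and an iterative response-time calculation, not the analysis of any particular AADL tool. The task names and timing values are made up, and the sketch assumes independent periodic tasks on a single preemptively scheduled processor, with each deadline equal to the task's period.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Task:
    name: str
    period_ms: int  # period; the deadline is assumed equal to the period
    wcet_ms: int    # worst-case execution time


def utilization(tasks: List[Task]) -> float:
    """Total processor utilization of the task set."""
    return sum(t.wcet_ms / t.period_ms for t in tasks)


def liu_layland_bound(n: int) -> float:
    """Sufficient (but not necessary) utilization bound for n tasks."""
    return n * (2 ** (1 / n) - 1)


def response_times(tasks: List[Task]) -> Dict[str, int]:
    """Worst-case response time per task; a shorter period means higher priority."""
    ordered = sorted(tasks, key=lambda t: t.period_ms)
    result = {}
    for i, task in enumerate(ordered):
        higher = ordered[:i]
        r = task.wcet_ms
        while True:
            # Preemption by every higher-priority release within the window r.
            interference = sum(math.ceil(r / h.period_ms) * h.wcet_ms for h in higher)
            nxt = task.wcet_ms + interference
            if nxt == r or nxt > task.period_ms:
                r = nxt
                break  # converged, or the deadline is already missed
            r = nxt
        result[task.name] = r
    return result


def is_schedulable(tasks: List[Task]) -> bool:
    """True if every task finishes within its period in the worst case."""
    times = response_times(tasks)
    return all(times[t.name] <= t.period_ms for t in tasks)


# Hypothetical task set for illustration only.
tasks = [Task("nav", 10, 2), Task("control", 20, 5), Task("display", 50, 10)]
print(utilization(tasks) <= liu_layland_bound(len(tasks)))
print(response_times(tasks), is_schedulable(tasks))
```

For this task set the worst-case response times come out to 2, 7, and 19 ms, all within their periods. The value of running such a check on the model is that a timing problem shows up as soon as periods and execution time estimates are known, well before code is integrated on target hardware.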

- system and software assurance. Safety cases using a goal-structured notation have been used extensively outside the United States to assure safety in nuclear reactors, railroad signaling systems, avionics systems, and other critical systems. A best practice of this fourth pillar of our framework involves the development of evidence in parallel with the system design throughout the development life cycle. This evidence ranges from requirements and design review results and predictive analysis results of virtually integrated systems, to test results to provide justified confidence. This approach records claims about the system, assumptions made in the process, and evidence required to satisfy the claims.

The SEI has been involved in evolving this approach into assurance cases for different system concerns. Assurance cases provide a systematic way of establishing confidence in the qualification of a system and its software by

- recording and tracking the evidence and arguments (as well as context and assumptions) that the claims of meeting system requirements are satisfied by the system design and implementation, and

- making the argument that the evidence is sufficient to provide justified confidence in the qualification.
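The structure of claims, assumptions, and evidence described above can be sketched as a small data model. This is a simplified Python illustration, not an implementation of any assurance case notation or interchange format: the `Claim` and `Evidence` classes, the `unsupported_claims` function, and the example claims are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    description: str
    available: bool  # True once the review, analysis, or test result exists


@dataclass
class Claim:
    statement: str
    assumptions: List[str] = field(default_factory=list)
    evidence: List[Evidence] = field(default_factory=list)
    subclaims: List["Claim"] = field(default_factory=list)


def unsupported_claims(claim: Claim) -> List[str]:
    """Return the statements of claims that lack complete supporting evidence."""
    gaps = []
    missing = [e for e in claim.evidence if not e.available]
    # A claim is a gap if evidence is outstanding, or if it has neither
    # evidence nor subclaims to argue from.
    if missing or (not claim.evidence and not claim.subclaims):
        gaps.append(claim.statement)
    for sub in claim.subclaims:
        gaps.extend(unsupported_claims(sub))
    return gaps


# Hypothetical assurance case fragment.
timing = Claim(
    "Control loop deadlines are met",
    evidence=[
        Evidence("Schedulability analysis of the architecture model", True),
        Evidence("Timing test on target hardware", False),
    ],
)
faults = Claim(
    "Sensor faults are detected and handled",
    evidence=[Evidence("Fault impact analysis", True)],
)
top = Claim(
    "The flight control software is acceptably safe",
    assumptions=["Operating environment matches the documented mission profile"],
    subclaims=[timing, faults],
)

print(unsupported_claims(top))
```

Tracking evidence this way alongside the design shows at any point which claims still rest on results that do not yet exist. Judging whether the available evidence is sufficient remains a matter of argument and review, which the sketch does not attempt to automate.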

Recent developments indicate that assurance cases are being adopted within the United States. For example, the U.S. Food and Drug Administration is exploring the inclusion of assurance case reports into regulatory submissions for infusion pumps. An International Organization for Standardization standard for assurance cases is currently under development.

Overview of the Reliability Validation and Improvement Framework

In the figure above, the four components combine to transform the traditional software development model of a heavily document-driven practice into an analytical practice based on architecture-centric models. As we write in our SEI technical report, the centerpiece of this framework is an architecture-centric model repository supporting multiple modeling notations and a combination of architecture and detailed design models together with source code implementations. The ability to virtually integrate and analyze the models is key to improving reliability: it allows problems to be discovered early in the life cycle and avoids the costlier defect rework that would otherwise occur in later phases.

Since incomplete, ambiguous, and inconsistent requirements contribute 35 percent of system-level defects, it is valuable to formalize requirements to a level that can be validated and verified by static analysis tools. Formalization of requirements establishes a level of confidence by assuring consistency of the specifications and their decomposition into subsystem requirements. The requirements are decomposed in the context of an architecture specification and refined into concrete, formalized specifications for which evidence can be provided through verification and validation activities. This method is reflected in the Requirements Engineering Management Handbook process and supported by the draft Requirement Definition and Analysis Standard extension that is part of the AADL standard suite and applicable to other architecture notations.

The aerospace industry, which already has several well-entrenched safety practices, has embraced System Architecture Virtual Integration (SAVI), which is a multi-year initiative conducted under the auspices of the Aerospace Vehicle Systems Institute (AVSI). SAVI aims to improve current practice and overcome the software cost explosion in aircraft, where software currently makes up 65 percent to 80 percent of the total system cost, with rework accounting for more than half of that.

SAVI has chosen SAE AADL as a key technology and performed several proof-of-concept demonstrations of the virtual integration concept to achieve early discovery of problems. SAVI's current work focuses on demonstrating model-based, end-to-end validation and verification of safety and reliability, as well as defining model repository requirements to facilitate commercial tool vendor adoption. SAVI also has an ongoing effort to assess return on investment and necessary changes to acquisition and qualification processes.

The application of static analysis to requirements, architecture specifications, detailed designs, and implementations leads to an end-to-end validation and verification approach. The research community in the United States and Europe has embraced AADL models as a platform for integrating formal analysis frameworks and transitioning them quickly to industrial settings. An assurance case interchange format is being standardized and evidence tracking in the context of formalized requirements is supported through the Requirement Definition and Analysis extension to AADL.

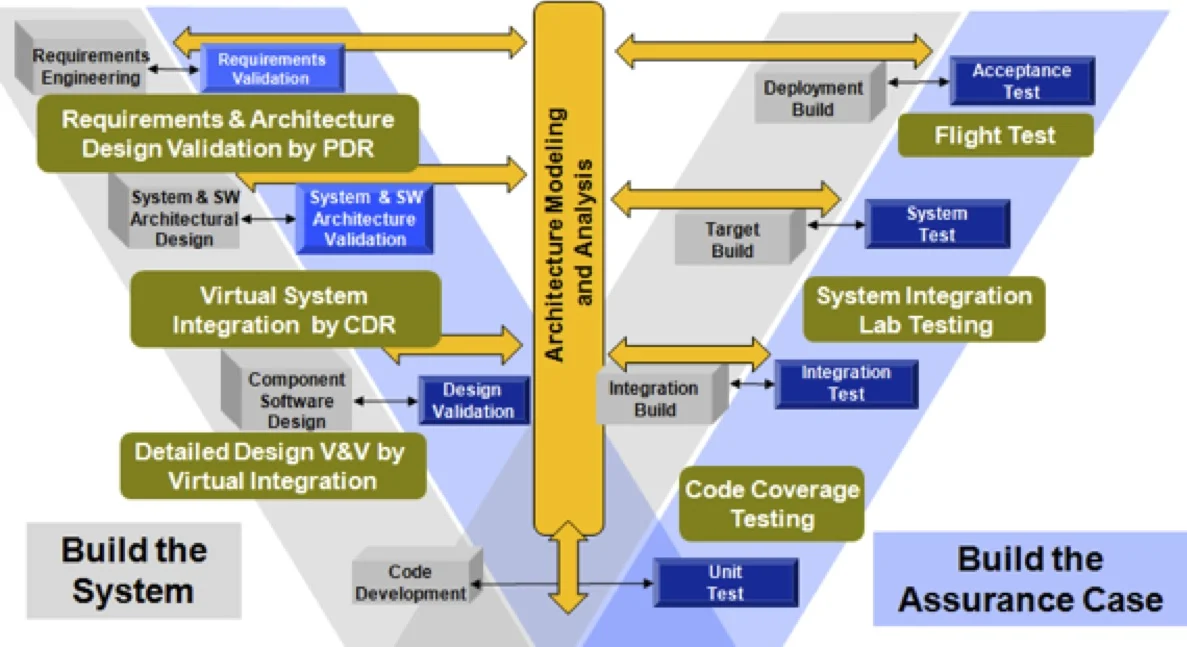

As illustrated in the figure above, our revised software development model is represented by two parallel activities:

- The first activity reflects the development process: build the system. This development process focuses on the creation of design artifacts, from requirement specification, architecture design, detailed design, and code development through the integration, target, and deployment builds.

- The second activity reflects the qualification process: build the assurance case for the system. This qualification process comprises the traditional unit test, integration test, system test, and acceptance test phases. It extends into the early phases of development through the concept of an architecture-centric virtual system integration lab to support validation and verification throughout the life cycle.

Conclusion

At the SEI we continue to provide technical leadership to the SAE AADL standards committee, participate in the SAVI initiative, and codify elements of the four pillars described in this post into various methods (such as the Virtual Upgrade Validation and assurance cases). We also work with organizations such as the U.S. Army, NASA, FDA, and various industrial partners to apply different aspects of these four pillars in actual programs and projects.

We welcome your feedback on our work and are looking for organizations interested in applying this approach. If you are interested, please leave a comment below or send an email to info@sei.cmu.edu.

Additional Resources

To read the full SEI technical report, The Reliability Validation and Improvement Framework, please visit https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=34069

To read the white paper describing this work, Four Pillars for Improving the Quality of Safety-Critical Software-Reliant Systems, please visit https://resources.sei.cmu.edu/library/asset-view.cfm?assetID=47791
