Incremental Security Hardening the DevOps Way

Where to start?

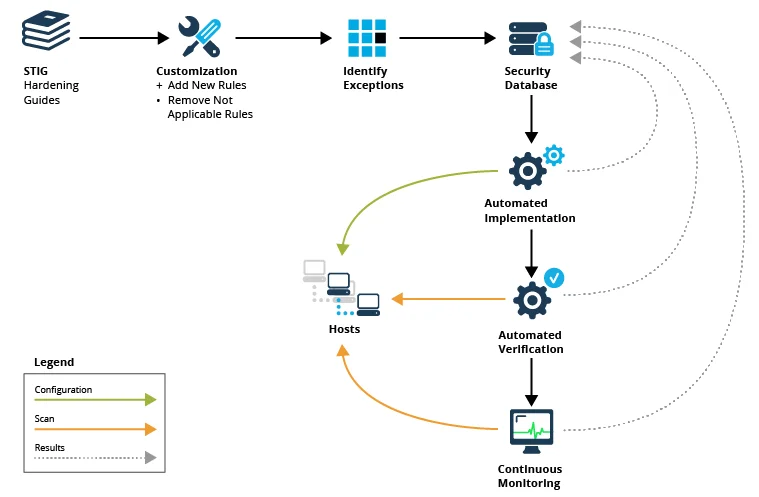

The Security Technical Implementation Guides (STIGs) created by the Defense Information Systems Agency (DISA) provide written guidelines and implementation instructions for security hardening various operating systems, commercial off-the-shelf (COTS) products, and free and open-source software (FOSS) products. STIGs are a valuable starting point for developing a security baseline for your system: the set of suggested configuration requirements that I will refer to as "rules." Applying these rules allows a system to be deemed security hardened and compliant.

A representative rule that applies to a Linux system states, "The /etc/passwd file must be owned by the root user. If the /etc/passwd file is owned by any other user, this is a finding."
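A rule phrased this precisely can be checked by a script. As a minimal sketch, assuming a Linux host and Python 3 (the `pwd` module is Unix-only), the check might look like the following. The function name `is_owned_by` is hypothetical and not part of any DISA tooling.

```python
import os
import pwd


def is_owned_by(path: str, user: str = "root") -> bool:
    """Return True if `path` is owned by `user`, comparing numeric UIDs."""
    expected_uid = pwd.getpwnam(user).pw_uid  # raises KeyError for an unknown user
    return os.stat(path).st_uid == expected_uid


if __name__ == "__main__":
    target = "/etc/passwd"
    if is_owned_by(target, "root"):
        print(f"PASS: {target} is owned by root")
    else:
        print(f"FINDING: {target} is not owned by root")
```

Comparing UIDs rather than user names avoids surprises if an account is renamed.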

Security Guide Customization

For a software system to function properly, it is common to need exceptions to the rules. It is important to understand and document the reason for each exception along with any added security risk it introduces. Conversely, some systems can be configured beyond the published rules to further strengthen their security posture. For example, a rule may require a certain configuration file to be readable only by members of a particular security group. It may be acceptable to restrict access further, to a single user, if doing so does not disrupt legitimate system functionality.
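The difference between a published rule and a tightened configuration can be made concrete with file permission bits. In this illustrative Python sketch, the baseline check (`meets_baseline`, a hypothetical name) allows the owner and group but denies all access to other users, while the tightened check (`meets_tightened`, also hypothetical) denies the group as well, leaving access to the owning user only. Whether a host should satisfy the tightened check is a policy decision that the code does not make.

```python
import os
import stat


def meets_baseline(path: str) -> bool:
    """Illustrative published rule: owner and group may have access, others may not."""
    mode = os.stat(path).st_mode
    return (mode & stat.S_IRWXO) == 0


def meets_tightened(path: str) -> bool:
    """Illustrative tightened rule: only the owning user may have access."""
    mode = os.stat(path).st_mode
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0
```

For example, a file with mode `0640` passes `meets_baseline` but not `meets_tightened`, while `0600` passes both.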

It is also crucial to understand and track which exceptions apply to which hosts in your system at various times. For example, a server that operates as an appliance can function with many more security configurations and restrictions in place than an application server that accepts end-user logins. It is also important to understand when rules must be relaxed on a system to accommodate expected modes of operation, such as software installation, system maintenance updates, and incident response. Different rules may also be enabled or disabled during different phases of the development lifecycle; for example, the rules may be less restrictive during development than during testing and production.
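One way to keep host roles, operating modes, and documented exceptions straight is to resolve the set of enforced rules from data rather than hard-coding it. The sketch below assumes a hypothetical `RuleException` record that carries the reason and the accepted risk alongside the roles and modes it covers; the rule IDs, role names, and mode names are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RuleException:
    """A documented deviation from the baseline (hypothetical structure)."""
    rule_id: str
    roles: frozenset[str]  # host roles the exception covers, e.g. "app-server"
    modes: frozenset[str]  # operating modes it covers, e.g. "maintenance"
    reason: str            # why the rule cannot be enforced
    risk: str              # the added security risk being accepted


def applicable_rules(baseline: set[str], exceptions: list[RuleException],
                     role: str, mode: str) -> set[str]:
    """Return the baseline rules to enforce on a host with this role in this mode."""
    waived = {e.rule_id for e in exceptions if role in e.roles and mode in e.modes}
    return baseline - waived


baseline = {"RULE-001", "RULE-002", "RULE-003"}
exceptions = [
    RuleException("RULE-002", frozenset({"app-server"}),
                  frozenset({"production", "maintenance"}),
                  reason="End users must log in interactively",
                  risk="Larger attack surface for credential attacks"),
    RuleException("RULE-003", frozenset({"appliance", "app-server"}),
                  frozenset({"maintenance"}),
                  reason="Package installation needs write access",
                  risk="Temporary exposure while updates are applied"),
]

print(sorted(applicable_rules(baseline, exceptions, "appliance", "production")))
# ['RULE-001', 'RULE-002', 'RULE-003']
print(sorted(applicable_rules(baseline, exceptions, "app-server", "maintenance")))
# ['RULE-001']
```

The appliance in production enforces every rule, while the application server in maintenance mode relaxes two, and each relaxation carries its documented reason and risk.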

Keeping Track of It All

Maintaining thousands of rules across many hosts throughout various modes of operation can be daunting, if not impossible, without the help of a custom database or COTS/FOSS product. This data store should be integrated with the automated toolset that is responsible for applying, verifying, and monitoring the rules.
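As a sketch of what a minimal custom data store could look like, the following uses Python's built-in `sqlite3` module. The schema (tables `rules`, `exceptions`, and `results`) and the helper names are assumptions for illustration; a production system would more likely use a shared database server or an existing COTS/FOSS product.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: rules, per-host/per-mode exceptions, and stage results.
SCHEMA = """
CREATE TABLE IF NOT EXISTS rules (
    rule_id     TEXT PRIMARY KEY,
    description TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS exceptions (
    rule_id TEXT NOT NULL REFERENCES rules(rule_id),
    host    TEXT NOT NULL,
    mode    TEXT NOT NULL,
    reason  TEXT NOT NULL,
    PRIMARY KEY (rule_id, host, mode)
);
CREATE TABLE IF NOT EXISTS results (
    host       TEXT NOT NULL,
    rule_id    TEXT NOT NULL REFERENCES rules(rule_id),
    stage      TEXT NOT NULL CHECK (stage IN ('apply', 'verify', 'monitor')),
    passed     INTEGER NOT NULL,
    checked_at TEXT NOT NULL
);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the store and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def record_result(conn: sqlite3.Connection, host: str, rule_id: str,
                  stage: str, passed: bool) -> None:
    """Log the outcome of applying, verifying, or monitoring one rule on one host."""
    conn.execute(
        "INSERT INTO results (host, rule_id, stage, passed, checked_at) "
        "VALUES (?, ?, ?, ?, ?)",
        (host, rule_id, stage, int(passed), datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


def rules_to_enforce(conn: sqlite3.Connection, host: str, mode: str) -> list:
    """Return the rule IDs not waived by an exception for this host and mode."""
    rows = conn.execute(
        """SELECT rule_id FROM rules
           WHERE rule_id NOT IN (
               SELECT rule_id FROM exceptions WHERE host = ? AND mode = ?)
           ORDER BY rule_id""",
        (host, mode),
    )
    return [row[0] for row in rows]
```

Keeping exceptions in the same store as results lets the hardening, verification, and monitoring stages all ask `rules_to_enforce` the same question and get the same answer.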

Applying Rules the DevOps Way

Automating the application of security rules has the same advantages as automating environment provisioning:

- results are consistent because human error is largely eliminated

- less manual labor is required

- automation scripts document precisely how each rule is applied to a system, which aids tracking and collaboration

The automation scripts that apply the rules should be maintainable and executable at a granular level, meaning a script should be able to apply one and only one rule if needed. This granularity gives you the flexibility to accommodate various exceptions and modes without customizing the automation scripts. To avoid masking a problem, the scripts should return an error code (for example, a nonzero exit status) if they fail to do their work. Results of the automated hardening should be kept in a data store for visibility and analysis.
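A minimal Python sketch of the granularity and error-reporting properties described above: each rule is registered under its own ID, a single invocation can apply exactly one rule, and any failure produces a nonzero return value instead of being swallowed. The registry, the `rule` decorator, and the rule ID `RULE-001` are hypothetical.

```python
import argparse
import os
import sys
from typing import Callable, Dict, List, Optional

# Hypothetical registry: each rule ID maps to a function that applies exactly one rule.
RULES: Dict[str, Callable[[], None]] = {}


def rule(rule_id: str):
    """Decorator that registers a function as the implementation of a single rule."""
    def register(fn: Callable[[], None]) -> Callable[[], None]:
        RULES[rule_id] = fn
        return fn
    return register


@rule("RULE-001")
def passwd_owned_by_root() -> None:
    # Idempotent: setting the owner to root (UID 0) is harmless if already compliant.
    # Passing -1 as the group ID leaves the group unchanged. Requires root privileges.
    os.chown("/etc/passwd", 0, -1)


def main(argv: Optional[List[str]] = None) -> int:
    """Apply the requested rules one by one; return 0 only if every rule succeeded."""
    parser = argparse.ArgumentParser(description="Apply hardening rules individually.")
    parser.add_argument("rule_ids", nargs="+", help="IDs of the rules to apply")
    args = parser.parse_args(argv)

    failed = False
    for rule_id in args.rule_ids:
        apply = RULES.get(rule_id)
        if apply is None:
            print(f"{rule_id}: unknown rule", file=sys.stderr)
            failed = True
            continue
        try:
            apply()
            print(f"{rule_id}: applied")
        except Exception as exc:  # report the failure instead of masking it
            print(f"{rule_id}: FAILED ({exc})", file=sys.stderr)
            failed = True
    return 1 if failed else 0
```

If the module is given an entry point that calls `sys.exit(main())`, running it with a single rule ID applies only that rule, and the exit status lets a CI/CD pipeline stop on failure rather than continue silently. Rules that are waived for a host or mode are simply not passed on the command line, so the scripts themselves never need customizing.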

Verifying the Results with Automation

After hosts are hardened, it is advisable to test the hardening to verify that everything went as planned. In addition to custom scripts, several COTS and FOSS products, including Grendel-Scan, Nikto, VAddy, Nessus, and Tinfoil Security, can automatically scan hosts and report security deficiencies. If used, these tools should be configured and customized to minimize false positives and false negatives. The exceptions and modes that dictate which rules apply to a particular host also apply to the verification stage; otherwise, a rule that was intentionally waived would be reported as a finding.
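Custom verification scripts can respect exceptions in the same way. In this hypothetical sketch, each rule has a check function that returns `True` when the host complies; rules waived for the host and mode are reported as `WAIVED` rather than run, and a check that crashes is reported as `ERROR` so it cannot pass silently. The lambdas stand in for real checks.

```python
from typing import Callable, Dict, Iterable


def verify(checks: Dict[str, Callable[[], bool]], waived: Iterable[str]) -> Dict[str, str]:
    """Run each rule's check and return a status per rule ID."""
    waived_ids = set(waived)
    report: Dict[str, str] = {}
    for rule_id, check in sorted(checks.items()):
        if rule_id in waived_ids:
            report[rule_id] = "WAIVED"  # documented exception, so not a finding
            continue
        try:
            report[rule_id] = "PASS" if check() else "FAIL"
        except Exception:
            report[rule_id] = "ERROR"  # a broken check must never look like a pass
    return report


def has_findings(report: Dict[str, str]) -> bool:
    """True if any rule failed or could not be checked."""
    return any(status in ("FAIL", "ERROR") for status in report.values())


checks = {
    "RULE-001": lambda: True,   # stand-ins for real checks
    "RULE-002": lambda: False,
    "RULE-003": lambda: False,
}
report = verify(checks, waived={"RULE-003"})
print(report)
# {'RULE-001': 'PASS', 'RULE-002': 'FAIL', 'RULE-003': 'WAIVED'}
print(has_findings(report))
# True
```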

Continuous Monitoring

After a host has been verified to meet the baseline, it must be monitored continuously to ensure that it has not drifted from the baseline through normal operations, maintenance, or a security event. Various COTS and FOSS products, such as Open Source Tripwire, Snort, and OSSEC, can monitor file system and network activity on a host and raise alerts when unexpected changes are detected.
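The core idea behind file integrity monitoring can be sketched in a few lines: record a baseline of each watched file's content hash, permission bits, and owner, then compare later snapshots against it. This is a simplified Python illustration, not how Open Source Tripwire or OSSEC is actually implemented; the function names are hypothetical, and reading files such as `/etc/shadow` would require root privileges.

```python
import hashlib
import os
from typing import Dict, Iterable


def snapshot(paths: Iterable[str]) -> Dict[str, str]:
    """Fingerprint each file as 'sha256:mode:uid'; files that do not exist are omitted."""
    state: Dict[str, str] = {}
    for path in paths:
        try:
            st = os.stat(path)
            with open(path, "rb") as fh:
                digest = hashlib.sha256(fh.read()).hexdigest()  # fine for small config files
        except FileNotFoundError:
            continue
        state[path] = f"{digest}:{st.st_mode:o}:{st.st_uid}"
    return state


def detect_drift(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, str]:
    """Describe every difference between a baseline snapshot and a current one."""
    drift: Dict[str, str] = {}
    for path in sorted(baseline.keys() | current.keys()):
        if path not in current:
            drift[path] = "missing"
        elif path not in baseline:
            drift[path] = "added"
        elif baseline[path] != current[path]:
            drift[path] = "changed"
    return drift
```

In practice the baseline would be captured right after verification and stored where an attacker cannot modify it, with `detect_drift` run on a schedule and any non-empty result raising an alert.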

Figure 1: The Automated Security Hardening Lifecycle

Batch Size & Shifting Left

One organization I worked with fell into the trap of waterfall thinking, developing thousands of rules in one phase before proceeding to the next. For example, they spent months defining the rules that apply to a particular system without working out how the rules would be implemented via automation. When they went to automate the implementation, they discovered that swaths of the rules did not apply to the operating system version they were targeting. The result was wasted effort and rework of the exceptions. Had they shifted left, automating a few rules early and taking that small batch through the whole lifecycle, they would have learned early on that they were on the wrong track.

It is important to test a few rules early across the entire hardening lifecycle to uncover hidden requirements and challenges. Once a relatively small set of pioneering rules has been implemented from customization through monitoring, the remaining rules can be developed more quickly: lessons learned can be incorporated, and automation scripting components can be reused.

Continuous Improvement

With new vulnerabilities being discovered every day, what is secure today is not necessarily secure tomorrow. It is therefore crucial for organizations to continually reevaluate their security hardening rules, implementation, and testing to push the envelope incrementally further. Eliminating manual work in implementation and verification frees the team to focus on process improvement.

We welcome your feedback on the DevOps blog as well as suggestions for future content.

Additional Resources

The webinar DevOps Panel Discussion features Kevin Fall, Hasan Yasar, and Joseph D. Yankel.

The webinar Culture Shock: Unlocking DevOps with Collaboration and Communication features Aaron Volkmann and Todd Waits.

The webinar What DevOps is Not! features Hasan Yasar and C. Aaron Cois.

The podcast DevOps--Transform Development and Operations for Fast, Secure Deployments features Gene Kim and Julia Allen.
