Automated Assurance of Security-Policy Enforcement in Critical Systems

As U.S. Department of Defense (DoD) mission-critical and safety-critical systems become increasingly connected, their exposure to security breaches is likewise increasing. In the past, system developers worked on the assumption that, because their systems were not connected and did not interact with other systems, they did not have to worry about security. Such "closed" system assumptions, however, are no longer valid, and security threats now affect the safe operation of systems.

To address exponential growth in the cost of system development due to the increased complexity of interactions and mismatched assumptions in embedded software systems, the safety-critical system community has embraced virtual system integration and analysis of embedded systems. In this blog post, I describe our efforts to demonstrate how virtual system integration can be extended to address security concerns at the architecture level and complement code-level security analysis.

Improving the Quality of System Security Assurance

There have been many reports of serious security issues in safety-critical domains, such as the automotive and medical domains. The Jeep hack and the Prius hacks exemplify the potential dangers of highly connected systems. Because many commercial operating systems and networks used in these systems have limited support for security-policy enforcement, a reactive approach to security, in which patches are applied only after a failure has occurred, is all too common. Such an approach is inadequate for mission- and life-critical systems. While these systems must be intensively tested and certified, current practices (such as the ISO/IEC 15408 Common Criteria) are labor-intensive and make certification of large-scale systems a costly challenge.

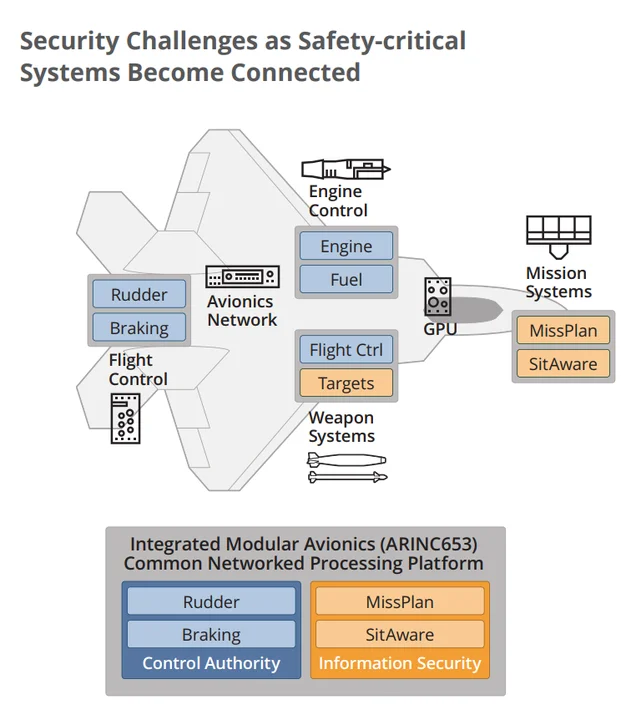

The figure above shows an aircraft with subsystems concerned with flight control and subsystems that support the aircraft's mission. Flight-control subsystems are the only ones with the control authority needed to ensure the airworthiness of the aircraft. Mission subsystems, such as mission planning and situational awareness, have information-security requirements and may interact with external systems. Security challenges include protecting the confidentiality of mission information (e.g., ensuring that maintenance personnel cannot access it) and managing control authority both within the flight-control subsystems and by the mission subsystems.

The external connectivity represents attack surfaces, exposing the system to attacks ranging from denial of service to intrusion. Because total prevention of such attacks is highly unlikely, the architecture of a safety-critical system must deploy security mechanisms that protect not only against external attacks but also against intentionally malicious behavior from within the system, such as spoofing of sensor data streams.

Our work on virtual system integration aims to reduce the cost and improve the quality of system security assurance. In particular, we focus on developing techniques and tools that enable security policies to be specified and their enforcement to be assured by

- detecting security policy violations early

- assuring that the system implementation correctly enforces the policies and that no security risks are introduced by the runtime architecture

- automating the execution of security-assurance plans

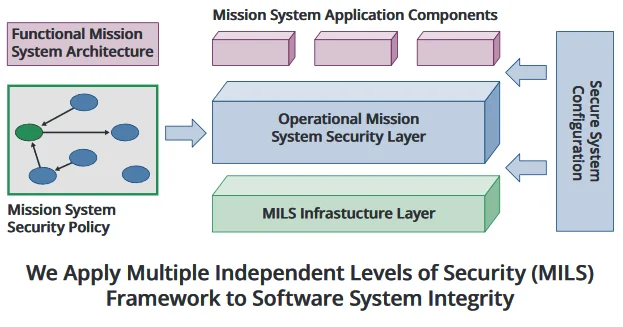

Security policies in critical systems specify restrictions on information flow and isolation for a functional mission-system architecture: what is and is not allowed to happen within that system. A policy might state that a given function in a multi-functional system may control some components but not others. For example, a car entertainment system must not be allowed to send commands to the steering wheel.
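To make the idea of a flow policy concrete, the following minimal Python sketch treats a policy as an allow-list of directed flows and reports every connection in an architecture model that the list does not permit (default deny). The component names, the `Flow` type, and the `policy_violations` function are hypothetical illustrations, not the representation used by AADL or by the SEI toolset.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """A directed information or control flow between two components."""
    source: str
    destination: str


def policy_violations(flows: list[Flow], allowed: set[Flow]) -> list[Flow]:
    """Return every flow in the architecture that the policy does not permit.

    The policy is modeled as an allow-list, so anything not explicitly
    permitted is treated as a violation (default deny).
    """
    return [flow for flow in flows if flow not in allowed]


# Hypothetical policy for a car: only the driver controls may command steering.
ALLOWED = {
    Flow("driver_controls", "steering"),
    Flow("navigation", "entertainment"),
}

# Hypothetical architecture model containing one connection the policy forbids.
ARCHITECTURE = [
    Flow("driver_controls", "steering"),
    Flow("navigation", "entertainment"),
    Flow("entertainment", "steering"),
]

for flow in policy_violations(ARCHITECTURE, ALLOWED):
    print(f"violation: {flow.source} -> {flow.destination}")
```

Running it prints `violation: entertainment -> steering`. A default-deny allow-list is one common design choice: every unlisted flow becomes a finding instead of being silently permitted.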

Multiple Independent Levels of Security (MILS) is an architectural approach developed in the 1990s at the National Security Agency (NSA) to protect the confidentiality of information. MILS specifies who is allowed to read information based on whether they hold the required security level. Security policies can be enforced in a runtime architecture through infrastructure mechanisms such as a separation kernel, a secure network, or encryption. DoD contractors are using MILS to achieve cross-domain solutions in domains such as military communication (e.g., Falcon III) and avionics (e.g., F-22 and F-35).
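The read rule can be sketched in a simplified form, assuming security labels made of a hierarchical level plus a set of compartments (a common formulation of multilevel security labels, used here for illustration rather than taken from a MILS specification). A reader may access data only if its label dominates the data's label. The levels, compartments, and labels below are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical hierarchical levels; a higher value means more sensitive."""
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2


@dataclass(frozen=True)
class Label:
    """A security label: a hierarchical level plus a set of compartments."""
    level: Level
    compartments: frozenset[str] = frozenset()


def dominates(a: Label, b: Label) -> bool:
    """a dominates b if its level is at least b's and it holds all of b's compartments."""
    return a.level >= b.level and a.compartments >= b.compartments


def may_read(subject: Label, data: Label) -> bool:
    """A subject may read data only if the subject's label dominates the data's label."""
    return dominates(subject, data)


# Hypothetical labels for the aircraft example.
mission_planner = Label(Level.SECRET, frozenset({"MISSION"}))
maintenance_crew = Label(Level.CONFIDENTIAL)
mission_data = Label(Level.SECRET, frozenset({"MISSION"}))

print(may_read(mission_planner, mission_data))   # True
print(may_read(maintenance_crew, mission_data))  # False
```

The compartment check matters as well as the level: a `SECRET` reader without the `MISSION` compartment would also be refused.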

Cybersecurity experts have traditionally focused on external protections, such as firewalls, rather than on protections internal to the system. In a mission- or safety-critical system, however, external protections alone are not sufficient. Architectural partitions that make it impossible for a function in one partition to affect a function in another add an extra level of protection. In this project, we look at policy specification (which entities are allowed to communicate with which other entities) and policy enforcement (how the implementation ensures that certain entities can communicate only with certain other entities and that the information communicated is protected).
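One enforcement check at the deployment level can be sketched under a clearly stated assumption: a separation kernel isolates partitions from one another but not functions that share a partition, so a partition hosting functions of different security levels cannot by itself enforce isolation between them. The bindings, level names, and the `mixed_level_partitions` function are hypothetical.

```python
from collections import defaultdict


def mixed_level_partitions(
    binding: dict[str, str], levels: dict[str, str]
) -> dict[str, set[str]]:
    """Return partitions that host components of more than one security level.

    binding maps component -> partition; levels maps component -> security level.
    Assumption: isolation is enforced between partitions, not within them.
    """
    levels_in: dict[str, set[str]] = defaultdict(set)
    for component, partition in binding.items():
        levels_in[partition].add(levels[component])
    return {p: found for p, found in levels_in.items() if len(found) > 1}


# Hypothetical deployment of aircraft functions onto partitions.
BINDING = {
    "flight_control": "P1",
    "mission_planner": "P2",
    "situational_awareness": "P2",
    "maintenance_logger": "P2",
}
LEVELS = {
    "flight_control": "flight_critical",
    "mission_planner": "mission",
    "situational_awareness": "mission",
    "maintenance_logger": "maintenance",
}

print(mixed_level_partitions(BINDING, LEVELS))
```

Here partition `P2` is reported because the maintenance logger shares it with mission functions, so the partitioning alone could not keep maintenance functions away from mission information.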

This project builds on previous SEI research that established model-based engineering practices, and it responds to needs identified through several engagements with SEI customers. Our work aligns with the research and development that produced the architecture-led virtual integration practice, a virtual upgrade validation method, and a reliability improvement and validation framework. This framework draws on SEI technical leadership of the SAE International Architecture Analysis & Design Language (AADL) industry standard for modeling and analyzing embedded software systems.

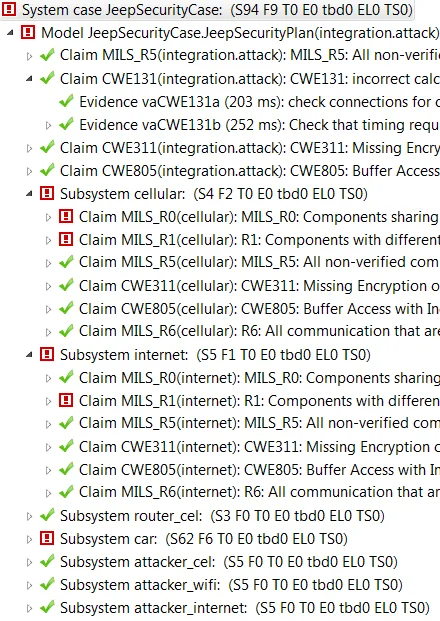

Leveraging the Architecture-Led Incremental System Assurance (ALISA) capability in the Open Source AADL Tool Environment (OSATE), we analyze security-policy specifications for consistency and gaps in flow constraints and isolation requirements. We also analyze the software system architecture for potential enforcement vulnerabilities due to incorrect deployment of security mechanisms. These analyses run automatically as the system model evolves, so that the security requirements are checked incrementally and continually. The figure above shows such a security-assurance trace produced by the ALISA capability. It shows a number of claims that various MILS requirements are met and that Common Weakness Enumeration (CWE) weaknesses are addressed, each supported by one or more pieces of evidence in the form of analysis results.
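The structure of such a trace can be sketched as claims backed by evidence, where each claim's status is recomputed whenever its analyses are rerun. This is a hypothetical data model for illustration; it does not reproduce ALISA's notation or API, and the claim texts and evidence names are invented. CWE-311 (Missing Encryption of Sensitive Data) is a real CWE entry, used here only as an example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Result(Enum):
    """Outcome of one piece of evidence, such as an analysis run on the model."""
    PASS = "pass"
    FAIL = "fail"
    NOT_RUN = "not run"


@dataclass
class Claim:
    """A claim that a requirement is met, backed by named pieces of evidence."""
    requirement: str
    evidence: dict[str, Result] = field(default_factory=dict)

    def status(self) -> Result:
        results = list(self.evidence.values())
        if Result.FAIL in results:
            return Result.FAIL  # any failed analysis refutes the claim
        if not results or Result.NOT_RUN in results:
            return Result.NOT_RUN  # incomplete evidence: claim not yet established
        return Result.PASS


def summarize(claims: list[Claim]) -> dict[str, Result]:
    """Re-evaluate every claim, e.g., after each change to the system model."""
    return {claim.requirement: claim.status() for claim in claims}


# Hypothetical claims and evidence names, for illustration only.
CLAIMS = [
    Claim("MILS: mission data stays within mission partitions",
          {"flow policy check": Result.PASS, "partition binding check": Result.PASS}),
    Claim("CWE-311: Missing Encryption of Sensitive Data",
          {"encryption along flow paths": Result.FAIL}),
    Claim("MILS: external interfaces are mediated"),
]

for requirement, status in summarize(CLAIMS).items():
    print(f"{status.value:8} {requirement}")
```

Treating missing evidence as "not run" rather than "pass" keeps a claim from appearing satisfied before any analysis has actually supported it.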

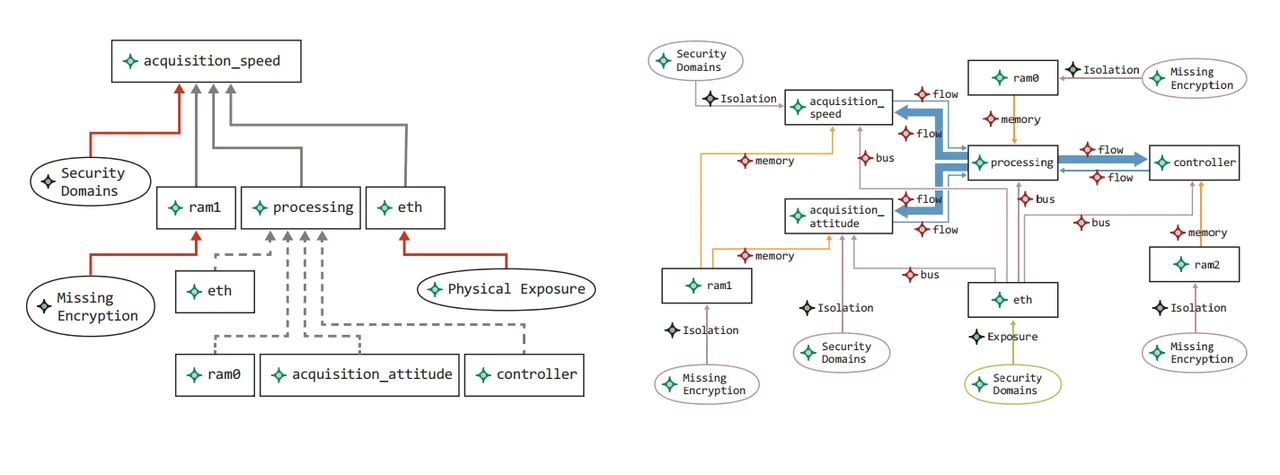

In addition to automated incremental assurance of security concerns, we have developed tools that generate attack tree and attack impact models from the AADL model of the system. The figure above shows a graphical presentation of an attack tree on the left and, on the right, an example of a fault impact graph with specific impact traces highlighted.
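Attack trees combine subgoals with AND and OR gates. The following sketch evaluates whether an attacker holding a given set of leaf capabilities can reach the root goal. The tree, its goals, and the `AttackNode` type are hypothetical and do not reflect the output format of the SEI tools.

```python
from dataclasses import dataclass, field


@dataclass
class AttackNode:
    """A node in an attack tree: a leaf step, or an AND/OR combination of subgoals."""
    goal: str
    gate: str = "LEAF"  # "LEAF", "AND", or "OR"
    children: list["AttackNode"] = field(default_factory=list)


def achievable(node: AttackNode, capabilities: set[str]) -> bool:
    """Decide whether the attacker can reach node's goal with the given leaf capabilities."""
    if node.gate == "LEAF":
        return node.goal in capabilities
    results = [achievable(child, capabilities) for child in node.children]
    if node.gate == "AND":
        return all(results)  # every subgoal is needed
    if node.gate == "OR":
        return any(results)  # any one subgoal suffices
    raise ValueError(f"unknown gate: {node.gate!r}")


# Hypothetical tree for spoofing a sensor data stream used by flight control.
ROOT = AttackNode("spoof sensor data to flight control", "OR", [
    AttackNode("inject frames on sensor bus", "AND", [
        AttackNode("gain physical access to bus"),
        AttackNode("bypass bus authentication"),
    ]),
    AttackNode("pivot from mission system", "AND", [
        AttackNode("exploit mission software"),
        AttackNode("breach partition isolation"),
    ]),
])

print(achievable(ROOT, {"exploit mission software"}))  # False
print(achievable(ROOT, {"exploit mission software", "breach partition isolation"}))  # True
```

The second case shows why partition isolation matters: exploiting mission software alone does not reach the root goal, but combined with a breach of isolation it does.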

Our approach focuses on architecture-level verification of the operational mission-system security layer, which is responsible for enforcing the specified security policies. This trusted MILS layer must be correctly implemented, its attack surface must be reduced, and the operational system must be configured to comply with the verified security-system architecture. We assume a previously verified MILS infrastructure, as well as separate verification of the mission-system application functions and of the functional code's compliance with secure coding practices.

Results and Next Steps

We have developed an architecture-centric approach to modeling and virtually integrating safety-critical systems using the SAE International AADL standard (SAE AS5506). We have also developed a set of security-related properties that annotate these AADL models and support verification of the security-policy specification. For example, these properties make it possible to identify potential information leakage in the specified information flows and to verify that the policies are correctly enforced (e.g., that information is encrypted along the full path of information and control flows). We have implemented these capabilities as a toolset on top of the Open Source AADL Tool Environment (OSATE).
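The two checks mentioned above, information leakage and encryption along the full path, can be sketched for a single end-to-end flow. The rules are simplifying assumptions stated in the docstring (integer levels, level 0 meaning unclassified, and end-to-end encryption so that only the final destination needs clearance). The component names and `check_flow` are hypothetical; the actual toolset works on security properties attached to AADL models, not on Python objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One connection along an end-to-end information flow."""
    source: str
    destination: str
    encrypted: bool


def check_flow(hops: list[Hop], data_level: int, clearances: dict[str, int]) -> list[str]:
    """Return findings for one flow that carries data at data_level.

    Hypothetical rules: level 0 is unclassified; data above level 0 must be
    encrypted on every hop; the flow's final destination must be cleared to
    at least data_level. Components missing from clearances count as level 0.
    """
    findings = []
    for hop in hops:
        if data_level > 0 and not hop.encrypted:
            findings.append(f"unencrypted hop: {hop.source} -> {hop.destination}")
    if hops:
        sink = hops[-1].destination
        if clearances.get(sink, 0) < data_level:
            findings.append(f"leakage: {sink} is not cleared for level {data_level}")
    return findings


CLEARANCES = {"mission_computer": 2, "display": 2, "maintenance_port": 0}

# Hypothetical flow: encrypted over the network, but not on the last hop.
to_display = [
    Hop("mission_computer", "network_switch", encrypted=True),
    Hop("network_switch", "display", encrypted=False),
]
# Hypothetical flow that delivers mission data to an uncleared maintenance port.
to_maintenance = [Hop("mission_computer", "maintenance_port", encrypted=True)]

print(check_flow(to_display, 2, CLEARANCES))
print(check_flow(to_maintenance, 2, CLEARANCES))
```

The first flow is flagged for its unencrypted last hop; the second is encrypted throughout but still leaks, because its destination is not cleared for the data.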

We have developed tools to support two analytical techniques: attack tree analysis and attack impact analysis. These techniques are similar to fault tree analysis and fault impact analysis, well-established safety analysis techniques that are supported by an AADL annex for fault modeling and analysis.
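Impact analysis follows propagation paths through the architecture. A minimal sketch, assuming a worst case in which every connection propagates a compromise, is a breadth-first search that records a shortest impact trace to each reachable component. The connection graph and component names below are hypothetical.

```python
from collections import deque


def impact_traces(connections: dict[str, list[str]], compromised: str) -> dict[str, list[str]]:
    """Map each component reachable from a compromised one to a shortest impact trace.

    Worst-case assumption: every outgoing connection propagates the compromise.
    """
    traces = {compromised: [compromised]}
    queue = deque([compromised])
    while queue:  # breadth-first search, so each recorded trace is a shortest path
        current = queue.popleft()
        for neighbor in connections.get(current, []):
            if neighbor not in traces:
                traces[neighbor] = traces[current] + [neighbor]
                queue.append(neighbor)
    return traces


# Hypothetical connection graph for the aircraft example.
CONNECTIONS = {
    "mission_radio": ["mission_computer"],
    "mission_computer": ["display", "flight_management"],
    "flight_management": ["flight_control"],
    "flight_control": ["actuators"],
}

for component, trace in impact_traces(CONNECTIONS, "mission_radio").items():
    print(f"{component}: {' -> '.join(trace)}")
```

For this graph, the trace to `flight_control` runs through the mission computer and flight management, the kind of path an impact analysis highlights. Real fault and attack impact analysis would also model propagation conditions, which this sketch omits.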

We have developed several case studies for use in proof-of-concept demonstrations, including examples from the automotive and aerospace domains. In addition, we have developed a demonstration example that includes automatic code generation for an unmanned vehicle.

We have presented and published our work at several venues, including the 2016 MILS workshop and ISoLA 2016.

We have released the tools and example models produced in this project under an open-source license. These tools and models are available on the SEI GitHub code repository. They will be integrated with OSATE, which will enable them to reach an established AADL user community.

Finally, we have begun an effort to develop a Security Annex draft standard for AADL through the SAE International AS-2C committee, and we are pursuing the integration of security and safety engineering into a common engineering approach under a new SEI-funded three-year project called Integrated Safety and Security Engineering of Mission-Critical Systems.

Additional Resources

Tools produced in this project have been released under an open-source license and are available on the SEI GitHub code repository.
