Detecting Architecture Traps and Pitfalls in Safety-Critical Software

PUBLISHED IN

Software Architecture

Safety-critical avionics, aerospace, medical, and automotive systems are becoming increasingly reliant on software. Malfunctions in these systems can have significant consequences, including mission failure and loss of life. They must therefore be designed, verified, and validated carefully to ensure that they comply with system specifications and requirements and are error free. In the automotive domain, for example, cars contain many electronic control units (ECUs) that communicate to control systems such as airbag deployment, anti-lock brakes, and power steering; today's standard vehicle can contain up to 30 ECUs.

The design of tightly coupled software components distributed across so many nodes may introduce problems, such as early or late data delivery, loss of operation, or concurrent control of the same resource. In addition, errors introduced during the software design phase, such as mismatched timing requirements and values beyond boundaries, are propagated into the implementation and may not be caught by testing efforts. If these problems escape detection during testing, they can lead to serious errors and injuries, as evidenced by recent news reports about problems with automotive firmware. Such issues are not specific to a particular domain and are very common in safety-critical systems. In fact, such problems are often found when reviewing code from legacy systems that were designed and built more than 20 years ago and are still operating, as in the avionics and aerospace domains. This blog post describes an effort at the SEI that aims to help engineers use time-proven architecture patterns (such as the publish-subscribe pattern or correct use of shared resources) and validate their correct application.

Architecture Design and Analysis: Why it Matters

Today's safety-critical systems are increasingly reliant on software. Software architecture is an important asset that impacts the overall development process: for example, a good software architecture eases system upgrade and reuse, while a bad architecture can lead to unexpected rework when trying to modify a component. This reliance will continue to grow, especially because software size keeps increasing at a significant rate, and the early and intentional design of software architecture is an important tool in managing this complexity. Software architecture also helps system stakeholders reason about the system in its operational environment and detect potential flaws.

Beyond these benefits, the early design and review of a software architecture can help avoid common software traps and pitfalls prior to implementation. A study by the National Institute of Standards and Technology found that 70 percent of software defects are introduced during the requirements and architecture design phases. What exacerbates the problem is the fact that 80 percent of those defects are not discovered until system integration testing or even later in the development lifecycle. Fixing these issues later has an adverse impact on the product delivery schedule and on development costs. In their paper Software Defect Reduction Top 10 List, software engineering researchers Barry Boehm and Victor Basili wrote that "finding and fixing a software problem after delivery is often 100 times more expensive than finding and fixing it during the requirements and design phase."

A group of SEI researchers has started an effort that details strategies for avoiding software architecture mistakes by using appropriate architecture patterns (such as the ones from the NASA reports) and validating their correct application. Specifically, we are working on tools to analyze software architecture, detect pattern usage, and check that system characteristics cannot undermine the benefits of the pattern. This approach promotes the use of well-known methods to improve software quality, such as decoupling functions or reducing variable scope to make the software more modular. In the long term, such methods can help designers avoid common architecture traps and pitfalls from the beginning and reduce potential rework later in the development process.

From a practical perspective, this approach makes use of the Architecture Analysis and Design Language (AADL) for specifying an architecture pattern. We implemented a new analysis function in the Open Source AADL Tool Environment (OSATE) to validate correct use of the pattern and analyze pattern consistency with the other components. In particular, such a tool can detect any characteristic from the system environment that might impact use of the pattern. For example, in the case of the publish-subscribe pattern (a component sending data periodically to a receiver), one common mistake is a mismatch between the execution frequency of the publisher and subscriber, such as when the publisher sends data faster than the subscriber can handle it. Our validation tool analyzes the application of such a pattern and checks for timing mismatch, ensuring that the subscriber has enough time and resources to receive and handle all incoming data.

Using and Validating Architecture Patterns

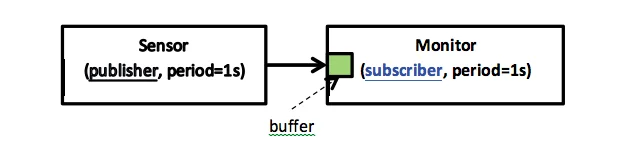

The publish-subscribe pattern introduced above can be illustrated by a simplified weather station with two components: a temperature sensor (publisher) that periodically sends a value (temperature) to a monitor (subscriber) that computes statistics about the value, including maximum, minimum, and average. Each component (the sensor and the monitor) is periodic: each executes at a fixed and predefined rate (for example, once per second). Figure 1 illustrates the publish-subscribe pattern. As shown in this figure, the communication uses a connection between the two components. When the sensor publishes data, it is stored in a buffer to make it available to the monitor that subscribes to the data. Because both tasks run at the same rate (once every second), no data is lost or read twice.

Figure 1. The publish-subscribe pattern without queued communication

Changing the components' characteristics may have important side effects. For example, changing the execution rate of the sensor so that it executes more frequently than the monitor causes data loss. The second execution of the sensor overwrites the current content of the monitor's buffer, replacing the previous, still unread value. As a consequence, some values are never processed by the monitor, and the result (minimum, maximum, and average temperature) is not accurate.

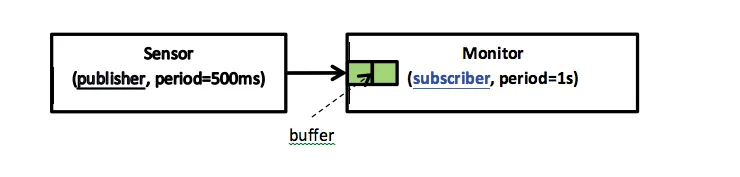

A common workaround for this issue uses communication queues that can store several values. In our current example, we change the buffer size of the monitor so that it can hold two pieces of data. We illustrate such an architectural change in Figure 2.

Figure 2. The publish-subscribe pattern with queued communication

In this case, the sensor executes more frequently (every 500 milliseconds (ms)) than the monitor (every 1 second). No data is lost because the monitor's buffer can hold two data values and the monitor reads all of them when it executes. A new problem may appear, however, if the buffer size or the execution period is modified.
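The behavior described above can be reproduced with a small simulation. The following Python sketch is only an illustration, not part of the SEI tooling: the function name `simulate`, the rule that a full buffer drops its oldest value, and the choice to run the sensor before the monitor when both are due at the same instant are all assumptions made for this example.

```python
from collections import deque
from math import gcd


def simulate(sensor_period_ms, monitor_period_ms, queue_size, duration_ms):
    """Return (published, read, lost) counts for a periodic sensor and monitor.

    Assumptions for this sketch: both tasks start at t=0, the sensor runs
    before the monitor when both are due at the same instant, a full buffer
    drops its oldest unread value, and the monitor drains the whole buffer
    each time it executes.
    """
    buffer = deque()
    published = read = lost = 0
    # Stepping by the gcd of the periods visits every instant a task is due.
    step = gcd(sensor_period_ms, monitor_period_ms)
    for t in range(0, duration_ms, step):
        if t % sensor_period_ms == 0:
            if len(buffer) == queue_size:
                buffer.popleft()  # unread value is overwritten
                lost += 1
            buffer.append(t)
            published += 1
        if t % monitor_period_ms == 0:
            read += len(buffer)
            buffer.clear()
    return published, read, lost


if __name__ == "__main__":
    print("same rate, one slot:  ", simulate(1000, 1000, 1, 4000))
    print("fast sensor, one slot:", simulate(500, 1000, 1, 4000))
    print("fast sensor, two slots:", simulate(500, 1000, 2, 4000))
```

Under these assumptions, the single-slot buffer loses values as soon as the sensor period drops to 500 ms, while a two-slot buffer does not. Values still waiting in the buffer when the simulation ends are counted as neither read nor lost.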

This type of issue may not be important to your system, and checking the correct application of the pattern depends on your system requirements. If the data being exchanged is of any particular importance, however, you must check that the pattern is applied correctly in the architecture. In this example (the publish-subscribe pattern), validating the correct application of the pattern requires that:

- without queued communication, the monitor is executed at least as often as the sensor (and at the same rate if values must not be read twice either)

- with queued communication, the components' periods and queue size are configured consistently to avoid data loss
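One way to express such a check in code is sketched below in Python. It is a simplified stand-in for the kind of rule an analysis tool could apply, not the OSATE implementation: the `Connection` class, its field names, and the worst-case bound used (the number of sensor executions that can fall between two monitor executions) are assumptions made for this example. A queue size of 1 represents the case without queued communication.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    """Hypothetical model of one publisher-to-subscriber connection."""
    sensor_period_ms: int
    monitor_period_ms: int
    queue_size: int = 1  # 1 means a plain buffer without queuing


def check_no_data_loss(conn):
    """Return a list of findings; an empty list means the check passed."""
    # Worst case: number of sensor executions within one monitor period,
    # computed as an integer ceiling division.
    worst_case_arrivals = -(-conn.monitor_period_ms // conn.sensor_period_ms)
    findings = []
    if conn.queue_size < worst_case_arrivals:
        findings.append(
            f"queue holds {conn.queue_size} value(s) but up to "
            f"{worst_case_arrivals} can arrive between two monitor executions"
        )
    return findings


if __name__ == "__main__":
    for conn in (Connection(1000, 1000), Connection(500, 1000, 2),
                 Connection(500, 1000, 1)):
        print(conn, check_no_data_loss(conn) or "OK")
```

The bound is deliberately conservative. It ignores execution time, jitter, and scheduling, all of which a real analysis would need to take into account.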

Timing and resource-dimensioning issues are among the many problems described in a 2011 NASA report on an unintended acceleration problem in automotive software. The report states that software analysis tools detected more than 900 buffer overruns when they were used to analyze the automotive software that was experiencing the problem. Designing and analyzing the software architecture helps ensure that these types of issues are detected and avoided during system design and not propagated to subsequent development stages.

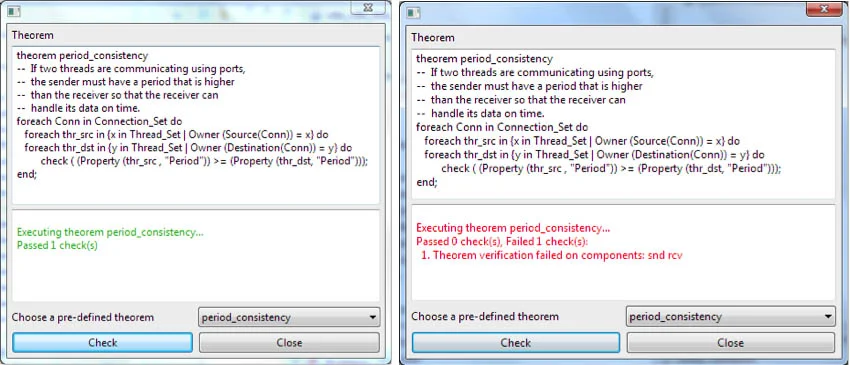

For that reason, it is important to not only make use of good architecture patterns, but to analyze an architecture to ensure correct pattern application and use. For our publish-subscribe example, we describe the architecture using AADL. Our validation tool checks its correctness by analyzing the components' characteristics. The following figures show our validation framework, with the left part illustrating the validation of a correct architecture and the right showing an error, highlighting a software architecture defect (inconsistent timing properties).

Use of the architecture validation tool: validating a correct architecture (left) and detecting inconsistent use of an architecture pattern (right).

The Take Away

Recent news reports illustrate the value of architecture analysis for improving software development, reducing potential rework costs, and avoiding delivery delays. In that context, SEI researchers are promoting the use of software architecture patterns in conjunction with analysis tools that check their correct application and thus avoid typical architecture design traps and pitfalls.

Our analysis tools look for architecture defects using validation rules, such as:

Variable Scope. Variable scope defines which entities may read or write a variable. An improperly defined variable scope limits software reuse (too many components depend on a shared global variable) or limits analysis by making it hard to trace which tasks read from or write to the variable. To avoid such defects, architects must analyze the software architecture and check whether variables are declared and used at the appropriate scope. From a technical perspective, our validation tool checks whether variables are declared with the appropriate scope according to their use (the tasks or subprograms that access them) and advocates architecture changes when appropriate. Such an approach would avoid unnecessary use of global variables, which is usually a design mistake, as evidenced by a recent report from the National Highway Traffic Safety Administration on unintended acceleration in Toyota vehicles. The same report illustrates that this is a common trend and states that some automotive software can contain more than 2,200 global variable declarations with different types.
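As a rough illustration of this kind of rule, the Python sketch below flags global variables whose usage does not justify a global scope. The `Variable` class, its fields, and the wording of the findings are hypothetical and chosen only for this example; they do not reflect how the validation tool represents a model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variable:
    """Hypothetical record of one variable in an architecture model."""
    name: str
    is_global: bool
    accessed_by: frozenset  # names of the tasks or subprograms that use it


def scope_findings(variables):
    """Flag global variables that are used by at most one task."""
    findings = []
    for var in variables:
        if not var.is_global:
            continue
        if len(var.accessed_by) == 0:
            findings.append(
                f"{var.name}: global but never accessed; consider removing it"
            )
        elif len(var.accessed_by) == 1:
            (owner,) = var.accessed_by
            findings.append(
                f"{var.name}: global but only used by {owner}; "
                f"consider making it local to {owner}"
            )
    return findings


if __name__ == "__main__":
    model = [
        Variable("engine_rpm", True, frozenset({"control_task", "display_task"})),
        Variable("scratch", True, frozenset({"control_task"})),
        Variable("count", False, frozenset({"log_task"})),
    ]
    for finding in scope_findings(model):
        print(finding)
```

In this example only `scratch` is reported, because it is declared globally yet used by a single task.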

Concurrency. Many software architectures include tasks that access shared resources (such as services, devices, or data). A common mistake is to share data among several components that read and write new values without controlling concurrent access, which can lead to consistency issues. To overcome this problem, we advise using a concurrency control mechanism (such as a semaphore or mutex) to avoid value inconsistencies and related race conditions. On the other hand, if only one task writes to the data, the concurrency mechanism may be unnecessary. Inappropriate use of multi-tasking features and locking mechanisms is the source of many software issues, as evidenced by the Flight Software Complexity Report issued by NASA. Using the appropriate mechanism is important in the context of safety-critical systems because they may have limited resources, and an unneeded locking mechanism introduces unnecessary overhead. Examples of rules to check correct use of shared resources include:

- If more than one task writes into shared data, the data must be associated with a locking mechanism (such as a mutex or semaphore).

- If only one task writes into shared data, no locking mechanism is mandatory.
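Rules of this kind lend themselves to a simple automated check. The Python sketch below is an illustration under stated assumptions rather than the tool's implementation: the `SharedData` class and its fields are hypothetical, and the sketch treats any data item with two or more writers as requiring a lock. It also notes locks that appear unnecessary, since the text above points out that they add overhead.

```python
from dataclasses import dataclass


@dataclass
class SharedData:
    """Hypothetical model of a data item shared among tasks."""
    name: str
    writers: frozenset  # names of the tasks that write to the data
    has_lock: bool = False


def check_shared_data(items):
    """Return (name, message) pairs for items that break the locking rules."""
    findings = []
    for item in items:
        if len(item.writers) > 1 and not item.has_lock:
            findings.append((item.name, "error: several writers but no lock"))
        elif len(item.writers) <= 1 and item.has_lock:
            findings.append((item.name, "note: lock may be unnecessary overhead"))
    return findings


if __name__ == "__main__":
    model = [
        SharedData("speed", frozenset({"sensor_task", "cruise_task"})),
        SharedData("mode", frozenset({"ui_task", "diag_task"}), has_lock=True),
        SharedData("temperature", frozenset({"sensor_task"}), has_lock=True),
    ]
    for name, message in check_shared_data(model):
        print(f"{name}: {message}")
```

Here `speed` is reported as an error, `temperature` receives a note, and `mode` passes.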

We are working on several validation rules for analyzing the use of global variables and refactoring the software architecture so that

- software is decomposed into modules that can be reused and deployed on separate processing nodes

- variable assignment and modification are restricted to a limited scope (so that a variable cannot be modified from anywhere)

- data flow is clearly defined and bounded to a specific scope

An outline of this effort, and our progress in developing this approach, is available online. All the validation technology is included in OSATE, our Eclipse-based AADL modeling framework under a free license. We invite you to use and test our approach, and then send us feedback.

To improve existing patterns and add new ones, we also plan to interview safety-critical system engineers and designers so that we may adapt our work to existing industrial issues, expectations, and needs. If you are a software engineer or designer who would be interested in participating, please send an email to info@sei.cmu.edu.

Additional Resources

To read more about the approach that we are developing, please visit

https://resources.sei.cmu.edu/aadl-wiki.cfm

To read the NASA Study on Flight Software Complexity, please visit https://www.nasa.gov/offices/oce/documents/FSWC_study.html

To read the National Highway Traffic Safety Administration Study of Unintended Acceleration in Toyota Vehicles, please visit https://one.nhtsa.gov/About-NHTSA/Press-Releases/ci.NHTSA%E2%80%93NASA-Study-of-Unintended-Acceleration-in-Toyota-Vehicles.print.
