The Challenge of Adversarial Machine Learning

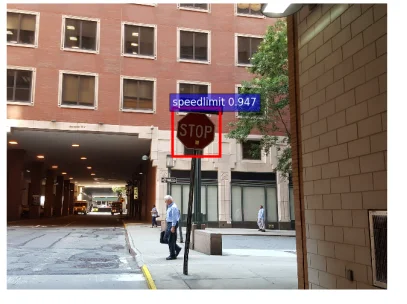

Imagine riding to work in your self-driving car. As you approach a stop sign, instead of stopping, the car speeds up and goes through the stop sign because it interprets the stop sign as a speed limit sign. How did this happen? Even though the car’s machine learning (ML) system was trained to recognize stop signs, someone added stickers to the stop sign, which fooled the car into thinking it was a 45-mph speed limit sign. This simple act of putting stickers on a stop sign is one example of an adversarial attack on ML systems.

In this SEI Blog post, I examine how ML systems can be subverted and, in this context, explain the concept of adversarial machine learning. I also examine the motivations of adversaries and what researchers are doing to mitigate their attacks. Finally, I introduce a basic taxonomy delineating the ways in which an ML model can be influenced and show how this taxonomy can be used to inform models that are robust against adversarial actions.

What is Adversarial Machine Learning?

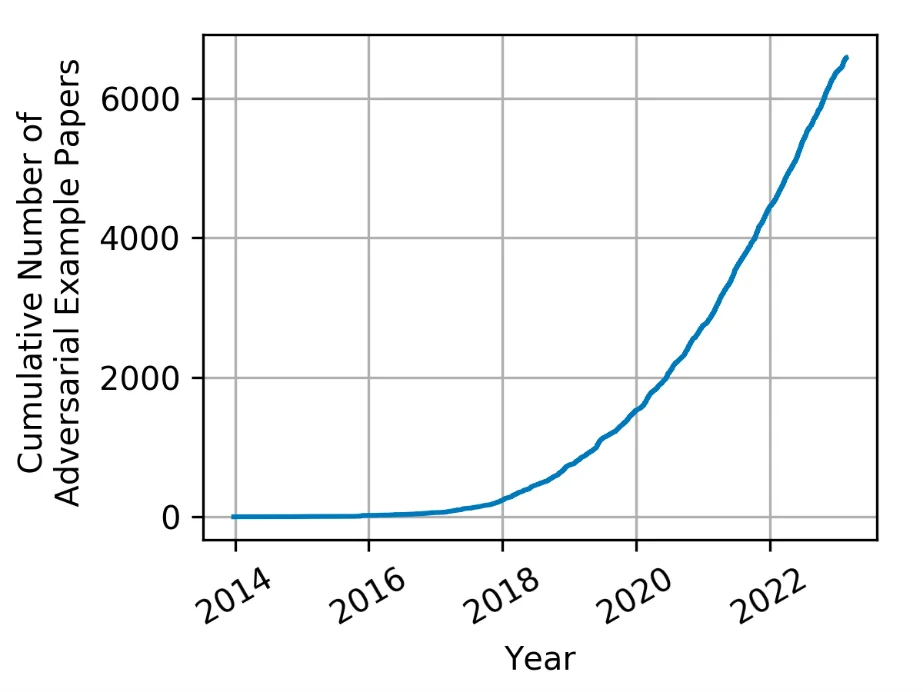

The concept of adversarial machine learning has been around for a long time, but the term has only recently come into use. With the explosive growth of ML and artificial intelligence (AI), adversarial tactics, techniques, and procedures have multiplied and attracted considerable interest.

When ML algorithms are used to build a prediction model that is then integrated into an AI system, the focus is typically on maximizing performance and ensuring the model’s ability to make proper predictions (that is, inference). This focus on capability often makes security secondary to other priorities, such as curating datasets for training, choosing ML algorithms appropriate to the domain, and tuning parameters and configurations to get the best results and probabilities. But research has shown that an adversary can influence an ML system by manipulating the model, the data, or both. By doing so, an adversary can force an ML system to

- learn the wrong thing

- do the wrong thing

- reveal the wrong thing

To counter these actions, researchers categorize the spheres of influence an adversary can have on a model into a simple taxonomy of what an adversary can accomplish or what a defender needs to defend against.

How Adversaries Seek to Influence Models

To make an ML model learn the wrong thing, adversaries take aim at the model’s training data, any foundational models, or both. Adversaries exploit this class of vulnerabilities using methods such as data and parameter manipulation, which practitioners term poisoning. Poisoning attacks cause a model to incorrectly learn something that the adversary can exploit at a future time. For example, an attacker might use data poisoning techniques to corrupt the supply chain for a model designed to classify traffic signs. The attacker could insert triggers into the training data so that the model later misclassifies a stop sign as a speed limit sign whenever the trigger is present (Figure 2). A supply chain attack is effective when a foundational model is poisoned and then posted for others to download. Models poisoned through this type of supply chain attack can remain susceptible to the embedded triggers that resulted from poisoning the data.

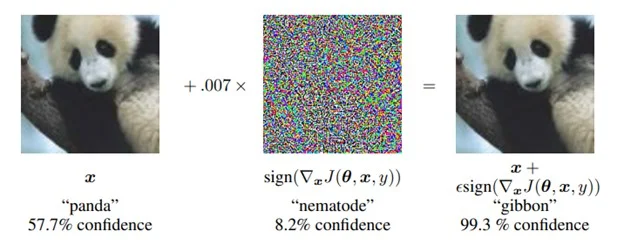
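The trigger-based poisoning described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reproduction of any particular attack: the 8x8 blank "images", the bright square used as a trigger, the 25 percent poisoning rate, and the names `stamp_trigger` and `poison_dataset` are all hypothetical.

```python
import random

STOP, SPEED_LIMIT = 0, 1  # hypothetical class labels


def stamp_trigger(image, size=2, value=1.0):
    """Return a copy of a 2-D image with a bright square in the bottom-right corner."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped


def poison_dataset(images, labels, source_label, target_label, rate, seed=0):
    """Stamp the trigger on a fraction of source-class samples and relabel them."""
    rng = random.Random(seed)
    candidates = [i for i, y in enumerate(labels) if y == source_label]
    chosen = set(rng.sample(candidates, round(rate * len(candidates))))
    poisoned_images = [
        stamp_trigger(img) if i in chosen else img for i, img in enumerate(images)
    ]
    poisoned_labels = [
        target_label if i in chosen else y for i, y in enumerate(labels)
    ]
    return poisoned_images, poisoned_labels, sorted(chosen)


# Toy dataset: 20 blank "stop sign" images and 20 blank "speed limit" images.
images = [[[0.0] * 8 for _ in range(8)] for _ in range(40)]
labels = [STOP] * 20 + [SPEED_LIMIT] * 20

poisoned_images, poisoned_labels, chosen = poison_dataset(
    images, labels, source_label=STOP, target_label=SPEED_LIMIT, rate=0.25
)
print(f"{len(chosen)} of 20 stop-sign samples now carry the trigger and a wrong label")
```

A model trained on `poisoned_images` and `poisoned_labels` would see the trigger only alongside the speed limit label, which is how it could come to associate the trigger, rather than the sign, with that class.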

Attackers can also manipulate ML systems into doing the wrong thing. This class of vulnerabilities causes a model to perform in an unexpected manner. For instance, an evasion attack can use an adversarial pattern to cause a classification model to misclassify its input. Ian Goodfellow, Jonathon Shlens, and Christian Szegedy produced one of the seminal works of research in this area. They added an adversarial noise pattern, imperceptible to humans, to an image, which forced an ML model to misclassify the image. The researchers took an image of a panda that the ML model classified properly, then generated and applied a specific noise pattern to the image. The resulting image appeared to be the same panda to a human observer (Figure 3). However, when the ML model classified this image, it predicted gibbon, thus causing the model to do the wrong thing.
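The technique from that work is commonly known as the fast gradient sign method (FGSM): nudge every input feature by a small amount in whichever direction increases the model's loss. The sketch below applies the idea to a toy logistic-regression classifier rather than an image network. The weights, input, and `epsilon` are illustrative assumptions chosen so the effect is easy to see.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, true_label, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, the gradient of the cross-entropy loss
    # with respect to the input is (p - y) * w.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model and input, chosen only for illustration.
weights, bias = [2.0, -1.0, 0.5], 0.0
x = [0.2, 0.1, 0.2]

x_adv = fgsm(weights, bias, x, true_label=1, epsilon=0.2)
print(f"clean input:       p(class 1) = {predict_proba(weights, bias, x):.3f}")
print(f"adversarial input: p(class 1) = {predict_proba(weights, bias, x_adv):.3f}")
```

No feature moves by more than `epsilon`, yet the predicted class changes. In an image setting the same bound is what keeps the perturbation hard for a human to notice.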

Finally, adversaries can cause ML to reveal the wrong thing. In this class of vulnerabilities, an adversary uses an ML model to reveal some aspect of the model, or the training dataset, that the model’s creator did not intend to reveal. Adversaries can execute these attacks in several ways. In a model extraction attack, an adversary can create a duplicate of a model that the creator wants to keep private. To execute this attack, the adversary only needs to query a model and observe the outputs. This class of attack concerns providers of ML-enabled application programming interfaces (APIs) because it can enable a customer to steal the model behind the API.
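To make the query-and-observe idea concrete, here is a deliberately simple sketch. It assumes the private model is a logistic regression and that the API returns full-precision confidence scores. Under those assumptions, one query per feature plus one extra is enough to recover the parameters exactly. Real extraction attacks against larger models typically need many more queries and train a surrogate instead. The names `make_victim_api` and `extract_model` are hypothetical.

```python
import math


def make_victim_api(weights, bias):
    """Wrap a private logistic-regression model so callers see only its confidence."""
    def query(x):
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return query


def logit(p):
    """Invert the sigmoid to recover the model's raw score from a confidence."""
    return math.log(p / (1.0 - p))


def extract_model(query, n_features):
    """Recover weights and bias using n_features + 1 queries."""
    # Querying the all-zeros input isolates the bias term.
    bias = logit(query([0.0] * n_features))
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        # Querying a unit vector isolates one weight (plus the bias).
        weights.append(logit(query(unit)) - bias)
    return weights, bias


# The attacker never sees these values, only the query function.
secret_weights, secret_bias = [1.5, -2.0, 0.75], 0.25
api = make_victim_api(secret_weights, secret_bias)

stolen_weights, stolen_bias = extract_model(api, n_features=3)
print("stolen weights:", [round(w, 6) for w in stolen_weights])
print("stolen bias:   ", round(stolen_bias, 6))
```

The sketch also hints at one reason API providers may choose to round or withhold confidence scores: the less precise the output, the less each query reveals.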

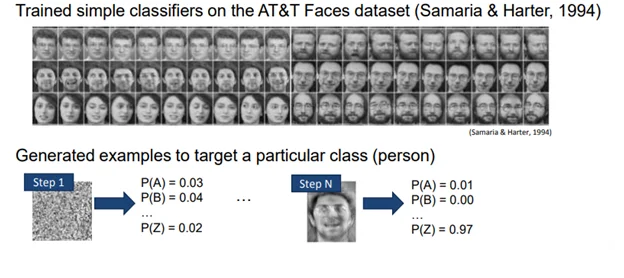

Adversaries use model inversion attacks to reveal information about the dataset that was used to train a model. If the adversaries can gain a better understanding of the classes and the private dataset used, they can use this information to open a door for a follow-on attack or to compromise the privacy of training data. The concept of model inversion was illustrated by Matt Fredrikson et al. in their paper "Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures," which examined a model trained with a dataset of faces.

In this paper, the authors demonstrated how an adversary can use a model inversion attack to turn an initial random noise pattern into a face from the ML system. The adversary feeds a generated noise pattern into the trained model and then uses traditional ML mechanisms to iteratively refine the pattern so that the model’s confidence level increases. With the model’s outputs as a guide, the noise pattern eventually starts to look like a face. When this face was presented to human observers, they were able to link it back to the original person with greater than 80 percent accuracy (Figure 4).
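The refinement loop can be sketched as follows. This is a toy stand-in, not the procedure from the paper: the "model" is a four-feature logistic regression instead of a face recognizer, and the gradient is estimated with finite differences from confidence queries, which is an assumption made here to keep the example self-contained. All names and constants are hypothetical.

```python
import math
import random


def make_model_api(weights, bias):
    """Stand-in for a trained model: returns only the confidence for the target class."""
    def query(x):
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return query


def estimate_gradient(query, x, h=1e-4):
    """Estimate how the confidence changes with each feature, using queries only."""
    grad = []
    for i in range(len(x)):
        up, down = x[:], x[:]
        up[i] += h
        down[i] -= h
        grad.append((query(up) - query(down)) / (2 * h))
    return grad


def invert(query, n_features, n_steps=200, step_size=0.5, seed=0):
    """Refine a random noise pattern so the model grows more confident in it."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(n_features)]
    start_conf = query(x)
    for _ in range(n_steps):
        grad = estimate_gradient(query, x)
        # Step uphill, then clip back to the valid pixel range [0, 1].
        x = [min(1.0, max(0.0, xi + step_size * g)) for xi, g in zip(x, grad)]
    return x, start_conf, query(x)


query = make_model_api(weights=[3.0, -2.0, 1.0, -1.0], bias=-1.0)
recovered, start_conf, final_conf = invert(query, n_features=4)
print(f"confidence rose from {start_conf:.3f} to {final_conf:.3f}")
```

The recovered input drifts toward whatever the model considers most typical of the target class. With a face recognition model, that is what lets the refined pattern come to resemble a person in the training data.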

Defending Against Adversarial AI

Defending a machine learning system against an adversary is a hard problem and an area of active research with few proven generalizable solutions. While generalized and proven defenses are rare, the adversarial ML research community is hard at work producing specific defenses that can be applied to protect against specific attacks. Developing test and evaluation guidelines will help practitioners identify flaws in systems and evaluate prospective defenses. This area of research has developed into a race in the adversarial ML research community in which defenses are proposed by one group and then disproved by others using existing or newly developed methods. However, the plethora of factors influencing the effectiveness of any defensive strategy precludes articulating a simple menu of defensive strategies geared to the various methods of attack. Rather, we have focused on robustness testing.

ML models that successfully defend against attacks are often assumed to be robust, but the robustness of ML models must be proved through test and evaluation. The ML community has started to outline the conditions and methods for performing robustness evaluations on ML models. The first consideration is to define the conditions under which the defense or adversarial evaluation will operate. These conditions should have a stated goal, a realistic set of capabilities your adversary has at its disposal, and an outline of how much knowledge the adversary has of the system.
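One way to keep these conditions explicit is to record them as a small data structure before any testing starts. The sketch below is one possible shape, not a standard schema: the class and field names are hypothetical, and the three knowledge levels use the common white-box, gray-box, and black-box terms, which this post does not itself define.

```python
from dataclasses import dataclass
from enum import Enum


class Knowledge(Enum):
    """How much the adversary is assumed to know about the system."""
    WHITE_BOX = "full knowledge of the model and its training data"
    GRAY_BOX = "partial knowledge of the model or its training data"
    BLACK_BOX = "query access only"


@dataclass(frozen=True)
class EvaluationConditions:
    goal: str             # what the adversary is trying to achieve
    capabilities: tuple   # what the adversary can realistically do
    knowledge: Knowledge  # what the adversary knows about the system

    def summary(self):
        """Render the conditions as one line for a test report."""
        caps = "; ".join(self.capabilities)
        return (
            f"Goal: {self.goal}. Capabilities: {caps}. "
            f"Knowledge: {self.knowledge.value}."
        )


# Hypothetical conditions for the stop-sign scenario from the introduction.
conditions = EvaluationConditions(
    goal="cause a stop sign to be classified as a speed limit sign",
    capabilities=("place stickers on physical signs",),
    knowledge=Knowledge.BLACK_BOX,
)
print(conditions.summary())
```

Making the record immutable (`frozen=True`) is a small design choice that keeps the stated conditions from changing quietly partway through an evaluation.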

Next, you should ensure your evaluations are adaptive. In particular, every evaluation should build upon prior evaluations but also be independent and represent a motivated adversary. This approach allows a holistic evaluation that takes all information into account and is not overly focused on one error instance or set of evaluation conditions.

Finally, scientific standards of reproducibility should apply to your evaluation. For example, you should be skeptical of any results obtained and vigilant in proving the results are correct and true. The results obtained should be repeatable, reproducible, and not dependent on any specific conditions or environmental variables that prohibit independent reproduction.

The Adversarial Machine Learning Lab at the SEI’s AI Division is researching the development of defenses against adversarial attacks. We leverage our expertise in adversarial machine learning to improve the robustness of ML models and the ways that robustness is tested and measured. We encourage anyone interested in learning more about how we can support your machine learning efforts to reach out to us at info@sei.cmu.edu.

Additional Resources

- Listen to the podcast Deep Learning in Depth: Adversarial Machine Learning.

- Read the SEI Blog post Comments on NIST IR 8269: A Taxonomy and Terminology of Adversarial Machine Learning.

- Read the SEI Blog post Adversarial ML Threat Matrix: Adversarial Tactics, Techniques, and Common Knowledge of Machine Learning.
