Technical Issues in Navigating the Transition from Sustainment to Engineering Software-Reliant Systems

In our work with government software programs, we have noted a sea change: Acquisition programs focused solely on sustainment are being transitioned to organic software engineering responsibilities and are increasingly charged with designing and engineering new systems. Many of these teams are taking on these responsibilities while working in new technical domains. This post explores technical issues that must be addressed.

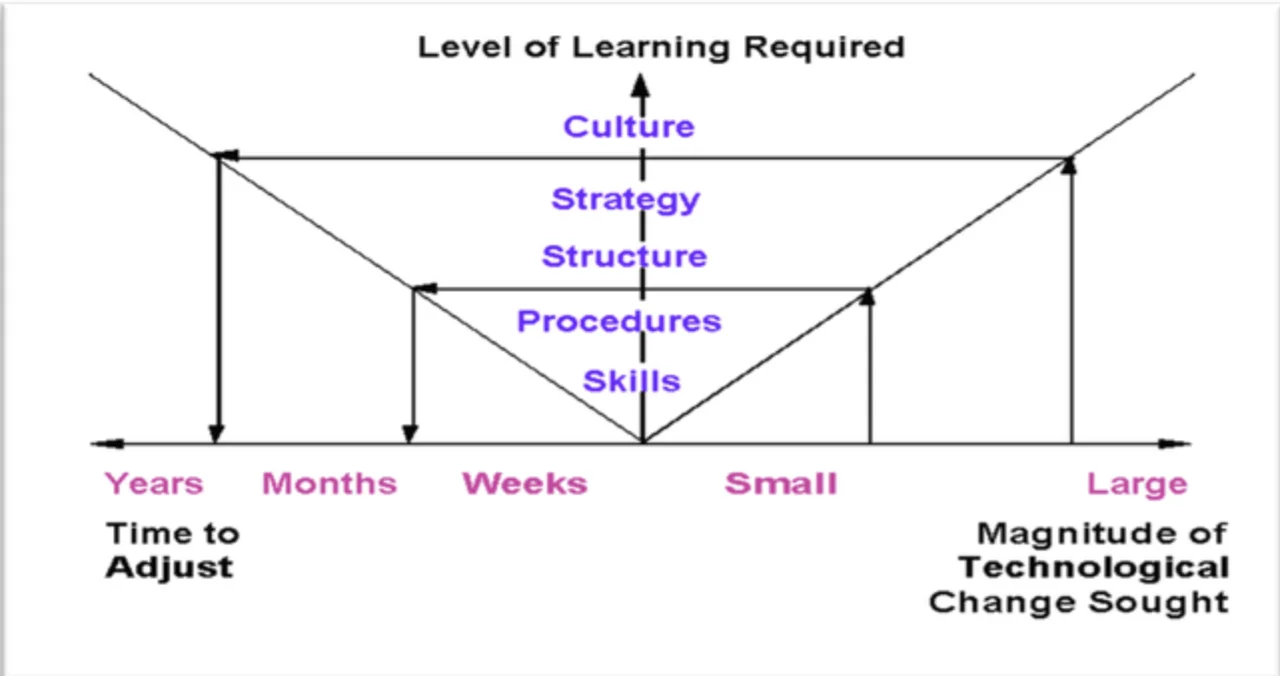

In 1990, Paul S. Adler created a framework for culture change and noted, “Possibly the single most important element in the technical base is the organization’s mix of technical and technology management skills.” As shown in the figure below, Adler argued that a three-legged stool of culture change must be supported by people (skills, culture), processes (procedures), and technology (structure, skills, strategy) situations and solutions.

In this and earlier posts, we explore issues that the federal government should address to help organic sustainment teams successfully transition into software engineering teams, while also helping present and future warfighters maintain a competitive edge. Our initial post gave an overview of the issues that the DoD should address to make this transition successful. Our second and third posts took a deeper dive into the people and process issues that must be addressed as teams make this transition. This post explores some technical concerns, such as how people use technology to assist and automate software development and testing, that must be addressed when teams that are optimized to do sustainment-style work pivot toward broader software-engineering projects.

DoD Challenges Spur Transition to Software Creation

In a November 2020 conversation with Defense Acquisition University, then-Under Secretary of Defense for Acquisition and Sustainment Ellen Lord discussed how, in today’s era of rapid technological advancements and the changing nature of the battlefield, warfighters need access to capabilities that enable quick decision making and give them a competitive edge.

“I want our [coders] to get downrange and talk to the Warfighter, or talk to them virtually,” Lord said. “I want them to understand what the problem is, and then hand a potential solution, a prototype, and let that get in the Warfighter's hands to try.”

To help address the concerns about externally contracted software, the DoD increasingly supports the creation of government-managed labs and centers, as well as the transformation of organic sustainment organizations into engineering organizations that expand traditional government software capabilities.

The issues explored in this series of blog posts stem from the SEI’s experience as a federally funded research and development center. Our mission focuses on creating and transitioning software engineering knowledge to our stakeholders so that they are better equipped to acquire, develop, operate, and sustain software for strategic national advantage. We are keen to help DoD software sustainment organizations successfully navigate the transition to their new roles.

For each technical concern in this post, we will set the context, outline the problem, and suggest solutions.

Effectively Leveraging Software Platforms

Context. Large, complex software systems are often implemented using the Layered Architecture pattern, in which a software platform is created to accommodate a broad range of capabilities and features. A software platform anticipates points of variability to support multiple efforts and serves the software developers who leverage it to build new applications.

Software platforms are inherently meant to be reused by applications. These platforms enable large-scope projects because each new capability is built on an existing software foundation. Examples of software platforms include Android, Windows, Spring, .NET, node.js, and React.

Simply stated, a software platform consists of application programming interfaces (APIs), implementations of these APIs, and associated documentation and build artifacts (e.g., Make files, Gradle scripts, class files, etc.). Software platforms are designed to satisfy developers, so that applications (“apps”) created by developers can satisfy users.

Problem. Although platforms help simplify the development of new applications, software development teams must understand the platform and use it effectively. When teams are not familiar with a targeted platform, developers may reimplement features provided by the platform.

When engineers do not understand the constraints and interaction patterns of a platform, resultant applications will be of low quality, be hard to maintain, and may not meet specifications. For instance, a platform’s behavior is often customized using callbacks and other patterns. These Inversion of Control (IoC) architectures borrow the platform’s context of execution and limit what should be done via a callback.

Misunderstanding IoC can lead to problems, and application responsiveness is one notable example. In user interface (UI) frameworks, such as Android Activity, if a callback does too much work during a UI event (e.g., a key click, button press, or menu drop down), the UI may be perceived as non-responsive, and the system may report an “Application Not Responding” (ANR) error. Another common IoC problem is limited scalability: processing intended to run asynchronously may be accidentally serialized. Developers may also create complex and unmaintainable solutions to work around perceived deficiencies in a platform.
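To make the callback constraint concrete, here is a minimal Python sketch that is not taken from any real UI framework. `ToyPlatform`, `on_click`, and `slow_work` are hypothetical names, and the toy platform simply calls a registered callback on its own thread, which is the essence of IoC. The callback hands the slow work to a worker thread and returns promptly, so the platform is free to handle the next event.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ToyPlatform:
    """Hypothetical stand-in for a UI framework that owns the dispatch thread."""

    def __init__(self):
        self._handlers = {}

    def on(self, event_name, callback):
        self._handlers[event_name] = callback

    def dispatch(self, event_name):
        # The callback borrows the platform's thread. Until it returns,
        # the platform cannot process any other event.
        self._handlers[event_name]()


log = []
pending = []
release = threading.Event()  # stands in for a slow operation completing
pool = ThreadPoolExecutor(max_workers=1)


def slow_work():
    release.wait(timeout=5)  # simulated long-running task
    log.append("work finished")


def on_click():
    # Hand the slow work to a worker thread and return right away.
    pending.append(pool.submit(slow_work))
    log.append("callback returned")


platform = ToyPlatform()
platform.on("click", on_click)
platform.dispatch("click")
log.append("platform free for next event")

release.set()        # let the simulated slow work complete
pending[0].result()  # wait for the worker before inspecting the log
pool.shutdown()
```

Had `on_click` called `slow_work()` directly, the dispatch thread would have been held for the full duration of the work, which is the pattern that makes a UI appear frozen.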

Solutions. Use Platforms Effectively. Platforms offload common programming tasks from developers, allowing organizations to focus on new requirements rather than code infrastructure. Platforms are owned by a team that focuses on that common infrastructure and serves a user community made up of developers making applications for the platform. By developing applications that use the platform, the user community advocates for changes, reports defects, and enhances stability.

Platforms are designed to simplify efforts, and for any effort to be successful, the team must be able to understand and effectively use the platform.

Have experienced mentors. Organizational leadership needs to allow time for team members to familiarize themselves with all the available features on a platform. This learning curve can be mitigated by assigning more experienced team members, or external consultants and teachers, to serve as mentors and designers.

Use the backlog to refactor. As team members familiarize themselves with a platform, they must be willing to refactor once they have a better understanding of a platform’s capabilities, features, and constraints. Without refactoring, the implementation settles into a suboptimal state, and the uncorrected code creates a larger surface area for maintenance and potential problems. A backlog that contains pending obligations should be used to create an environment that supports refactoring and prioritizes maintainability, which helps teams avoid and resolve eventual software defects. Avoid the sunk-cost fallacy, in which removing code is considered a waste of previous investments of time, effort, and money.

Grow the platform and grow the team’s skills. If a team believes a feature is missing from a platform, they must be willing to contribute their code into that platform for others to use. Creating a give-back environment promotes a space where engineers expand their skills and build connections with other engineers. Open-source projects based on the GNU General Public License (GPL) are the canonical give-back example in software: users may take and modify the code, but anyone who distributes a modified version must make its source available under the same license, so improvements can flow back to the community.

Addressing Infrastructure Issues

Context. A product’s original software development required the creation and/or integration of hardware and software.

Problem. Development of DoD systems often involves specialized hardware that requires specific expertise and tools to develop supporting software. Members of a sustainment team may not have access to all the needed artifacts to do their job.

A product always contains a degree of embedded context and historical knowledge that is inadequately documented and that requires extra effort to comprehend without access to the original team. This situation is further complicated because the hardware available may not be identical to that on which the system is deployed, complicating testing and the overall evaluation of new solutions. In particular, in real-time systems, the lack of access to deployment hardware and/or a high-fidelity test environment can delay some assessment and analysis until final system integration can occur.

Solutions. Developing large-scale software-reliant systems requires coordinating a long list of complex capabilities. In particular, a team must intuitively understand the capabilities of existing software systems and platforms, as well as the hardware onto which the software will be deployed. Modern software development methodologies (e.g., Agile) align with this need because they let developers iteratively and incrementally build the skills to analyze the interactions among the hardware, software, and applications of the system.

An environment that allows teams to change and revisit past decisions is necessary as part of the solution. Failure is indeed sometimes an option and is more readily correctable in software, due to its fundamentally malleable nature.

Software engineering teams need time, experience, and encouragement to develop a holistic view of a software platform and all its applications. Creating an environment that allows time for the team to acclimate to a system is a critical factor to success. Great engineering organizations have the following features:

- curiosity enablement

- engineer input on what they do next

- self-directed projects

- 20% projects; dedicated work time for skills development in personal interest areas

- mentoring (explicit and casual)

- engineers working outside their comfort zone for skill enhancement

- encouraging people with options for career development

- technical conferences

- code challenges

- rotating roles between dot releases (project lead, tech lead)

- investments in training and professional development

- internal people development pipeline

Software developers who have become competent in a narrow set of software development skills (e.g., bug fixing in a sustainment setting) may erroneously be perceived as generalized software development experts. A hallmark of great software development organizations is recognizing good software engineers and having a well-defined path to develop them into well-rounded software development experts.

Next, we explore additional technical concerns involved with expanding organic sustainment teams into organic software engineering teams.

Strategically Focus New Development

Context. Software development is a rapidly evolving domain, but many of the basic building blocks have long existed in the form of commercial off-the-shelf (COTS) or open-source solutions. Most software efforts need common, basic operations and functionality that are available off the shelf to aid in their development of hardware and software platforms. Enterprise software development efforts likely need logging, atomicity, parallelism, and data element storage and manipulation. Modern platforms provide a range of powerful and useful infrastructure elements out of the box.

Problem. When software engineers engage a new software development platform or framework, they are often unaware of the platform’s existing features. As a result of this unfamiliarity (or a not-invented-here mindset), such software engineers reinvent features native to the unfamiliar environment.

Rarely, a unique system or processing domain (e.g., a custom system created for use in classified situations) can present challenges to using existing COTS software components for these common software operations.

Through our involvement in government software efforts, we often encounter custom implementations of common data structures and algorithms (e.g., list and hash implementations, logging, network I/O, and mutexes). These custom implementations increase the effort and cost to develop and maintain the product. They also introduce non-standard paradigms that raise the barrier to entry for new developers.

Solution. While implementing basic data structures and algorithms can be educational, deploying such reimplemented components into production detracts from overall system quality by increasing complexity and decreasing maintainability. It is rare that a custom implementation is better than an existing, long-lived, and well-maintained COTS or open-source option. Many algorithms, data structures, and system primitives for parallelism, filesystem access, locking, and so on are implemented in modern languages and toolchains. These implementations are well-optimized, tested, and supported.
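As an illustration of leaning on what a language already ships, the following Python sketch uses only standard-library pieces for counting, locking, parallelism, and logging instead of hand-rolled equivalents. The `tally` function, the `telemetry` logger name, and the message format are hypothetical and chosen only for the example.

```python
import logging
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("telemetry")  # hypothetical logger name


def tally(messages):
    """Count messages by source using standard-library building blocks."""
    counts = Counter()       # instead of a custom hash table
    lock = threading.Lock()  # instead of a custom mutex

    def record(message):
        source = message.split(":", 1)[0]
        with lock:
            counts[source] += 1

    # Instead of hand-managed threads, use the standard worker pool.
    with ThreadPoolExecutor(max_workers=4) as pool:
        list(pool.map(record, messages))

    # Instead of a custom logging layer, use the logging module.
    logger.info("tallied %d messages", sum(counts.values()))
    return counts


counts = tally(["nav:fix", "radio:rx", "nav:fix", "nav:lost"])
```

Every piece here is documented, widely understood, and maintained by someone other than the project team, which is the maintainability argument made above.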

When it comes to work, humans should be motivated to make things simpler. Applying this motivation to software development, it follows that engineering teams should focus on reusable software development because it has the effect of

- reducing total ownership costs

- increasing return on investment

- eliminating repetition [i.e., the Don’t Repeat Yourself (DRY) principle]

- focusing engineering effort on meeting customer needs

Software engineers should be able to recognize red flags that violate the principles outlined above, such as opportunistic reuse (e.g., copying and pasting). Software sustainment groups transitioning to an engineering role are often unfamiliar with a code base and will encounter these problems as they do their work. Teams should be empowered and incentivized to highlight these opportunities for improvement and add them to the project’s technical-debt backlog. They should also be given the ability to address them in the portion of the work cycle dedicated to backlog work.
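A small, hypothetical Python example of resolving a copy-and-paste red flag: rather than pasting a separate bounds check for each field, a single helper (`require_in_range`, a name invented here) is reused. The field names and limits are illustrative assumptions only.

```python
def require_in_range(name: str, value: float, low: float, high: float) -> float:
    """One shared range check that replaces per-field copy-pasted checks."""
    if not low <= value <= high:
        raise ValueError(f"{name}={value} is outside [{low}, {high}]")
    return value


def validate_track(altitude_ft: float, speed_kts: float) -> tuple[float, float]:
    # Each field reuses the same helper, so a fix to the check
    # (for example, a clearer error message) is made in one place.
    return (
        require_in_range("altitude_ft", altitude_ft, 0, 60_000),
        require_in_range("speed_kts", speed_kts, 0, 700),
    )
```

A call such as `validate_track(1000, 250)` returns the validated pair, while an out-of-range value raises `ValueError` naming the offending field.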

DoD Code at Large Vs. General Issues

Context. DoD systems are often extremely large and complex. In the interest of fostering innovation through competition, these systems will have contributions from multiple contractors, subcontractors, and organic government teams.

Problem. The wide range of contributors that feed into a large software effort can create a number of potential concerns. Software delivered from contributors will be of varying quality, and the integration into a larger system will amplify those potential issues. Engineering organizations that feed into the DoD do not always deliver systems that include comprehensive architecture and design considerations. Likewise, key pieces for maintenance, such as build and test artifacts, are often excluded from delivery if they are not properly contracted for.

In defense software, there are also concerns about access to simulation environments and to buildouts of specialized hardware platforms and other artifacts. These environments are hard to tie into modern DevSecOps pipelines. These issues lead to contention for access to the hardware environments and limit test and integration opportunities.

Solution. Accept that large systems of systems require extra people power to integrate, test, and maintain. Over time, some engineers will develop a deep, organic understanding of a system. If that knowledge is combined with encouragement from leadership, new ideas to streamline and automate more of the process will emerge. This feedback loop is how iterative methodologies increase the velocity of development efforts. Iterative methodologies rely on incremental improvement to a product and the product development process to deliver software more rapidly. If an organization is too rigid about changes to the latter, the goal of a more streamlined, rapid, and innovative product lifecycle cannot be achieved.

The development of simulators, emulators, and digital twins should be prioritized to assist the project. Such simulated environments may have tradeoffs in fidelity. However, they will ease contention for the available hardware test systems.
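One common way to let a simulator stand in for scarce hardware is to code against a shared interface. The Python sketch below assumes a hypothetical `Altimeter` interface and a scripted `SimulatedAltimeter`; a driver for the real device would implement the same method. It is a low-fidelity illustration, not a description of any particular DoD system.

```python
from typing import Protocol


class Altimeter(Protocol):
    """Hypothetical interface shared by real hardware and simulators."""

    def read_feet(self) -> float: ...


class SimulatedAltimeter:
    """Low-fidelity simulator that replays scripted readings."""

    def __init__(self, readings):
        self._readings = iter(readings)

    def read_feet(self) -> float:
        return next(self._readings)


def below_floor(sensor: Altimeter, floor_ft: float) -> bool:
    # Application logic depends only on the interface, so it can run in
    # a CI pipeline against the simulator and later against real hardware.
    return sensor.read_feet() < floor_ft


sim = SimulatedAltimeter([1200.0, 450.0])
results = [below_floor(sim, 500.0), below_floor(sim, 500.0)]
```

Because `below_floor` never names a concrete device, tests of the application logic do not compete for time on the hardware test systems.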

Ongoing research efforts at the SEI and within the DoD are attempting to integrate higher-fidelity simulations, digital twins, and hardware-in-the-loop (HWIL) into DevSecOps-style continuous integration (CI) efforts.

A Call to Address Underlying Incentives and Legacy Foundations

Our intent with these posts is not to place blame on any group, but to urge DoD leaders and middle-level managers to address the underlying incentives and long-held foundations that create disadvantages for sustainment groups when transitioning to engineering, which is our future reality.

Additional Resources

Read Shifting from Software Sustainment to Software Engineering in the DoD.

Read other SEI blog posts on software sustainment.
