How Easy Is It to Make and Detect a Deepfake?

A deepfake is a media file (image, video, or speech, typically representing a human subject) that has been deceptively altered using deep neural networks (DNNs) to change a person’s identity. This alteration typically takes the form of a “faceswap,” in which the identity of a source subject is transferred onto a destination subject. The destination’s facial expressions and head movements remain the same, but the appearance in the video is that of the source. A report published this year estimated that more than 85,000 harmful deepfake videos had been detected up to December 2020, with the number doubling every six months since observations began in December 2018.

Determining the authenticity of video content can be an urgent priority when a video pertains to national-security concerns. Evolutionary improvements in video-generation methods are enabling relatively low-budget adversaries to use off-the-shelf machine-learning software to generate fake content with increasing scale and realism. The House Intelligence Committee discussed at length the rising risks presented by deepfakes in a public hearing on June 13, 2019. In this blog post, I describe the technology underlying the creation and detection of deepfakes and assess current and future threat levels.

The large volume of online video presents an opportunity for the United States Government to enhance its situational awareness on a global scale. As of February 2020, Internet users were uploading an average of 500 hours of new video content per minute on YouTube alone. However, the existence of a wide range of video-manipulation tools means that video discovered online can’t always be trusted. What’s more, as the idea of deepfakes has gained visibility in popular media, the press, and social media, a parallel threat has emerged from the so-called liar’s dividend—challenging the authenticity or veracity of legitimate information through a false claim that something is a deepfake even if it isn’t.

The Evolution of Deepfake Technology

A DNN is a neural network that has more than one hidden layer. There are numerous DNN architectures used in deep learning that are specialized for image, video, or speech processing. For videos, identities can be substituted in two ways: replacement or reenactment. In a replacement, also called a “faceswap,” the identity of a source subject is transferred onto a destination subject’s face. The destination’s facial expressions and head movements remain the same, but the identity takes on that of the source. In a reenactment video, a source person drives the facial expressions and head movements of a destination person, preserving the identity of the destination. This type is also called a “puppet-master” scenario because the identity of the puppet (destination) is preserved, while his or her expressions are driven by a master (source).

The term deepfake originated from the screen name of a member of a popular Reddit forum who in 2017 first posted deepfaked videos. These videos were pornographic, and after the user created a forum for them, r/deepfakes, it attracted many members, and the technology spread through the amateur world. In time, this forum was banned by Reddit, but the technology had become popular, and its implications for privacy and identity fraud became apparent.

Although the term originated in late 2017, the technology of using machine learning in the field of computer-vision research was well established in the film and videogame industries and in academia. In 1997 researchers working on lip-syncing created the Video Rewrite program, which could create a new video from existing footage of a person saying something different than what was in the original clip. While it used machine learning that was common in the computer-vision field at the time, it did not use DNNs, and hence a video it produced would not be considered a deepfake.

Computer-vision research using machine learning continued throughout the 2000s, and in the mid-2010s, the first academic works using DNNs to perform face recognition emerged. One of the main works to do so, DeepFace, used a deep convolutional neural network (CNN) to classify a set of 4 million human images. The DeepID tool expanded on this work, tweaking the CNNs in various ways.

The transition from facial recognition and image classification to facial reenactment and swapping occurred when researchers within the same field began using additional types of DNN models. The first was an entirely new type of DNN, the generative adversarial network (GAN), created in 2014. The second was the autoencoder or “encoder-decoder” architecture, which had been in use for a few years but had not been used for generating data until the Variational Autoencoder (VAE) network model was introduced in 2014. Both of the open-source tools used in this work, Faceswap and DeepFaceLab, implement autoencoder networks built from convolutional layers. The third, a type of recurrent neural network called a long short-term memory (LSTM) network, had been in use for decades, but it wasn’t until 2015, with the work of Shimba et al., that it was used for facial reenactment.

An early example of using a GAN model was the open-source pix2pix tool that has been used by some to perform facial reenactments. This work used a conditional GAN (cGAN), which is a GAN that is specialized or “conditioned” to generate images. There are applications for pix2pix outside of creating deepfakes, and the authors refer to their work as image-to-image translation. In 2018, this work was extended to perform on high-definition (HD) images and video. Stemming from this image-to-image translation work, an improvement upon a cGAN called a CycleGAN was introduced. In a CycleGAN, the generated image is cyclically converted back to its input until the loss is optimized.
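The cyclic conversion described above is commonly expressed as a cycle-consistency loss: translate an image to the other domain, translate it back, and measure how far the result is from the original. The Python sketch below is only an illustration of that idea. The toy "generators" are hypothetical stand-ins that do simple arithmetic on a list of pixel values (real generators are deep networks), and the sketch covers only one direction of the cycle term, leaving out the adversarial losses that a full CycleGAN also optimizes.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(image, forward, backward):
    """Translate the image, translate it back, and compare with the original."""
    translated = forward(image)
    recovered = backward(translated)
    return l1_distance(image, recovered)


# Hypothetical toy "generators" (assumptions for illustration only).
def to_domain_b(pixels):
    return [2.0 * p for p in pixels]


def to_domain_a_good(pixels):
    # Exactly undoes to_domain_b, so the cycle returns the original image.
    return [0.5 * p for p in pixels]


def to_domain_a_poor(pixels):
    # Adds a constant offset, so the cycle does not return the original image.
    return [0.5 * p + 0.25 for p in pixels]


image = [0.0, 0.25, 0.5, 1.0]
good_loss = cycle_consistency_loss(image, to_domain_b, to_domain_a_good)
poor_loss = cycle_consistency_loss(image, to_domain_b, to_domain_a_poor)
print(good_loss, poor_loss)
```

A pair of generators that invert each other gives a loss of zero, while the mismatched pair is penalized. Training drives the generators toward the first situation.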

Early examples of using LSTM networks for facial reenactment are the works of Shimba et al. and Suwajanakorn et al., which both used LSTM networks to generate mouth shapes from audio speech excerpts. The work of Suwajanakorn et al. received attention because they chose President Obama as their target. An LSTM was used to generate mouth shapes from an audio track. The mouth shapes were then transferred onto a video with the target person using non-DNN-based machine-learning methods.

While the technology itself is neutral, it has been used many times for nefarious activities, mostly to create pornographic content without consent, and also in attempts to commit acts of fraud. For example, Symantec reported cases of CEOs being tricked into transferring money to external accounts by deepfaked audio. Another concern is the use of deepfakes to interfere at the level of nation states, either to disrupt an election process through fake videos of candidates or by creating videos of world leaders saying false things. For example, in 2018 a Belgian political party created and circulated a deepfake video of President Trump calling for Belgium to exit the Paris Agreement. And in 2019 the president of Gabon, who was hospitalized and feared dead, was shown in video giving an address that was deemed a deepfake by his rivals, leading to civil unrest.

How to Make a Deepfake and How Hard It Is

Deepfakes can be harmful, but creating a deepfake that is hard to detect is not easy. Creating a deepfake today requires the use of a graphics processing unit (GPU). To create a persuasive deepfake, a gaming-type GPU, costing a few thousand dollars, can be sufficient. Software for creating deepfakes is free, open source, and easily downloaded. However, the significant graphics-editing and audio-dubbing skills needed to create a believable deepfake are not common. Moreover, the work needed to create such a deepfake requires a time investment of several weeks to months to train the model and fix imperfections.

The two most widely used open-source software frameworks for creating deepfakes today are DeepFaceLab and FaceSwap. They are public and open source and are supported by large and committed online communities with thousands of users, many of whom actively participate in the evolution and improvement of the software and models. This ongoing development will enable deepfakes to become progressively easier to make for less sophisticated users, with greater fidelity and greater potential to create believable fake media.

As shown in Figure 1, creating a deepfake is a five-step process. The computer hardware required for each step is noted.

1. Gathering of source and destination video (CPU)—A minimum of several minutes of 4K source and destination footage is required. The videos should demonstrate similar ranges of facial expressions, eye movements, and head turns. Finally, the source and destination subjects should already look similar: they should have similar head and face shape and size, similar head and facial hair, similar skin tone, and the same gender. If not, the swapping process will show these differences as visual artifacts, and even significant post-processing may not be able to remove them.

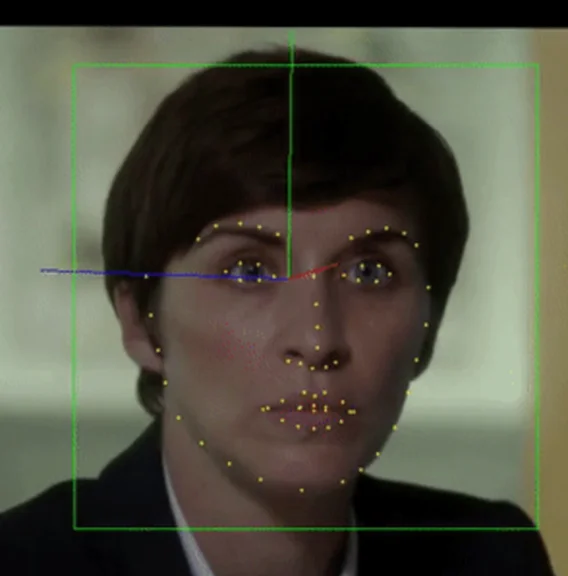

2. Extraction (CPU/GPU)—In this step, each video is broken down into frames. Within each frame, the face is identified (usually using a DNN model), and approximately 30 facial landmarks are identified to serve as anchor points for the model to learn the location of facial features. An example image from the FaceSwap framework is shown in Figure 2 below.

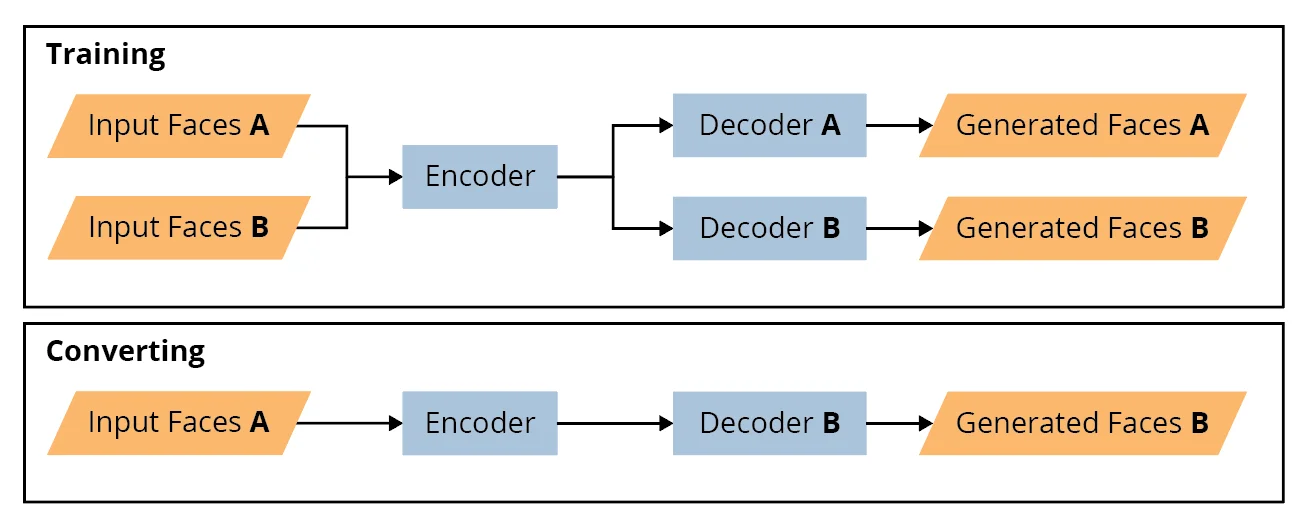

3. Training (GPU)—Each set of aligned faces is then input to the training network. A general schematic of an encoder-decoder network for training and conversion is shown in Figure 1 above. Notice that batches of aligned and masked input faces A and B (after the extraction step) are both fed into the same encoder network. The output of the encoder network is a representation of all the input faces in a lower dimensional vector space, called the latent space. These latent-space objects are then each passed separately through decoder networks for the A and B faces that attempt to generate, or recreate, each set of faces separately. The generated faces are compared to the original faces, the loss function is calculated, backpropagation occurs, and the weights for the decoder and encoder networks are updated. This occurs for another batch of faces until the desired number of epochs is achieved. The user decides when to terminate the training by visually inspecting the faces for quality or when the loss value does not decrease any further. There are times when the resolution or quality of the input faces, for various reasons, prevents the loss value from reaching a desired value. Most likely in this case, no amount of training or post-processing will result in a deepfake that is convincing.

4. Conversion (CPU/GPU)—The deepfake is created in the conversion step. To create a faceswap in which face A is swapped with B, the flow in the lower portion of Figure 1 above is used. Here, the aligned, masked input faces A are fed into the encoder. Recall that this encoder has learned a representation for both faces A and B. When the output of the encoder is passed to the decoder for B, the decoder attempts to generate a face with the appearance of B that keeps the expression and pose of the input face A. No learning or training is done here; the conversion step is a one-way pass of a set of input faces through the encoder-decoder network. The output of the conversion process is a set of frames that must then be assembled into a video by other software.

5. Post-processing (CPU)—This step requires extensive time and skill. Minor artifacts may be removable, but large differences likely cannot be edited out. Post-processing can be performed with the deepfake software frameworks’ built-in compositing and masking, but the results are less than desirable. DeepFaceLab provides the ability to incrementally adjust color correction, mask position, mask size, and mask feather for each frame of video, but the granularity of adjustment is limited. To achieve photorealistic post-processing, traditional media FX compositing is required. The deepfake software framework is used only to export an unmasked deepfake composite, and all adjustments to the composite are made with a variety of video post-production applications. DaVinci Resolve can be used to color correct and chroma key the composite to the target video. Mocha can then be used to planar motion track the target video as well as the composite video, creating a custom keyframe mask. The Mocha mask can then be imported into Adobe After Effects for the final compositing and masking of the deepfake with the target. Finally, shadows and highlights are filtered from the target video and overlaid on the deepfake. Should the masking accidentally remove pixels of the target's background, Photoshop can be used to recreate the lost pixels. The finished result is a motion-tracked, color-corrected, photorealistic deepfake with limited traditional blending artifacts.
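The training and conversion steps above can be sketched in a few lines of Python. This is a deliberately tiny toy, not the architecture used by DeepFaceLab or FaceSwap: the "faces" are made-up four-value vectors, the encoder and decoders are single linear layers instead of convolutional networks, and the class name, learning rate, and epoch count are all assumptions chosen for illustration. What it does keep is the structure described above: one shared encoder, a separate decoder per identity, a reconstruction loss with backpropagation during training, and a one-way pass through the encoder and the other identity's decoder during conversion.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class SharedEncoderSwapper:
    """Toy linear model: one shared encoder, one decoder per identity."""

    def __init__(self, face_dim, latent_dim, seed=0):
        rng = random.Random(seed)
        self.encoder = random_matrix(latent_dim, face_dim, rng)
        self.decoders = {
            "A": random_matrix(face_dim, latent_dim, rng),
            "B": random_matrix(face_dim, latent_dim, rng),
        }

    def generate(self, face, identity):
        """Encode a face into the latent space, then decode with one decoder."""
        latent = matvec(self.encoder, face)
        return matvec(self.decoders[identity], latent)

    def train_step(self, face, identity, learning_rate=0.02):
        """One reconstruction step; returns the mean squared error before the update."""
        decoder = self.decoders[identity]
        latent = matvec(self.encoder, face)
        output = matvec(decoder, latent)
        error = [o - t for o, t in zip(output, face)]
        loss = sum(e * e for e in error) / len(error)
        # Backpropagate the error through the decoder to the latent vector.
        # (The constant factor 2/n of the MSE gradient is folded into the rate.)
        latent_grad = [
            sum(decoder[i][k] * error[i] for i in range(len(error)))
            for k in range(len(latent))
        ]
        # Update the decoder for this identity and the shared encoder.
        for i, e in enumerate(error):
            for k, z in enumerate(latent):
                decoder[i][k] -= learning_rate * e * z
        for k, g in enumerate(latent_grad):
            for j, x in enumerate(face):
                self.encoder[k][j] -= learning_rate * g * x
        return loss


# Made-up "aligned faces": each is just four numbers in place of pixels.
faces_a = [[0.9, 0.1, 0.8, 0.2], [0.8, 0.2, 0.7, 0.3], [1.0, 0.0, 0.9, 0.1]]
faces_b = [[0.2, 0.9, 0.1, 0.8], [0.3, 0.8, 0.2, 0.7], [0.1, 1.0, 0.0, 0.9]]

model = SharedEncoderSwapper(face_dim=4, latent_dim=2)
epoch_losses = []
for epoch in range(300):
    total = 0.0
    for face_a, face_b in zip(faces_a, faces_b):
        total += model.train_step(face_a, "A")  # A faces train decoder A
        total += model.train_step(face_b, "B")  # B faces train decoder B
    epoch_losses.append(total / (2 * len(faces_a)))

# Conversion: encode a face from A, decode with B's decoder. No weights change.
swapped = model.generate(faces_a[0], "B")
print(epoch_losses[0], epoch_losses[-1])
print(swapped)
```

In this sketch the stopping rule is a fixed number of epochs. In practice, as noted above, the user stops training by inspecting the generated faces or when the loss value stops decreasing.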

Each open-source tool has a large number of settings and neural-network hyperparameters. The tools share some general commonalities and differ mainly in neural-network architecture. Across the range of available GPUs, which includes machine-learning GPU servers as well as individual gaming-type GPUs, a higher quality deepfake can be made in less time on a single gaming-type GPU than on a dedicated machine-learning GPU server.

Hardware requirements vary based on the deepfake media complexity; standard-definition media require less robust hardware than ultra-high-definition (UHD) 4K media. The most critical hardware component for deepfake creation is the GPU. The GPU must support NVIDIA CUDA and TensorFlow, which requires an NVIDIA GPU. Deepfake media complexity is affected by

- video resolution for source and destination media

- deepfake resolution

- auto-encoding dimension

- encoding dimensions

- decoding dimensions

- tuning parameters such as these, from DeepFaceLab: Random Warp, Learning Rate Drop Out, Eye Priority Mode, Background Style Power, Face Style Power, True Face Power, GAN Power, Clip Grade, Uniform Yaw, etc.

The greater each parameter, the more GPU resources are needed to perform a single deepfake iteration (one iteration is one batch of faces fed through the network with one optimization cycle performed). To compensate for complex media, deepfake software is sometimes multithreaded, distributing batches over multiple GPUs.

Once the hardware is properly configured with all needed dependencies, there are limited processing differences between operating systems. While a GUI-based operating system does use more system resources, the achievable batch size is not severely affected. Different GPUs, however, even from the same manufacturer, can perform very differently.

Time per iteration is also a factor in creating deepfakes. The larger the batch size, the longer each iteration takes. Larger batch sizes produce lower pixel-loss values per iteration, reducing the number of iterations needed to complete training. Distributing batches over multiple GPUs also increases time per iteration. It is best to run large batch sizes on a single GPU with a large amount of VRAM and a high core clock speed. Although a reasonable expectation is that a GPU server with 16 GPUs would be superior to a couple of GPUs running in a workstation, in fact someone with access to a couple of GPUs worth a few thousand dollars can potentially make a higher quality deepfake video than one produced on a GPU server.
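The trade-off between batch size and time per iteration can be made concrete with a little arithmetic. The Python sketch below is a rough illustration only: the face count and the seconds-per-iteration figures are invented placeholders, not measurements from any GPU, and the function names are hypothetical. It counts how many iterations are needed for one pass over a set of extracted faces and multiplies by the time per iteration. It does not model the separate effect, noted above, of larger batches lowering the loss reached per iteration.

```python
import math


def iterations_per_pass(num_faces, batch_size):
    """Iterations needed to feed every face through the network once."""
    return math.ceil(num_faces / batch_size)


def seconds_per_pass(num_faces, batch_size, seconds_per_iteration):
    """Wall-clock time for one pass, given a measured time per iteration."""
    return iterations_per_pass(num_faces, batch_size) * seconds_per_iteration


# Placeholder inputs (assumptions): 5,000 extracted faces, and a larger batch
# that makes each iteration slower but needs fewer iterations per pass.
small_batch_seconds = seconds_per_pass(5000, batch_size=8, seconds_per_iteration=0.5)
large_batch_seconds = seconds_per_pass(5000, batch_size=16, seconds_per_iteration=0.8)
print(small_batch_seconds, large_batch_seconds)
```

With these placeholder figures the larger batch finishes a pass sooner even though each iteration is slower. Whether that holds on real hardware depends on the measured time per iteration for each configuration.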

The current state of the art of deepfake video creation entails a long process of recording or identifying existing source footage, training neural networks, trial and error to find the best parameters, and video post-processing. Each of these steps is required to make a convincing deepfake. The following are important factors for creating the most photorealistic deepfake:

- adequate GPU hardware

- source footage with even lighting and high resolution

- adequate lighting matched between source and destination footage

- source and destination subjects with similar appearance (head shape and size, facial-hair style and quantity, gender, and skin tone)

- video that captures all head angles and mouth phoneme expressions

- using the right model for training

- performing post-production editing of the deepfake

This process involves much trial and error with many disparate sources to get information (forums, articles, publications, etc.). Therefore, creating a deepfake is as much an art as a science. Because of the non-academic nature of deepfake creation, it may persist this way for some time.

State of Detection Technology: A Game of Cat and Mouse

A rush of new research has introduced several deepfake video-detection (DVD) methods. Some of these methods claim detection accuracy in excess of 99 percent in special cases, but such accuracy reports should be interpreted cautiously. The difficulty of detecting video manipulation varies widely based on several factors, including the level of compression, image resolution, and the composition of the test set.

A recent comparative analysis of the performance of seven state-of-the-art detectors on five public datasets that are often used in the field showed a wide range of accuracies, from 30 percent to 97 percent, with no single detector being significantly better than another. The detectors typically had wide-ranging accuracies across the five test datasets. Typically, the detectors will be tuned to look for a certain type of manipulation, and often when these detectors are turned to novel data, they do not perform well. So, while it is true that there are many efforts underway in this area, it is not the case that there are certain detectors that are vastly better than others.

Regardless of the accuracy of current detectors, DVD is a game of cat and mouse. Advances in detection methods alternate with advances in deepfake-generation methods. Successful defense will require repeatedly improving on DVD methods by anticipating the next generation of deepfaked content.

Adversaries will probably soon extend deepfake methods to produce videos that are increasingly dynamic. Most existing deepfake methods produce videos that are static in the sense that they depict stationary subjects with constant lighting and unmoving background. But deepfakes of the future will incorporate dynamism in lighting, pose, and background. The dynamic attributes of these videos have the potential to degrade the performance of existing deepfake-detection models. Equally concerning, the use of dynamism could make deepfakes more credible to human eyes. For example, a video of a foreign leader talking as she rides past on a golf cart would be more engaging and lifelike than if the same leader were to speak directly to the camera in a static studio-like scene.

To confront this threat, the academic and the corporate worlds are engaged in creating detector models, based on DNNs, that can detect various types of deepfaked media. Facebook has been a major contributor, holding the Deepfake Detection Challenge (DFDC) in 2019, which awarded a total of US$1 million to the top five winners.

Participants were charged with creating a detector model trained and validated on a curated data set of 100,000 deepfake videos. The videos were created by Facebook with help from Microsoft and several academic institutions. While originally the dataset was available only to members of the competition, it has since been released publicly. Out of the more than 35,000 models that were submitted, the winning one achieved an accuracy of 65 percent on a test dataset of 10,000 videos that were reserved for testing, and 82 percent on the validation set used during the model-training process. The test set was not available to the participants during training. The discrepancy in accuracy between the validation and test sets indicates that there was some amount of over-fitting, and therefore a lack of generalizability, an issue that tends to plague DNN-classification models.
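The gap between validation and test accuracy can be computed directly. The short Python sketch below is illustrative: the helper names and the four-video toy example are assumptions, while the 82 and 65 percent figures are the ones reported above for the winning model.

```python
def accuracy_percent(predictions, labels):
    """Share of videos whose predicted label (1 = fake, 0 = real) is correct."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return 100 * correct / len(labels)


def generalization_gap(validation_percent, test_percent):
    """Percentage points lost when moving from validation data to unseen test data."""
    return validation_percent - test_percent


# Toy example (made-up labels): three of four videos classified correctly.
toy_accuracy = accuracy_percent([1, 0, 1, 1], [1, 0, 0, 1])

# Figures reported for the winning DFDC model.
dfdc_gap = generalization_gap(82, 65)
print(toy_accuracy, dfdc_gap)
```

A gap of 17 percentage points on unseen videos is the kind of drop that signals over-fitting to the data the model was tuned on.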

Knowing the many elements required to make a photorealistic deepfake (high-quality source footage of the proper length, similar appearance between source and destination, the proper model for training, and skilled post-production) suggests how to identify a deepfake. One major element would be training a detector on enough different types of deepfakes, of various qualities and covering the range of possible flaws, using a model complex enough to extract this information. A possible next step would be to supplement the dataset of deepfakes with a public source, such as the dataset from the Facebook DFDC, to build a detector model.

Looking Ahead

Network defenders need to understand the state of the art and the state of the practice of deepfake technology from the side of the perpetrators. Our SEI team has begun taking a look at the detection side of deepfake technology. We are planning to take our knowledge of deepfake generation and use it to improve on existing deepfake-detection models and software frameworks.

Additional Resources

The Software Engineering Institute will host Deepfakes Day 2022, a workshop on the origin of deepfake media content, its technical underpinnings, and how to spot and mitigate it. Registration is required for this free, virtual event on Tuesday, August 30, from 10 a.m. to 2 p.m.
