Deploying the CERT Microcosm DevSecOps Pipeline using Docker-Compose and Kubernetes

According to DevSecOps: Early, Everywhere, at Scale, a survey published by Sonatype, "Mature DevOps organizations are able to perform automated security analysis on each phase (design, develop, test) more often than non-DevOps organizations." Because DevOps encourages close collaboration, automates the delivery process, and enforces traceability, mature DevOps organizations are better positioned to run automated security analysis than non-DevOps organizations. My previous blog post, Microcosm: A Secure DevOps Pipeline as Code, helped address the problem that most organizations do not have a complete deployment pipeline in place (and are therefore not considered DevOps mature). It did so by automating penetration tests of software applications and generating HTML reports as part of the build process through the Jenkins CI service. In this follow-up post, I explore a microservice evolution of Microcosm as a simple one-stop shop for anyone interested in learning how to implement a DevSecOps pipeline.

Microcosm -> Microservice: A DevSecOps Pipeline through a Microservice Architecture

Using multiple, separate virtual machines, Microcosm creates a Secure DevOps pipeline using Infrastructure as Code, with Vagrant and Chef cookbooks for service provisioning. Given Microcosm's popularity and the extreme popularity of Docker in the DevOps community, researchers in the CERT Division's Secure Lifecycle Solutions team decided that the next evolution of Microcosm would be a microservice version of the open-source deployment pipeline built on Docker containers.

Capitalizing on the Consolidation of IaC Technologies: Vagrant and Docker-Compose

The second version of Microcosm adds to the existing Vagrantfile. In particular, a user can choose the architectural style of deployment pipeline they want by simply issuing a vagrant up command. One additional CentOS 7 VM, which still uses the provided secure CMU Vagrant base box, has been added to the Vagrantfile to act as the host on which each microservice runs.

In addition to using Docker containers, these microservices are provisioned and managed using Docker-Compose together with the vagrant-docker-compose Vagrant plugin, which is available through a public GitHub repository. This setup allows easy and concise management of all offered Docker containers through a docker-compose.yml file, which pulls the specified Docker images from Docker Hub for container creation. The vagrant-docker-compose plugin automatically installs Docker and Docker-Compose on the defined VM and then runs the docker-compose up command with the docker-compose.yml file that is passed as a configuration parameter in the Vagrantfile.

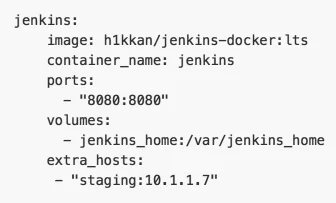

As mentioned in the provided README.md, it is important to have a solid understanding of the two levels of port forwarding that occur in the second version of Microcosm. At the service level, the initial layer of port forwarding occurs between each container and the CentOS 7 VM that is running Docker-Compose. This port forwarding can be seen in each container definition in the docker-compose.yml file, as shown below for the Jenkins container.

Note the value assigned to the "ports" configuration option: 8080:8080. The port to the right of the colon is the port on which the service listens inside the container. The port to the left of the colon is the forwarded port on which the CentOS 7 VM listens.
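A minimal sketch of such a container definition follows. The service name, image tag, and Compose file version are assumptions for illustration and may differ from the docker-compose.yml shipped with Microcosm; only the 8080:8080 mapping comes from the text above.

```yaml
# Hypothetical docker-compose.yml fragment; not copied from the Microcosm repository.
version: "3"
services:
  jenkins:                       # assumed service name
    image: jenkins/jenkins:lts   # assumed image; the project may pin a different one
    ports:
      # "<port on the CentOS 7 VM>:<port inside the container>"
      - "8080:8080"
```

Changing only the left-hand value (for example, "9090:8080") would move the port exposed on the VM while leaving the service inside the container untouched.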

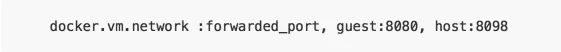

The second layer of port forwarding occurs between the CentOS 7 VM and the host machine that is running Vagrant. This port forwarding is configured in the VM definition within the Vagrantfile, as follows:

The CentOS 7 VM re-forwards its forwarded port to the specified port on the host machine. Jenkins is therefore available at localhost:8098 via a browser on the host machine.

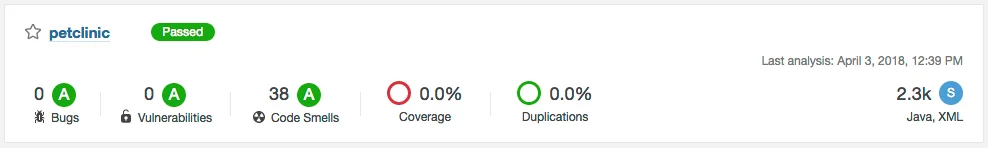

In addition to offering a containerized version of the deployment pipeline, we also added the SonarQube static code analysis and Sonatype Nexus Repository Manager services. By taking advantage of the SonarQube Scanner plugin available for Jenkins, we can run the SonarQube code analysis tool to search for bugs and vulnerabilities as part of the Jenkins continuous integration build process. Upon a successful Jenkins build, a detailed analysis report of the application's code is available via the SonarQube web interface, as shown below.

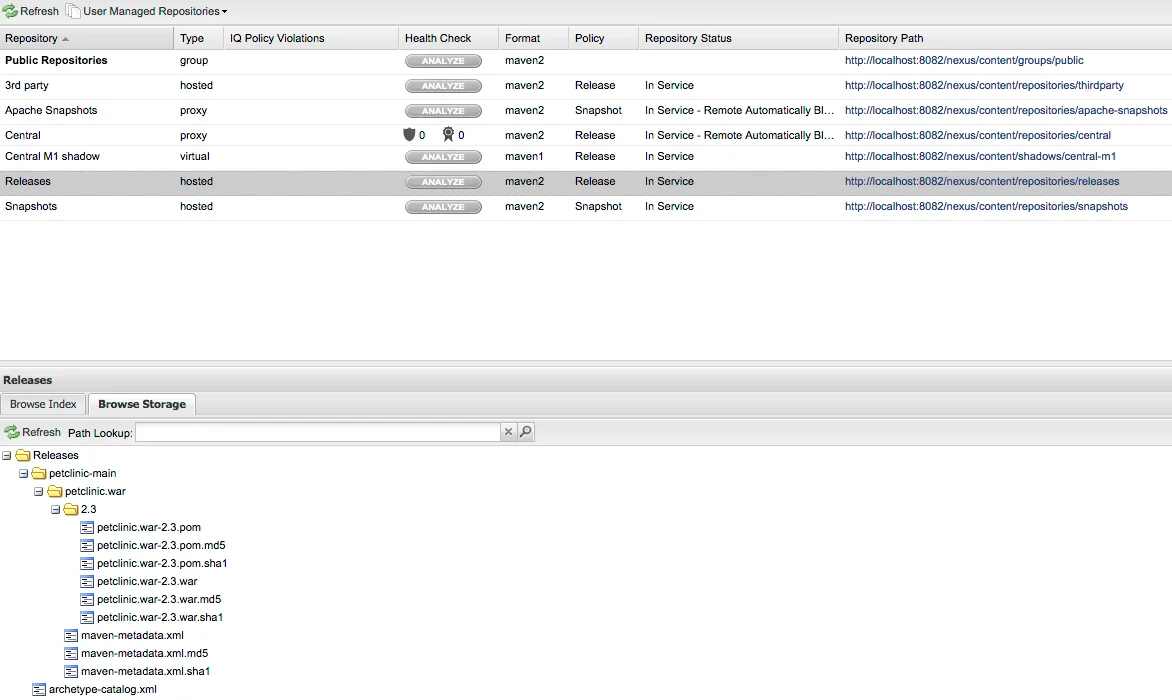
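As a rough illustration, the two added services could be declared in docker-compose.yml alongside Jenkins along the following lines. The service names, image names, and port mappings here are assumptions based on the public Docker Hub images and their default ports, not values taken from the Microcosm repository.

```yaml
# Hypothetical docker-compose.yml fragment for the two added services.
version: "3"
services:
  sonarqube:                 # assumed service name
    image: sonarqube         # assumed image; web interface defaults to port 9000
    ports:
      - "9000:9000"
  nexus:                     # assumed service name
    image: sonatype/nexus    # assumed image for Nexus Repository Manager 2.x
    ports:
      - "8081:8081"
```

As with Jenkins, each VM-side port would also need a matching forwarded port in the Vagrantfile to be reachable from the host machine.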

We've also taken advantage of the Sonatype Nexus Platform plugin for Jenkins, which publishes artifacts selected in Jenkins to Nexus Repository Manager 2.x. After properly configuring the Nexus Platform plugin within Jenkins and completing a successful build, the chosen artifacts can be seen within the Nexus Repository Manager, as shown below.

The Container Orchestration Approach via Minikube and Kubernetes

For those unfamiliar with Kubernetes, I recommend reading Red Hat's What is Kubernetes? because basic Kubernetes concepts and terms are necessary to understand the implementation of Microcosm through a Kubernetes cluster.

Using the combination of the Minikube VM and the Kubernetes CLI kubectl, a user can stand up the entire Microcosm deployment pipeline in a matter of seconds. Using the provided deployment.yml file, the user can also scale up any of the included services and benefit from built-in reliability across all deployments. As explained by Red Hat, "Kubernetes eliminates many of the manual processes involved in deploying and scaling containerized applications. In other words, you can cluster together groups of hosts running Linux containers, and Kubernetes helps you easily and efficiently manage those clusters."

Each service in the Microcosm pipeline requires two definitions in the deployment.yml file: a deployment and a service. A Kubernetes deployment is responsible for managing stateless services that are running within your Kubernetes cluster. A deployment's job is to ensure the reliability of a set of identical pods, as well as to provide updates in a controlled manner. A Kubernetes service, on the other hand, is a resource that configures a proxy to forward requests to an associated set of pods.

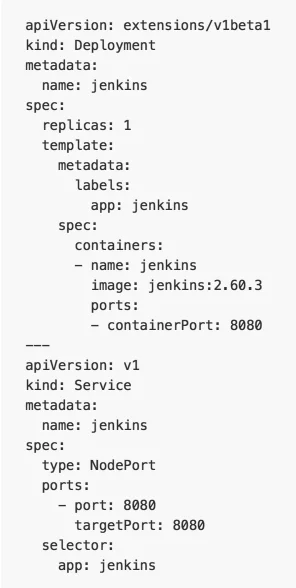

Shown below is an example of the deployment/service definition for a Jenkins CI deployment.

The replicas field in the deployment definition specifies the number of pods that will be available after the Jenkins deployment is created (in this case just one, since we do not need to scale a CI service for the Microcosm pipeline). If the replicas field were changed to 2, however, both pods created under this deployment would use the same service to have the appropriate port exposed through the Kubernetes node (in this case the Minikube VM), allowing access from an external source.
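The following is a minimal sketch of what such a deployment/service pair might look like. The resource names, labels, image, and nodePort value are assumptions chosen for illustration and are not taken from Microcosm's deployment.yml.

```yaml
# Hypothetical deployment.yml fragment for a Jenkins CI deployment.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins                # assumed name
spec:
  replicas: 1                  # number of identical Jenkins pods to keep running
  selector:
    matchLabels:
      app: jenkins             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
        - name: jenkins
          image: jenkins/jenkins:lts   # assumed image
          ports:
            - containerPort: 8080      # port Jenkins listens on inside the pod
---
apiVersion: v1
kind: Service
metadata:
  name: jenkins                # assumed name
spec:
  type: NodePort               # exposes the service on a port of the Kubernetes node
  selector:
    app: jenkins               # forwards to every pod carrying this label
  ports:
    - port: 8080
      targetPort: 8080
      nodePort: 30080          # assumed value within the default 30000-32767 range
```

Because the service selects pods by label rather than by name, raising replicas to 2 would not require any change to the service definition in this sketch.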

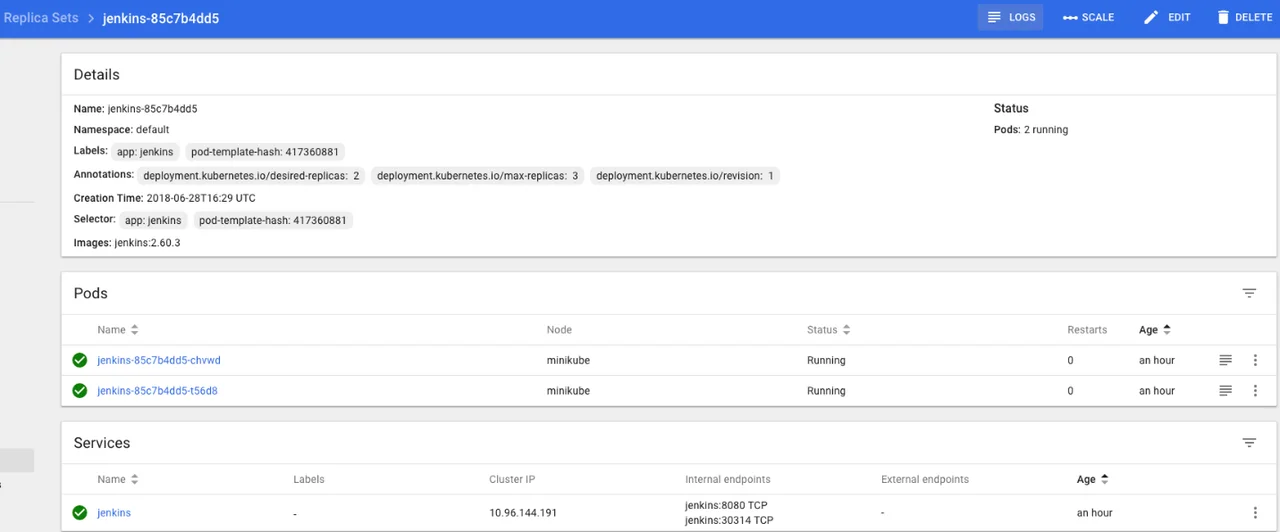

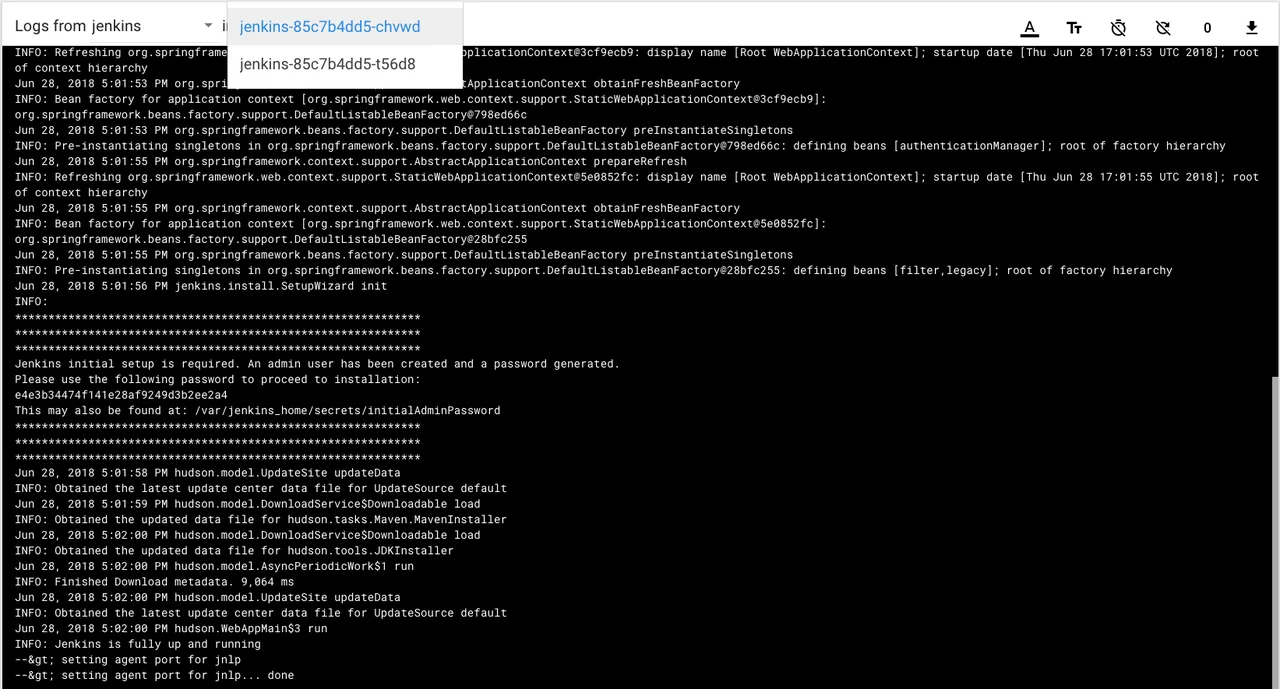

One of the added benefits of developing a Kubernetes cluster locally using Minikube is the dashboard. The Minikube dashboard not only presents all the same information about the Kubernetes cluster but also lets the user carry out maintenance and update functions, such as scaling up or down and rolling out new code to the cluster. Below is an example of the details for a Jenkins deployment that was scaled up from one to two pods, as well as the built-in logging feature for each pod.

Wrapping Up and Looking Ahead

By offering the Microcosm deployment pipeline as a stack of lightweight Docker containers alongside the original version comprising separate VMs, we have given the open-source community the opportunity to choose whichever configuration suits their hardware. If necessary, the Vagrantfile can be modified at the user's discretion to increase the system resources allocated to any of the VMs. Moreover, adding the SonarQube and Sonatype Nexus services to the Jenkins CI build process helps promote DevSecOps practices by providing multiple, automated ways of performing security assessments and applying best practices throughout the various stages of the deployment pipeline.

Looking ahead, we plan to enhance the Kubernetes version of Microcosm even further by providing instructions on how to deploy the Microcosm cluster to cloud providers such as AWS and Microsoft Azure.

Additional Resources

Read my previous post on this topic, Microcosm: A Secure DevOps Pipeline as Code.

Read other posts in the SEI's DevOps blog.

Read the articles cited in this post:

- Red Hat. What is Kubernetes? Red Hat (https://www.redhat.com/en/topics/containers/what-is-kubernetes).

- Sonatype. (2018). DevSecOps: Early, Everywhere, at Scale. Fulton, MD: Sonatype (https://fr.sonatype.com/hubfs/eBooks/Ebook-%20DevSecOps_V.2.pdf).
