Agile Metrics: Assessing Progress to Plans

Metrics offer insight into the realization of a functioning system, as well as the performance of the processes that are in place. These insights enable oversight on the performance of a program as it delivers contracted capabilities. This blog post explores the role of metrics in a government program’s assessment of progress in an iterative, incremental delivery of a complex cyber-physical system.

The post is based on our experiences at the SEI supporting programs using incremental delivery approaches tailored to the missions of the sponsoring organizations. Metrics that are key to incremental delivery emphasize assessing progress to plan and assessing quality, both of which include flow-based metrics influenced by Lean and Agile thinking. Although this post focuses specifically on assessing progress to plan, metrics that support assessing quality also provide valuable insight into a program’s successful realization of a system. The technical adequacy of the work is a primary concern that spans both progress to plan and quality.

Correspondence Between Assessment Scope and Time Horizon for Decisions

Assessing progress to plan for iterative, incremental delivery typically involves measurements against near-, mid-, and long-term plans rather than against a single integrated master plan. Iterative-development methods establish clear criteria for the acceptability of work at different levels of detail. These criteria serve as the basis for assessing progress against plans at appropriate times.

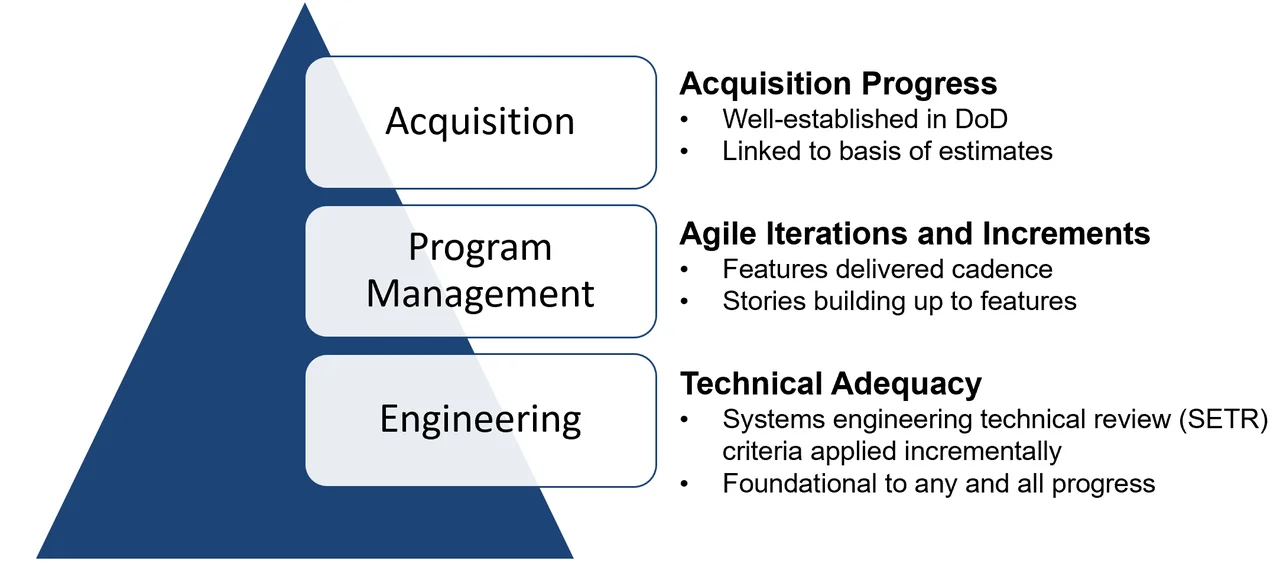

The link between the near-term accomplishments and the long-term plan for the program is an essential focus of program management. This succession of plans from near- to mid- to long-term takes the place of a single integrated master plan. Each of these levels of oversight has an aperture and focus unique to its associated plan and the time horizon. The measurements that offer insight for each of these plans are described in the sections below, starting from the bottom of Figure 1 and moving upward.

- Engineering—These metrics are based on meeting technical and engineering targets and performance goals. The technical adequacy of the detailed work is measured to ensure that timely quality work leads to maturation of capabilities. These metrics are targeted to an audience of team leads, subject-matter experts, and engineering managers.

- Program Management—For programs aspiring to a continuous-delivery approach, these metrics focus on tracking product elements delivered by a pipeline. Measuring the progress of stories, features, and capabilities provides insight as to how well the program is progressing. These metrics are targeted to an audience of middle and upper program-management personnel who oversee the delivery of finished goods over time, as they are integrated into the system.

- Acquisition—These metrics provide insight about the goals of the acquisition activity. Such measures are well established in the U.S. Department of Defense (DoD) and are directly linked to long-term program estimates and contracts. These metrics are typically targeted to an audience of executive leadership and upper management (e.g., materiel leaders, senior materiel leaders, program executive officers), with program managers often reporting the key data. They also focus on time horizons that are longer than the timeline of program management.

Engineers and subject-matter experts, program managers, and acquisition professionals must assess work progress from these three complementary perspectives. The engineering perspective is one of technical progress. It is a major focus in programs that advance state-of-the-art technologies. The program-management perspective assesses progress against the planned deliverables and timelines established for the program. Finally, the acquisition perspective comes from the goal of the enterprise for which the program was established. Different metrics and skills are required to assess progress in these three complementary areas.

1. Engineering Progress Metrics

Assessing the maturation of complex designs that demonstrate the achievement of unprecedented technical outcomes requires an engineering perspective. Engineers derive leading indicators based on the technologies used and the nature of the problem. Consequently, generic indicators, such as productivity and quality, determine progress only partially since they typically don’t account for the specific technologies in use. Engineering reviews of artifacts derived from requirements analysis, design activities, and examples of implementation are necessary inputs. Government personnel charged with engineering oversight measure engineering progress against near-term plans. The outcomes of these assessments ultimately drive program milestones.

Examples of reviews where engineering progress is typically assessed include the following:

- Technical reviews—The major deliverables listed in the roadmap for each capability will undergo technical reviews through a systems engineering technical review (SETR) process—a review meeting called by the contractor in which government stakeholders evaluate the adequacy of the deliverables, often in an iterative fashion, to support rapid progression of the work in smaller work packages. Government oversight personnel consider criteria for attaining the level of maturity implied by traditional milestones (e.g., preliminary design review [PDR] and critical design review [CDR]) as the technical work underway produces artifacts recording the accomplishments. These accomplishments then serve as input to the next engineering activity.

- Demonstration events—Demonstrations of the features and capabilities as they mature make visible the technical pulse of capability maturation. Whether demonstrating a small piece of functionality in a test harness or running a full simulated mission in a robust lab, developers provide deeper insight into accomplishments through demonstrations than would be obtained from a written report of their success. Appropriate subject-matter experts evaluate objectives for specific functionality or performance in demonstrations (e.g., on specified technical performance measures). Some organizations collect scores for utility (or value) of the demonstrated functionality.

- Verification events—These events are often conducted in specialty labs or with production hardware on a test range, and the data collected at them are foundational. Assessment against baseline requirements, meeting documented test-coverage thresholds, and detailed analysis of test data inform decision makers. Closure of the process is often contingent on adjudicating the disposition of observed anomalies and providing certification-related data meant to assure the integrity of the work products.

2. Program-Management Progress Metrics

The program-management perspective, in contrast to the engineering perspective, is informed by a roadmap of milestones, review events, and deliverables that are designed to provide a steady source of information to confirm progress or trigger action. The most common sources of these progress indicators include completion status for units of work (e.g., stories, features, use cases, capabilities) and their satisfaction of relevant completion criteria, along with the associated schedule and cost data.

The definition of done provides the mechanism to define all the criteria that must be satisfied before the item being developed can be considered complete. In practice, determining completion requires a robust understanding, agreed to by both government and contractor, to ensure that work is, in fact, complete. When a developer uses all the capabilities of an application lifecycle-management tool, the tool typically contains the data needed to supply most program-management progress metrics. These tools can often generate the metrics automatically, although additional tools may be needed to provide summary-level data, depending on attributes of the program. Measuring progress to program-management plans might include the following:

- Completion of features within an increment—The time horizon many consider when planning an engineering or test build, with the associated work products to support verification, is sometimes called an increment. Often, increments are made up of iterations that last one or two weeks. Many frameworks provide a rich elaboration of these concepts. Measuring the number of features that met the definition of done during an increment is a very common approach. This approach provides a tangible indicator of verifiable progress and is typically displayed in a burndown chart. The satisfaction of acceptance criteria, as well as the definition of done for features, typically indicates a delivery of functionality greater than the “unit level” (implying a level of integration). When combined with other data, feature completion counts provide insights as follows:

  - Comparing the number of features completed to the number committed to during that timeframe provides insight into the estimation process.

  - For a given capability, comparing the number of completed features to the expected number of features provides a rough estimate of the completion of the capability. Not all features are of equal difficulty, and not all features required for the capability may be evident at the start. Oversight personnel can use this same approach for other containers of work, such as epics and use cases.

- Completion of user stories within an iteration (sprint)—While such granular data is typically of more use to the teams performing the work, the government can gain insight by looking at the consistency of story-completion data. If the counts fluctuate wildly, either the development process is likely unstable and progress will be impeded, or the stories vary widely in size or difficulty and the counts must be interpreted with caution. The significance of integration for evaluating the utility of software work products often makes progress to plan at the iteration level a more speculative endeavor. Strategies for continuous integration are meant to address this essential role of software verification through development approaches and tools that effectively increase the technical scope of early testing activities, to accelerate the next tier of verification feedback.

- Traceability matrix report—This report allows the program manager to confirm for stakeholders that work is being performed according to a common understanding of program priorities. Accounting for timelines and effort required for things such as upgrades to the development or test environments, maintenance of evolving test assets, and other enablers of technical progress can be challenging. This report also provides assurance to support aggregate measures for other audiences, so that oversight personnel can make inferences regarding the completion status of the contracted capabilities.

- Defects reported/escaped—Defects identified after completion often indicate either a weakness in the definition of done or failure to adhere to that definition of done. A larger number of defects that have escaped erodes confidence in the completion data and accompanying metrics and, ultimately, slows progress when development effort must be expended to correct the defects.
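The comparisons described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function names, the use of the coefficient of variation as a consistency measure, and the sample numbers are all assumptions made for the example.

```python
from statistics import mean, pstdev


def commitment_ratio(completed: int, committed: int) -> float:
    """Share of committed features that met the definition of done."""
    if committed <= 0:
        raise ValueError("committed must be positive")
    return completed / committed


def capability_completion(done_features: int, expected_features: int) -> float:
    """Rough completion estimate for one capability, based on feature counts."""
    if expected_features <= 0:
        raise ValueError("expected_features must be positive")
    return done_features / expected_features


def story_count_variation(stories_per_iteration: list[int]) -> float:
    """Coefficient of variation (population std dev / mean) of story counts.

    Higher values mean less consistent story completion across iterations.
    """
    avg = mean(stories_per_iteration)
    if avg == 0:
        raise ValueError("no stories were completed in any iteration")
    return pstdev(stories_per_iteration) / avg


def defect_escape_rate(escaped: int, found_before_done: int) -> float:
    """Fraction of all known defects that were found after completion."""
    total = escaped + found_before_done
    return escaped / total if total else 0.0


# Hypothetical data for one increment
print(commitment_ratio(completed=8, committed=10))
print(capability_completion(done_features=6, expected_features=24))
print(story_count_variation([9, 11, 10, 4, 16]))
print(defect_escape_rate(escaped=3, found_before_done=9))
```

Because features and stories are not of equal size, these ratios are better read as trends over several increments than as precise measurements.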

3. Acquisition Progress Metrics

Metrics used for insight about the goals of the acquisition plan sometimes include the program managers’ application of earned-value management to iterative and incremental delivery. An earned-value management system (EVMS) is a program-management tool for tracking costs, schedule, and scope against an initial plan. The structure of the data generally aligns with the work breakdown structure (WBS) and master schedule. Historically, the earned-value structure was focused on computer software configuration items (CSCIs). This method, however, does not readily support an Agile-development effort. For Agile developments, using the product backlog to populate the EVMS provides tracking with a technical perspective, as recommended by Agile and Earned Value Management: A Program Manager’s Desk Guide.

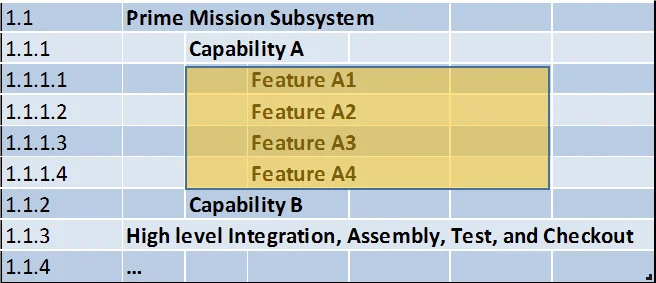
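As a rough sketch of how a product backlog could feed earned-value calculations, the Python below computes the standard earned-value quantities from a list of features. The `Feature` fields, the budget figures, and the choice to credit a feature's full budget only when it meets the definition of done (a 0/100 crediting rule) are assumptions made for illustration; they are not taken from the desk guide.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    budget: float   # baselined cost of the feature (hypothetical units)
    planned: bool   # scheduled to be complete by the status date
    done: bool      # met the definition of done by the status date


def earned_value_summary(features: list[Feature], actual_cost: float) -> dict:
    """Compute basic earned-value quantities from backlog features.

    Assumes a 0/100 rule: a feature earns its budget only when done.
    """
    pv = sum(f.budget for f in features if f.planned)  # planned value
    ev = sum(f.budget for f in features if f.done)     # earned value
    return {
        "PV": pv,
        "EV": ev,
        "AC": actual_cost,
        "SV": ev - pv,                                  # schedule variance
        "CV": ev - actual_cost,                         # cost variance
        "SPI": ev / pv if pv else None,                 # schedule performance index
        "CPI": ev / actual_cost if actual_cost else None,  # cost performance index
    }


# Hypothetical backlog snapshot at the end of an increment
backlog = [
    Feature("route planning", budget=40.0, planned=True, done=True),
    Feature("sensor fusion", budget=60.0, planned=True, done=False),
    Feature("operator display", budget=20.0, planned=False, done=True),
]
summary = earned_value_summary(backlog, actual_cost=75.0)
print(summary)
```

An SPI or CPI below 1.0 indicates that less value has been earned than was planned or paid for, respectively.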

To facilitate delivery of working software, sizing constraints for each tier of the requirements breakdown (commonly described with the terms capabilities, features, and user stories) promote a consistent framework for understanding progress. After these constraints are assigned, structuring the WBS to reveal progress for contracted capabilities becomes much easier. Sizing constraints are important for two purposes: first to normalize the work packages, ensuring that they fall within a known size range so that they are comparable (and counting them leads to useful summaries); and second so the WBS depicts a consistent level of detail across the workload with traceability across the product elements.
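One way to picture the normalizing role of sizing constraints is a simple check that flags work packages falling outside the agreed size range for their tier. The tiers mirror the terms used above, but the limits, the unit (story points), and the sample items are hypothetical; a real program would negotiate its own.

```python
# Hypothetical size limits per tier, in story points (assumed for illustration)
SIZE_LIMITS = {
    "story": (1, 8),
    "feature": (8, 40),
    "capability": (40, 200),
}


def out_of_range(items: list[tuple[str, str, int]]) -> list[str]:
    """Return names of work items whose size violates their tier's limits.

    Each item is a (name, tier, size) tuple.
    """
    flagged = []
    for name, tier, size in items:
        low, high = SIZE_LIMITS[tier]
        if not (low <= size <= high):
            flagged.append(name)
    return flagged


work_items = [
    ("login story", "story", 5),
    ("bulk import story", "story", 13),     # too large for a story
    ("reporting feature", "feature", 30),
    ("mission replay capability", "capability", 25),  # too small for a capability
]
print(out_of_range(work_items))
```

Items that are flagged would be candidates for splitting or re-tiering before they are counted in summary metrics.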

One approach that shows promise is to use the just-in-time planning of Agile development in conjunction with the EVMS rolling-wave strategy. This approach allows acquisition personnel to incorporate the most-current program performance and product knowledge. Features committed to during increment-planning activity are updated in the EVMS to reflect the latest cost and size information as it is baselined. Greater precision in the near-term plans provides finer-grained assessment of acquisition progress, while the uncertainties of longer-term plans are the focus of iterative assessments based on the most recent progress. The connection to the product elements, rather than to the CSCI structure of the software products, promotes focus on operationally relevant descriptions of progress achieved.

A cadence of quarterly increments composed of two-week iterations is common. When features are small enough to be completed within an increment, a meaningful level of detail for tracking can be achieved while assuring a known level of verification and maturity for delivered products. Progress against scheduled releases, into test or to production, can be understood through analysis of feature-delivery progress and of leading indicators for future delivery performance.
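A simple leading indicator of this kind is a forecast of how many more increments a release needs, based on recent feature throughput. The sketch below assumes that average past throughput is a reasonable predictor and that features are roughly comparable in size; the function name and the numbers are hypothetical.

```python
import math
from statistics import mean


def increments_to_finish(remaining_features: int,
                         completed_per_increment: list[int]) -> int:
    """Estimate the number of increments needed to finish the remaining features.

    Uses the mean number of features completed in past increments as the
    expected delivery rate and rounds up to whole increments.
    """
    rate = mean(completed_per_increment)
    if rate <= 0:
        raise ValueError("historical throughput must be positive")
    return math.ceil(remaining_features / rate)


# Hypothetical history: features completed in the last three increments
history = [8, 10, 12]
print(increments_to_finish(remaining_features=25, completed_per_increment=history))
```

Comparing this estimate with the number of increments left before a scheduled release gives an early signal of whether the release is at risk.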

The use of well-established program oversight mechanisms is a topic deserving more coverage than the discussion of EVMS found here. Total cost of ownership and the operational performance of the system in use often overshadow the development costs. Forecasting and tracking against outcomes, expressed in terms of the needs of the system user, provide the most meaningful measure of success. Look for a future blog post on this topic.

Developing an Effective Measurement Program

Assessing progress to plan for iterative/incremental delivery typically involves measurements that assess progress against near-, mid-, and long-term plans. An effective measurement program identifies the aperture and focus unique to each associated plan and time horizon. The collection of these metrics relies on maintaining the confidence of stakeholders through timely communication of information that demonstrates accomplishment of goals. The stakeholders’ knowledge of program context and a shared understanding of the actions and outcomes driving the metrics enable the insight required to assess progress to plans.

In a future blog post, we will delve further into metrics for assessing quality.

Additional Resources

Read the SEI blog post, Agile Metrics: A New Approach to Oversight.

Read the SEI blog post, Don't Incentivize the Wrong Behaviors in Agile Development.

Read the SEI blog post, Five Perspectives on Scaling Agile.

Watch the SEI webinar, Three Secrets to Successful Agile Metrics.

Read the SEI technical note, Scaling Agile Methods for Department of Defense Programs.

Listen to the SEI podcast, Scaling Agile Methods.

Read other SEI blog posts about Agile.

Read other SEI blog posts about measurement and analysis.
